No Improvement with Crossfire

I've installed two MSI RX580 8G V1 graphics cards in my system, which is built around an AMD FX-8350 CPU and has 16GB of memory.

Everything I can see shows that Crossfire is enabled. However, when I run PerformanceTest from PassMark, there is no difference in the results between one RX580 and the two Crossfire-connected RX580s on any of the graphics tests. Shouldn't I see some improvement? Am I missing something? Does PassMark correctly measure cards in Crossfire mode? PerformanceTest says Crossfire is enabled.


Crossfire is a picky thing at best when it works, and most of the time, IMHO, it is not worth pursuing. Most reviewers will tell you, and I believe it is true, to just buy the best single-card solution you can afford. The heyday of Crossfire and SLI is over. Neither Nvidia nor AMD is really pursuing this tech at this point. AMD support has been lackluster for about a year and a half now. Firstly, the percentage of people who use it is close to none in the overall scheme of things. Next, they are both looking to DX12 and beyond as the future, and that is exactly where it is all going. DX12 doesn't use Crossfire or SLI. DX12 implements MULTI-GPU, a similar tech but standardized and controlled in DirectX 12 itself. This change means the driver, and therefore AMD and NVIDIA, don't control or optimize this feature in any way. DX12 MULTI-GPU is controlled completely in the game's engine itself, and even then only if the developer CHOOSES to support it. The speculation is that most won't: most end users won't use multiple cards, so developers won't waste resources on it.

-----------

So really, the performance gains you get with Crossfire depend heavily on how well the driver and its Crossfire profile are working, and how well the game is optimized for Crossfire. That varies hugely, and only very few games see big improvements that approach anywhere near double performance. It is very common to see results in a modest 5 to 20% range. This is why so many just give up on it: it isn't worth the additional headaches, such as more power draw and stability issues.

In your situation, it seems your processor may be a bit weak to drive two of those cards, IMHO.

Also, I don't know what motherboard you have, but if you added that CPU to an older board, it is widely known that many boards throttle with those CPUs. Pretty much only the most recent AM3+ boards that meet the thermal requirements work properly with them.

I understand that many just want to save money. You would likely have been better off taking the money you spent on a second card and getting a Ryzen, motherboard and RAM.

We could maybe offer better, more targeted help/advice if you could provide the information requested in the forum headers:

When posting a new question, please provide as much detail as possible describing your issue making sure to include the relevant hardware and software configuration.

For example:

- Issue Description: [Describe your issue in detail here]

- Hardware: [Describe the make and model of your: Graphics Card, CPU, Motherboard, RAM, PSU, Display(s), etc.]

- Software: [Describe version or release date of your: Operating System, Game/Application, Drivers, etc.]


I am experiencing a similar issue.

Hardware:

GPU: 2 x Sapphire Radeon RX 580 NITRO+ 8GB

CPU: AMD Ryzen 5 2600X

MB: GIGABYTE AORUS X470 ULTRA GAMING

RAM: Corsair Vengeance 8 GB

PSU: FSP HEXA 85+, 80+ Bronze, 650W

Display: LG 27UD600 4k

Software:

OS: Win 10 home edition

Drivers: win10-64bit-radeon-software-adrenalin-edition-18.12.1.1 and win10-64bit-radeon-software-adrenalin-edition-18.9.3

Games: GTA V, Assassin's Creed Odyssey, Formula 1 2015

Tests SW: GPU-Z, FPS-Monitor

Issue:

I have installed a second RX 580 alongside my existing RX 580. Radeon Settings suggests that Crossfire is enabled, and GPU-Z also suggests that it is enabled.

While playing/benchmarking any of the above games, the second GPU is not used at all: the fans stay at 0 and the frequency sits around 300 MHz with occasional jumps to 600/900 MHz, and usage stays at 0% with occasional jumps in load for a very short period.

I have attempted to uninstall/reinstall the drivers, tested both GPUs individually to make sure that they are working properly (they are), checked both PCI Express slots, removed one card, installed the drivers, added the second card, uninstalled the driver, added both cards, installed the driver... disabled/enabled ULPS, and played around with Crossfire settings in individual games (AFR / 1 x 1).

Nothing helps: with every driver and every setting, the experience is the same.

What I noticed is that when Crossfire is enabled I get a lot more stuttering than when it is not enabled.

It is hard for me to believe that it is impossible to start Crossfire with my actual configuration.

Can I find some logs on what is happening somewhere?

Thanks in advance for the help.


Does the game you are playing have a Crossfire profile? Does the game's maker claim Crossfire support?


To my knowledge, Assassin's Creed Odyssey and Formula 1 2015 have official Crossfire support, and I did try Assassin's Creed Odyssey with its profile, with no luck.

Regarding GTA 5, forums reported excellent performance, although I believe that Crossfire is not officially supported by GTA 5.

I believe that my problem is a general Windows/driver issue rather than a game-specific one, as I see the same behavior in all games.


I don't know if they do or they don't support it. I don't Crossfire; I gave up that pipe dream a few generations back. I got tired of trying to get one game to sorta work and then having tons of others not work because of it. I know and fully believe some games still do work with it. I never said otherwise. I only said it would have to be a game that supports it for you to truly benefit. In 2018 those that benefit are pretty few and far between, and IMHO probably not worth the trade-off of potential issues. I do wish anyone still using Crossfire all the luck in the world. We all certainly want to get what we paid for, and after all, the specs on some AMD products still claim Crossfire support, so it should work. If the game says it supports it, your card says it supports it, and it isn't working, then report it to AMD. The links to do that are in Radeon Settings under the Preferences tab.


After checking some reviews I believed that it would be a good idea; now I have a different opinion.

I have opened one ticket with AMD support and one ticket with Sapphire.

AMD advised me to claim warranty from Sapphire, and Sapphire advised me to report a bug in the AMD software.

I have sent their messages to each other. I might go for the warranty.


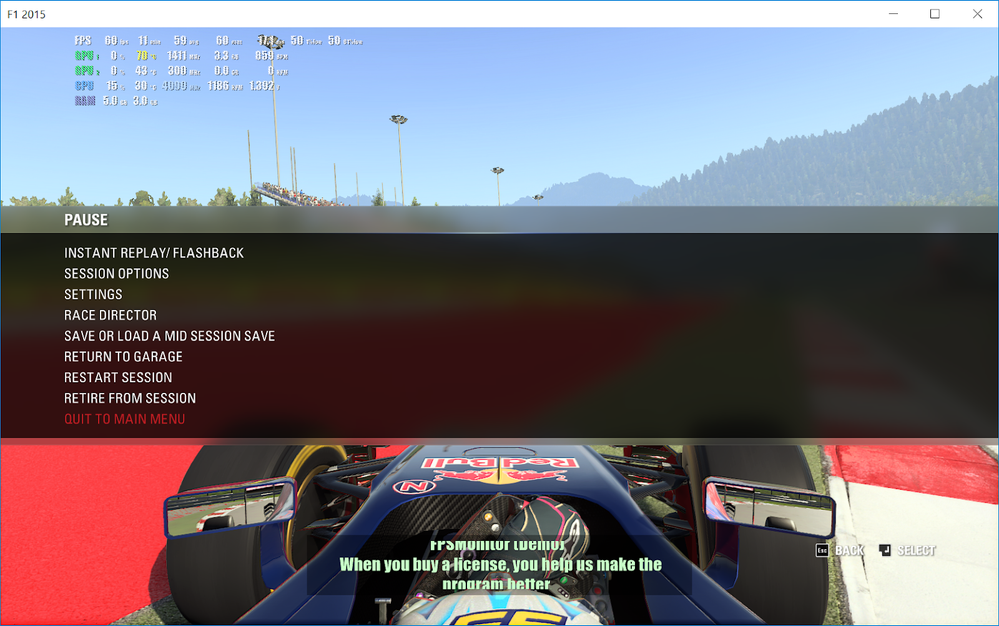

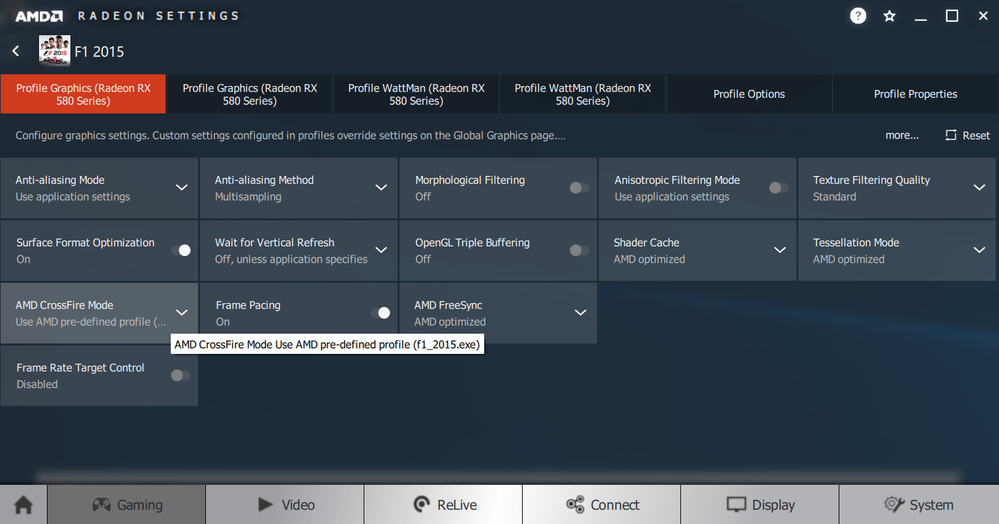

Attached are two screenshots: Radeon Settings, and F1 2015 gameplay using its Crossfire profile from Radeon Settings.

Note that in the gameplay image the first GPU is also at 0%, but it sits in a really healthy 60-99% range when playing.


From Ubi spec for odyssey, so one down,

You'll have to look up for other games.


Yes, but I get the same behavior in all games, and F1 2015 actually has a Crossfire profile and nothing happens with the second GPU (see the images above).

So if I could manage to start Crossfire with some application, then at least it would be confirmed that it is functional and that I need to check the individual games.

My only guess is that I am at the limit with the PSU and it refuses to start because of this...

Any other opinion is very welcome.


That may be, and hopefully someone here can offer advice that helps. I know many others know things I don't; we all pick up tricks as we go along. As I already mentioned, let AMD know. The issue reports and e-tickets are the only way we users have to talk directly to AMD, and the e-tickets are the only channel where they respond back. The link to that is also at the bottom of the AMD "Contact Us" page. These forums are USER TO USER help only. None of us can contact anyone in driver development from here. Only the couple of official AMD mods have an avenue for that, and only if they choose to get involved. In my experience that is not what I would call often.


Thank you for the feedback.

Regarding the PSU, one card has a max usage limit of 175 W from what I see, with an optional increase of 20% (I have not done any overclocking, etc.).

So if the two cards are 200 W each and the CPU is ~100 W, that adds up to 500 W.

The remaining 150 W should be enough for the two SSDs, the 140 mm CPU fan and the three 120 mm system fans.

In theory.
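The power budget above can be written out as a small calculation. This is only a sketch of the arithmetic in the post: the wattages are the poster's own estimates rather than measurements, the function names are made up for illustration, and PSU efficiency, transient spikes and motherboard draw are ignored.

```python
# Rough PSU headroom estimate using the figures quoted in the post.
# All wattages are the poster's estimates, not measured values.

PSU_RATED_W = 650          # FSP HEXA 85+ rating from the hardware list
GPU_LIMIT_W = 175          # per-card power limit reported by the poster
POWER_LIMIT_BONUS = 0.20   # optional +20% power limit
CPU_W = 100                # poster's rough CPU estimate
GPU_COUNT = 2


def gpu_worst_case(limit_w: float, bonus: float) -> float:
    """Per-card draw if the optional power-limit increase were fully used."""
    return limit_w * (1.0 + bonus)


def headroom(psu_w: float, gpu_w: float, gpu_count: int, cpu_w: float) -> float:
    """Watts left over for drives, fans and everything else."""
    return psu_w - (gpu_w * gpu_count + cpu_w)


if __name__ == "__main__":
    rounded = headroom(PSU_RATED_W, 200, GPU_COUNT, CPU_W)
    worst = headroom(
        PSU_RATED_W,
        gpu_worst_case(GPU_LIMIT_W, POWER_LIMIT_BONUS),
        GPU_COUNT,
        CPU_W,
    )
    print(f"headroom with 200 W per card: {rounded:.0f} W")
    print(f"headroom with 175 W + 20% per card: {worst:.0f} W")
```

With the rounded 200 W figure this leaves 150 W, as in the post. If both cards actually used the full +20% limit (210 W each), the headroom would shrink to about 130 W.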

Can you help me with a link on where is best to apply for technical support?


One link is in Radeon Settings / Preferences. Another is here: Online Service Request | AMD


Thanks, I have opened a ticket with AMD and another with Sapphire.


Perhaps you tried this already, but if you make the second card the primary display adapter, does it work on its own as well, or is it just the first card?


Yes, I have disconnected the primary card and used the secondary to run a game and it works perfectly.

I have also tested the secondary PCI slot on the Motherboard by having the secondary card in it, this works as well.


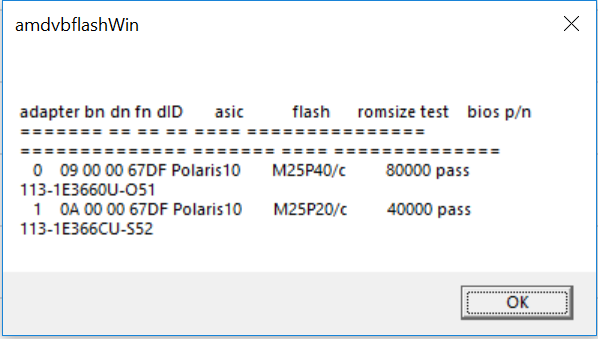

I might have missed a detail here.

I picked up the second GPU from a local eBay-like website, for a good price and still under warranty.

Most probably the GPU was used for mining, which is why I started checking the GPU's BIOS to see if it had been changed/updated.

As you can see in the screenshot, there is a difference between the BIOS versions, and I am unsure why. Card 0 is the GPU bought new and card 1 is the GPU bought used.

I saved the BIOS from GPU0 and attempted to flash it to GPU1 to have the same BIOS on both cards, but for some unknown reason the BIOS on GPU0 is 512 KB and the one on GPU1 is 256 KB. Note that both GPUs are from Sapphire: same model, same year...

If I download the official BIOS it is also 256 KB, so I am unsure what to do. I would not flash GPU0 with a 256 KB BIOS, as that is my new card and that is how it came out of the factory.


I would ask the company that made that card for advice on the BIOS difference.


Thanks pokester for the reply and explanation. Guess I'm late for the dance.

I've been reading about Crossfire and SLI for years and thought it was supposed to be a great technology. I regret learning that it's of little use.

I have an ASRock 990FX Extreme9 motherboard, an AMD FX-8350 CPU and 16GB of RAM. The manual says to use PCIE1 and PCIE4 for dual cards and shows they are x16. The manual goes on to state that a third Crossfire board can be put in the PCIE5 slot, but then the PCIE4 and PCIE5 slots will run at x8.

However, the Radeon app shows (with my two-card config and Crossfire enabled) that PCIE4 is running in x8 mode. I emailed ASRock and they explained that in dual-card mode both slots will run at x16, but when Crossfire is enabled both slots run in x8 mode. So that is another reason I'm not getting all the performance I was hoping for.

I have a PCIE card in slot 5 running a NVME board for a Samsung 960 EVO m.2 hard drive that I boot from. I wonder if that is putting slot 4 into x8 mode but I'd have to remove my boot drive to find out and boot from another drive, yada yada and that is more effort than I want to put in for what will probably be no benefit anyway.

Thanks again for the response. Too often when I post to a forum I get no reply or answer so I appreciate you taking time.


Hello,

Do you see Crossfire scaling in benchmarks like Firestrike for example? That benchmark definitely supports crossfire, so if you see identical scores with and without crossfire enabled there is something else going on. Check your manual on that m.2 drive. My board has limited PCIe lanes, and if my secondary M.2 slot is populated with a drive, one of the PCIe ports drops to a lower speed.

And it isn't like you shouldn't see any scaling in crossfire, but it is really dependent on the game/engine the game is developed with. DX12 games are more hit and miss, because if the developer didn't implement MultiGPU, the second card won't be used at all.


My pleasure. I think the hope is that with DX12 directly controlling MULTI-GPU, maybe this becomes viable. My concern is that developers will still see multi-card users as such a minority that they won't consider it worth their time to support in their upcoming engines. Even though DX12 supports it, that doesn't mean the game will. It is entirely on the developers at this point. Frankly, most of them are pretty cheap and games are buggy these days; unless they prove popular for long enough, they don't actually get fixed. The bugs in this latest BFV, for instance, are a disgrace. It is still a super fun game that doesn't deserve all its bad press, but when you open the door for negativity, that's what happens.


It isn't really entirely up to the developers. There are actual hardware limitations at play, and the way rendering engines are designed limits the effectiveness of MultiGPU setups.

There are many rendering techniques employed now that help games look better (multipass AA, temporal techniques) but also buffer common data between frames so certain elements don't have to be computed again. This helps games run at a higher frame rate on lower-end hardware, but simultaneously creates frame dependencies, where information in the previous frame is needed by the subsequent frame. For a single GPU, that isn't much of an issue: that information can be buffered in cache or VRAM and pulled up again in the next frame, freeing up the GPU to do other work.

In the DX11 and earlier world of Crossfire and SLI, those dependencies become problematic. DX11 and earlier are pretty much limited to alternate frame rendering (AFR), where one GPU renders one frame and the other GPU renders the next. Because the API and the applications never see more than one rendering unit, dividing the work has to be done by the driver. In an application with no frame dependencies, the expected performance improvement would be close to 100%.
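As a rough illustration of AFR, the sketch below hands out frames round-robin and computes the best-case frame rate when frames are fully independent. It is a toy model, not how any actual driver schedules work, and the function names are invented for this example.

```python
from typing import List


def afr_assign(frame_count: int, gpu_count: int) -> List[int]:
    """Return the GPU index that renders each frame under round-robin AFR."""
    return [frame % gpu_count for frame in range(frame_count)]


def ideal_afr_fps(single_gpu_fps: float, gpu_count: int) -> float:
    """Upper bound on frame rate when no frame depends on another."""
    return single_gpu_fps * gpu_count


if __name__ == "__main__":
    # Frames 0..5 alternate between GPU 0 and GPU 1.
    print(afr_assign(6, 2))
    # Best case for two identical cards: double the single-card rate.
    print(ideal_afr_fps(30.0, 2))
```

With two GPUs the even frames go to GPU 0 and the odd frames to GPU 1, which is why the ideal gain is a doubling and not more.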

However, as rendering engines get more complex to produce better visuals on lower-end hardware (like consoles), frame dependencies exist in order to make more efficient use of the hardware. In an SLI/Crossfire setup, you can then recompute all the information for each frame, which is what is often done. You then lose the efficiencies gained by the single-GPU setup and wind up with less than 100% scaling. You can also copy the data shared by the frames between GPUs, but the application needs to signal the driver that certain data needs to be copied, as the application can only see one rendering unit. This is more difficult to implement, and a lot of engines don't bother with it.
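The "recompute everything" case can be put into a toy model. Assume a single GPU saves a fraction `reuse` of each frame's work by reusing data from the previous frame, while under AFR each GPU has to redo that work in full. Both the model and the parameter name are assumptions made for illustration; real engines do not behave this cleanly.

```python
def afr_speedup_with_recompute(gpu_count: int, reuse: float) -> float:
    """Speedup of AFR over one GPU when reused work must be recomputed.

    reuse is the fraction of per-frame work a single GPU avoids by reusing
    the previous frame's data (0.0 = no reuse, i.e. independent frames).
    A single GPU spends (1 - reuse) units per frame; each AFR GPU spends
    1 unit per frame, but gpu_count frames are in flight at once.
    """
    if not 0.0 <= reuse < 1.0:
        raise ValueError("reuse must be in [0, 1)")
    return gpu_count * (1.0 - reuse)


if __name__ == "__main__":
    for reuse in (0.0, 0.2, 0.4):
        speedup = afr_speedup_with_recompute(2, reuse)
        print(f"reuse={reuse:.1f} -> speedup {speedup:.2f}x")
```

In this model two GPUs give 2.0x with no reuse but only 1.2x when 40% of the frame would otherwise be reused, which is in the same ballpark as the modest gains described earlier in the thread.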

In DX12 and Vulkan, an application can see all the GPUs on the system, and a developer can decide exactly how to employ MultiGPU. Here, developers like to employ what is called a rendering pipeline, where one GPU starts a frame and, at a predefined point, passes it to the next GPU. Yet with these implementations you don't get perfect scaling, and a lot of developers don't bother. That is, as pokester said, because not many users have multiple GPUs, but also because a rendering pipeline winds up being hamstrung by the PCIe bus. To copy data from one GPU to the next, the data has to pass over the PCIe bus. That data rate is much too slow to allow one GPU to render half a frame and copy everything it has done to the second. So the gains you get by pipelining are ultimately limited by the slow interconnect between GPUs.
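To get a feel for the interconnect limit, here is a back-of-the-envelope sketch. It assumes PCIe 3.0 (8 GT/s per lane with 128b/130b encoding) at its theoretical peak and a hypothetical hand-off of four 4K render targets at 4 bytes per pixel. The number of targets is invented for illustration, and real transfers have additional protocol overhead.

```python
PCIE3_GT_PER_S = 8.0          # PCIe 3.0 raw rate per lane, gigatransfers/s
PCIE3_ENCODING = 128 / 130    # 128b/130b line encoding efficiency


def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Theoretical one-way PCIe 3.0 bandwidth in GB/s for a given link width."""
    return lanes * PCIE3_GT_PER_S * PCIE3_ENCODING / 8.0


def render_target_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one uncompressed render target."""
    return width * height * bytes_per_pixel


def transfer_ms(payload_bytes: int, lanes: int) -> float:
    """Time to move payload_bytes across the link, in milliseconds."""
    return payload_bytes / (pcie3_bandwidth_gb_s(lanes) * 1e9) * 1000.0


if __name__ == "__main__":
    # Hypothetical hand-off: four 3840x2160 targets at 4 bytes per pixel.
    payload = 4 * render_target_bytes(3840, 2160, 4)
    frame_budget_ms = 1000.0 / 60.0
    for lanes in (16, 8):
        print(f"x{lanes}: {transfer_ms(payload, lanes):.1f} ms "
              f"of a {frame_budget_ms:.1f} ms frame budget at 60 fps")
```

Under these assumptions the copy alone takes roughly 8.4 ms at x16 and roughly 16.8 ms at x8, so on an x8 link it would consume about the whole 60 fps frame budget before any rendering happens.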

In summary, a developer can either develop a rendering pipeline and copy data between GPUs, or completely recompute all information every frame, depending on which produces a smaller hit to frame rates. If there are enough inter frame dependencies in the engine, the gains from multiGPU setups can be so small, it isn't worth implementing. You can then get rid of those dependencies in the engine, but doing that will make the engine run less efficiently in single GPU setups in regard to both frames and graphic fidelity.

There is hope, however. NVidia now has their NVLink in consumer cards, which massively increases the bandwidth between GPUs. Products like that will allow developers to build frame-pipelining engines that account for the faster interconnect, increasing the benefit of a MultiGPU setup, and may get more developers to implement it, as there would be a tangible benefit. AMD has their version of NVLink in the works as well, xGMI, which should be able to accommodate the same sort of interconnect bandwidth.


I want to start by saying that I know you said nothing to the contrary, and what I am saying is because I find almost any new tech from Nvidia concerning. I can't deny their implementations are good, and AMD could currently take some lessons from their ability to make their base happy with drivers. However, I'd call NVLink promising if it didn't also sound PROPRIETARY. Many of the issues we have today with graphics exist because the green team doesn't want to play with others. They want to be a monopoly; they want to dictate technology and how it gets used, solely to make money. Take the new ray tracing: this isn't really a new feature. It is something that is already in the DX12 spec, something that can be done on AMD's compute cores. NVIDIA introduced this to control how compute is used on their cards, not to give us a new feature. They want you to buy a much more expensive Quadro if you want compute. All this was is another PROPRIETARY attempt to control product usage and pricing. AMD has time and again been an innovator that shares its tech with the world openly. So, that being said, this new tech sounds great, but unless it is adopted as an open standard it sounds like yet another stumbling block to sorta replicate or work around. Microsoft should do better at policing this stuff and demand that, if they support it, it is an open standard for all hardware makers.


While I understand your trepidation where NVidia is concerned, NVLink doesn't seem to be that type of proprietary product. One of the major issues right now in MultiGPU setups, from a programming standpoint, is the bandwidth available to communicate between GPUs. It really limits the scaling and the rendering techniques that can be used. NVLink only raises that bandwidth, so now I, as a developer, can develop my engine assuming a new threshold on inter-GPU bandwidth.

The application won't care how the bandwidth is obtained, but the application will only perform optimally if that amount of bandwidth is present. So for an AMD MultiGPU setup to give similar scaling, they would have to provide similar bandwidth to NVLink, which it sounds like they will do with xGMI. Of course, the AMD version is baked right into the hardware and requires nothing from the end user. NVLink you have to buy separately; it is not included in the price of your $1200 GPU.


A question here.

Do you know of some possible error log/code that I could read from somewhere?

If I had an error code, I could search for or report the issue specifically.

With only screenshots to show the lack of Crossfire, the potential causes are many.


PassMark is not much of a 3D gaming benchmark, imo. Only a small subset of its included benches actually test your GPU to any extent. Try UL's 3DMark instead--an order of magnitude better. Or Unigine's Heaven and Valley benches--all show significant gains with Crossfire. (Free versions exist for all of these.) I'm running an RX-590/480 8GB Crossfire rig right now and I get *stunning* results! Bear in mind that Crossfire won't work in every game--but it works in a lot of them!...;)

Remember that DX12 actually supports Crossfire *natively* now, and a great game example of current developer support for it is Shadow of the Tomb Raider--the DX12 mode supports Crossfire natively in the game engine itself--whereas of course the DX11 mode does *not*! (I have tested it myself!) In the benchmark included in SotTR, DX12 Crossfire nets me 59 fps average at 3840x2160, whereas the 590 by itself (running with Crossfire disabled) averages 32 fps @ 3840x2160. So contrary to popular belief, Crossfire is on the verge of working better and in more games than ever, because of DX12's native support! Prior to DX12, we depended on either nVidia or AMD to write custom profiles for games in order for multi-GPU performance to work--not any more! I decided to go Crossfire just a couple of weeks ago simply because of DX12's rapid emergence and developer support--as I had not done it since many years ago with twin 4850s. It worked OK then--but I am really impressed with the latest Adrenalin drivers and Crossfire--you don't want to run anything except the 18.12.2s and up from now on! (Windows10x64, build 17763.168, Ryzen 5 1600 @ 3.8GHz, 16GB of 3200MHz DDR4, 2x8 for dual-channel.)
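The scaling implied by those two SotTR benchmark numbers can be worked out directly. This is just arithmetic on the figures quoted in the post (32 fps single card, 59 fps with both); the helper names are made up for the example.

```python
def scaling_factor(single_fps: float, multi_fps: float) -> float:
    """How many times faster the multi-GPU result is than the single card."""
    return multi_fps / single_fps


def gain_percent(single_fps: float, multi_fps: float) -> float:
    """Improvement over the single card, as a percentage."""
    return (scaling_factor(single_fps, multi_fps) - 1.0) * 100.0


if __name__ == "__main__":
    single, multi = 32.0, 59.0  # averages at 3840x2160 quoted in the post
    print(f"scaling: {scaling_factor(single, multi):.2f}x")
    print(f"gain: {gain_percent(single, multi):.0f}%")
```

That works out to about 1.84x, or an 84% gain, which is well above the 5 to 20% range mentioned earlier in the thread for typical driver-profile Crossfire.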


Good information. Careful with the terminology, though. There is no such thing as Crossfire in DX12. It is called MULTI-GPU, and it is not just semantics: MULTI-GPU is controlled in the game engine itself, whereas Crossfire under DX11 and SLI were driver dependent. Thank you, though: great information and advice.


To expand on pokester's point, it is called "Multi-GPU" in DX12 and Vulkan because the low-level APIs actually see more than one rendering device, which allows application programs to explicitly direct work to them. In DX11 and earlier, the applications only ever see one rendering device regardless of how many are present. Workload had to be directed implicitly to any additional display adapters via the display driver.


Yes, and good point, guys...;) I'm so used to saying "Crossfire" that it just rolls off the tongue--but you are entirely correct. Multi-GPU it is--because you can even pair an nVidia and an AMD GPU to run in tandem--though I would not recommend it...! Yes--I was surprised to see that SotTR supported DX12 multi-GPU, as I thought it would take some time for such support to begin appearing in game engines--but apparently building support into the engine is not as onerous as I had expected--D3D12 seems to be taking off fast--especially when we look at the history of D3D 9.0c, eh?...;) I guess it's because the market is so much bigger now than it was in 2002!

Thanking both pokester and ajlueke here! I shouldn't be saying "Crossfire" at all relative to DX12. I do want to say that if I explicitly disable Crossfire in the 18.12.2/3 drivers, then DX12 multi-GPU support in SotTR fails and drops back to a single GPU--my 590. That's sort of interesting--perhaps the driver is telling the game engine "run only GPU 0", eh? Thanks again for the comments!


That is interesting, as I thought that is exactly what you are supposed to do for multi-GPU.