- AMD Community

PC Drivers & Software

Limited resolution options with Win 10 Pro and HD 8570

Any advice or help would be greatly appreciated. I just built a web development workstation and put an HD 8570 (1GB) in it to power dual displays, but I can't set the resolution to 1920x1080, which is what my monitors use. It maxes out at 1600x1200 in the Windows settings, even though I have the latest drivers and Crimson software installed. I don't know what the problem is. I haven't owned a video card in 15 years that couldn't run 1920x1080, and since the 8570 is the equivalent of the R7 240, I can't see it being too old. I tried installing Windows 8 era Catalyst drivers just to see if it's a bug, but it still won't go higher than 1600x1200. The integrated graphics (Intel HD 2500) can run 1920x1080 fine, so it's not Windows 10 or the monitors.

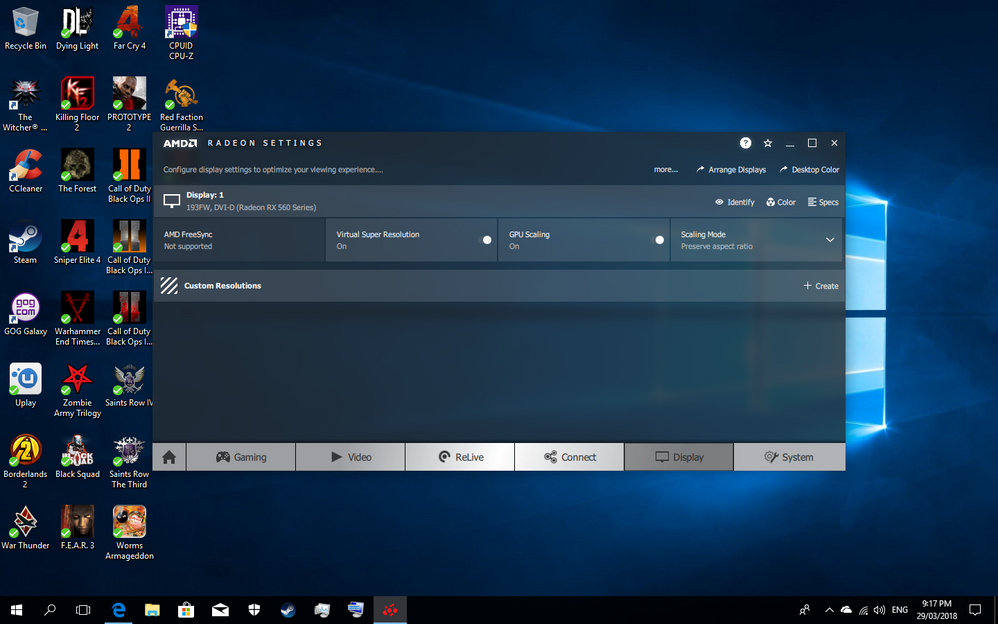

Do you have GPU scaling enabled in Wattman? Enable it and see if that helps. Are both monitors native resolution 1920x1080? Are both monitors plugged in to the graphics card?

I run GPU scaling on a not-so-great workstation card, and it makes a huge difference. Windows 10's own scaling can be a bit hit or miss, but your HD 8570 with GPU scaling enabled should be able to run 1920x1080 with ease. I am on an old AOC monitor with only a DVI cable connected, and my stepson is on an R7 360 OC 2GB with a 32-inch TV as the main monitor. GPU scaling makes a huge difference for running 1920x1080 as the native resolution.

And that's on the 18.2.1 drivers on both builds. Can you post screenshots of what's enabled so we can point you to something that may have been overlooked?

OP, this may be a bandwidth issue. Are you using the DVI output or the DP output? Someone on the Dell community forums had a similar issue where the 8570 could drive dual displays at 2560x1600, but the single-link DVI port did not have the bandwidth to support it. The solution was to use DisplayPort instead.
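
For anyone who wants to sanity-check the bandwidth idea, here is a rough sketch of the arithmetic. It assumes the 165 MHz pixel clock ceiling of single-link DVI and uses commonly published timing totals for each mode (active pixels plus blanking). The function names and the mode table are made up for illustration, and the timings your monitor and driver actually negotiate may differ.

```python
# Rough check: does a display mode fit within a single-link DVI pixel clock?
# Assumption: timing totals (active + blanking) are the commonly published
# values for each mode; a real monitor's EDID may advertise different ones.

SINGLE_LINK_DVI_MAX_MHZ = 165.0  # single-link DVI pixel clock ceiling


def pixel_clock_mhz(h_total, v_total, refresh_hz):
    """Pixel clock in MHz = total pixels per frame * frames per second."""
    return h_total * v_total * refresh_hz / 1e6


def fits_single_link_dvi(h_total, v_total, refresh_hz):
    """True if the mode's pixel clock is within the single-link limit."""
    return pixel_clock_mhz(h_total, v_total, refresh_hz) <= SINGLE_LINK_DVI_MAX_MHZ


# (h_total, v_total, refresh_hz) per mode; hypothetical table for illustration.
MODES = {
    "1600x1200": (2160, 1250, 60),  # assumed VESA DMT timing
    "1920x1080": (2200, 1125, 60),  # assumed CEA-861 timing
    "2560x1600": (2720, 1646, 60),  # assumed CVT reduced-blanking timing
}

for name, (h_total, v_total, hz) in MODES.items():
    clock = pixel_clock_mhz(h_total, v_total, hz)
    verdict = "fits" if fits_single_link_dvi(h_total, v_total, hz) else "exceeds"
    print(f"{name}: {clock:.1f} MHz, {verdict} single-link DVI")
```

With these assumed timings, 2560x1600 lands well above the single-link limit, which matches the Dell forum report, while 1920x1080 stays under it. So the output port and cable are worth checking, but bandwidth alone may not fully explain a 1600x1200 cap.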