Shader execution time & Wavefront scheduling in GCN3

Hi everyone,

I'm trying to predict shader execution times, and to do that I need to understand how the GCN3 architecture works.

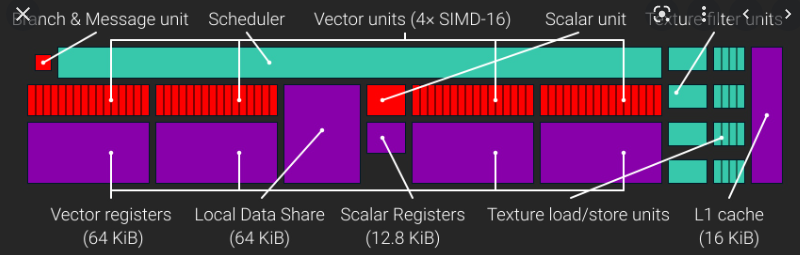

If I'm not mistaken, the GCN3 architecture is divided into Compute Units (CUs), where each CU has 4 SIMD units (16 lanes wide) and a scalar ALU, as can be seen in the following image.
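To make the numbers concrete, here is a minimal sketch of how a wavefront maps onto one 16-wide SIMD. It assumes a 64-work-item wavefront (consistent with "4 wavefronts = 256 threads" later in this post) and a simple full-rate vector instruction; the constant and function names are made up for illustration.

```python
# Assumptions: 64 work-items per wavefront, 16 lanes per SIMD, 4 SIMDs per CU.
WAVEFRONT_SIZE = 64
SIMD_WIDTH = 16
SIMDS_PER_CU = 4


def cycles_per_vector_instruction(wavefront_size=WAVEFRONT_SIZE,
                                  simd_width=SIMD_WIDTH):
    """Cycles one SIMD needs to push a whole wavefront through one
    full-rate vector ALU instruction (ceiling of size / width)."""
    return -(-wavefront_size // simd_width)


def lanes_per_cu(simd_width=SIMD_WIDTH, simds=SIMDS_PER_CU):
    """Vector lanes available per clock across all SIMDs of one CU."""
    return simd_width * simds


print(cycles_per_vector_instruction())  # 64 / 16
print(lanes_per_cu())                   # 16 * 4
```

Under these assumptions a single wavefront occupies its SIMD for 4 cycles per vector instruction, and the CU as a whole offers 64 lanes per clock.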

So far so good. The problem comes when I execute a shader and measure its execution time using GPA 3.10 (https://github.com/GPUOpen-Tools/gpu_performance_api/blob/v3.10/README.md#documentation), specifically the "Execution Time" counter.

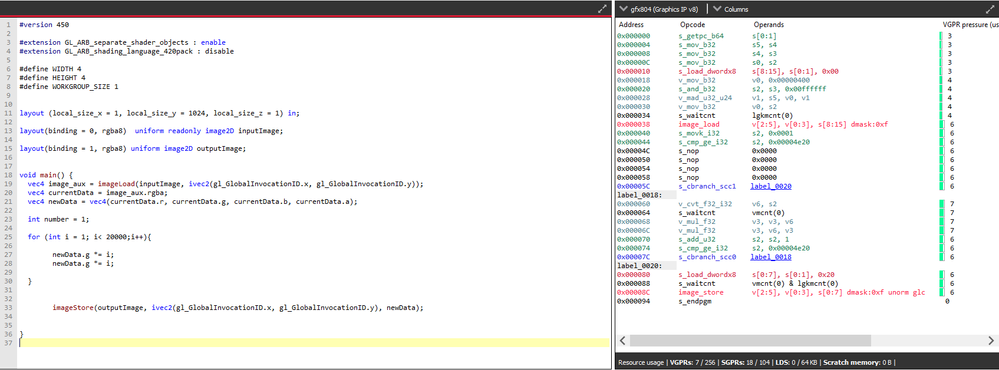

I'm using Vulkan to run the compute shader shown in the next image, which is converted to instructions using the Radeon GPU Analyzer. The local_size_y value varies with the size of the input image, which forces all threads to belong to the same workgroup and therefore to run on the same CU. Only local_size_y varies, since the input images are column matrices.

The test consists of creating column matrices of random values and increasing their size from 1x1x1 to 1x1024x1 (the maximum workgroup size), so the shader starts out running with 1 thread and ends up running with 1024 threads on the same CU.
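The sweep described above can be translated into wavefront counts with a short sketch. It assumes 64 work-items per wavefront and that a partially filled wavefront still counts as a whole one; `wavefronts_for` is a hypothetical helper name, not part of GPA or Vulkan.

```python
import math

WAVEFRONT_SIZE = 64  # assumption: work-items per wavefront on GCN3


def wavefronts_for(local_size_y, wavefront_size=WAVEFRONT_SIZE):
    """Number of wavefronts needed for a 1 x local_size_y x 1 workgroup.
    A partially filled wavefront still occupies a full wavefront slot."""
    return math.ceil(local_size_y / wavefront_size)


# Sizes right at and just past each boundary mentioned in the post.
for n in (1, 64, 65, 256, 257, 512, 513, 768, 769, 1024):
    print(f"local_size_y={n:4d} -> {wavefronts_for(n):2d} wavefront(s)")
```

With these assumptions the 1 to 1024 sweep covers 1 to 16 wavefronts, so the "9th wavefront" corresponds to workgroup sizes from 513 threads upward.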

For the first 4 wavefronts (256 threads) the execution times are the same, which makes sense since each of them runs on its own SIMD in parallel. What makes no sense to me is that the execution time stays exactly the same until the 9th wavefront, where it increases. It then fluctuates until the 12th wavefront, where it becomes constant again for the next 4 wavefronts.
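To compare the measured steps against per-SIMD load, here is a small sketch that spreads the wavefronts of one workgroup over the 4 SIMDs. The round-robin placement is only an assumption for illustration; I have not confirmed how the hardware actually assigns wavefronts to SIMDs, and `waves_per_simd` is a hypothetical helper.

```python
def waves_per_simd(num_wavefronts, simds=4):
    """Distribute wavefronts over SIMDs round-robin (assumed policy)
    and return how many wavefronts each SIMD ends up holding."""
    counts = [0] * simds
    for wave in range(num_wavefronts):
        counts[wave % simds] += 1
    return counts


# Wavefront counts at the points where the measured behaviour changes.
for waves in (4, 8, 9, 12, 13, 16):
    load = waves_per_simd(waves)
    print(f"{waves:2d} wavefronts -> per-SIMD {load}, busiest SIMD holds {max(load)}")
```

Under this assumed placement, the busiest SIMD goes from 2 to 3 wavefronts exactly at the 9th wavefront and from 3 to 4 at the 13th, which lines up with where the measured times change, while the step from 4 to 5 wavefronts (1 to 2 per SIMD) shows no visible cost.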

I'm using an E9171 graphics card that is not connected to any display; it is used only to run these compute shaders.

The data for this test is in the attached Excel file. The experiment was run twice, so the file contains the results of both runs and the time difference between them.

My questions are:

- Are the execution times the same (up to the 9th wavefront) because of the hardware scheduler? As far as I know, the scalar ALU schedules for one SIMD per clock cycle, going from SIMD0 to SIMD3 and then repeating the loop, but it seems to be optimizing somehow so that up to 8 wavefronts can run in parallel.

- I repeated the experiment, and in the fluctuating zone (9th to 12th wavefront) the times can differ by more than 1 sec between the two runs for the same input matrix size. Why is that?

- Does the scheduling process depend on the shader size? In some tests with smaller shaders (one multiplication instead of two), the times start to change at the 13th wavefront.