
OpenGL: INT_2_10_10_10_REV vertices are fetched incorrectly. A new driver bug?

Consider a vertex buffer with the following content:

FFFIFFFIFFFIFFFIFFFIFFFI

Here each F is a 32-bit float and each I is a 32-bit value that will be read as INT_2_10_10_10_REV. There are no gaps or padding, so every component is 4-byte aligned.
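To reproduce this layout without the linked sources, here is a minimal C sketch of one FFFI vertex and of packing a unit normal into an INT_2_10_10_10_REV word. The names `Vertex`, `snorm10` and `pack_2_10_10_10_rev` are hypothetical and not taken from the original test program. The packing assumes the GL 4.2+ signed-normalized rule: scale by 511, store two's complement in each 10-bit field, x in the lowest bits, w in the top 2 bits.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical vertex layout: three floats (F F F) followed by one
   32-bit word (I) that the GL reads as GL_INT_2_10_10_10_REV. */
typedef struct {
    float    pos[3];
    uint32_t normal;
} Vertex;

/* No gaps or padding: 16 bytes per vertex, I at byte offset 12. */
static_assert(sizeof(Vertex) == 16, "FFFI must be 16 bytes");
static_assert(offsetof(Vertex, normal) == 3 * sizeof(float), "I must follow FFF");

/* Float in [-1, 1] -> signed 10-bit field (round half away from zero). */
static uint32_t snorm10(float f) {
    if (f > 1.0f) f = 1.0f;
    if (f < -1.0f) f = -1.0f;
    int32_t c = (int32_t)(f * 511.0f + (f >= 0.0f ? 0.5f : -0.5f));
    return (uint32_t)c & 0x3FFu; /* keep the low 10 bits of two's complement */
}

/* x -> bits 0-9, y -> bits 10-19, z -> bits 20-29, w = 1 -> bits 30-31. */
static uint32_t pack_2_10_10_10_rev(float x, float y, float z) {
    return snorm10(x) | (snorm10(y) << 10) | (snorm10(z) << 20) | (1u << 30);
}
```

For example, a +Z normal packs to 0x5FF00000 (z = 511, w = 1), and a -X normal packs to 0x40000201 (x = -511 stored as 0x201).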

Vertex inputs in the shader are declared as:

```glsl
layout(location = 0) in vec3 v1;
layout(location = 2) in vec3 n1;
layout(location = 6) in vec3 v2;
layout(location = 7) in vec3 n2;
```

All OpenGL state is set up according to the specification (details omitted; see the link to the full source below):

```c
// location 0: v1, the first FFF group of each vertex
glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, stride, (void*)(0));
// location 2: n1, the packed I right after it (packed formats require size 4)
glVertexAttribPointer(2, 4, GL_INT_2_10_10_10_REV, GL_TRUE, stride, (void*)(3 * sizeof(float)));
// locations 6 and 7: the same layout, starting at triangle2Begin
glVertexAttribPointer(6, 3, GL_FLOAT, GL_FALSE, stride, (void*)(triangle2Begin));
glVertexAttribPointer(7, 4, GL_INT_2_10_10_10_REV, GL_TRUE, stride, (void*)(3 * sizeof(float) + triangle2Begin));
```

We draw 3 vertices as GL_TRIANGLES, so v1 reads the first three FFF groups from the buffer and n1 reads the first three I's (at positions 3, 7 and 11, counting in 4-byte words from 0). v2 reads the second three FFF groups and n2 reads the second three I's.
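These positions follow from the usual fetch rule, offset = base + vertex_index × stride. The C sketch below checks them under two assumptions the post does not state explicitly: `stride` is one FFFI group (16 bytes) and `triangle2Begin` is 3 × stride (48 bytes). The names `STRIDE`, `TRIANGLE2_BEGIN`, the `*_BASE` constants and `word_index` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed values: one FFFI group per vertex, second triangle after three vertices. */
enum { STRIDE = 4 * 4, TRIANGLE2_BEGIN = 3 * STRIDE };
static_assert(TRIANGLE2_BEGIN == 48, "second triangle starts at byte 48");

/* Base byte offsets passed as the last argument of glVertexAttribPointer. */
enum {
    V1_BASE = 0,
    N1_BASE = 3 * 4,                  /* 3 * sizeof(float) */
    V2_BASE = TRIANGLE2_BEGIN,
    N2_BASE = 3 * 4 + TRIANGLE2_BEGIN
};

/* Index, in 4-byte words, that a conforming driver reads for a given
   attribute base offset and vertex index (0, 1 or 2). */
static size_t word_index(size_t base, size_t vertex) {
    return (base + vertex * STRIDE) / 4;
}
```

With these assumptions, `word_index(N1_BASE, k)` gives 3, 7, 11 and `word_index(N2_BASE, k)` gives 15, 19, 23 for k = 0, 1, 2, while v2 starts at word 12.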

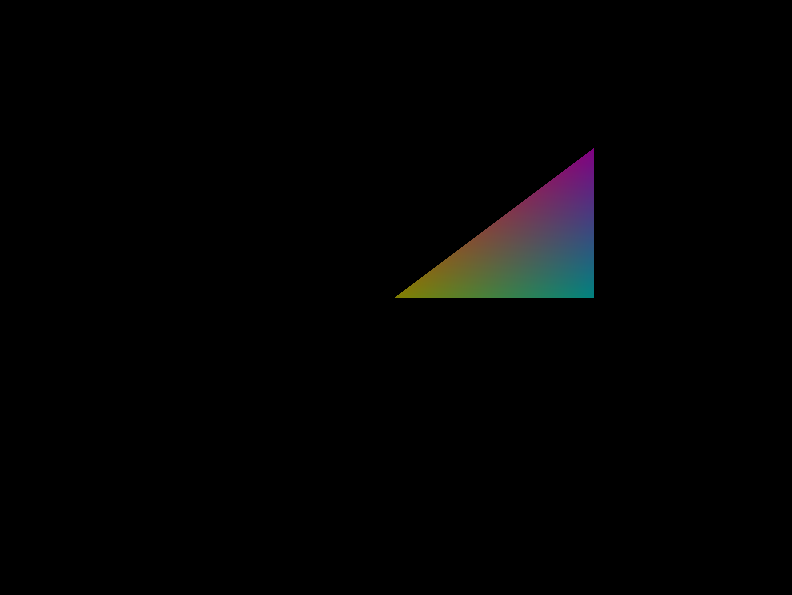

Expected behavior: we should see a window with a smoothly colored triangle:

this is what we see on Nvidia or Intel hardware, and on AMD hardware (tested with RX550, Windows 10) with the driver version below 27.20.14501.

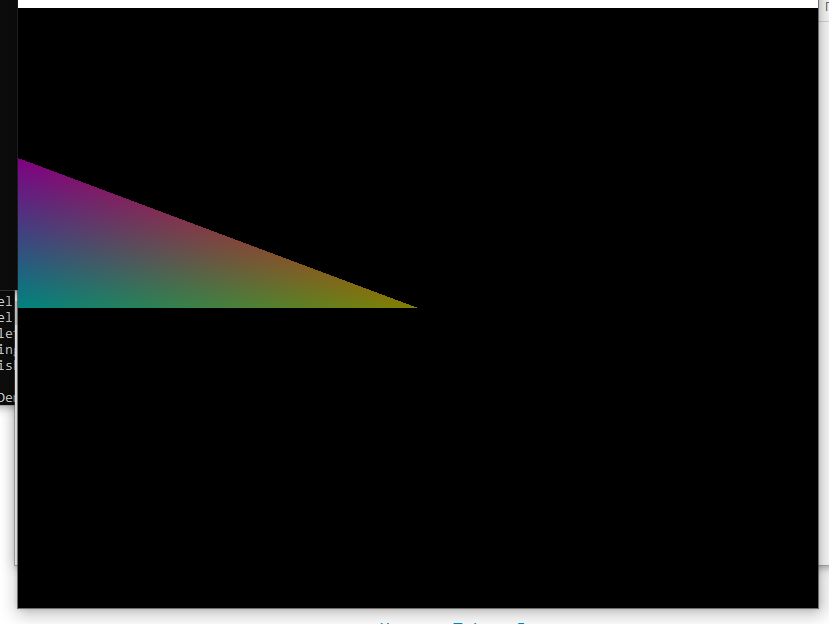

Observed behavior (with new 27.20.14501 driver):

i.e. all v2 and n2 vertices are sampled from the first vertex they should be sampled from. The problem is widespread: we got reports from various RX5**, R7 and R9 owners.

Link to a repro executable:

https://drive.google.com/file/d/12rEKVJVjwNe4_NBNDQMkHdI-OSeiit_d/view?usp=sharing

Here is an executable that works correctly on all machines:

https://drive.google.com/file/d/1xeWT5ulZScq-bWCY9NU777DZKE2J-43w/view?usp=sharing

In it, the INT_2_10_10_10_REV values are replaced with three floats, to show that this kind of buffer usage works with a type other than INT_2_10_10_10_REV.

Example sources:

https://drive.google.com/file/d/1X7nXyljeeLx5JU8ZpwLe6-Dl0dgKv0zQ/view?usp=sharing

On Windows with MSVC, it can be built with:

```
cmake -G "NMake Makefiles" path\to\source
nmake
```

The resulting amd_test_2_10_10_10.exe reproduces the problem.


I installed the latest driver, which reports GL_VERSION as

4.6.14761 Compatibility Profile/Debug Context 21.30.23.01 30.0.13023.1012

The problem is still present, but the test program now looks like this:

I also found another workaround. In the test program, the 4th argument of glVertexAttribPointer (GLboolean normalized) was GL_TRUE. If we set it to GL_FALSE and normalize the value manually in the shader (i.e. divide by 512.0 for the GL_INT_2_10_10_10_REV format), the program works as expected.
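The arithmetic behind this workaround can be checked on the CPU. The C sketch below is not the shader from the post, and its function names are hypothetical. It sign-extends a 10-bit field and compares the GL 4.2+ signed-normalized conversion, max(c / 511, -1), which is what GL_TRUE should produce, with the manual division by 512.0. The two differ by at most about 1/512, which is likely negligible for normals. In GLSL the workaround is simply dividing the raw vec3 input by 512.0 (or by 511.0 to match the specification exactly).

```c
#include <assert.h>
#include <stdint.h>

/* Sign-extend the 10-bit field at bit offset 'shift' (0, 10 or 20). */
static int32_t field10(uint32_t packed, unsigned shift) {
    assert(shift == 0 || shift == 10 || shift == 20);
    int32_t v = (int32_t)((packed >> shift) & 0x3FFu);
    return v >= 512 ? v - 1024 : v;
}

/* What normalized = GL_TRUE should yield (GL 4.2+ signed-normalized rule). */
static float snorm10_to_float(int32_t c) {
    float f = (float)c / 511.0f;
    return f < -1.0f ? -1.0f : f;
}

/* The workaround: normalized = GL_FALSE, then divide manually in the shader. */
static float manual_div512(int32_t c) {
    return (float)c / 512.0f;
}
```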