AMD Community › Red Team › Off Topic Discussions

10-bit

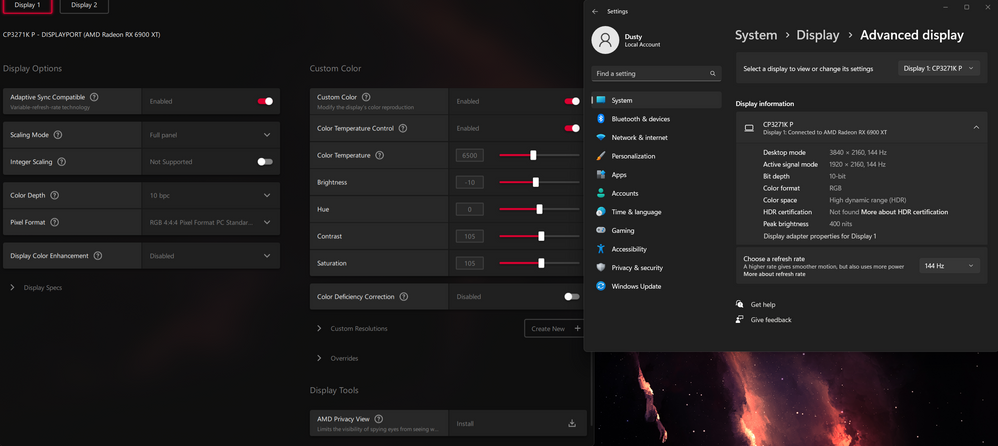

Has anyone else been messing around with 10-bit on their displays since 22.10.1? I was looking at my settings recently and noticed I could once again use it without apparent issues.


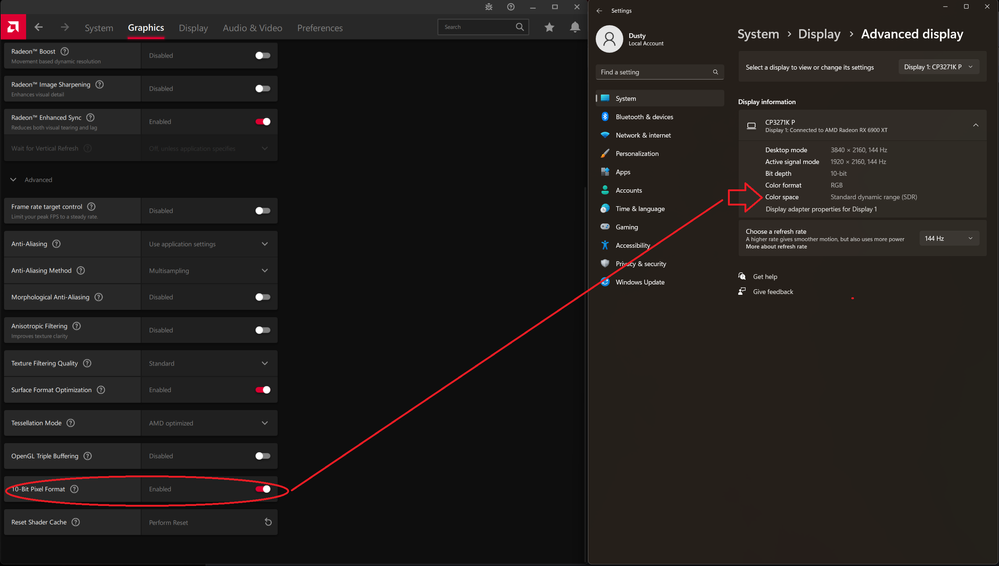

Interestingly, though, enabling this apparently disables HDR capabilities.

However, it's still 10-bit, which I find interesting. Does anyone know why toggling this disables (Windows) HDR capabilities?


I wonder if 10-bit is a far greater advancement than HDR, and so there's a conflict? I know of "4K", but more pixels on a screen are not more bits inside each pixel. Going from 8 to 10 bits per channel is only a 25% increase in bits, yet, advancement-wise, it sounds extra spectacular.

I wonder if certain resolutions only pertain to certain screen sizes, unless the bits are there? My guess at an answer to your question, if true, would be: 10-bit is the greater technology and HDR is not, though HDR is still great stuff, so don't tax your monitor? Eeps! "Emergency" edit: maybe it's "don't tax your neato graphics card with faulty, unfortunately often interdependent, techs!"
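To put numbers on the bit-depth comparison above, here is a small Python sketch assuming plain RGB with three channels per pixel (the function names are hypothetical, chosen only for illustration). Going from 8 to 10 bits per channel adds 25% more bits per pixel (24 to 30), but each channel goes from 256 to 1024 levels, so the number of representable colors grows 64-fold.

```python
def levels_per_channel(bits: int) -> int:
    """Distinct intensity steps a single color channel can represent."""
    return 2 ** bits


def total_colors(bits: int, channels: int = 3) -> int:
    """Distinct RGB colors for a given bit depth per channel."""
    return levels_per_channel(bits) ** channels


def bits_per_pixel(bits: int, channels: int = 3) -> int:
    """Raw bits needed to store one pixel at this bit depth."""
    return bits * channels


if __name__ == "__main__":
    for depth in (8, 10):
        print(f"{depth}-bit: {levels_per_channel(depth)} levels/channel, "
              f"{total_colors(depth):,} colors, {bits_per_pixel(depth)} bits/pixel")
    # Relative growth when going from 8 to 10 bits per channel
    print(f"bits per pixel: x{bits_per_pixel(10) / bits_per_pixel(8)}")
    print(f"colors: x{total_colors(10) // total_colors(8)}")
```

The point it makes: the extra bits are a modest increase in data per pixel, while the gain in gradation (fewer visible banding steps) is much larger.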


Must be something with your display or the cable you are using.

I have 10-bit enabled in Adrenalin, and HDR is on in Windows.

I am using 22.3.1, though.


Perhaps, but I've tested it with two sets of *very* expensive cables. (The monitor requires 2x DP cables to fully function.)

Both sets cost ~45-60 per cable and were sold by reputable vendors and companies with the appropriate certifications for their claimed ratings.

I've also tried a cheaper set of Furui nylon cables that claim 80 Gbps (~45/2), with the same results.
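The cable theory in this thread comes down to link bandwidth, which scales linearly with bit depth. The Python sketch below is a rough, hypothetical estimate: it assumes an uncompressed RGB signal split evenly across the two cables, ignores blanking intervals and encoding overhead, and uses an illustrative 7680x4320 @ 60 Hz mode because the thread never states the monitor's actual resolution or refresh rate.

```python
# Hypothetical display mode: the thread never states the monitor's actual
# resolution or refresh rate, so these numbers are illustrative only.
WIDTH, HEIGHT, REFRESH_HZ = 7680, 4320, 60
LINKS = 2  # the monitor in the thread needs two DisplayPort cables


def active_data_rate_gbps(width: int, height: int, refresh_hz: int,
                          bits_per_channel: int, channels: int = 3) -> float:
    """Uncompressed RGB data rate for active pixels only, in Gbit/s.

    Ignores blanking intervals and link-encoding overhead, so a real link
    needs somewhat more than this figure.
    """
    bits_per_second = width * height * refresh_hz * channels * bits_per_channel
    return bits_per_second / 1e9


if __name__ == "__main__":
    for bpc in (8, 10):
        total = active_data_rate_gbps(WIDTH, HEIGHT, REFRESH_HZ, bpc)
        # Assumes the load is split evenly across the two cables
        print(f"{bpc} bpc: {total:.2f} Gbit/s total, "
              f"{total / LINKS:.2f} Gbit/s per cable")
```

At any fixed mode the 10 bpc figure is exactly 1.25x the 8 bpc one, so a link with headroom at 8 bpc may have none at 10 bpc. For comparison, a DisplayPort 1.4 link at HBR3 carries roughly 25.92 Gbit/s of payload after 8b/10b encoding. Whether bandwidth actually explains the HDR toggle behavior here is not established in the thread.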