The persistent growth of data and the need for real-time results are the industry drivers pushing the limits of innovation to tackle the diverse and complex requirements of artificial intelligence (AI) and high-performance computing (HPC) applications. HPC and AI implementations place similar demands on the underlying architecture: high levels of compute, storage, large memory capacity, and high memory bandwidth. How they use those resources, however, differs.

AI applications require significant computing speed at lower precisions, along with large memory capacity, to scale generative AI, train models, and make predictions. HPC applications, in contrast, need computing power at higher precisions and resources that can handle large amounts of data and execute the complex simulations required for scientific discovery, weather forecasting, and other data-intensive workloads. The newest family of AMD accelerators, the AMD Instinct™ MI300 Series, features the third-generation AMD Compute DNA (AMD CDNA™ 3) architecture and offers two distinct variants designed to address these specific AI and HPC requirements. The updated AMD ROCm™ 6 open, production-ready software platform lets partners develop and quickly deploy scalable solutions across all modern workloads to address some of the world’s most important challenges with incredible efficiency.
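Why does precision matter so much to HPC in particular? A minimal sketch, assuming a toy accumulator, shows what happens when a long-running sum is kept in half precision (FP16) instead of double precision (FP64). It uses Python's standard `struct` module, whose `"e"` format code packs a float as IEEE 754 binary16, to emulate FP16 rounding; the helper names `to_fp16` and `accumulate` are hypothetical. This is an illustration of rounding error, not a model of how GPU matrix units work (mixed-precision AI kernels often accumulate in a higher precision such as FP32).

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float (IEEE 754 double, FP64) to the nearest FP16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def accumulate(value: float, steps: int, half_precision: bool) -> float:
    """Add `value` to a running total `steps` times. In half-precision mode,
    the addend and the running total are rounded to FP16 after every step."""
    step = to_fp16(value) if half_precision else value
    total = 0.0
    for _ in range(steps):
        total += step
        if half_precision:
            total = to_fp16(total)  # emulate storing the sum in FP16
    return total


fp64_total = accumulate(0.1, 10_000, half_precision=False)
fp16_total = accumulate(0.1, 10_000, half_precision=True)
print(f"FP64 accumulator: {fp64_total}")
print(f"FP16 accumulator: {fp16_total}")
```

The FP64 total lands within a tiny rounding error of 1,000, while the FP16 total stalls at 256.0: above 256 the gap between adjacent FP16 values is 0.25, so adding roughly 0.1 rounds straight back down. Errors of this kind are tolerable for many AI workloads but not for long-running scientific simulations.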

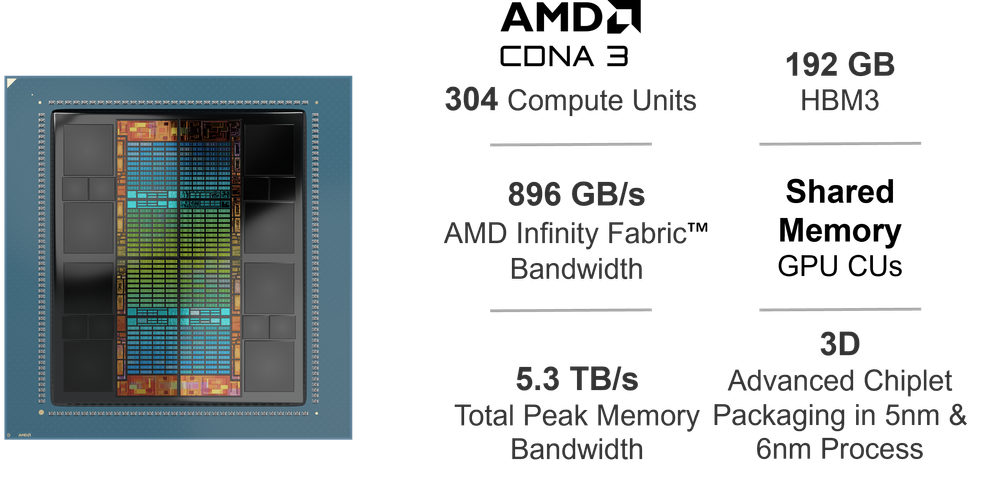

AMD CDNA™ 3 Architecture

AMD CDNA 3 architecture (Figure 1 below) is a fundamental shift in the AMD accelerated computing strategy, embracing advanced packaging to enable heterogeneous integration and deliver exceptional performance. By changing the computing paradigm with a coherent programming model that tightly couples CPUs and GPUs, it gives customers the density and power efficiency to tackle the most demanding problems of our era. The redesigned architecture fully leans into packaging, repartitioning the compute, memory, and communication elements of the processor across a heterogeneous package that integrates up to eight vertically stacked GPU accelerator compute dies (XCDs) and four I/O dies (IODs), all tied together with 4th Gen AMD Infinity Architecture. Eight stacks of high-bandwidth memory are also integrated, enhancing performance, efficiency, and programmability across the AMD Instinct™ MI300 Series family. This approach makes it easy to build AMD Instinct accelerator variants from CPU and GPU chiplets to meet the requirements of different workloads. In the new AMD Instinct MI300A APU, for example, three “Zen 4” x86 CPU compute dies are tightly coupled with six AMD CDNA 3 XCDs. They share a single pool of virtual and physical memory, along with an AMD Infinity Cache™ memory with extraordinarily low latency, creating the world’s first high-performance, hyperscale-class APU.
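The chiplet approach means each MI300 variant is essentially a different mix of the same building blocks. A minimal sketch, assuming a simplified model, captures that idea as a small data structure and derives headline core counts from the die mix. The class `Mi300Package` and its fields are hypothetical. The per-die figures (38 active compute units per XCD, 64 stream processors per compute unit, 8 cores per “Zen 4” CPU die) come from AMD's published CDNA 3 and “Zen 4” specifications rather than from this post, so treat them as stated assumptions.

```python
from dataclasses import dataclass

# Assumed per-die figures (from AMD's published specifications, not this post):
CUS_PER_XCD = 38               # active compute units (CUs) per XCD
STREAM_PROCESSORS_PER_CU = 64  # stream processors per CU
CORES_PER_CCD = 8              # "Zen 4" cores per CPU compute die (CCD)


@dataclass(frozen=True)
class Mi300Package:
    """Simplified, hypothetical model of an MI300 Series package built from chiplets."""
    name: str
    iods: int        # I/O dies (four in both variants)
    xcds: int        # AMD CDNA 3 GPU accelerator compute dies
    ccds: int        # "Zen 4" x86 CPU compute dies
    hbm_stacks: int  # high-bandwidth memory stacks (eight in both variants)

    @property
    def cpu_cores(self) -> int:
        return self.ccds * CORES_PER_CCD

    @property
    def stream_processors(self) -> int:
        return self.xcds * CUS_PER_XCD * STREAM_PROCESSORS_PER_CU


# MI300A: three CPU dies plus six XCDs on four IODs.
MI300A = Mi300Package("MI300A", iods=4, xcds=6, ccds=3, hbm_stacks=8)
# MI300X: the three CPU dies are swapped for two additional XCDs.
MI300X = Mi300Package("MI300X", iods=4, xcds=MI300A.xcds + 2, ccds=0, hbm_stacks=8)

for pkg in (MI300A, MI300X):
    print(f"{pkg.name}: {pkg.cpu_cores} CPU cores, "
          f"{pkg.stream_processors:,} stream processors")
```

For the MI300A this reproduces the 24 CPU cores and 14,592 GPU stream processors quoted for that part; the same arithmetic gives 19,456 stream processors for an eight-XCD MI300X.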

Figure 1: AMD CDNA™ 3 Architecture At-a-Glance

AMD Instinct™ MI300A APU: Purpose-built HPC APU

The AMD Instinct MI300A APU accelerator is purpose-built to handle large datasets, making it ideal for compute-intensive modeling and advanced analytics. The MI300A (Figure 2 below) leverages a groundbreaking 3D chiplet design that integrates 3D-stacked “Zen 4” x86 CPUs and AMD CDNA 3 GPU XCDs onto the same package with high-bandwidth memory (HBM). The AMD Instinct MI300A APU has 24 CPU cores and 14,592 GPU stream processors. This architecture gives MI300A accelerators breakthrough performance, density, and power efficiency for accelerated HPC and AI applications. The MI300A is expected to be deployed in many of the world’s largest, most scalable data centers and supercomputers for discovery, modeling, and prediction, helping the scientific and engineering communities drive further advances in healthcare, energy, climate science, transportation, scientific research, and more. This includes powering the upcoming two-exaflop El Capitan supercomputer, expected to be one of the fastest in the world when it comes online.

Figure 2: AMD Instinct™ MI300A APU accelerator

AMD Instinct™ MI300X Accelerator: Designed for Cutting-Edge AI

The AMD Instinct MI300X accelerator (Figure 3 below) is designed for large language models and other cutting-edge AI applications that require training on massive datasets and inference at scale. To accomplish this, the MI300X replaces the three “Zen 4” CPU chiplets integrated on the MI300A with two additional AMD CDNA 3 XCD chiplets and adds 64GB more HBM3 memory. The result, with up to 192GB of memory, is a GPU optimized to run larger AI models. Running larger language models directly in memory allows cloud providers and enterprise users to run more inference jobs per GPU than ever before, which can reduce the total number of GPUs needed, speed up inference, and help lower the total cost of ownership (TCO).
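To make the “fewer GPUs” reasoning concrete, here is a back-of-the-envelope sketch, assuming that only the model weights must fit in device memory. It uses the 192GB MI300X capacity from the text and 128GB for the MI300A (192GB minus the 64GB the MI300X adds). The model sizes, the 2-bytes-per-parameter figure for FP16/BF16 weights, and the 10% reserve for KV cache, activations, and runtime overhead are all illustrative assumptions; real inference deployments, especially with long contexts, can need far more headroom. The helper names are hypothetical, and GB versus GiB is ignored for simplicity.

```python
import math

# Per-device HBM capacity in GB (192 GB stated for MI300X; 128 GB for MI300A).
HBM_GB = {"MI300X": 192, "MI300A": 128}

# Assumed bytes per parameter for common weight formats.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weights_gb(params_billions: float, dtype: str) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def min_gpus(params_billions: float, dtype: str, device: str,
             usable_fraction: float = 0.9) -> int:
    """Minimum number of devices whose combined memory holds the weights,
    reserving (1 - usable_fraction) of each device for everything else."""
    usable_per_device = HBM_GB[device] * usable_fraction
    return math.ceil(weights_gb(params_billions, dtype) / usable_per_device)


# Hypothetical model sizes, in billions of parameters.
for size in (13, 70, 180):
    counts = {dev: min_gpus(size, "fp16", dev) for dev in HBM_GB}
    print(f"{size}B params @ fp16 ({weights_gb(size, 'fp16'):.0f} GB): {counts}")
```

Under these assumptions, a 70B-parameter model in FP16 (about 140GB of weights) fits on a single 192GB device but needs two 128GB devices, which is the kind of consolidation the paragraph above describes.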

Figure 3: AMD Instinct™ MI300X accelerator

Open. Proven. Ready.

The AMD ROCm™ 6 open-source software platform is optimized to extract the best HPC and AI workload performance from AMD Instinct MI300 accelerators while maintaining compatibility with industry software frameworks. ROCm consists of a collection of drivers, development tools, and APIs that enable GPU programming from the low-level kernel to end-user applications, and it is customizable to meet your specific needs. You can develop, collaborate, test, and deploy your applications in a free, open-source, integrated software ecosystem with robust security features. Once written, the software is portable, allowing movement between accelerators from different vendors or between different inter-GPU connectivity architectures, all in a device-independent manner. ROCm is particularly well suited to GPU-accelerated high-performance computing (HPC), artificial intelligence (AI), scientific computing, and computer-aided design (CAD). A collection of advanced GPU software containers and deployment guides for HPC, AI, and machine learning applications is now available on the AMD Infinity Hub to help speed up your system deployments and time to insight.

Conclusion

Data growth and the need for real-time results will continue to push the boundaries of HPC and AI applications. AMD continues to support an open-source strategy to democratize AI and drive industry-wide innovation, differentiation, and collaboration with our partners and customers, from single-server solutions up to the world’s largest supercomputers. Whether you are preparing to run the next supercomputer scientific simulation or to find the most economical shipping route, the next-generation AMD CDNA 3 architecture, AMD Instinct MI300 Series accelerators, and the ROCm 6 software platform are built to optimize your applications and further your goals. Stay tuned for upcoming announcements from our system solution partners.

Learn More

Learn more about the 3rd Gen AMD CDNA™ architecture.

Learn more about the latest AMD Instinct™ MI300 Series accelerators.

Visit the AMD Infinity Hub to learn about our AMD Instinct™ supported containers.

Learn more about the AMD ROCm™ open software platform.

Guy Ludden is Sr. Product Marketing Mgr. for AMD. His postings are his own opinions and may not represent AMD’s positions, strategies, or opinions. Links to third-party sites are provided for convenience and, unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied.