We are delighted to announce that starting with the PyTorch 1.12 release, AMD ROCm™ support has moved from Beta to Stable. Stable status brings long-term maintenance for PyTorch on the ROCm software stack, along with performant, proven features that support end-user adoption. This is an important evolutionary step for AMD ROCm, as AMD Instinct™ GPUs gain mainstream support with an installable Python package hosted on PyTorch.org. AMD Radeon™ Pro W6800 graphics cards are also supported for developers.

PyTorch is one of the most popular open-source machine learning frameworks, used by researchers, scientists, academics, and a broad range of industries. The move to Stable ROCm support is the result of a strong partnership between PyTorch and AMD. "We are excited to partner with AMD to grow our PyTorch support on ROCm enabling the vibrant PyTorch community to quickly adopt the latest generation of AMD Instinct GPUs with great performance for major AI use cases running on PyTorch," says Soumith Chintala (Software Engineer Lead, PyTorch).

Installing PyTorch

Install PyTorch Using Wheels Package

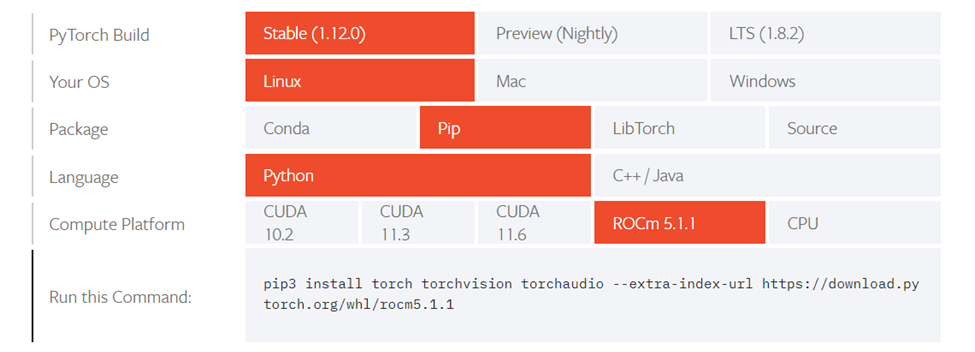

PyTorch supports the ROCm platform by providing tested wheel packages. To access these packages, refer to https://pytorch.org/get-started/locally/, and choose the ‘ROCm’ compute platform.

Figure 1: Installation Matrix from Pytorch.org
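Selecting ‘ROCm’ in the matrix produces a pip command whose extra index URL encodes the ROCm version. The sketch below makes that mapping explicit. It is illustrative only: the helper names `rocm_index_url` and `pip_install_command` are hypothetical, and the URL pattern is inferred from the ROCm 5.1.1 example used in the installation steps, so always confirm the exact command on pytorch.org.

```python
"""Build the pip command for a PyTorch ROCm wheel (illustrative sketch)."""
import shlex

# Assumption: pattern inferred from the ROCm 5.1.1 example in this post;
# confirm the exact URL on the pytorch.org installation matrix.
PYTORCH_WHEEL_BASE = "https://download.pytorch.org/whl"


def rocm_index_url(rocm_version: str) -> str:
    """Return the extra index URL for a ROCm compute platform such as '5.1.1'."""
    parts = rocm_version.split(".")
    # Reject anything that is not dot-separated numbers, e.g. '' or 'latest'.
    if not all(part.isdigit() for part in parts):
        raise ValueError(f"unexpected ROCm version: {rocm_version!r}")
    return f"{PYTORCH_WHEEL_BASE}/rocm{rocm_version}"


def pip_install_command(rocm_version: str,
                        packages=("torch", "torchvision", "torchaudio")) -> str:
    """Return a shell-quoted pip3 command that follows the matrix pattern."""
    args = ["pip3", "install", *packages,
            "--extra-index-url", rocm_index_url(rocm_version)]
    return shlex.join(args)


if __name__ == "__main__":
    print(pip_install_command("5.1.1"))
```

The ROCm version passed here should match the user-space ROCm version in the Docker image used below; a mismatch is a common source of installation problems.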

Follow the installation instructions on that page. The steps below show an example installation using Docker.

1. Obtain a base Docker image with the correct user-space ROCm version installed from https://hub.docker.com/r/rocm/dev-ubuntu-20.04, or download a base OS Docker image and install ROCm following the ROCm installation directions. In this example, ROCm 5.1.1 is installed, as supported by the installation matrix on the PyTorch.org website.

```shell
docker pull rocm/dev-ubuntu-20.04:5.1.1
```

2. Start the Docker container.

```shell
docker run -it --device=/dev/kfd --device=/dev/dri --group-add video rocm/dev-ubuntu-20.04:5.1.1
```

3. Inside the Docker container, install the dependencies needed for installing the wheels.

```shell
apt update
apt install libjpeg-dev python3-dev
pip3 install wheel setuptools
```

4. Install torch, torchvision, and torchaudio as specified by the installation matrix.

```shell
pip3 install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/rocm5.1.1
```
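After step 4, it can help to confirm that the ROCm build of PyTorch was installed and can see the GPU. The following is a minimal sketch, not an official AMD or PyTorch check. It relies on `torch.version.hip`, which is a version string on ROCm builds and `None` on other builds, and on the fact that ROCm builds expose AMD GPUs through the `torch.cuda` API. The function name `check_rocm_install` is hypothetical.

```python
"""Sanity-check a PyTorch ROCm installation (sketch)."""
import importlib.util


def check_rocm_install() -> dict:
    """Summarize the installed PyTorch build; does not raise if torch is missing."""
    summary = {
        "torch_installed": False,
        "torch_version": None,
        "hip_version": None,
        "gpu_available": False,
        "device_name": None,
        "smoke_test": None,
    }
    if importlib.util.find_spec("torch") is None:
        return summary

    import torch

    summary["torch_installed"] = True
    summary["torch_version"] = torch.__version__
    # ROCm builds set torch.version.hip to a version string; other builds use None.
    summary["hip_version"] = getattr(torch.version, "hip", None)
    # ROCm builds reuse the torch.cuda namespace, so AMD GPUs are reported here.
    summary["gpu_available"] = torch.cuda.is_available()
    if summary["gpu_available"]:
        summary["device_name"] = torch.cuda.get_device_name(0)
        # Tiny computation on the GPU: four ones doubled sum to 8.0.
        x = torch.ones(4, device="cuda")
        summary["smoke_test"] = float((x * 2).sum().item())
    return summary


if __name__ == "__main__":
    for key, value in check_rocm_install().items():
        print(f"{key}: {value}")
```

On a working ROCm setup inside the container, `hip_version` should be non-empty, `gpu_available` should be `True`, and `smoke_test` should be `8.0`.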

Other options and instructions for installing PyTorch, including AMD Infinity Hub Docker containers, are available at Deep Learning Frameworks (amd.com).

Using Docker gives you portability and access to a pre-built container that has been rigorously tested within AMD. It can also save compilation time, and because the container has already been tested, it should behave as it did in testing, avoiding potential installation issues.

Conclusion

The AMD ROCm software platform support continues to grow and gain traction in the industry. With ROCm support transitioning from Beta to Stable, PyTorch researchers and users can continue to innovate using AMD Instinct GPUs and the ROCm software stack with all the major features and functionality.

More Information

PyTorch webpage: PyTorch

ROCm webpage: AMD ROCm™ Open Software Platform | AMD

ROCm Information Portal: AMD Documentation - Portal

AMD Instinct Accelerators: AMD Instinct™ Accelerators | AMD

AMD Infinity Hub: AMD Infinity Hub | AMD

Mahesh Balasubramanian is Dir. of Product Marketing Mgr. in the AMD Data Center GPU Business Unit. His postings are his own opinions and may not represent AMD’s positions, strategies or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied.