AMD Community - General Discussions

Microsoft says vaguely that DXR will work with hardware that's currently on the market

Microsoft says vaguely that DXR will work with hardware that's currently on the market and that it will have a fallback layer that will let developers experiment with DXR on whatever hardware they have. Should DXR be widely adopted, we can imagine that future hardware may contain features tailored to the needs of raytracing. On the software side, Microsoft says that EA (with the Frostbite engine used in the Battlefield series), Epic (with the Unreal engine), Unity 3D (with the Unity engine), and others will have DXR support soon.

This was taken from an article published today about the new technology being spearheaded by Nvidia and Microsoft.

Of course it will.

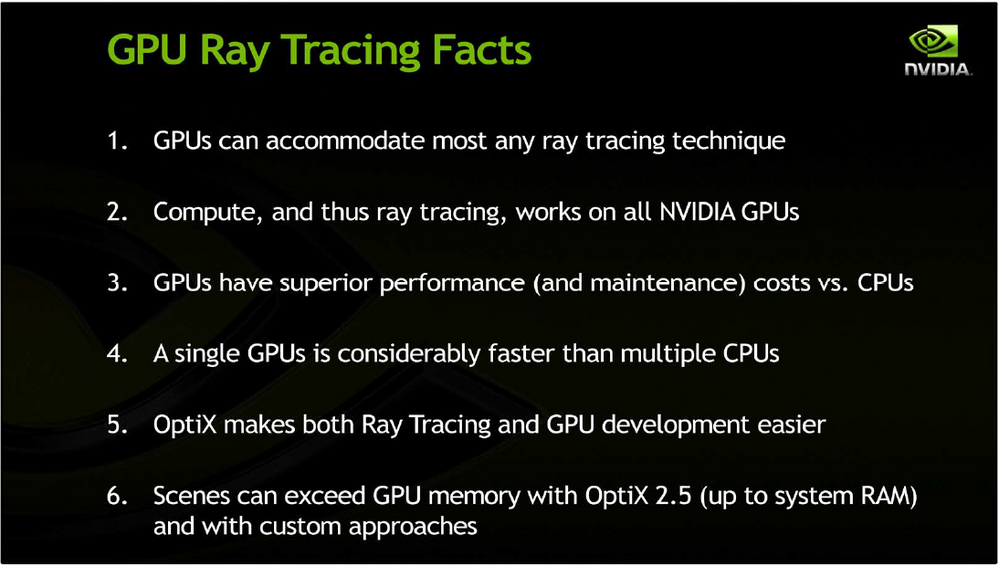

From NVidia's slide deck at SIGGRAPH 2012 concerning ray tracing on Kepler.

Ray tracing with DXR is essentially a regular compute workload for the GPU, so it should work on any GPU that supports DX12 (which goes back a ways). It just depends on how each GPU performs under that load, which I'm sure won't be good on the GPUs currently on the market, lol. That's why Nvidia built this whole hardware-accelerated RTX engine for their upcoming 20 series: so they can reach playable performance first.
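To make the "regular compute workload" point concrete: each pixel's ray can be traced on its own, with no blend state or vertex layout involved. Below is a minimal CPU sketch in Python, assuming a single sphere and a simple orthographic camera (both hypothetical, chosen only to keep it short). It is not how DXR itself is programmed (DXR shaders are written in HLSL), but it shows why every pixel could be handed to an independent GPU thread.

```python
import math

def intersect_sphere(origin, direction, center, radius):
    """Return the nearest hit distance t > 0 along the ray, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2 for t,
    assuming `direction` has unit length.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))  # half of the quadratic's linear term
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None                                # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):               # nearer root first
        if t > 1e-6:
            return t
    return None

def render(width, height, center=(0.0, 0.0, 3.0), radius=1.0):
    """Trace one primary ray per pixel (hypothetical orthographic camera looking down +z).

    No pixel depends on any other, so on a GPU each iteration could be an
    independent compute thread.
    """
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Map the pixel centre onto a 3 x 3 film plane at z = 0.
            px = (x + 0.5) / width * 3.0 - 1.5
            py = (y + 0.5) / height * 3.0 - 1.5
            t = intersect_sphere((px, py, 0.0), (0.0, 0.0, 1.0), center, radius)
            row.append(0 if t is None else 1)
        image.append(row)
    return image

if __name__ == "__main__":
    for row in render(24, 12):
        print("".join("#" if hit else "." for hit in row))
```

Running it prints an ASCII disc. The per-pixel loop body is the part a GPU would run in parallel.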

I'm not sure that is the whole reason. Nvidia doesn't want to cannibalize its pro cards by giving regular users more compute units. Doing it this way, they can control which workloads get accelerated without giving consumer cards other capabilities. So it may be more of a control issue.

Just adding more compute units wouldn't be efficient, though. At 12nm, which I think is what they're using for Turing, doubling (or more) the number of compute units compared to Pascal, for example, would result in a huge, expensive, power-hungry chip, and they would probably get a much lower yield of fully functioning dies than usual. That just wouldn't make sense for a gaming card, which is why they are going the RTX route: to get ray tracing performance sooner without having to build a giant chip full of compute units.
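The yield point can be made concrete with the classic Poisson yield model, Y = exp(-A·D), where A is the die area and D the defect density, combined with a common gross-dies-per-wafer approximation. This is a minimal sketch: the defect density and die sizes below are illustrative assumptions, not real 12nm process data, and real fabs use more refined yield models.

```python
import math

def poisson_yield(die_area_mm2, defects_per_cm2):
    """Expected fraction of defect-free dies under the Poisson model Y = exp(-A * D)."""
    area_cm2 = die_area_mm2 / 100.0          # 1 cm^2 = 100 mm^2
    return math.exp(-area_cm2 * defects_per_cm2)

def dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Common gross-die approximation: wafer area / die area, minus dies lost at the edge."""
    radius = wafer_diameter_mm / 2.0
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return int(gross - edge_loss)

if __name__ == "__main__":
    defect_density = 0.1                     # hypothetical defects per cm^2
    for area in (400.0, 800.0):              # hypothetical die sizes in mm^2
        y = poisson_yield(area, defect_density)
        good = dies_per_wafer(area) * y
        print(f"{area:.0f} mm^2: yield {y:.0%}, about {good:.0f} good dies per 300 mm wafer")
```

With these made-up numbers, doubling the die area cuts the good dies per wafer from about 96 to about 29: fewer candidates fit on the wafer, and each one is more likely to contain a defect.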

I can see AMD maybe doubling the compute units going from the current 14nm Vega to 7nm Vega, if we even get a 7nm Vega gaming card (here's hoping). Maybe that is how AMD would get good DXR performance. I'm just waiting for the ray-traced 3DMark Time Spy benchmark to come out so we can see how our current hardware runs under a DXR load.

From Microsoft's site concerning DXR.

"The primary reason for this is that, fundamentally, DXR is a compute-like workload. It does not require complex state such as output merger blend modes or input assembler vertex layouts. A secondary reason, however, is that representing DXR as a compute-like workload is aligned to what we see as the future of graphics, namely that hardware will be increasingly general-purpose, and eventually most fixed-function units will be replaced by HLSL code. The design of the raytracing pipeline state exemplifies this shift through its name and design in the API. With DX12, the traditional approach would have been to create a new CreateRaytracingPipelineState method. Instead, we decided to go with a much more generic and flexible CreateStateObject method. It is designed to be adaptable so that in addition to Raytracing, it can eventually be used to create Graphics and Compute pipeline states, as well as any future pipeline designs."

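The design point in the quote, one generic CreateStateObject entry point driven by a list of subobjects instead of a dedicated CreateRaytracingPipelineState method, can be modelled in a few lines. This is a toy Python model under stated assumptions: the class names, subobject labels, and required-subobject table are hypothetical and are not the D3D12 API (the real call is ID3D12Device5::CreateStateObject in C++, which takes a D3D12_STATE_OBJECT_DESC).

```python
from dataclasses import dataclass, field
from enum import Enum, auto

class PipelineType(Enum):
    RAYTRACING = auto()
    COMPUTE = auto()    # hypothetical: a later pipeline type reusing the same entry point

@dataclass
class Subobject:
    kind: str           # hypothetical labels, e.g. "shader_library", "shader_config"
    settings: dict = field(default_factory=dict)

@dataclass
class StateObjectDesc:
    pipeline: PipelineType
    subobjects: list = field(default_factory=list)

# Which subobjects each pipeline type needs. Supporting a new pipeline type means
# adding a row here, not adding a new create_*_pipeline_state function.
REQUIRED_SUBOBJECTS = {
    PipelineType.RAYTRACING: {"shader_library", "shader_config", "pipeline_config"},
    PipelineType.COMPUTE: {"shader_library"},
}

def create_state_object(desc):
    """Single generic factory: the description (a list of subobjects) drives validation."""
    present = {s.kind for s in desc.subobjects}
    missing = REQUIRED_SUBOBJECTS[desc.pipeline] - present
    if missing:
        raise ValueError(f"missing subobjects: {sorted(missing)}")
    return {"pipeline": desc.pipeline, "parts": {s.kind: s.settings for s in desc.subobjects}}

if __name__ == "__main__":
    rt = create_state_object(StateObjectDesc(PipelineType.RAYTRACING, [
        Subobject("shader_library"),
        Subobject("shader_config", {"max_payload_bytes": 16}),
        Subobject("pipeline_config", {"max_recursion_depth": 1}),
    ]))
    print(rt["pipeline"], sorted(rt["parts"]))
```

The trade-off is the one the quote describes: a data-driven description is less self-documenting than a purpose-built method, but new pipeline designs fit without growing the API surface.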
It's interesting how Microsoft's thinking behind the DX12 API closely mirrors AMD's approach with Vega: one piece of hardware for all applications. Since Vega is already much stronger in compute than NVidia's gaming cards, I don't think ray tracing will slow it down much. If anything, Vega would be able to run a higher number of samples per pixel than NVidia's cards.
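Why more samples matter: a ray-traced effect such as a soft shadow is a Monte Carlo estimate, and its error falls roughly as 1/sqrt(N) in the number of rays N. A minimal sketch, assuming a hypothetical 1D area light that is 30% occluded, so the exact visible fraction is 0.7; each random point on the light stands in for one shadow ray.

```python
import math
import random

def visibility_estimate(n_rays, rng, occluded=0.3):
    """Estimate the visible fraction of a 1D area light [0, 1) with n_rays shadow rays.

    Hypothetical scene: an occluder hides [0, occluded), so the exact answer is
    1 - occluded.
    """
    hits = sum(1 for _ in range(n_rays) if rng.random() >= occluded)
    return hits / n_rays

def rms_error(n_rays, trials=2000, seed=1, occluded=0.3):
    """Root-mean-square error of the estimate over many independent trials."""
    rng = random.Random(seed)
    truth = 1.0 - occluded
    total = sum((visibility_estimate(n_rays, rng, occluded) - truth) ** 2 for _ in range(trials))
    return math.sqrt(total / trials)

if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        print(f"{n:3d} rays/pixel: RMS error {rms_error(n):.3f}")
```

Each 4x increase in rays should roughly halve the error, which is why raw compute headroom translates directly into a cleaner image.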

And the RTX core essentially uses machine learning via the tensor cores found in Volta. Even NVidia's 2000 series doesn't have anywhere near the hardware to fully eliminate rasterisation and ray-trace an entire scene. And really, that isn't necessary: rasterisation has become quite acceptable for many applications. Where it falls short is with things like shadow edges, reflections, and distance to a light source. Even for just those effects, ray tracing is a pretty heavy process, and consumer GPUs can only cast enough rays to produce a pretty low-resolution, noisy image. That is where the tensor cores come in. They are capable of mixed-precision execution (two FP16 matrices can be multiplied and the result accumulated into an FP32 matrix), meaning a single core works with both FP16 and FP32 values. FP16 is what is typically used in machine learning, and NVidia leverages that, along with a proprietary algorithm, to "denoise" the ray-traced image and overlay it on the rasterized image in real time. This gives the appearance of an image that was sampled with more rays than were actually cast.
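Volta's Tensor cores compute D = A×B + C on small matrices, with FP16 inputs A and B and either FP16 or FP32 accumulators. Every element of a matrix product is a dot product, so one dot product is enough to show why the FP32 accumulator matters. This is a minimal Python emulation that uses the standard `struct` module's `'e'` (half) and `'f'` (single) formats to round values; the function names are hypothetical, and it models only the arithmetic, not Tensor core throughput.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE-754 half-precision value (struct 'e' format)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x):
    """Round a Python float to the nearest IEEE-754 single-precision value (struct 'f' format)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def dot_fp16(a, b, accumulate_in_fp32=True):
    """Emulated dot product of FP16 inputs, accumulated in FP32 (mixed precision) or in FP16.

    A product of two FP16 values is exact in FP32 (11-bit significands multiply
    to at most 22 bits), so the difference is where the running sum is rounded.
    """
    round_acc = to_fp32 if accumulate_in_fp32 else to_fp16
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(x) * to_fp16(y)   # exact in Python's double
        acc = round_acc(acc + round_acc(product))
    return acc

if __name__ == "__main__":
    ones = [1.0] * 4096
    print(dot_fp16(ones, ones))                            # 4096.0
    print(dot_fp16(ones, ones, accumulate_in_fp32=False))  # 2048.0
```

Summing 4096 products of 1.0 shows the effect: the FP32 accumulator reaches 4096, while the FP16 accumulator stalls at 2048, because above 2048 half precision can only represent even integers and 2048 + 1 rounds back to 2048 (ties go to even).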

Vega already has more compute capability than either Pascal or Turing, so it could likely produce a better image simply by running more ray-traced samples. On top of that, since Vega is also used in the Instinct line, it comes with "Rapid Packed Math", AMD's term for packed FP16 execution (two FP16 operations per 32-bit lane), the same kind of reduced-precision throughput the Tensor cores rely on. So there is no reason AMD couldn't use the same denoising approach as NVidia; the hardware is already there.
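Rapid Packed Math works by holding two FP16 values in one 32-bit register lane and operating on both halves with a single instruction, which is where its doubled FP16 rate comes from (a Tensor core, by contrast, performs a small matrix multiply-accumulate). Below is a minimal Python sketch of the packing idea, emulating one packed add with the `struct` module. The helper names are hypothetical, and the sketch says nothing about real hardware throughput.

```python
import struct

def pack_half2(lo, hi):
    """Pack two floats, rounded to IEEE-754 half precision, into one 32-bit word (lo in the low 16 bits)."""
    return struct.unpack("<I", struct.pack("<ee", lo, hi))[0]

def unpack_half2(word):
    """Split a 32-bit word back into its two half-precision lanes (low lane first)."""
    return struct.unpack("<ee", struct.pack("<I", word))

def packed_add(a_word, b_word):
    """Emulate one packed-FP16 add: both 16-bit lanes are added independently.

    Hardware does this in a single instruction; here each lane is summed in
    Python and rounded back to FP16 by struct's 'e' format.
    """
    a_lo, a_hi = unpack_half2(a_word)
    b_lo, b_hi = unpack_half2(b_word)
    return pack_half2(a_lo + b_lo, a_hi + b_hi)

if __name__ == "__main__":
    a = pack_half2(1.5, -2.0)
    b = pack_half2(0.25, 8.0)
    print(unpack_half2(packed_add(a, b)))  # (1.75, 6.0)
```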

I don't think this will slow Vega down much either. I always thought AMD took the more conventional and open approach to tackling problems like this. Vega is a compute beast, and the more computational power it has, the better it will be at tackling things like this, just by improving on its strengths. I think AMD will do just fine with this DXR load if they keep improving Vega the way they are improving Ryzen on the CPU side.

Nvidia, on the other hand, doesn't have as much raw compute power in its GPUs as Vega has. So Nvidia has its crazy engineers finding ways to get these results using optimization techniques and proprietary hardware to accelerate these processes.

I'm interested to see where Intel is going to land in this dGPU landscape and how they handle ray tracing, because it's inevitably going to be a THING in games, sooner rather than later I think.

"Nvidia on the other hand doesn't have as much raw compute power in their GPUs than Vega has. So Nvidia has their crazy engineers finding ways to get to these results using optimization techniques and proprietary hardware to accelerate these processes."

The thing is, they did have the compute power on their consumer GPUs back in the Kepler days. The slide I posted above was from NVidia's presentation on ray tracing on Kepler at SIGGRAPH 2012. I think what pokester was referring to is that after the launch of the Titan Black, NVidia saw a lot of professional users buy those cards for compute workloads. The Quadro cards usually sell at much higher margins, and NVidia effectively cannibalized a much higher-margin segment with a cheaper "gaming" card. Since then, NVidia has done everything it can to avoid blurring the lines between its product stacks, whether through "Gameworks" or Turing. They keep trying to find ways to achieve compute-based effects without adding more compute units. Whether their motivation for maintaining clearly defined product stacks is to protect their profits or the more altruistic goal of keeping gaming hardware in the hands of gamers is debatable.

With Intel entering the fray and having hired both Jim Keller and Raja Koduri, I think their implementation will closely mirror AMD's. It will be really interesting to see whether that pushes developers away from all of NVidia's end-arounds.

So, to bring things full circle: I think NVidia is accurate in saying that DXR won't work (at least not well) on their own GPUs prior to the 2000 series. Without the tensor cores to do the denoising, there simply isn't enough compute power there to produce usable ray-traced output in real time. But they are incorrect in saying it won't work on any other GPU. Vega, with much higher compute capability from the get-go as well as mixed-precision execution, will likely do fine, if not better. I am eager to see how the different GPUs stack up in Futuremark's DXR benchmark.
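To show what "denoising" buys in principle: NVidia's actual denoiser is proprietary, so this sketch swaps in the simplest possible filter, a box blur over neighbouring pixels, applied to a hypothetical soft-shadow gradient traced with only 2 rays per pixel. The scene, filter radius, and function names are illustrative assumptions; no tensor cores are needed for this version.

```python
import random

def noisy_scanline(truth, rays_per_pixel, rng):
    """Estimate each pixel's light visibility from a few random shadow rays (Bernoulli samples)."""
    return [sum(rng.random() < t for _ in range(rays_per_pixel)) / rays_per_pixel for t in truth]

def box_denoise(pixels, radius=2):
    """Replace each pixel by the mean of its neighbours within `radius` (window clamped at edges)."""
    n = len(pixels)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(pixels[lo:hi]) / (hi - lo))
    return out

def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

if __name__ == "__main__":
    width = 64
    truth = [i / (width - 1) for i in range(width)]   # hypothetical soft-shadow gradient
    noisy = noisy_scanline(truth, rays_per_pixel=2, rng=random.Random(42))
    print(f"MSE with 2 rays/pixel: {mse(noisy, truth):.4f}")
    print(f"MSE after box filter:  {mse(box_denoise(noisy), truth):.4f}")
```

Averaging five neighbours cuts the noise variance by roughly a factor of five in smooth regions, at the price of blurring sharp edges. Real-time denoisers typically use edge-aware filters to avoid that.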

Yeah, I guess we'll have to wait until we have something to benchmark the cards against each other with. I'd like to see how my Vega 56 handles real-time ray tracing, because, like you said, Vega has all the compute tricks that Nvidia is trying to implement by different means. Vega might have everything it needs to take on a DXR load with great speed, unless Microsoft, working with Nvidia, gimped DXR somehow just so no one would have the upper hand. I believe that's what happened with DX10 back then, lol. DX10 should have been what DX11 is, but Nvidia's chips definitely weren't as prepared for that as the AMD cards were. But that's something that can't be confirmed, lol.

https://www.hardcoregames.biz/dirextx-ray-tracing/

You need a rather substantial box to do DXR development. I have been using it for a while, since back when it was still being developed.

I have been using DX12 tools for some time now.