Speed Up DeepSeek R1 Distill 4-bit Performance and Inference Capability with NexaQuant on AMD Client


Nexa AI today announced NexaQuants of two DeepSeek R1 Distills: DeepSeek R1 Distill Qwen 1.5B and DeepSeek R1 Distill Llama 8B.

Popular quantization methods like llama.cpp's Q4_K_M let large language models significantly reduce their memory footprint, and for dense models the tradeoff is typically only a small perplexity loss. However, even a small perplexity loss can cause a reasoning-capability hit for models (dense or MoE) that rely on Chain of Thought traces. Nexa AI has stated that NexaQuants recover this reasoning-capability loss (relative to full 16-bit precision) while keeping 4-bit quantization and retaining its performance advantage.¹
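To make the idea of 4-bit quantization concrete, here is a simplified sketch of block-wise quantization: groups of weights share one scale, and each weight is stored as a signed 4-bit code. This is an illustrative toy under stated assumptions. It uses a symmetric scheme with a 32-weight block and a 16-bit scale, both chosen for illustration. It is not Q4_K_M, which uses a more elaborate super-block layout, and it is not Nexa AI's proprietary method. The weights are synthetic random values, not taken from any real model.

```python
import random

BLOCK_SIZE = 32  # illustrative choice: number of weights that share one scale factor

def quantize_block(block):
    """Map a block of floats to signed 4-bit codes in [-8, 7] plus one float scale."""
    max_abs = max(abs(w) for w in block)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # largest magnitude maps to code +/-7
    codes = [max(-8, min(7, round(w / scale))) for w in block]
    return codes, scale

def dequantize_block(codes, scale):
    """Reconstruct approximate floats from the 4-bit codes."""
    return [c * scale for c in codes]

def round_trip(weights, block_size=BLOCK_SIZE):
    """Quantize, then dequantize, a flat list of weights one block at a time."""
    restored = []
    for start in range(0, len(weights), block_size):
        codes, scale = quantize_block(weights[start:start + block_size])
        restored.extend(dequantize_block(codes, scale))
    return restored

# Storage cost: 4 bits per code plus one 16-bit scale (assumed) amortized over each block.
bits_per_weight = 4 + 16 / BLOCK_SIZE  # 4.5 for 32-weight blocks

random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(4096)]  # synthetic stand-in for a weight tensor
restored = round_trip(weights)
mse = sum((w - r) ** 2 for w, r in zip(weights, restored)) / len(weights)
print(f"{bits_per_weight} bits/weight, mean squared error {mse:.2e}")
```

Each reconstructed weight lands within half a quantization step of its original value. That is why aggregate metrics such as perplexity move only slightly. As the paragraph above notes, a loss this small can still show up as a reasoning-capability hit.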

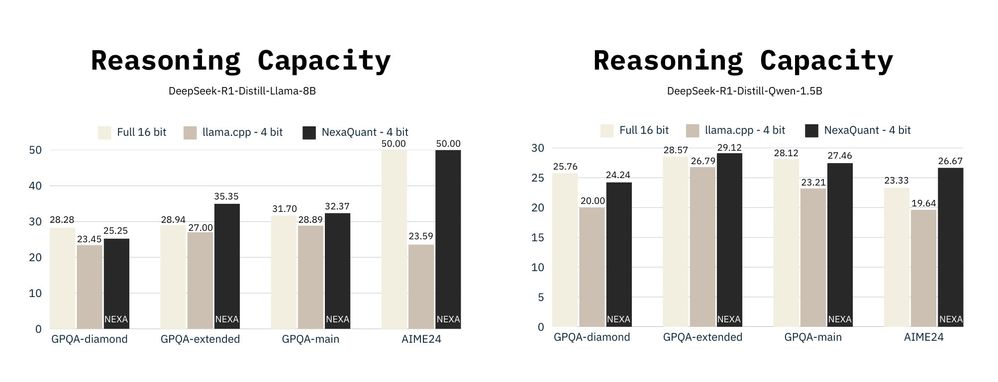

Benchmarks provided by Nexa AI can be seen below:

Benchmark scores provided by Nexa AI.

The Q4_K_M-quantized DeepSeek R1 Distills score slightly lower than their full 16-bit counterparts on LLM benchmarks such as GPQA and AIME24. The exception is AIME24 on the Llama 8B distill, where the score is significantly lower. Moving to Q6 or Q8 quantization would be one way to fix this problem, but the model would then run slightly slower and require more memory.
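The memory side of this tradeoff can be estimated with simple arithmetic: weight storage grows linearly with bits per weight. The sketch below makes two simplifying assumptions. It uses nominal parameter counts taken from the model names (real checkpoints differ slightly), and it uses nominal bit widths. Real GGUF formats also store per-block scales, so actual files are somewhat larger. The estimate also ignores the KV cache and runtime buffers.

```python
# Nominal parameter counts from the model names (assumption; real checkpoints differ slightly).
MODELS = {
    "DeepSeek R1 Distill Qwen 1.5B": 1.5e9,
    "DeepSeek R1 Distill Llama 8B": 8e9,
}
# Nominal bits per weight; real quantized formats add per-block scale overhead on top.
BITS = {"4-bit": 4, "6-bit": 6, "8-bit": 8, "16-bit": 16}

def weight_gb(params, bits):
    """Approximate weight storage in GB (10**9 bytes), excluding KV cache and buffers."""
    return params * bits / 8 / 1e9

for name, params in MODELS.items():
    sizes = ", ".join(f"{label}: {weight_gb(params, b):.2f} GB" for label, b in BITS.items())
    print(f"{name} -> {sizes}")
```

For the 8B distill, going from 4-bit to 8-bit roughly doubles the weight memory, and 16-bit roughly quadruples it. That is the cost the Q6/Q8 route pays for its accuracy.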

Nexa AI has stated that NexaQuants use a proprietary quantization method to recover this loss while keeping the quantization at 4 bits.¹ If that holds, users can get the best of both worlds: accuracy and speed.

You can read more about the NexaQuant DeepSeek R1 Distills over here.

The following NexaQuant DeepSeek R1 Distills are available for download:

- NexaQuant DeepSeek R1 Distill Qwen 1.5B 4-bit

- NexaQuant DeepSeek R1 Distill Llama 8B 4-bit

How to run NexaQuants on your AMD Ryzen processors or Radeon graphics card

We recommend using LM Studio for all your LLM needs.

- Download and install LM Studio from lmstudio.ai/ryzenai

- Go to the Discover tab and paste the Hugging Face link for one of the NexaQuants above.

- Wait for the model to finish downloading.

- Go back to the Chat tab and select the model from the drop-down menu. Make sure “manually choose parameters” is selected.

- Set GPU offload layers to MAX.

- Load the model and chat away!

According to the data provided by Nexa AI, developers can also use the NexaQuant versions of the DeepSeek R1 Distills above to get generally improved performance in llama.cpp or other GGUF-based applications.
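For developers taking the llama.cpp route, a minimal sketch using the llama-cpp-python bindings might look like the following. It rests on several assumptions. First, llama-cpp-python must be installed and built with a GPU backend your Radeon hardware supports; llama.cpp offers Vulkan and ROCm/HIP backends, among others. Second, `MODEL_PATH` is a hypothetical local path to one of the NexaQuant GGUF files listed above. Third, the context and token limits are illustrative values. Setting `n_gpu_layers=-1` offloads every layer, which is the counterpart of setting GPU offload layers to MAX in LM Studio.

```python
import os

# Hypothetical path: point this at whichever NexaQuant GGUF file you downloaded.
MODEL_PATH = "models/nexaquant-deepseek-r1-distill-llama-8b-4bit.gguf"

def load_kwargs(model_path: str) -> dict:
    """Constructor arguments for llama_cpp.Llama (values are illustrative)."""
    return {
        "model_path": model_path,
        "n_gpu_layers": -1,  # -1 offloads every layer to the GPU, like "MAX" in LM Studio
        "n_ctx": 8192,       # reasoning traces can be long, so leave room in the context
        "verbose": False,
    }

def chat(prompt: str, model_path: str = MODEL_PATH) -> str:
    """Load the model and return the assistant's reply to a single user prompt."""
    # Imported lazily so the rest of this sketch runs without llama-cpp-python installed.
    from llama_cpp import Llama

    llm = Llama(**load_kwargs(model_path))
    result = llm.create_chat_completion(
        messages=[{"role": "user", "content": prompt}],
        max_tokens=2048,  # illustrative cap; R1-style models emit their reasoning first
    )
    return result["choices"][0]["message"]["content"]

if __name__ == "__main__" and os.path.exists(MODEL_PATH):
    print(chat("How many prime numbers are there below 20?"))
```

Only the file path needs to change to switch between the Qwen 1.5B and Llama 8B NexaQuants. The smaller model leaves more memory headroom on systems with limited VRAM or shared memory.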

Endnotes:

¹ All performance and/or cost savings claims are provided by Nexa AI and have not been independently verified by AMD. Performance and cost benefits are impacted by a variety of variables. Results herein are specific to Nexa AI and may not be typical. GD-181.

GD-97 - Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied.

GD-220e - Ryzen™ AI is defined as the combination of a dedicated AI engine, AMD Radeon™ graphics engine, and Ryzen processor cores that enable AI capabilities. OEM and ISV enablement is required, and certain AI features may not yet be optimized for Ryzen AI processors. Ryzen AI is compatible with: (a) AMD Ryzen 7040 and 8040 Series processors and Ryzen PRO 7040/8040 Series processors except Ryzen 5 7540U, Ryzen 5 8540U, Ryzen 3 7440U, and Ryzen 3 8440U processors; (b) AMD Ryzen AI 300 Series processors and AMD Ryzen AI PRO 300 Series processors; (c) all AMD Ryzen 8000G Series desktop processors except the Ryzen 5 8500G/GE and Ryzen 3 8300G/GE; (d) AMD Ryzen 200 Series processors and Ryzen PRO 200 Series processors except Ryzen 5 220 and Ryzen 3 210; and (e) AMD Ryzen AI Max Series processors and Ryzen AI PRO Max Series processors. Please check with your system manufacturer for feature availability prior to purchase.