Editor’s Note: This content is contributed by Bingqing Guo, Sr. AI Product Marketing Manager.

Offering More AI Inference Acceleration Platform Choices for Your Applications

The AMD Vitis™ AI platform 3.0 release offers upgraded capabilities for AI inference development. Read this blog to learn about the product upgrades and benefits of adopting the Vitis AI 3.0 platform.

More Acceleration Platform Choices with AI Engine-ML

A new feature in the AMD Vitis AI platform 3.0 release is support for the AIE-ML architecture in two new AMD Versal™ ACAP-based hardware platforms, the AMD Versal AI Edge series VEK280 evaluation kit, AMD Versal AI Core series, and Versal AI Edge series, to accelerate different AI inference applications from edge to cloud and meet performance requirements ranging from lower latency to higher throughput.

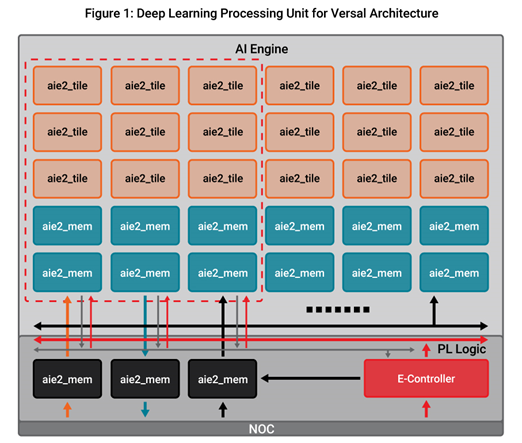

The deep-learning processor unit (DPU) core makes optimal use of the Versal ACAP's highly scalable and adaptive architecture by drawing on the rich on-chip resources of AI Engine-ML (AIE-ML), LUTs, and DSPs to achieve breakthrough AI acceleration performance for systems that require higher real-time or concurrent processing capabilities. The DPU optimized specifically for AIE-ML reduces programmable logic (PL) resource usage, particularly RAM and DSP, leaving more flexibility to allocate on-chip resources while still harnessing AI acceleration capabilities.

Figure 1: Deep-Learning Processing Unit for Versal Architecture

A large portfolio of CNN deep learning models has been verified successfully on the VEK280 evaluation kit and V70 card through customer early access. Models already supported on the Versal AI Core series VCK190 evaluation kit with the AMD Vitis AI platform 2.5 release can also run on the VEK280 evaluation kit with the Vitis AI platform 3.0 release, including ResNet50, MobileNet, the YOLO series (v4, v5, v6, X), RefineDet, and more. Users can choose the most suitable hardware platform for their target AI applications while working with the same set of models.

Contact your local sales representative for Vitis AI early access to the VEK280 evaluation kit or V70 card. In the coming general release, the DPU for AIE-ML will offer advanced optimizations for even higher performance in single-batch and multi-batch configurations. The Vitis AI platform will also expand support for non-CNN workloads, such as BERT, RNN, and models based on complex operator patterns for the DPU for AIE-ML.

Broader Selection of AI Models with ONNX Runtime Supported by the Vitis AI Platform

As with previous releases, AMD Vitis AI continues to provide native PyTorch and TensorFlow support. With the Vitis AI platform 3.0 release, we are introducing a new Vitis AI ONNX Runtime Engine (VOE) that enables users to deploy a wider variety of models. This is accomplished by exporting the model from the Vitis AI quantizer in a quantized ONNX format. With VOE, the integration of an AMD Vitis AI Execution Provider into the ONNX Runtime makes it possible to run this quantized ONNX model on the target. The Vitis AI Execution Provider supplies metadata to the ONNX Runtime detailing the operators that can be supported on the DPU target, and the ONNX Runtime partitions the graph based on this metadata. The partitions, or subgraphs, that the DPU can support are then compiled by the Vitis AI Compiler, which has been integrated into the ONNX Runtime in VOE. As a result, operators that can be accelerated by the DPU are deployed there, while the remaining operators are deployed on the CPU, all without requiring manual modifications. This feature makes it easier to deploy user-defined models on AMD platforms.
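
To make the partitioning idea concrete, here is a minimal sketch in plain Python. It is not the VOE or ONNX Runtime implementation: the supported-operator set, the `Partition` class, and the `partition_graph` function are hypothetical names introduced for illustration, and the model is simplified to a linear list of operator types rather than a full graph.

```python
from dataclasses import dataclass
from typing import List, Set

# Hypothetical stand-in for the operator metadata an execution provider reports.
# The real set of DPU-supported operators is not listed in this article.
DPU_SUPPORTED_OPS: Set[str] = {"Conv", "Relu", "MaxPool", "Add"}


@dataclass
class Partition:
    device: str       # "DPU" or "CPU"
    ops: List[str]    # operator types, in execution order


def partition_graph(ops: List[str], supported: Set[str]) -> List[Partition]:
    """Group consecutive operators by the device that can run them."""
    partitions: List[Partition] = []
    for op in ops:
        device = "DPU" if op in supported else "CPU"
        if partitions and partitions[-1].device == device:
            # Same device as the previous operator: extend the current subgraph.
            partitions[-1].ops.append(op)
        else:
            # Device changes: start a new subgraph.
            partitions.append(Partition(device, [op]))
    return partitions


# Illustrative operator sequence; not taken from a real model.
model_ops = ["Conv", "Relu", "MaxPool", "NonMaxSuppression", "Conv", "Add", "Softmax"]
for part in partition_graph(model_ops, DPU_SUPPORTED_OPS):
    print(part.device, part.ops)
```

In this simplified view, each run of supported operators becomes one subgraph that would be handed to the compiler for the accelerator, and everything else stays on the CPU, which mirrors the "no manual modifications" behavior described above.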

Future Vitis AI platform releases will build upon this capability, offering native parsing support to directly ingest ONNX format models.

Simplified WeGO Deployment Flow for Data Center Applications

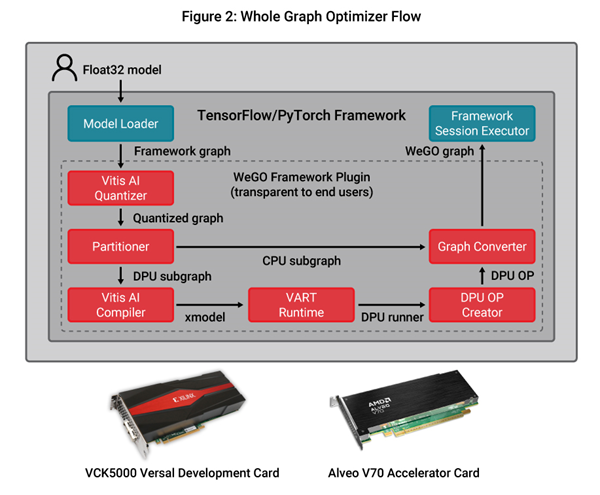

Developers targeting data center AI acceleration can take advantage of the Vitis AI Whole Graph Optimizer (WeGO) for model deployment. WeGO leverages the native PyTorch and TensorFlow frameworks to deploy operators on the host machine when those operators cannot be compiled for execution on the DPU. In Vitis AI platform 3.0, we have updated WeGO to support PyTorch 1.12 and TensorFlow 2.10, thus enabling support for many of the latest models.

WeGO ease-of-use improvements include model serialization and deserialization, which allow a graph to be compiled once and then run at any time without recompiling the model, improving deployment efficiency. WeGO can now also support on-the-fly quantization by integrating the Vitis AI quantizer within its workflow. Previously, the user first needed to quantize the model and then feed the fixed-point model into WeGO. Now, the user can pass the floating-point model directly to WeGO, call post-training quantization within the framework, and then complete the deployment flow. The entire process is simpler and easier to operate, making plug-and-play AI in the cloud possible.

Figure 2: Whole Graph Optimizer Flow
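
The compile-once, run-anytime pattern behind serialization and deserialization can be sketched generically as follows. This does not use the WeGO API: `compile_graph` and `load_or_compile` are hypothetical helpers, the "compiled artifact" is a plain dictionary, and Python's `pickle` module stands in for whatever on-disk format WeGO actually uses.

```python
import pickle
import tempfile
from pathlib import Path
from typing import Tuple


def compile_graph(model_name: str) -> dict:
    """Hypothetical stand-in for an expensive graph compilation step."""
    return {"model": model_name, "subgraphs": ["dpu_0", "cpu_0"]}


def load_or_compile(model_name: str, cache_path: Path) -> Tuple[dict, bool]:
    """Return (artifact, compiled_now); compile only if no serialized copy exists."""
    if cache_path.exists():
        # Deserialize the previously compiled artifact instead of recompiling.
        with cache_path.open("rb") as f:
            return pickle.load(f), False
    artifact = compile_graph(model_name)
    # Serialize the artifact so later runs can skip compilation.
    with cache_path.open("wb") as f:
        pickle.dump(artifact, f)
    return artifact, True


with tempfile.TemporaryDirectory() as tmp:
    cache = Path(tmp) / "resnet50.compiled"
    first, compiled_first = load_or_compile("resnet50", cache)
    second, compiled_second = load_or_compile("resnet50", cache)
    print(compiled_first, compiled_second)
```

The first call pays the compilation cost and writes the result to disk; the second call only deserializes it, which is the efficiency gain the serialization feature is aiming for.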

In addition to the enhancements mentioned above, AMD Vitis AI implements numerous updates and improvements to the existing IDE components, including the quantizer, optimizer, libraries, and DPU IP, which have been widely adopted by existing end users. In the Model Zoo, new models include Super Resolution 4X, 2D and 3D Semantic Segmentation, 3D-Unet, MaskRCNN, an updated YOLO series, and models for AMD CPUs and GPUs. The quantizers, compilers, libraries, and Vitis AI runtime were also updated to support new software functions, hardware boards, and model frameworks.

Next Steps

We bring you a fully upgraded Vitis AI GitHub.IO covering Vitis AI platform-related technical items, documentation, examples, frequently asked questions, and other resources. We invite you to join Early Access for the AIE-ML DPU on the VEK280 kit and V70 card, and welcome you to download and install the latest Vitis AI Development Platform 3.0 packages from GitHub. Please email any comments or questions to: amd_ai_mkt@amd.com.

------------------------------------

©2023, Advanced Micro Devices, Inc. All rights reserved. AMD, and the AMD Arrow logo, Alveo, Versal, Vitis, UltraScale+, Zynq and combinations thereof are trademarks of Advanced Micro Devices, Inc.