Hello Team,

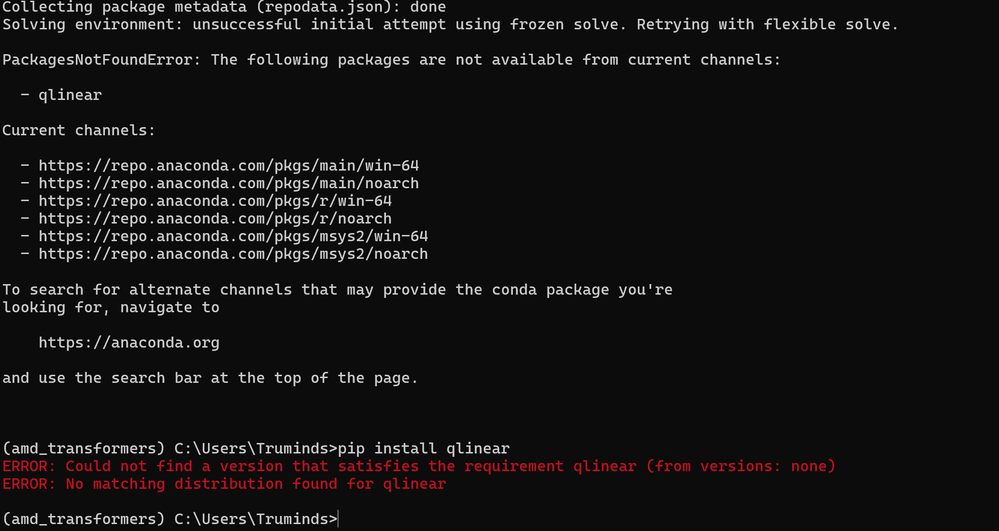

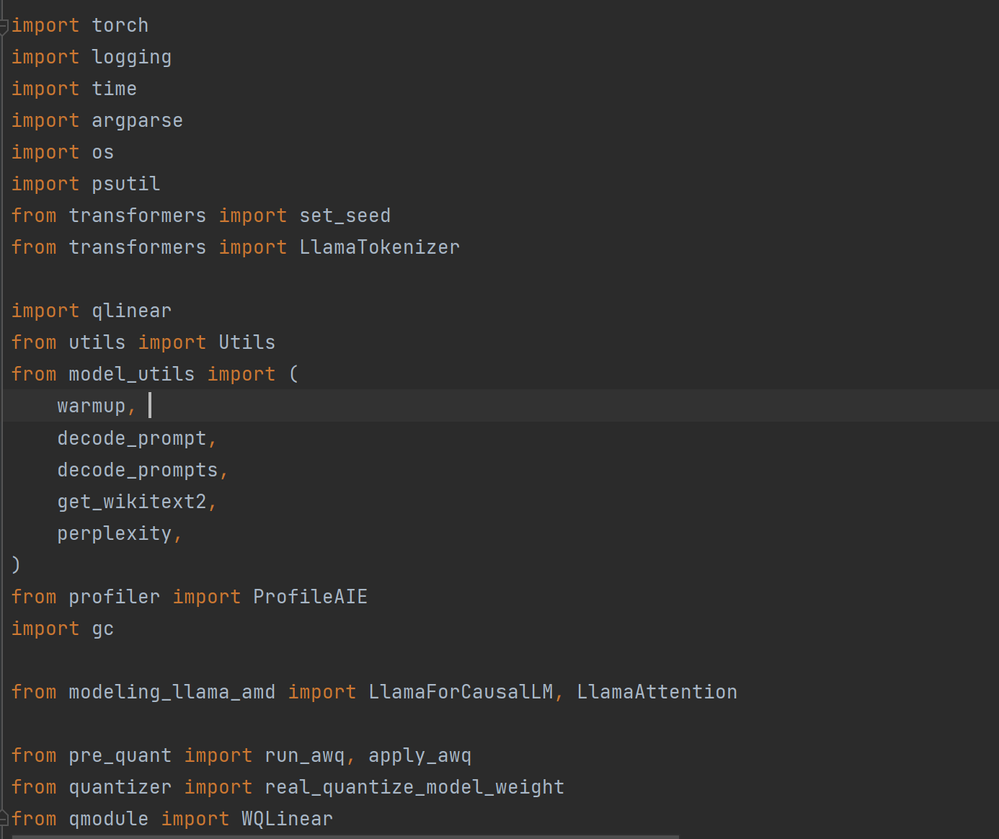

I am currently trying to run the Llama 2 7B model on my AMD Ryzen laptop. I followed the steps in the documentation provided by AMD. However, when I try to execute the script, it fails on a library called `qlinear`, which the script imports. I tried installing it with pip and conda, but both report that the package is not available. Could you please tell me how to install it, or whether there is an alternative to `qlinear`? This is the only blocker I am facing at the moment, so please advise.

GitHub link for reference: https://github.com/amd/RyzenAI-SW/tree/main/example/transformers

I have attached screenshots of the error above for your reference.

Any response would be greatly appreciated.

Thanks,

Nimesh