Exploring AMD Vega for Deep Learning

[Originally posted on 11/16/17 by Carlos E. Perez]

AMD’s newly released Vega architecture has several unique features that can be leveraged in Deep Learning training and inference workloads.

The first noteworthy feature is the ability to perform FP16 arithmetic at twice the speed of FP32, and INT8 at four times the speed of FP32. This translates to a peak performance of 24 teraflops for FP16 and 48 trillion operations per second for INT8. Deep Learning workloads are known to work well with lower-precision arithmetic. It is as if AMD’s architects were aware of this reality and designed Vega to exploit it. The second noteworthy feature of Vega is its new memory architecture, which permits addressing up to 512GB of memory. The third benefit is favorable coupling with AMD’s Threadripper and EPYC lines of microprocessors.
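As a quick check of the arithmetic above, the sketch below derives the FP32 peak implied by the quoted FP16 figure and confirms that the 2x and 4x ratios reproduce both quoted numbers. The FP32 value is not stated in this post and is derived here; the function name is purely illustrative.

```python
# Ratios quoted in the text: FP16 runs at 2x FP32, INT8 at 4x FP32.
FP16_SPEEDUP = 2
INT8_SPEEDUP = 4


def peak_rates(fp32_tflops):
    """Return (FP16 teraflops, INT8 tera-ops) implied by an FP32 peak."""
    return fp32_tflops * FP16_SPEEDUP, fp32_tflops * INT8_SPEEDUP


# Assumption: the FP32 peak is not given in the text, so it is derived
# from the quoted 24 teraflops FP16 figure.
implied_fp32 = 24 / FP16_SPEEDUP
fp16, int8 = peak_rates(implied_fp32)
print(implied_fp32, fp16, int8)
```

The two quoted figures are consistent with each other: both imply the same FP32 baseline.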

On Deep Learning

Deep learning (DL) is a technology that is as revolutionary as the Internet and mobile computing that came before it. The current revival of interest in all things “Artificial Intelligence” (AI) is driven by the spectacular results achieved with deep learning. There are other AI technologies, like expert systems, semantic knowledge bases, logic programming and Bayesian systems. Most of classical AI has not changed much, if at all, in the last five years. The recent quantum leap has been driven disproportionately by deep learning progress.

When Google embarked on converting their natural language translation software into using deep learning, they were surprised to discover major gains. This was best described in a recent article published in the New York Times, “The Great AI Awakening”:

The neural system, on the English-French language pair, showed an improvement over the old system of seven points. Hughes told Schuster’s team they hadn’t had even half as strong an improvement in their own system in the last four years. To be sure this wasn’t some fluke in the metric, they also turned to their pool of human contractors to do a side-by-side comparison. The user-perception scores, in which sample sentences were graded from zero to six, showed an average improvement of 0.4 — roughly equivalent to the aggregate gains of the old system over its entire lifetime of development. In mid-March, Hughes sent his team an email. All projects on the old system were to be suspended immediately.

Let’s pause to recognize what happened at Google. Since its inception, Google has used every type of AI or machine learning technology imaginable. In spite of this, the old translation system had not improved by even half of seven points over the previous four years. Google’s first deep learning implementation improved on it by seven points, and the 0.4 gain in user-perception scores was roughly equal to everything the old system had gained over its entire lifetime.

Google likely has the most talented AI and algorithm developers on the planet. However, several years of handcrafted development could not hold a candle to a single initial deep learning implementation.

ROCm

ROCm is software that supports High Performance Computing (HPC) workloads on AMD hardware. ROCm includes a C/C++ compiler called the Heterogeneous Compute Compiler (HCC). HCC is based on the open-source LLVM compiler infrastructure project. The HCC compiler supports direct generation of the native Radeon GPU instruction set (known as GCN ISA). Targeting native GPU instructions is crucial for maximum performance. All the libraries under ROCm support GCN ISA.

Included with the compiler is an API called HC, which provides additional control over synchronization, data movement and memory allocation. The HCC compiler is based on previous work in heterogeneous computing at the HSA Foundation. The design allows CPU and GPU code to be written in the same source file and supports capabilities such as a unified CPU-GPU memory space.

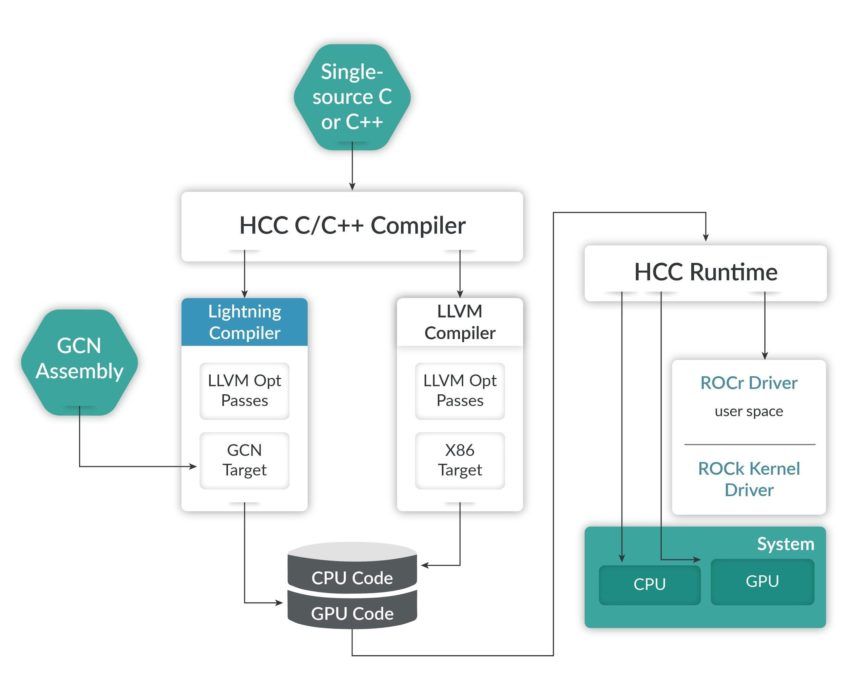

The diagram above depicts the relationships between the ROCm components. The HCC compiler generates both the CPU and GPU code. It uses different LLVM back ends to generate x86 and GCN ISA code from a single C/C++ source. GCN ISA assembly can also be used as a source for the GCN target [1].

The CPU and GPU code are linked with the HCC runtime to form the application (compare this with the HSA diagram). The application communicates with the ROCr driver, which resides in user space in Linux. The ROCr driver uses a low-latency, packet-based mechanism (AQL) to coordinate with the ROCk kernel driver.

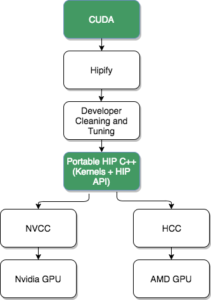

To further narrow the capability gap, the ROCm initiative created a CUDA porting tool called HIP (let’s ignore what it stands for). HIP provides tooling that scans CUDA source code and converts it into corresponding HIP source code. HIP source code looks similar to CUDA code, but the same HIP code can be compiled for both CUDA and AMD-based GPU devices.
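To make the source-to-source idea concrete, here is a toy sketch of the kind of renaming such a conversion performs. It is not the actual HIP tooling: `toy_hipify` and its small lookup table are hypothetical, and only a handful of runtime names are covered. The HIP names in the table are real counterparts of the CUDA ones.

```python
import re

# A small, illustrative subset of CUDA runtime names and their HIP
# counterparts. The real porting tool handles far more than this table.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Match only whole identifiers, trying longer names first so that
# cudaMemcpyHostToDevice is not cut short at cudaMemcpy.
_PATTERN = re.compile(
    r"(?<![A-Za-z0-9_])("
    + "|".join(re.escape(n) for n in sorted(CUDA_TO_HIP, key=len, reverse=True))
    + r")(?![A-Za-z0-9_])"
)


def toy_hipify(cuda_source):
    """Rename the known CUDA identifiers in a source string (hypothetical helper)."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(1)], cuda_source)


cuda_snippet = """#include <cuda_runtime.h>
cudaMalloc(&d_x, n * sizeof(float));
cudaMemcpy(d_x, h_x, n * sizeof(float), cudaMemcpyHostToDevice);
cudaFree(d_x);
"""
print(toy_hipify(cuda_snippet))
```

Because the two APIs mirror each other so closely, much of a port reduces to this kind of mechanical renaming, which is why converted HIP code still reads like CUDA.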

The ROCm initiative provides the handcrafted libraries and assembly language tooling that allow developers to extract every ounce of performance from AMD hardware. This includes rocBLAS, a BLAS library implemented from scratch with a HIP interface. AMD also provides an FFT library called rocFFT, which is likewise written with HIP interfaces. MIOpen is a native library tuned for Deep Learning workloads; it is AMD’s alternative to Nvidia’s cuDNN library and includes Radeon GPU-specific optimizations.

hipCaffe

AMD has ported Caffe to run on the ROCm stack. You can try examples here. I ran some benchmarks found here and here is a chart of the results:

Caffe is run on unspecified GPU hardware.

I don’t know the specific hardware that was used in these benchmarks. However, the comparison does show that the performance improvement over the alternatives is quite significant. One thing to observe is that the speedup is most impressive with a complex network like GoogLeNet, as compared to a simpler one like VGG. This is a reflection of the amount of hand-tuning that AMD has done on the MIOpen library.

Deep Learning Standard Virtual Machines

Deep learning frameworks like Caffe have internal computational graphs. These graphs specify the execution order of mathematical operations, similar to a dataflow. The frameworks use the graph to orchestrate execution across groups of CPUs and GPUs. The execution is parallel, and this is one reason why GPUs are ideal for this kind of computation. There are, however, plenty of untapped opportunities to improve the orchestration between the CPU and GPU.

The current state of Deep Learning frameworks is similar to the fragmented state of compilers before the creation of common code generation backends like LLVM. In the chaotic good old days, every programming language had to re-invent its own way of generating machine code. With the development of LLVM, many languages now share the same backend code. Well-known examples include Ada, C#, Common Lisp, Delphi, Fortran, Haskell, Java bytecode, Julia, Lua, Objective-C, Python, R, Ruby, Rust, and Swift. The frontend code only needs to parse and translate source code to an intermediate representation (IR).

Deep Learning frameworks will eventually need their own “IR”. The IR for Deep Learning is, of course, the computational graph. Deep learning frameworks like Caffe and TensorFlow have their own internal computational graphs, and the frameworks are all merely convenient front ends to that internal graph. These graphs specify the execution order of mathematical operations, analogous to what a dataflow graph does. The graph also specifies how the work is orchestrated across collections of CPUs and GPUs. This execution is highly parallel, and that parallelism is one reason why GPUs are ideal for this kind of computation. There are, however, plenty of untapped opportunities to improve the orchestration between the CPU and GPU.
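As a minimal sketch of what “the graph specifies the execution order” means, the example below stores a tiny graph as a dictionary and evaluates it in topological order. The node names and the `run_graph` helper are hypothetical, scalars stand in for tensors, and no real framework stores its graph this way. It needs Python 3.9 or later for `graphlib`.

```python
from graphlib import TopologicalSorter
import operator

# Each node maps to (operation, list of input node names).
# Leaf nodes have no operation and take their value from `feeds`.
graph = {
    "x": (None, []),
    "w": (None, []),
    "b": (None, []),
    "xw": (operator.mul, ["x", "w"]),   # xw = x * w
    "y": (operator.add, ["xw", "b"]),   # y = xw + b
}


def run_graph(graph, feeds):
    """Evaluate every node after its inputs and return all node values."""
    # TopologicalSorter takes {node: predecessors}; static_order() yields
    # each node only after all of its inputs have been yielded.
    deps = {name: inputs for name, (_, inputs) in graph.items()}
    values = dict(feeds)
    for name in TopologicalSorter(deps).static_order():
        op, inputs = graph[name]
        if op is not None:
            values[name] = op(*(values[i] for i in inputs))
    return values


result = run_graph(graph, {"x": 3.0, "w": 2.0, "b": 1.0})
print(result["y"])
```

Nodes with no dependency on each other (here `x`, `w` and `b`) can be scheduled in any order or in parallel, which is the freedom a graph-level optimizer exploits.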

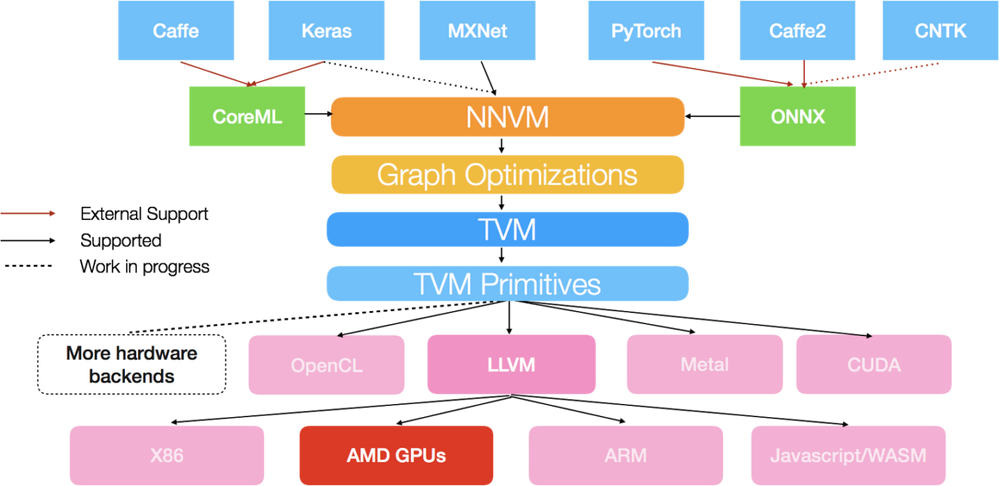

New research is exploring ways to optimize the computational graph that go beyond single-device optimization and towards more global, multi-device optimization. NNVM is one such framework: it performs computational graph optimization using an intermediate representation. The goal is for NNVM optimizers to reduce memory use and device allocation while preserving the original computational semantics.

A more recent development is the port of NNVM to support AMD GPUs. The NNVM compiler can compile to the TVM stack. The TVM stack is an end-to-end compilation stack that supports multiple backends. TVM compiles a high-level computation description written in the TVM frontend down to optimized native GPU code. It leverages an LLVM-based code generator in TVM and LLVM’s ROCm capabilities. This new project can be found at: https://github.com/ROCmSoftwarePlatform/nnvm-rocm.

The NNVM and TVM stacks perform optimizations in a global manner across either the computational graph or an alternative declarative specification. Conventional DL frameworks, however, have code generation and execution intertwined with their code base, which makes optimization solutions less portable. Ideally, one would like to see a common standard, a DL virtual machine instruction set, to which the community can collectively contribute optimization routines. Open Neural Network eXchange (ONNX) is one such standard. ONNX is a project supported by Facebook and Microsoft. They are building support for Caffe2, PyTorch and Cognitive Toolkit. The recent TVM port reveals the potential of AMD support for a wider range of DL frameworks.

TVM transforms the computational graph by minimizing memory, optimizing data layout and fusing computational kernels. It is a reusable framework that is designed to support multiple hardware back ends. NNVM provides a high-level intermediate representation that represents task scheduling and memory management. TVM is a low-level IR for optimizing computation. A proof of concept showed that the approach of optimizing low-level operations led to around a 35% improvement over hand-engineered kernels. This end-to-end optimization, combined with AMD’s open-sourced computational libraries like MIOpen, is a very promising development.
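Kernel fusion is the easiest of these transformations to illustrate. The sketch below is a plain Python analogy, not TVM code: three element-wise “kernels” are first run one after another, each producing an intermediate list, and then as a single fused pass. All function names are hypothetical.

```python
def scale(xs, a):
    return [a * x for x in xs]


def add_bias(xs, b):
    return [x + b for x in xs]


def relu(xs):
    return [max(x, 0.0) for x in xs]


def unfused(xs, a, b):
    # Three passes over the data and two intermediate lists.
    return relu(add_bias(scale(xs, a), b))


def fused(xs, a, b):
    # One pass and no intermediates: the three element-wise steps are
    # combined into a single expression per element.
    return [max(a * x + b, 0.0) for x in xs]


data = [-2.0, -0.5, 0.0, 1.5]
assert unfused(data, 2.0, 1.0) == fused(data, 2.0, 1.0)
print(fused(data, 2.0, 1.0))
```

On a GPU the intermediate lists correspond to buffers written to and read back from device memory, so fusing the steps saves both memory and bandwidth while computing the same result.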

Conclusion

There are many Deep Learning frameworks in existence today, each with its own strengths and weaknesses. The field is making good progress towards standardization that allows these frameworks to interoperate through a common Deep Learning virtual machine. ONNX is one of the more recent of these standards.

In addition to standardization, global optimization of the computational graph found in Deep Learning frameworks is a means towards higher performance. The TVM framework and its integration with AMD’s LLVM-based backend open up the opportunity for end-to-end optimization, not only for AMD GPUs but also for the combination of CPUs and GPUs.
