Most machine learning (ML) engineers use the single-precision (FP32) datatype when developing ML models. TensorFloat32 (TF32) has recently become popular as a drop-in replacement for FP32 in these models. However, there is a pressing need to deliver additional performance gains for these models by using faster datatypes, such as BFloat16 (BF16), without requiring additional code changes.

At AMD, we have developed an approach in which existing TF32 applications use BF16 matrix operations: the weights and activations of the model are automatically cast to BF16, and the output is accumulated in FP32. With this approach, an application that uses the existing TF32 infrastructure gains acceleration without additional code changes. As an initial drop, we have focused on Large Language Models (LLMs) by accelerating PyTorch Linear layers; subsequent drops will include additional primitives such as convolutions.
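The numerics of "BF16 inputs, FP32 accumulation" can be illustrated with a small plain-Python emulation. This is a minimal sketch under our own assumptions, not the ROCm kernel: FP32 operands are rounded to BF16 with round-to-nearest-even, multiplied, and summed in an accumulator that is rounded to FP32 after every addition. The helper names (to_fp32, to_bf16, matmul_bf16_fp32_acc) are hypothetical, and NaN/infinity handling is omitted.

```python
import struct


def to_fp32(x: float) -> float:
    """Round a Python float (FP64) to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_bf16(x: float) -> float:
    """Round to FP32, then keep the upper 16 bits with round-to-nearest-even.

    Assumes a finite input within FP32 range; NaN handling is omitted.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    lsb = (bits >> 16) & 1  # lowest bit BF16 keeps; used to break ties to even
    bits = (bits + 0x7FFF + lsb) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def matmul_bf16_fp32_acc(a, b):
    """Emulate C = A @ B with BF16 inputs and an FP32 accumulator (hypothetical helper)."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Cast activations (a) and weights (b) to BF16 once, up front.
    a16 = [[to_bf16(v) for v in row] for row in a]
    b16 = [[to_bf16(v) for v in row] for row in b]
    c = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            acc = 0.0
            for p in range(k):
                # A BF16 x BF16 product has at most 16 significant bits, so it is
                # exact in FP32 (barring overflow/underflow); only the running
                # sum is rounded, to FP32.
                acc = to_fp32(acc + a16[i][p] * b16[p][j])
            c[i][j] = acc
    return c


if __name__ == "__main__":
    # 1 + 2**-9 is exact in FP32 but not in BF16 (7 stored mantissa bits).
    print(matmul_bf16_fp32_acc([[1 + 2**-9]], [[1.0]]))  # [[1.0]]
```

In this sketch the low-order bit of 1 + 2**-9 is lost when the input is cast to BF16, while the FP32 accumulator keeps the running sum at FP32 precision rather than BF16 precision.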

Implementation Details

PyTorch supports three precision levels for FP32 models: Highest (the model uses FP32 GEMMs), High (the model uses native TF32 GEMMs, if available), and Medium (the model uses BF16 GEMMs; this level is not yet implemented in upstream PyTorch). We build on the infrastructure for Medium precision to implement our approach, which we refer to as TF32-Emulation. In the current implementation, PyTorch Linear layers use TF32-Emulation; support for additional operators is under consideration at AMD.
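For reference, the three levels differ in how many mantissa bits the GEMM inputs keep. With round-to-nearest and t explicitly stored mantissa bits, the unit roundoff (the worst-case relative error from rounding one input) is u = 2^{-(t+1)}. These are standard properties of the formats, not measurements from this work. All three formats use FP32's 8-bit exponent, so they share its dynamic range; Medium gives up precision, not range.

```latex
u = 2^{-(t+1)}, \qquad t = \text{number of explicitly stored mantissa bits}

\begin{array}{lcll}
\text{Highest} & \text{FP32} & t = 23 & u = 2^{-24} \approx 5.96 \times 10^{-8} \\
\text{High}    & \text{TF32} & t = 10 & u = 2^{-11} \approx 4.88 \times 10^{-4} \\
\text{Medium}  & \text{BF16} & t = 7  & u = 2^{-8}  \approx 3.91 \times 10^{-3}
\end{array}
```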

Our code is available on GitHub in the release/1.13_tf32_medium branch of ROCmSoftwarePlatform/pytorch. To use this feature, build PyTorch from that branch and add “torch.backends.cudnn.allow_tf32 = True” at the beginning of your main file, right after the import statements.
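A minimal sketch of where the flag goes, assuming PyTorch has been built from the release/1.13_tf32_medium branch. The model and tensor sizes are arbitrary placeholders. On an upstream build or on CPU the same script simply runs in FP32; only a ROCm GPU build of that branch would route the Linear layers through TF32-Emulation. ROCm builds of PyTorch expose AMD GPUs through the "cuda" device type.

```python
import torch

# Set immediately after the imports, before any model is built or run.
torch.backends.cudnn.allow_tf32 = True

# ROCm builds of PyTorch expose AMD GPUs through the "cuda" device type.
device = "cuda" if torch.cuda.is_available() else "cpu"

# Placeholder FP32 model: Linear layers are the ops covered by TF32-Emulation.
model = torch.nn.Sequential(
    torch.nn.Linear(1024, 4096),
    torch.nn.GELU(),
    torch.nn.Linear(4096, 1024),
).to(device)

x = torch.randn(8, 1024, device=device)  # FP32 input, no manual casting
with torch.no_grad():
    y = model(x)

# Parameters and outputs remain FP32 at the API level; with TF32-Emulation,
# the BF16 casting is internal to the GEMM.
print(y.dtype, tuple(y.shape))
```

No model code changes are needed beyond the single flag, which is the point of the approach: the application keeps its FP32 tensors and its existing TF32 switch.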

Performance Comparisons

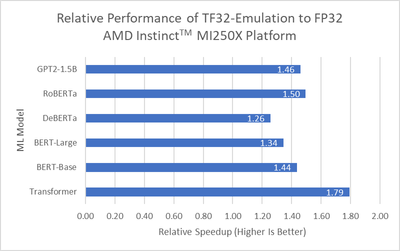

We compare the performance of the TF32-Emulation approach with the default FP32 implementation on a set of ML models widely used in the community. As shown in Figure 1, we observe a speedup of up to 1.79x over the default implementation on a Transformer model derived from the MLPerf implementation. The relative speedup varies with the fraction of time spent in GEMM operations relative to elementwise, reduction, and normalization operations. For brevity, we have not included convergence results, although we observed the expected convergence for TF32-Emulation relative to the FP32 implementations of these models.
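The dependence on the GEMM share of runtime follows Amdahl's law. As an illustrative sketch (the fractions and the 2x GEMM speedup below are hypothetical values, not measurements from Figure 1): if a fraction f of the FP32 step time is spent in GEMMs and TF32-Emulation speeds those GEMMs up by a factor s, the end-to-end speedup is 1 / ((1 - f) + f / s). The function name amdahl_speedup is our own.

```python
def amdahl_speedup(gemm_fraction: float, gemm_speedup: float) -> float:
    """End-to-end speedup when only the GEMM share of runtime is accelerated.

    gemm_fraction: fraction f of FP32 step time spent in GEMMs (0 <= f <= 1).
    gemm_speedup: factor s by which the GEMMs themselves get faster (s > 0).
    Assumes the non-GEMM time (elementwise/reduction/norm ops) is unchanged.
    """
    if not 0.0 <= gemm_fraction <= 1.0:
        raise ValueError("gemm_fraction must be in [0, 1]")
    if gemm_speedup <= 0.0:
        raise ValueError("gemm_speedup must be positive")
    return 1.0 / ((1.0 - gemm_fraction) + gemm_fraction / gemm_speedup)


if __name__ == "__main__":
    # Hypothetical less and more GEMM-bound models, same 2x GEMM speedup.
    for f in (0.5, 0.8):
        print(f"f={f:.1f}, s=2.0 -> {amdahl_speedup(f, 2.0):.3f}x")
```

Even an infinitely fast GEMM caps the end-to-end gain at 1 / (1 - f), which is why the more GEMM-bound models benefit most.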

Figure 1: Relative Speedup of TF32-Emulation over FP32 on the AMD Instinct™ MI250X Platform

Contributors:

Abhinav Vishnu: Abhinav Vishnu is a Fellow at AMD with interests in performance modeling and optimization for generative AI and large-scale training.

Dhwani Mehta: Dhwani Mehta is a Member of Technical Staff (Software System Design Engineer) at AMD, focusing on ML model analysis and performance optimization for both training and inference.

Jeff Daily: Jeff Daily is a Principal Member of Technical Staff at AMD where he serves as the Tech Lead for ML Frameworks. His long-term contributions to PyTorch were recognized by the PyTorch Foundation with the PY-TORCHBEARER award.

End Note:

Machine setup: MI250X server with 8x AMD Instinct™ MI250X (128 GB HBM2e, 560 W) GPUs with 2 GCDs each, and 4x AMD EPYC™ 7V12 64-Core Processors.

Disclaimer

The information presented in this document is for informational purposes only and may contain technical inaccuracies, omissions, and typographical errors. The information contained herein is subject to change and may be rendered inaccurate for many reasons, including but not limited to product and roadmap changes, component and motherboard version changes, new model and/or product releases, product differences between differing manufacturers, software changes, BIOS flashes, firmware upgrades, or the like. Any computer system has risks of security vulnerabilities that cannot be completely prevented or mitigated. AMD assumes no obligation to update or otherwise correct or revise this information. However, AMD reserves the right to revise this information and to make changes from time to time to the content hereof without obligation of AMD to notify any person of such revisions or changes.

THIS INFORMATION IS PROVIDED “AS IS.” AMD MAKES NO REPRESENTATIONS OR WARRANTIES WITH RESPECT TO THE CONTENTS HEREOF AND ASSUMES NO RESPONSIBILITY FOR ANY INACCURACIES, ERRORS, OR OMISSIONS THAT MAY APPEAR IN THIS INFORMATION. AMD SPECIFICALLY DISCLAIMS ANY IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY, OR FITNESS FOR ANY PARTICULAR PURPOSE. IN NO EVENT WILL AMD BE LIABLE TO ANY PERSON FOR ANY RELIANCE, DIRECT, INDIRECT, SPECIAL, OR OTHER CONSEQUENTIAL DAMAGES ARISING FROM THE USE OF ANY INFORMATION CONTAINED HEREIN, EVEN IF AMD IS EXPRESSLY ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

AMD, the AMD Arrow logo, AMD Instinct™ MI250X, AMD ROCm™, AMD EPYC™ and combinations thereof are trademarks of Advanced Micro Devices, Inc. Other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.

© 2023 Advanced Micro Devices, Inc. All rights reserved.