Chiplets Speed Performance By Bringing Data Where It’s Needed

Lynn Comp, AMD CVP, Server CPU Marketing, traveled to Israel this summer to speak at ChipEx2023, the annual event for that country’s chip industry. There, she talked about how chiplets are helping improve performance for data centers and the cloud, and how AMD is leading that drive. She also spoke about two other solutions, CXL™ interconnects and edge computing, that can ease different aspects of the imbalance between where processors sit and where data resides.

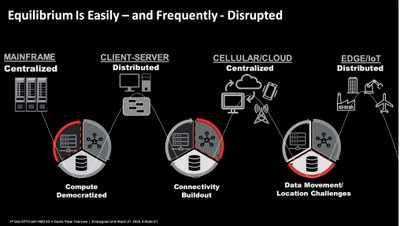

Compute, storage and networking are the engines driving the digital industry, and the slowest of these limits overall performance. As the industry evolves over years and decades, we perpetually speed up one engine to keep up with the other two, overcorrecting one technology and then moving on to the next imbalance.

During the mainframe era, compute was the choke point. Computers of the 1950s to early 1980s were available only in limited quantities for specialized uses, including complex scientific calculations and accounting.

Client/server came next, with decentralized computing running on PCs. Networking then became the bottleneck, making it difficult to get information to the PCs that needed it.

Later, the emergence of the Internet and mobile data networks accelerated networking. The problem became storage: the location of the data necessary to complete a processing task.

That’s the world we live in today. Mobile devices, edge computing, and the Internet of Things demand fast access to the data they need to operate, and the relationship between local storage and network speeds is a key challenge the industry faces.

That may look like another networking problem, but latency, the time it takes data to travel from its origin to the edge, is also a challenge. Whether on a motherboard, in a data center, or in the cloud, latency is a function of the distance and the connections between resources.
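To make "distance and connections" concrete, here is a minimal Python sketch that models one-way latency as propagation delay plus a fixed cost per hop. The signal speed of roughly 2x10^8 m/s in optical fiber is a physical approximation; the per-hop delay, the distances, and the function name `one_way_latency_us` are hypothetical illustrations, not AMD data.

```python
# Signal speed in optical fiber is roughly 2/3 of the speed of light.
FIBER_SPEED_M_PER_S = 2.0e8


def one_way_latency_us(distance_m: float, hops: int, per_hop_us: float) -> float:
    """Propagation delay over the distance plus a fixed delay per hop
    (switch, router, or bridge). All inputs are illustrative assumptions."""
    propagation_us = distance_m / FIBER_SPEED_M_PER_S * 1e6
    return propagation_us + hops * per_hop_us


# Hypothetical paths: a nearby rack, across a data center, and a remote region.
paths = [
    ("same rack", 2, 1),
    ("across data center", 500, 4),
    ("remote region", 1_000_000, 12),
]
for label, dist_m, hops in paths:
    latency = one_way_latency_us(dist_m, hops, per_hop_us=1.0)
    print(f"{label:>20}: {latency:10.2f} us")
```

Under these assumptions, per-hop costs dominate inside a rack, while raw distance dominates once data has to cross regions.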

For example, in High-Performance Computing (HPC), some of our biggest customers say that, ideally, every floating-point operation (FLOP) of compute should be matched by one word of data, so the pipeline is constantly fed. Unfortunately, they also note that today’s HPC systems typically provide nearly 100 FLOPs of compute for every word transferred. Compute can't run at full capacity because it is not getting the data it needs.
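The gap between those two ratios can be quantified with the roofline model, a standard way to bound performance by either peak compute or the rate at which data arrives. The sketch below assumes a hypothetical machine balanced at 100 FLOPs per word, matching the ratio quoted above; the peak and bandwidth figures and the function name `attainable_flops` are illustrative assumptions.

```python
def attainable_flops(peak_flops: float, words_per_s: float, flops_per_word: float) -> float:
    """Roofline bound: performance is capped by peak compute or by how many
    FLOPs the delivered data can feed, whichever is lower."""
    return min(peak_flops, words_per_s * flops_per_word)


# Hypothetical machine: 100 TFLOP/s peak with bandwidth chosen so that
# the machine balance is 100 FLOPs per word transferred.
PEAK = 100e12
BANDWIDTH = PEAK / 100  # words per second

# Arithmetic intensity = FLOPs the workload performs per word it loads.
for intensity in (1, 10, 100, 1000):
    fraction = attainable_flops(PEAK, BANDWIDTH, intensity) / PEAK
    print(f"{intensity:>5} FLOPs/word -> {fraction:.0%} of peak")
```

A workload that needs one word per FLOP reaches at most 1% of peak on such a machine, which is why moving data closer to compute matters as much as adding compute.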

The problem is extreme in AI. A prominent tech company building interactive, immersive AR and VR experiences has shared that, in some instances, up to 57% of processing time is spent just waiting for data from somewhere on the network. GPU resources are far too valuable and scarce to leave idle.
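The 57% figure translates directly into lost throughput. Assuming the waiting and the computing do not overlap (the article does not say either way), the GPU is busy only 43% of the time, and an Amdahl-style bound gives the most that eliminating the wait could buy. The function name below is a hypothetical illustration.

```python
def max_speedup_if_stalls_removed(stall_fraction: float) -> float:
    """If a fraction of wall time is spent waiting on data and that wait is
    removed entirely, run time shrinks to (1 - stall_fraction) of the original."""
    if not 0.0 <= stall_fraction < 1.0:
        raise ValueError("stall_fraction must be in [0, 1)")
    return 1.0 / (1.0 - stall_fraction)


STALL = 0.57  # share of processing time spent waiting, as quoted above
print(f"busy fraction: {1 - STALL:.0%}")
print(f"upper-bound speedup: {max_speedup_if_stalls_removed(STALL):.2f}x")
```

Under that assumption, the same GPUs could do roughly 2.3x more work if their data arrived on time.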

Where data resides and how to move data to processing pipelines is a complex challenge at every level of computing: within the package, on the motherboard, on the server, in the data center and between network-connected data centers.

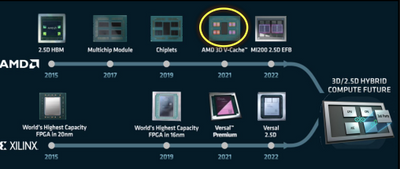

Chiplets are an important part of the solution. Instead of building one larger, more expensive monolithic die, a chiplet design places several smaller dies inside the package. Each chiplet houses multiple processor cores, and different chiplets can be combined in a package to create higher-performance processors, offering extreme scalability and flexibility.
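One common reason large monolithic dies are expensive is manufacturing yield. The sketch below uses the simple Poisson yield model, where the chance that a die has no defects falls exponentially with its area. The defect density and total silicon area are hypothetical values, not AMD process data, and the model ignores packaging yield, die-to-die interconnect overhead, and any separate I/O die.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    return math.exp(-area_mm2 * defects_per_mm2)


# Hypothetical inputs for illustration only.
DEFECT_DENSITY = 0.001  # defects per mm^2 (0.1 per cm^2)
TOTAL_AREA = 800.0      # mm^2 of compute silicon needed per processor

for n_chiplets in (1, 2, 4, 8):
    die_area = TOTAL_AREA / n_chiplets
    y = poisson_yield(die_area, DEFECT_DENSITY)
    print(f"{n_chiplets} x {die_area:5.0f} mm^2 dies: {y:.1%} of dies defect-free")
```

Because defective small dies can be tested and discarded before packaging, splitting the same silicon into smaller pieces wastes less of each wafer under these assumptions.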

AMD faced skepticism when we pioneered chiplets for high-performance data center workloads nearly five years ago, but now chiplets are part of the solution roadmap for many of the processor vendors targeting the data center.

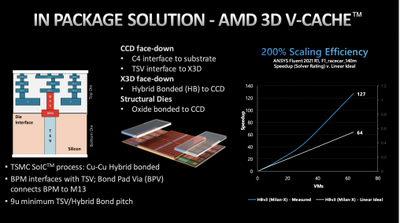

In the years AMD has delivered chiplets, we’ve proven the approach’s capability and flexibility. In 2021, we used that flexibility to enhance our third-generation AMD EPYC™ processors with AMD 3D V-Cache™ technology, delivering 768MB of L3 cache in the package. On workloads such as the ANSYS® Fluent® 2021 R1 F1_racecar_140m test case, we observed superlinear scaling efficiency: performance gains beyond what the added processor core count alone would predict (MLNX-041). This was accomplished by bringing data closer to the cores where the program is executing.
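"Superlinear" has a precise meaning: the observed speedup exceeds the increase in resources, so scaling efficiency is above 1.0. The sketch below shows the calculation with hypothetical timings; they are not results from MLNX-041, and `scaling_efficiency` is an illustrative name.

```python
def scaling_efficiency(t_base: float, t_scaled: float, resource_ratio: float) -> float:
    """Efficiency = observed speedup / increase in resources (cores or nodes).
    1.0 is perfect linear scaling; above 1.0 is superlinear."""
    speedup = t_base / t_scaled
    return speedup / resource_ratio


# Hypothetical run times in seconds for 1 node and 8 nodes.
t_1_node = 800.0
t_8_node = 90.0

eff = scaling_efficiency(t_1_node, t_8_node, resource_ratio=8)
print(f"speedup {t_1_node / t_8_node:.2f}x on 8x the nodes -> efficiency {eff:.2f}")
print("superlinear" if eff > 1.0 else "linear or sublinear")
```

One common explanation for efficiency above 1.0 is that, as work is spread across more cores, each core's share of the data fits in cache instead of being fetched from main memory.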

Looking ahead, multiple processor manufacturers are now working with the Universal Chiplet Interconnect Express (UCIe™) consortium, an industry organization developing standards that allow semiconductor solution providers to mix and match chiplets from multiple manufacturers. That should enable some interesting scenarios.

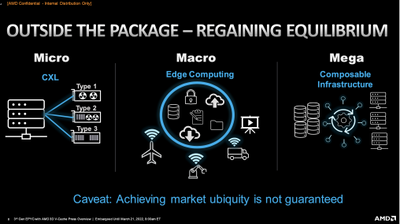

Getting data to the right place at the right time to keep processing pipelines efficient is a complex problem with multiple levels. At the system, node, and rack levels, Compute Express Link™ (CXL), an industry standard for high-speed, high-capacity CPU-to-device and CPU-to-memory connectivity, addresses the problem.

The CXL standard allows memory and accelerators to be added at the system level over a low-latency interface. CXL operates at a larger scale than 3D V-Cache and UCIe. If 3D V-Cache is like a small building block for adding memory to a CPU, then CXL is analogous to larger bricks that allow much greater scale.
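Memory added over CXL typically sits farther from the cores than directly attached DRAM, so the extra capacity comes with some latency cost. A simple way to reason about that tradeoff, assuming accesses split between tiers in fixed proportions, is an access-weighted average latency. The latency values below are hypothetical placeholders, not measurements of any AMD or CXL device.

```python
def average_latency_ns(tiers: list[tuple[float, float]]) -> float:
    """Access-weighted mean latency over memory tiers.
    Each tier is (fraction_of_accesses, latency_ns); fractions must sum to 1."""
    total_fraction = sum(fraction for fraction, _ in tiers)
    if abs(total_fraction - 1.0) > 1e-9:
        raise ValueError("access fractions must sum to 1")
    return sum(fraction * latency for fraction, latency in tiers)


# Hypothetical latencies; real values depend on the platform and device.
LOCAL_DRAM_NS = 100.0
CXL_MEMORY_NS = 250.0

for cxl_share in (0.0, 0.1, 0.3):
    avg = average_latency_ns([(1 - cxl_share, LOCAL_DRAM_NS), (cxl_share, CXL_MEMORY_NS)])
    print(f"{cxl_share:.0%} of accesses to CXL memory -> {avg:.0f} ns average")
```

The model shows why placement matters: keeping frequently accessed data in the nearest tier lets a system gain CXL capacity while limiting the average latency penalty.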

Edge computing is another way to improve compute pipeline efficiency: it moves computing resources closer to where the data is, at the network edge, reducing the distance data needs to travel.

Balancing computing resources to keep pipelines efficient is complex engineering. But engineering alone isn’t enough to solve problems on a global scale. Solutions also need to achieve market ubiquity. Often, the solutions with the best performance and features are not the market leaders in revenue or market share. Why?

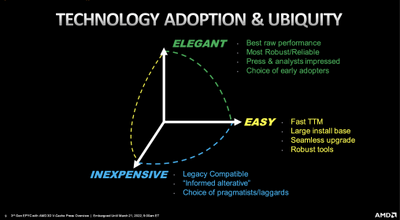

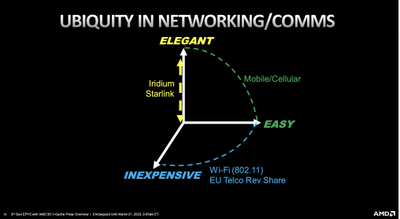

Technology adoption can be boiled down to three questions: Is it elegant? Is it easy to deploy? Is it inexpensive?

If you can achieve all three qualities (elegant, easy, and inexpensive), you’re in great shape for fast and broad adoption. In my experience with different industries and their technology adoption cycles, you need at least two of the three to succeed. Many architectures were elegant but expensive and difficult to deploy or integrate, and they went nowhere.

Cellular wireless networks are elegant and easy for the user to deploy, but not inexpensive. Wi-Fi is easy and inexpensive, but less elegant than cellular at providing quality of service.

The lesson is to avoid assuming that the best, most amazing architecture will drive market adoption. To hit maximum adoption as quickly as possible, tech companies should strive for all three, though they can succeed with two.

MLNX-041: ANSYS® FLUENT® 2022.1 comparison based on AMD internal testing as of 02/14/2022 measuring the rating of the selected Release 19 R1 test case simulations. LG15 is the max result. Comb12, f1-140, race280, comb71, exh33, aw14, and lg15 all scale super-linear on 8-nodes. Configurations: 1-, 2-, 4-, and 8-node, 2x 64C AMD EPYC™ 7773X with AMD 3D V-Cache™; 1-, 2-, 4-, and 8-node, 2x 64C AMD EPYC™ 7763. The referenced 8-node EPYC 7773X configuration is ~52% faster than the 8-node 7763 configuration on the Boeing Landing Gear 15M (lg15) test case.

COPYRIGHT NOTICE ©2023 Advanced Micro Devices, Inc. All rights reserved. AMD, the AMD Arrow logo, EPYC, and combinations thereof are trademarks of Advanced Micro Devices, Inc. Ansys, Fluent, and any and all Ansys, Inc. brand, product, service and feature names, logos and slogans are registered trademarks or trademarks of Ansys, Inc. or its subsidiaries in the United States or other countries under license. PCIe is a registered trademark of PCI-SIG Corporation. CXL is a trademark of Compute Express Link Consortium Inc. Universal Chiplet Interconnect Express™ and UCIe™ are trademarks of the UCIe™ Consortium. Other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.