
4th Gen AMD EPYC™ 8004 Series Processors Designed for Intelligent Edge


AMD launched the first line of 4th Gen AMD EPYC™ processors (formerly codenamed “Genoa”) in November 2022. That was just the beginning: AMD announced 4th Gen AMD EPYC processors with AMD 3D V-Cache™ technology at the Data Center and AI Technology Premiere event in June 2023. These processors (formerly codenamed “Genoa-X”) triple the L3 cache to a whopping maximum of 1152 MB to turbocharge the performance of High-Performance Computing (HPC), Electronic Design Automation (EDA), and simulation workloads. We simultaneously continued our long history of innovation by modifying the “Zen 4” core to create AMD EPYC 97x4 processors optimized for cloud-native computing. These processors (formerly codenamed “Bergamo”) contain up to an incredible 128 cores in a single socket.

Today, I am excited to talk about the newest member of the 4th Gen AMD EPYC product family: AMD EPYC 8004 Series Processors (formerly codenamed “Siena”). These latest additions to the 4th Gen AMD EPYC lineup propel datacenter and AI technology toward new horizons: they are purpose-built to deliver strong performance with high energy efficiency and built-in security capabilities in a single-socket (1P) package optimized for diverse workloads, including telco, edge, remote office, and datacenter deployments.

AMD EPYC 8004 Series Processors achieve these ambitious goals by using the same 5nm process technology found in all 4th Gen AMD EPYC processors and by extending the highly efficient “Zen 4c” core architecture to lower-core-count CPU offerings with a reduced TDP range of 70W to 200W. They also use the new SP6 socket, which supports 8 to 64 CPU cores with up to 128 threads through simultaneous multithreading (SMT). This specialized configuration delivers high power efficiency, temperature tolerance, low cooling requirements, and excellent acoustic characteristics. The SP6 platform supports up to 6 channels of DDR5-4800 memory and 96 lanes of PCIe® Gen 5 for high-speed network and storage connectivity.
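The platform figures above translate into theoretical peak bandwidth with simple arithmetic. The sketch below assumes a 64-bit (8-byte) data path per DDR5 channel and PCIe Gen 5 signaling at 32 GT/s per lane with 128b/130b encoding. These are general DDR5 and PCIe properties, not AMD-published SP6 bandwidth figures, and sustained bandwidth in practice is lower than these ceilings. The function names are illustrative.

```python
# Back-of-envelope theoretical peak bandwidth for a fully populated SP6 platform.
# Assumptions (general DDR5/PCIe properties, not AMD-published SP6 figures):
#   - each DDR5 channel moves 8 bytes (64 data bits) per transfer
#   - PCIe Gen 5 signals at 32 GT/s per lane with 128b/130b encoding

DDR5_CHANNELS = 6
DDR5_MT_PER_S = 4800          # DDR5-4800 = 4800 mega-transfers per second
BYTES_PER_TRANSFER = 8        # 64-bit channel data width

PCIE_LANES = 96
PCIE_GT_PER_S = 32            # PCIe Gen 5 raw signaling rate per lane
PCIE_ENCODING = 128 / 130     # fraction of line bits that carry payload


def ddr5_peak_gb_s(channels: int, mt_per_s: int, bytes_per_transfer: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (10^9 bytes per second)."""
    return channels * mt_per_s * 1e6 * bytes_per_transfer / 1e9


def pcie_peak_gb_s(lanes: int, gt_per_s: float, encoding: float) -> float:
    """Theoretical peak PCIe bandwidth per direction in GB/s, before protocol overhead."""
    payload_bits_per_s = lanes * gt_per_s * 1e9 * encoding
    return payload_bits_per_s / 8 / 1e9


if __name__ == "__main__":
    mem = ddr5_peak_gb_s(DDR5_CHANNELS, DDR5_MT_PER_S, BYTES_PER_TRANSFER)
    io = pcie_peak_gb_s(PCIE_LANES, PCIE_GT_PER_S, PCIE_ENCODING)
    print(f"DDR5 peak: {mem:.1f} GB/s")                     # DDR5 peak: 230.4 GB/s
    print(f"PCIe Gen 5 peak per direction: {io:.1f} GB/s")  # ... 378.1 GB/s
```

Transaction-layer packet headers and flow control reduce usable PCIe throughput further, so treat these numbers as upper bounds for sizing, not as expected application throughput.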

These features make AMD EPYC 8004 Series Processors the ideal choice to meet the skyrocketing demand for “outside the datacenter” use cases. AMD EPYC 8004 processors are also designed to be Network Equipment Building System (NEBS™) friendly, which is key for telecom operators that must deploy NEBS-compliant hardware able to endure tough physical conditions.

AMD EPYC 8004 Series Processors extend AMD’s established track record of more than 300 world records for performance and power/performance leadership across the entire AMD EPYC processor family. These records are an ongoing testament to AMD’s persistent focus on, and relentless pursuit of, performance leadership and industry-leading energy efficiency [1] with outstanding Total Cost of Ownership (TCO).

AMD is grateful for our broad ecosystem of partners, who continue to collaborate with our engineers to deliver a wide range of datacenter solutions. Together, AMD and our partners find and seize every opportunity to fine-tune workloads to fully use the “Zen 4c” core architecture for maximum performance and power efficiency. Let’s look at some of these use cases and performance results.

Designed for Telco

Telecommunication service providers and network operators are foundational to today’s “always connected” world. Telco providers are therefore on an ongoing mission to build an ever-evolving fabric of connectivity that enables reliable, sustainable, scalable, performant, cloud-native, and protected services. They must deploy these networks across a complex web of infrastructure, ranging from well-managed datacenters to extremely harsh environments across the globe, all of which require consistent, reliable, and predictable Quality of Service (QoS). These requirements have spurred the development of 5G and other open standards under 3GPP, ETSI, and other industry bodies. All these solutions are built on, and rely on, standard x86 server platforms.

AMD EPYC 8004 processors deliver the following benefits to telco providers:

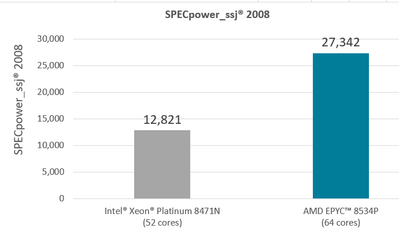

- Leadership Power Efficiency: AMD EPYC 8004 Series Processors blend cutting-edge features with exceptional energy-efficient performance. These processors feature low TDPs of 70 to 200 watts that maximize efficiency from node to rack while delivering strong throughput, including in space- and power-constrained environments. For example, a 1P server with the 64-core AMD EPYC 8534P processor delivers ~2.13x the overall performance per watt (ssj_ops/W) of a 1P server with the 52-core Intel® Xeon® Platinum 8471N on the SPECpower_ssj® 2008 benchmark.[2]

Figure 1: AMD EPYC 8534P SPECpower_ssj® 2008 performance vs. Intel Xeon Platinum 8471N

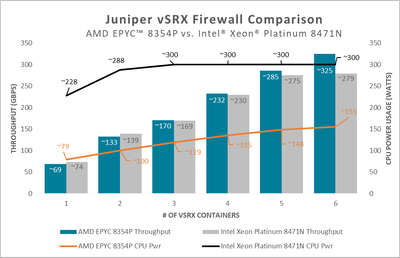

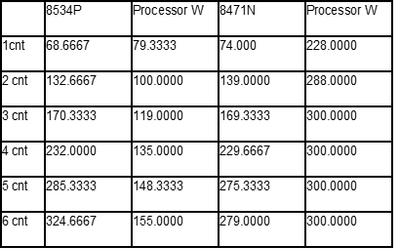

- Virtual Firewall Appliance: Juniper vSRX is a virtual firewall appliance for network providers and enterprises in both virtualized and cloud-native deployments. On fully loaded systems, the 64-core AMD EPYC 8534P processor delivers ~1.16x the throughput of the 52-core Intel Xeon Platinum 8471N while drawing only ~0.48x the measured CPU power. AMD achieved these results by running six (6) vSRX virtual firewall instances, each with 8 vCPUs, as individual containers.[3]

Figure 2: AMD EPYC 8534P Juniper vSRX performance vs. Intel Xeon Platinum 8471N
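The ~2.13x SPECpower_ssj® 2008 figure can be reproduced from the published scores quoted in reference [2]. The sketch below is an illustration, not AMD's methodology: it divides the two official overall ssj_ops/W scores and, for comparison, computes efficiency at the 100% target load alone. The official overall metric aggregates ssj_ops and power across all target load levels plus active idle, so the full-load ratio is only one point on that curve. The dictionary keys and helper names are hypothetical.

```python
# Published SPECpower_ssj2008 figures quoted in reference [2] (both 1P systems).
# Keys are hypothetical labels chosen for this sketch.
EPYC_8534P = {"overall_ops_per_w": 27_342, "ops_at_100": 7_289_747, "watts_at_100": 212}
XEON_8471N = {"overall_ops_per_w": 12_821, "ops_at_100": 6_749_219, "watts_at_100": 419}


def overall_efficiency_ratio(a: dict, b: dict) -> float:
    """Ratio of the official overall ssj_ops/W scores (the metric behind the ~2.13x claim)."""
    return a["overall_ops_per_w"] / b["overall_ops_per_w"]


def full_load_efficiency(system: dict) -> float:
    """ssj_ops per watt at the 100% target load only (one of the benchmark's load levels)."""
    return system["ops_at_100"] / system["watts_at_100"]


if __name__ == "__main__":
    overall = overall_efficiency_ratio(EPYC_8534P, XEON_8471N)
    full_load = full_load_efficiency(EPYC_8534P) / full_load_efficiency(XEON_8471N)
    throughput = EPYC_8534P["ops_at_100"] / XEON_8471N["ops_at_100"]
    print(f"Overall ssj_ops/W ratio: {overall:.2f}x")          # 2.13x
    print(f"ssj_ops/W ratio at 100% load: {full_load:.2f}x")   # 2.13x
    print(f"ssj_ops ratio at 100% load: {throughput:.2f}x")    # 1.08x
```

In these published numbers, both efficiency ratios land near 2.13x while full-load throughput differs by only about 8%, so most of the efficiency gap in this comparison comes from the lower measured power (212W vs. 419W at full load).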

Optimized for Edge Computing

The explosive growth of the Internet of Things (IoT) shows no signs of slowing down. Today’s hyperconnected world requires storing and processing data closer to where it originates. Because edge computing localizes processing and disaggregates data, it has important implications for processing and storage latencies. Edge computing thus requires a specialized type of platform that can handle these data and communication requirements in rugged deployments with more challenging thermal, power, environmental, and real-estate constraints.

AMD EPYC 8004 Series Processors are purpose-built for edge computing. AMD has partnered with leading OSVs, hypervisor vendors, and ISVs such as Microsoft, Nutanix, VMware, and leading Linux® distributions to support hyperconverged infrastructure (HCI). AMD EPYC 8004 Series Processors deliver exceptional price-performance and performance per watt on several key edge computing workloads:

- IoT Gateway performance with TPCx-IoT: TPC Express Benchmark™ IoT (TPCx-IoT) is the first industry benchmark specifically designed to measure the performance of IoT gateway systems. It provides verifiable performance, price-performance, and availability metrics for systems that ingest massive volumes of data from many devices while running real-time analytics queries. A cluster of systems based on two 64-core AMD EPYC 8534P and three 32-core AMD EPYC 8324P processors delivers compelling performance at ~36% lower cost per thousand IoT transactions per second ($/kIoTps) than a cluster of systems based on two 96-core AMD EPYC 9654 and three 64-core AMD EPYC 9554 processors.[4]

- VDI for Branch Office: Virtual Desktop Infrastructure (VDI) solutions are increasingly popular with enterprises because they let employees work easily and smoothly across multi-campus environments. A 1P 32-core AMD EPYC 8324P system delivers ~1.3x the “Very Good QoS” desktop sessions per system watt per system dollar of a 1P 32-core Intel Xeon Gold 6421N system on Login VSI.[5]

- Video encoding with FFmpeg: FFmpeg is a free, open-source software project that consists of a suite of libraries, codecs, and programs that handle video, audio, and other multimedia files and streams. The core FFmpeg program is designed for command-line processing of video and audio files and is widely used for encoding, transcoding, editing, video scaling, video post-production, and standards compliance. A 64-core AMD EPYC 8534P processor outperformed a 52-core Intel Xeon Platinum 8471N by ~1.66x in aggregate transcoded frames per hour and by ~2.39x in relative performance per system watt. AMD achieved this result by transcoding the DTS_raw scene from 4K raw video to the VP9 codec, running multiple jobs that each used 8 threads.[6]

- End-to-end AI with TPCx-AI: The TPCx-AI™ benchmark emulates the behavior of AI workloads that are relevant to modern datacenters and cloud environments. A 1P system with one 32-core AMD EPYC 8324P processor delivers ~1.63x the performance per core of a 2P system with two 32-core Intel Xeon Platinum 8462Y+ processors at a Scale Factor of 10 GB (SF-10).[7]

Figure 3: AMD EPYC TPCx-AI performance vs. Intel Xeon Platinum 8462Y+ and AMD EPYC 9374F
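Several of the edge claims above are ratios derived from the figures listed in references [4] to [6]. As a hedged sanity check, the sketch below recomputes them from those published numbers. The 8 kW rack calculation follows the assumption in reference [5] that servers are packed up to the power budget; using the average system watts listed there reproduces its 26 vs. 23 server counts. Variable and function names are illustrative, and the TPCx-AI per-core result is omitted because the underlying AIUCpm values are not quoted in this post.

```python
"""Recompute derived edge-computing ratios from figures quoted in references [4]-[6]."""


def ratio_of_rates(num_a: float, den_a: float, num_b: float, den_b: float) -> float:
    """Return (num_a / den_a) / (num_b / den_b), e.g. performance per watt of A vs. B."""
    return (num_a / den_a) / (num_b / den_b)


# TPCx-IoT price-performance in USD per kIoTps (reference [4]); lower is better.
IOT_USD_PER_KIOTPS_8004 = 54.85
IOT_USD_PER_KIOTPS_9004 = 86.42
iot_cost_reduction = 1 - IOT_USD_PER_KIOTPS_8004 / IOT_USD_PER_KIOTPS_9004

# FFmpeg 4K raw -> VP9: median frames/hour and average system watts (reference [6]).
ffmpeg_perf = 437_774 / 263_691
ffmpeg_perf_per_watt = ratio_of_rates(437_774, 362, 263_691, 522)

# Login VSI: (VSImax, average system watts, estimated system USD) from reference [5].
VSI_AMD = (187.3, 302, 4_927)
VSI_INTEL = (185.0, 342, 5_674)
vsi_perf_per_watt_per_dollar = ratio_of_rates(
    VSI_AMD[0], VSI_AMD[1] * VSI_AMD[2], VSI_INTEL[0], VSI_INTEL[1] * VSI_INTEL[2]
)

# Whole servers that fit in an 8 kW power budget, then desktop sessions per rack.
RACK_BUDGET_W = 8_000
servers_amd = RACK_BUDGET_W // VSI_AMD[1]
servers_intel = RACK_BUDGET_W // VSI_INTEL[1]
rack_sessions_ratio = (servers_amd * VSI_AMD[0]) / (servers_intel * VSI_INTEL[0])

if __name__ == "__main__":
    print(f"TPCx-IoT $/kIoTps reduction: {iot_cost_reduction:.1%}")
    print(f"FFmpeg throughput: {ffmpeg_perf:.2f}x, per system watt: {ffmpeg_perf_per_watt:.2f}x")
    print(f"Login VSI perf/W/$: {vsi_perf_per_watt_per_dollar:.2f}x")
    print(f"8 kW rack: {servers_amd} vs {servers_intel} servers, "
          f"{rack_sessions_ratio:.2f}x sessions per rack")
```

Running it prints a 36.5% $/kIoTps reduction, 1.66x and 2.39x for FFmpeg, 1.32x for Login VSI performance per watt per dollar, and 1.14x desktop sessions per rack, matching the rounded figures in the references.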

Optimized for Software Defined Storage

Today’s data-driven economy means that data never dies. This challenges IT infrastructure to store the ever-growing volumes of data generated by machines, applications, and cloud computing. Software Defined Storage (SDS) is one of the foundational infrastructure layers for meeting the increased demand for storage at scale while optimizing TCO. Storage as a Service (STaaS) simplifies access to provisioned storage resources because users can subscribe to offerings from a cloud service provider and/or from within an enterprise instead of purchasing and managing physical storage hardware. Common types of STaaS storage include:

- Block storage: Stores data in discrete blocks and is often used for applications that require high performance, such as databases and virtual machines.

- Object storage: Stores data as objects (typically files or other unstructured data, each with its own metadata and unique identifier) and is often used for applications that require high scalability and durability, such as backup and archiving.

- File storage: Stores files in a hierarchical structure and is used for applications that require easy access to files, such as file sharing and collaboration.
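To make the difference between these three access models concrete, here is a toy, in-memory Python sketch. It does not reflect how any production SDS system is implemented; the class names and the 4 KiB block size are hypothetical choices for illustration. The block store is addressed by block number, the object store by a flat key with attached metadata, and the file store by a hierarchical path.

```python
from typing import Dict, List, Optional, Tuple

BLOCK_SIZE = 4096  # hypothetical fixed block size in bytes


class ToyBlockStore:
    """Addressed by block number (LBA); every read or write is exactly one fixed-size block."""

    def __init__(self, num_blocks: int) -> None:
        self._blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]

    def write_block(self, lba: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("block writes must be exactly BLOCK_SIZE bytes")
        self._blocks[lba] = data

    def read_block(self, lba: int) -> bytes:
        return self._blocks[lba]


class ToyObjectStore:
    """Flat namespace: whole objects are stored and fetched by key, together with metadata."""

    def __init__(self) -> None:
        self._objects: Dict[str, Tuple[bytes, Dict[str, str]]] = {}

    def put(self, key: str, data: bytes, metadata: Optional[Dict[str, str]] = None) -> None:
        self._objects[key] = (data, dict(metadata or {}))

    def get(self, key: str) -> Tuple[bytes, Dict[str, str]]:
        return self._objects[key]


class ToyFileStore:
    """Hierarchical namespace: files live inside nested directories."""

    def __init__(self) -> None:
        self._root: dict = {}

    def write(self, path: str, data: bytes) -> None:
        *dirs, name = path.strip("/").split("/")
        node = self._root
        for d in dirs:  # create intermediate directories as needed
            node = node.setdefault(d, {})
        node[name] = data

    def read(self, path: str) -> bytes:
        node = self._root
        for part in path.strip("/").split("/"):
            node = node[part]
        return node

    def listdir(self, path: str = "/") -> List[str]:
        node = self._root
        for part in filter(None, path.strip("/").split("/")):
            node = node[part]
        return sorted(node)


if __name__ == "__main__":
    objects = ToyObjectStore()
    objects.put("backups/2023/db.tar", b"...", {"content-type": "application/x-tar"})
    files = ToyFileStore()
    files.write("/shared/team/notes.txt", b"hello")
    print(files.listdir("/shared"))  # ['team']
```

Note that the object key backups/2023/db.tar only looks like a path: the object store treats it as one opaque key, whereas the file store actually creates nested directories.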

SDS solutions require a good balance of compute power and I/O capabilities to process vast amounts of data. AMD EPYC 8004 Series Processors rise to this challenge in emerging markets such as edge computing, telco, AI, and genome sequencing, whose applications demand high IOPS and throughput, low latency, scalability, and robust security features. Specific storage performance and CPU frequency requirements will continue to evolve in lockstep with application demands.

AMD ran the SPECstorage™ Solution 2020 benchmark to determine how AMD EPYC 8004 Series Processors perform on AI and genomics storage workloads. The AI workload tested used TensorFlow image-processing environments with traces collected from systems running COCO, ResNet-50, and Cityscape datasets. The genomics workload tested came from commercial and research facilities that perform genetic analysis. The results speak for themselves [8]:

- AI workload: 50 jobs with an overall response time (ORT) of 0.31 ms.

- Genomics workload: 200 jobs with an ORT of 0.09 ms.

The SPECstorage™ Solution 2020 workloads were run on 1P systems powered by AMD EPYC 8324P processors with 384 GiB memory and ten 3.84TB NVMe SSDs.

AMD EPYC 8004 Series Processors are also ideal for entry-level storage, especially for cloud edge infrastructure. For example, a 1P server powered by an 8-core AMD EPYC 8024P delivers up to ~1.8x the rack throughput of a 1P server powered by an 8-core Intel Xeon Bronze 3408U running CephFS storage workloads.[9]

Conclusion

AMD understands the need for continuous adaptation to meet rapidly evolving market segments, verticals, and software needs. We also understand just how critical it is to consistently deliver a best-in-class platform for our hardware, OEM, operating system, cloud, and software partners that empowers them to satisfy their own customer commitments. Today’s launch of AMD EPYC 8004 Series Processors is the latest example of AMD’s ongoing innovation. The “Zen 4c” core architecture is yet another milestone in our ongoing quest to deliver the world’s preeminent datacenter and “beyond the datacenter” processors.

AMD offers guidance on CPU tuning best practices for achieving optimal performance on these key workloads when deploying 4th Gen AMD EPYC processors in your environment. Please visit AMD EPYC™ Server Processors to learn more.

Other key AMD technologies include:

- AMD Instinct™ accelerators are designed to power discoveries at exascale to enable scientists to tackle our most pressing challenges.

- AMD Pensando™ solutions deliver highly programmable software-defined cloud, compute, networking, storage, and security features wherever data is located, helping to offer improvements in productivity, performance and scale compared to current architectures with no risk of lock-in.

- AMD FPGAs and Adaptive SoCs include highly flexible FPGAs, hardware-adaptive SoCs, and Adaptive Compute Acceleration Platform (ACAP) processing platforms that enable rapid innovation across a variety of technologies, from the endpoint to the edge to the cloud.

Raghu Nambiar is a Corporate Vice President of Data Center Ecosystems and Solutions for AMD. His postings are his own opinions and may not represent AMD’s positions, strategies, or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied.

References

- SP5-072A: As of 1/11/2023, a 4th Gen EPYC 9654 powered server has highest overall scores in key industry-recognized energy efficiency benchmarks SPECpower_ssj®2008, SPECrate®2017_int_energy_base and SPECrate®2017_fp_energy_base. See details at https://www.amd.com/en/claims/epyc4#SP5-072A

- Server-side Java® overall operations/watt (SPECpower_ssj®2008) claim based on 1P published results at spec.org as of 9/18/2023. 1P EPYC 8534P (64-core, 200W TDP; 52.5W active idle; 7,289,747 ssj_ops @ 100% target load @ 212W) scoring 27,342 overall ssj_ops/W, https://spec.org/power_ssj2008/results/res2023q3/power_ssj2008-20230830-01309.html, vs. 1P Xeon Platinum 8471N (52-core, 300W TDP; 88.2W active idle; 6,749,219 ssj_ops @ 100% target load @ 419W) scoring 12,821 overall ssj_ops/W, https://spec.org/power_ssj2008/results/res2023q3/power_ssj2008-20230822-01294.html, for ~2.13x the SPECpower_ssj2008 overall ssj_ops/W (27,342 / 12,821 = 2.13). SPEC and SPECpower_ssj® are registered trademarks of the Standard Performance Evaluation Corporation.

- SP6-015: Based on AMD internal testing from August 19, 2023, to Sept. 8, 2023. A 1P 64-core AMD EPYC™ 8534P processor powered server provides ~1.16x the throughput of a 1P 52-core Intel® Xeon® Platinum 8471N processor powered server while drawing ~0.48x the CPU power (based on measured CPU TDP) and delivering ~1.25x more performance/system watt running the Juniper vSRX cloud-native firewall workload on 6 concurrent containers.

Testing performed on production servers with SMT/Hyper-Threading enabled and using identical networking cards and memory DIMMs. The containers hosted Juniper vSRX version 3.0 and ran on Ubuntu 22.04 with Kubernetes v1.27.2. Testing results may vary due to various factors including but not limited to system configuration, container configuration, network speed, and OS or BIOS settings.

- TPCx-IoT: As published on TPC© - the following is derived from: Two 64-core AMD EPYC 8534P and three 32-core AMD EPYC 8324P: 4,529,397.35 IoTps, 54.85 USD per kIoTps, https://www.tpc.org/5774; two 96-core AMD EPYC 9654 and three 64-core AMD EPYC 9554 processors: 5,739,514.34 IoTps, 86.42 USD per kIoTps, https://www.tpc.org/5773; 1 - (54.85/86.42) = 36% lower $/kIoTps.

- SP6-010: Login VSI “knowledge worker” comparison based on AMD internal testing as of 8/18/2023. Configurations: 1P 32C EPYC 8324P (187.3 avg. VSImax, 302 avg system W, est $4,927 system cost USD) powered server versus 1P 32C Xeon Gold 6421N (185 avg. VSImax, 342 avg system W, est $5,674 system cost USD) powered server for 1.01x the performance, 12% lower system power (1.15x the performance/system W), 13% lower system cost (1.16x the performance/system $) for 1.32x the overall system performance/W/$. Assuming an 8kW rack deploying servers, 26 ea. EPYC 8324P vs. 23 ea. Xeon 6421N can fit within the power budget delivering 1.14x the total desktop user sessions/rack. Power assumptions based on Phoronix Test Suite max system W scores for these CPUs. Scores will vary based on system configuration and determinism mode used (default TDP power determinism mode profile used). This scenario contains many assumptions and estimates and, while based on AMD internal research and best approximations, should be considered an example for information purposes only, and not used as a basis for decision making over actual testing. Results may vary and are normalized to EPYC 8534P Performance Determinism mode (always 1 in all measurements). Estimated system pricing based on Bare Metal Server GHG TCO v9.52.

- SP6-014: Transcoding (FFmpeg DTS raw to VP9 codec) aggregate frames/hour/system W comparison based on AMD internal testing as of 9/16/2023. Configurations: 1P 64C EPYC 8534P (437,774 fph median performance, 16 jobs/8 threads each, avg system power 362W) powered server versus 1P 52C Xeon Platinum 8471N (263,691 fph median performance, 13 jobs/8 threads each, avg system power 522W) for ~1.66x the performance and ~2.39x relative performance per W. Scores will vary based on system configuration and determinism mode used (max TDP power determinism mode profile used). This scenario contains many assumptions and estimates and, while based on AMD internal research and best approximations, should be considered an example for information purposes only, and not used as a basis for decision making over actual testing.

- As published on TPC© - the following is derived from: End-to-end AI performance TPCx-AI on 1P 8324P and 1P 9374F vs. 2P 8462Y+. The performance comparison is based on audited and published TPCx-AI results for each of these platforms, posted here. Genoa: https://www.tpc.org/tpcx-ai/results/tpcxai_result_detail5.asp?id=123091802#, Siena: https://www.tpc.org/tpcx-ai/results/tpcxai_result_detail5.asp?id=123091801, Sapphire Rapids: https://www.tpc.org/tpcx-ai/results/tpcxai_result_detail5.asp?id=123061402. For each result, we compute the AIUCpm divided by the total number of cores in that platform. The chart shows this AIUCpm per core for each platform normalized to Sapphire Rapids 8462Y+ as 1.0x, with 8324P at 1.63x and 9374F at 2.25x.

- As published on SPEC© @ SPEC.org - the following is derived from: SPECstorage™ Solution 2020: AI workload: 50 jobs with an ORT of 0.31 ms, https://www.spec.org/storage2020/results/res2023q3/storage2020-20230820-2337.html; Genomics workload: 200 jobs with an ORT of 0.39 ms, https://www.spec.org/storage2020/results/res2023q3/storage2020-20230820-2335.html

- SP6-011: CephFS RADOS benchmark comparison based on Phoronix Test Suite paid testing as of 8/18/2023. Configurations: 1P 8C EPYC 8024P (1.05x relative performance, 209 avg system W, est $3,441 system cost USD) powered server versus 1P 8C Xeon Bronze 3408U (0.72x relative performance, 254 avg system W, est $3,721 system cost USD) powered server for 1.45x the performance, 18% lower system power (1.76x the performance/system W), 8% lower system cost (1.57x the performance/system $) for 1.91x the overall system performance/W/$. Assuming an 8kW rack deploying servers, 38 ea. EPYC 8024P vs. 31 ea. Xeon 3408U can fit within the power budget delivering 1.77x the total storage throughput/rack. Testing not independently verified by AMD. Scores will vary based on system configuration and determinism mode used (default TDP power determinism mode profile used). This scenario contains many assumptions and estimates and, while based on AMD internal research and best approximations, should be considered an example for information purposes only, and not used as a basis for decision making over actual testing. Results may vary and are normalized to EPYC 8534P Performance Determinism mode (always 1 in all measurements). Estimated system pricing based on Bare Metal Server GHG TCO v9.52.
