This article was originally published on August 20, 2019.

Editor’s Note: This content is contributed by Curt Wortman, Sr. Product Marketing Manager in Data Center Group.

A New Era in Data Movement

One of the most critical bottlenecks in building hardware architectures for complex datapath workloads is the speed of the memory subsystem. With the two latest additions to Xilinx's Alveo™ accelerator card portfolio, the Alveo U50 and Alveo U280, we set out to eliminate that bottleneck by integrating second-generation high bandwidth memory (HBM2) into each card. HBM2 is stacked DRAM placed in-package, which shrinks both power and PCB footprint, and it delivers an astounding memory bandwidth of 460GB/s. That much bandwidth can remove the memory bottlenecks seen in complex compute- and data-intensive workloads, fully unlocking the massive parallelism of FPGAs.

Virtex UltraScale+ HBM FPGA

What are the major application benefits of HBM2?

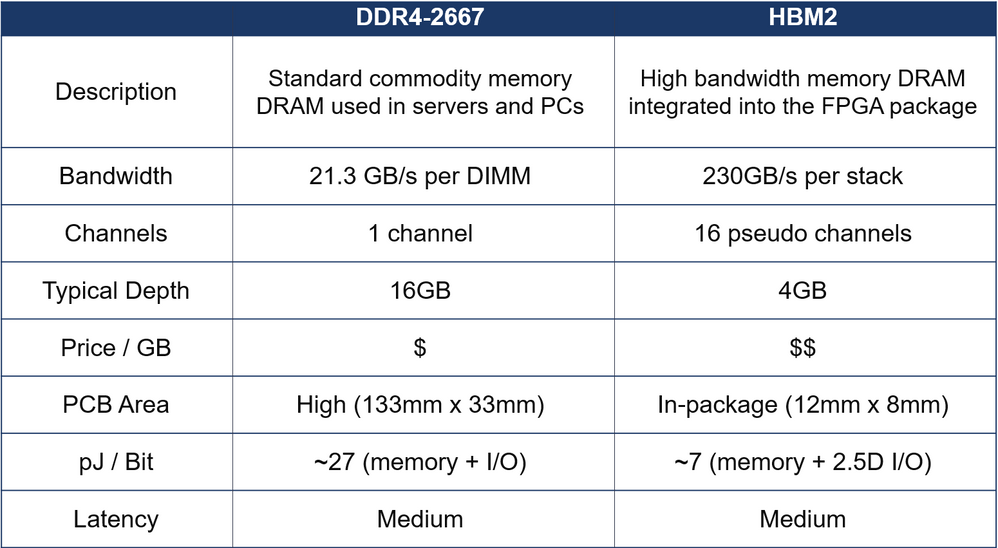

- Power Efficient: ~7pJ/bit (0.25X the energy per bit of DDR4 DIMMs)

- High Bandwidth: 460 GB/s (20X over a traditional single DDR4-2400 DIMM channel)

- Compact Footprint: 12mm x 8mm (45X smaller than a single DDR4 DIMM of 133mm x 33mm)

- Sustainable Throughput: 32 channels (5.3X more channels than a 6-channel processor)
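As a rough check of the ratios in the list above, the following C++ sketch recomputes them from peak interface rates. The HBM2 configuration used here (32 pseudo channels, each 64 bits wide at 1800 MT/s) is an assumption not stated in this article, chosen because it reproduces the 460GB/s figure. A DDR4-2400 channel (64-bit bus at 2400 MT/s) peaks at 19.2GB/s, so the computed bandwidth ratio lands near 24X, above the conservative 20X quoted.

```cpp
#include <cassert>

// Peak bandwidth in GB/s (1 GB = 1e9 bytes) of one memory interface:
// bus width in bytes multiplied by the transfer rate.
constexpr double peak_gbps(double bus_bits, double mega_transfers_per_s) {
    return bus_bits / 8.0 * mega_transfers_per_s / 1000.0;
}

// ASSUMPTION: 32 HBM2 pseudo channels, each 64 bits wide, at 1800 MT/s.
constexpr int    kHbmChannels = 32;
constexpr double kHbmGBps     = kHbmChannels * peak_gbps(64, 1800);  // ~460.8 GB/s

// One DDR4-2400 DIMM channel: 64-bit data bus at 2400 MT/s.
constexpr double kDdr4GBps = peak_gbps(64, 2400);                    // ~19.2 GB/s

// Footprints quoted in the list, in mm^2.
constexpr double kHbmAreaMm2  = 12.0 * 8.0;    // 96 mm^2
constexpr double kDimmAreaMm2 = 133.0 * 33.0;  // 4389 mm^2

// Memory channels on the processor used for the channel comparison.
constexpr int kCpuChannels = 6;

// Simple ratio helper; the denominator must be positive.
inline double ratio(double numerator, double denominator) {
    assert(denominator > 0.0);
    return numerator / denominator;
}
```

With these values, `ratio(kHbmGBps, kDdr4GBps)` is about 24, `ratio(kDimmAreaMm2, kHbmAreaMm2)` about 45.7, and `ratio(kHbmChannels, kCpuChannels)` about 5.33.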

What does 460GB/s of bandwidth really mean for data center acceleration?

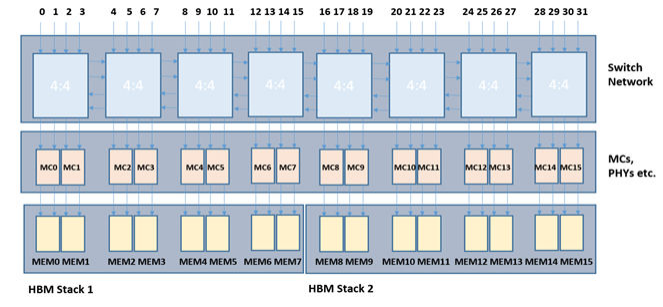

Many of today’s data analytics applications are constrained by memory performance. Database software now relies on in-memory storage so that it can access data in parallel, pulling from multiple memory channels simultaneously. Traditional Intel processors are limited to 6 memory channels, whereas both the Alveo U280 and U50 cards support 32, or 5.3X more channels, feeding a programmable fabric that can sustain a continuous stream of data in and out of all memory channels at once. Memory-intensive applications such as search, key-value stores, hash lookups, and pattern matching can take advantage of the additional channels, delivering a super-linear increase in acceleration.
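To make the channel argument concrete, here is a hypothetical C++ sketch of how a hash-lookup workload might spread its keys across 32 memory channels so that every channel stays busy. The names `channel_for_key` and `partition_by_channel`, and the choice of the MurmurHash3 64-bit finalizer as the hash, are illustrative assumptions, not part of any Xilinx example. In a real design, each per-channel queue would presumably be served by a kernel port bound to its own HBM channel.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Number of independent memory channels (32 on the HBM2-based Alveo cards).
constexpr std::size_t kChannels = 32;

// HYPOTHETICAL: choose the channel that owns a key by mixing its bits with the
// MurmurHash3 64-bit finalizer, then taking the result modulo the channel count.
inline std::size_t channel_for_key(std::uint64_t key) {
    key ^= key >> 33;
    key *= 0xff51afd7ed558ccdULL;
    key ^= key >> 33;
    key *= 0xc4ceb9fe1a85ec53ULL;
    key ^= key >> 33;
    return static_cast<std::size_t>(key % kChannels);
}

// HYPOTHETICAL: split a batch of lookup keys into one queue per channel, so each
// channel can service its own queue concurrently with all the others.
inline std::array<std::vector<std::uint64_t>, kChannels>
partition_by_channel(const std::vector<std::uint64_t>& keys) {
    std::array<std::vector<std::uint64_t>, kChannels> queues;
    for (std::uint64_t k : keys) {
        std::size_t ch = channel_for_key(k);
        assert(ch < kChannels);
        queues[ch].push_back(k);
    }
    return queues;
}
```

Hashing rather than using the key's low bits directly keeps sequential or patterned keys from piling onto a few channels.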

Virtex UltraScale+ FPGA with HBM2

What is the difference between using HBM2 and DDR4 DIMM on Alveo accelerator cards?

Each HBM channel is associated with 256MB of memory, so the largest buffer object the host can transfer to a single HBM channel is 256MB. Xilinx’s FPGA includes an AXI switch that allows a single kernel port to access the full 8GB memory space. In contrast, DDR supports buffer objects of up to 1GB to fill the 16GB DDR4 DIMMs.
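The 256MB limit follows from dividing the 8GB HBM2 space across 32 channels. The C++ sketch below shows that arithmetic and a simple address-to-channel lookup. It assumes, for illustration only, that the channels occupy consecutive 256MB ranges of one flat address space; the helper names `locate` and `fits_in_one_channel` are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Sizes from the text: 32 HBM channels of 256 MB each, 8 GB in total.
constexpr std::uint64_t kMiB          = 1024ULL * 1024ULL;
constexpr std::uint64_t kChannelBytes = 256ULL * kMiB;
constexpr std::uint32_t kNumChannels  = 32;
constexpr std::uint64_t kTotalBytes   = kChannelBytes * kNumChannels;

static_assert(kTotalBytes == 8ULL * 1024ULL * kMiB, "32 x 256 MB should be 8 GB");

struct HbmLocation {
    std::uint32_t channel;  // which 256 MB channel holds the byte
    std::uint64_t offset;   // byte offset within that channel
};

// ASSUMPTION: channel n covers addresses [n * 256 MB, (n + 1) * 256 MB).
inline HbmLocation locate(std::uint64_t address) {
    assert(address < kTotalBytes);
    return {static_cast<std::uint32_t>(address / kChannelBytes), address % kChannelBytes};
}

// A buffer object placed in a single channel must fit within that channel's 256 MB.
inline bool fits_in_one_channel(std::uint64_t buffer_bytes) {
    return buffer_bytes <= kChannelBytes;
}
```

Under this model, a 300MB buffer cannot live in one channel, which is the case where the AXI switch (or splitting the buffer) matters.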

DDR4 vs. HBM2

How easy is it to migrate a design from DDR4 to HBM2?

If you’re using Xilinx’s SDAccel™ design flow, both RTL and HLS kernels can be reused directly without design changes. In the kernel design, the user specifies AXI4 memory-mapped interfaces, which are independent of the underlying memory technology. The SDAccel tool supports both automatic and manual mapping of the AXI ports to memory at the link stage. By default, the tool maps the ports to memory channels automatically based on resource availability. For advanced users who care about placement, an additional linker parameter allows each AXI port to be mapped to a specific memory channel manually.
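For illustration, a minimal HLS C++ kernel written in this style might look like the following sketch. The kernel name `vadd` and the bundle names `gmem0` to `gmem2` are hypothetical. The point is that the `m_axi` interface pragmas describe generic AXI4 memory-mapped ports and never mention DDR4 or HBM2, so the same source can be linked against either. Giving each pointer its own bundle yields a separate AXI port that the linker can place on its own memory channel.

```cpp
#include <cassert>

// HYPOTHETICAL vector-add kernel in the SDAccel C++ style. Each pointer argument
// becomes an AXI4 memory-mapped master port; the binding to DDR or HBM is made at
// link time, not here. A regular C++ compiler ignores the HLS pragmas, so the same
// function can also be run and tested on the host.
extern "C" void vadd(const int* in1, const int* in2, int* out, int size) {
#pragma HLS INTERFACE m_axi port=in1 offset=slave bundle=gmem0
#pragma HLS INTERFACE m_axi port=in2 offset=slave bundle=gmem1
#pragma HLS INTERFACE m_axi port=out offset=slave bundle=gmem2
#pragma HLS INTERFACE s_axilite port=in1 bundle=control
#pragma HLS INTERFACE s_axilite port=in2 bundle=control
#pragma HLS INTERFACE s_axilite port=out bundle=control
#pragma HLS INTERFACE s_axilite port=size bundle=control
#pragma HLS INTERFACE s_axilite port=return bundle=control
    assert(size >= 0);  // element count must be non-negative
    for (int i = 0; i < size; ++i) {
#pragma HLS PIPELINE II=1
        out[i] = in1[i] + in2[i];  // one element per clock once pipelined
    }
}
```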

What memory bandwidth is achievable using C++ design entry?

Software developers can now take full advantage of FPGAs with the SDAccel™ design flow, extracting performance similar to that of highly optimized RTL designs in a fraction of the development time. To demonstrate HBM ease of use for software developers, an example design that measures HBM2 bandwidth is provided on GitHub. The kernel is written in C++; it reads two vectors and simultaneously computes their sum and product. The design instantiates 8 compute units of the kernel, which together access all 32 HBM channels concurrently and were benchmarked at 421.8GB/s, about 92% of the 460GB/s peak. That is a very impressive result for a C++ design compiled through high-level synthesis (HLS) in Xilinx’s SDAccel design flow.
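A kernel of the kind described might be sketched in C++ as follows. This is not the GitHub example's source; the name `vaddmul`, the bundle names, and the assumption of four AXI ports per compute unit (two reads, two writes) are illustrative. Under that assumption, 8 compute units expose 32 ports, one per HBM channel, which is one way such a design could keep every channel busy.

```cpp
#include <cassert>

// HYPOTHETICAL kernel: read two vectors and produce their element-wise sum and
// product in one pass. Four pointer arguments give four AXI4 master ports per
// compute unit. unsigned arithmetic wraps on overflow instead of being undefined.
extern "C" void vaddmul(const unsigned* in1, const unsigned* in2,
                        unsigned* out_add, unsigned* out_mul, int size) {
#pragma HLS INTERFACE m_axi port=in1     offset=slave bundle=gmem0
#pragma HLS INTERFACE m_axi port=in2     offset=slave bundle=gmem1
#pragma HLS INTERFACE m_axi port=out_add offset=slave bundle=gmem2
#pragma HLS INTERFACE m_axi port=out_mul offset=slave bundle=gmem3
#pragma HLS INTERFACE s_axilite port=in1     bundle=control
#pragma HLS INTERFACE s_axilite port=in2     bundle=control
#pragma HLS INTERFACE s_axilite port=out_add bundle=control
#pragma HLS INTERFACE s_axilite port=out_mul bundle=control
#pragma HLS INTERFACE s_axilite port=size    bundle=control
#pragma HLS INTERFACE s_axilite port=return  bundle=control
    assert(size >= 0);
    for (int i = 0; i < size; ++i) {
#pragma HLS PIPELINE II=1
        unsigned a = in1[i];   // each input element is read once...
        unsigned b = in2[i];
        out_add[i] = a + b;    // ...and feeds both results
        out_mul[i] = a * b;
    }
}

// ASSUMPTION: 8 compute units x 4 ports each = one port per HBM channel.
constexpr int kComputeUnits = 8;
constexpr int kPortsPerUnit = 4;
static_assert(kComputeUnits * kPortsPerUnit == 32, "one port per HBM channel");
```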

To check out the latest Alveo accelerator cards with HBM2, visit here:

To download the U50 product brief, click here.

Ecosystem partners with massive data movement challenges are also taking advantage of HBM memory. Check out one of our customer success stories.