Graphics Cards

Performance Drop of 40-50 FPS Navi 5700

First, let me state that I had no issues with the R 200/300/400 and 500 series cards. I used them all the time to make budget builds for friends looking to get into PC gaming for the first time.

For years, back when it was ATI, I used the HD series, again with no issues.

When nVidia came out with the GTX 900 series, I switched over for my own personal computer. It was more on a whim; I was tired of using the same brand (I am one of those weird consumers). I never had any issues with the GTX 960. Two years ago, I bought a used GTX 1060 3GB for $100, and it still worked great up to the day I pulled it, 3 days ago. It just couldn't reach high FPS in single-player games at 1080p high/ultra, or in some battle royales (BRs) even on low settings (usually around 90 FPS, on a 144 Hz monitor).

So, I bit on an RX 5700 (not XT) by VisionTek (made by AMD), on sale for $279. It looked good, reviews made it sound good, and some friends I have online said it was worth the price.

This card has been almost a disaster.

I cannot run 144 Hz. How does a card ship that cannot run 144 Hz? I get nothing but crazy screen flicker if I try to use 144 Hz. I can use 120 Hz just fine, though. It doesn't matter if there is no visible difference; a GTX 950, 960, and 1060 all ran this same 144 Hz monitor without issue, with 2 other monitors plugged in as well.

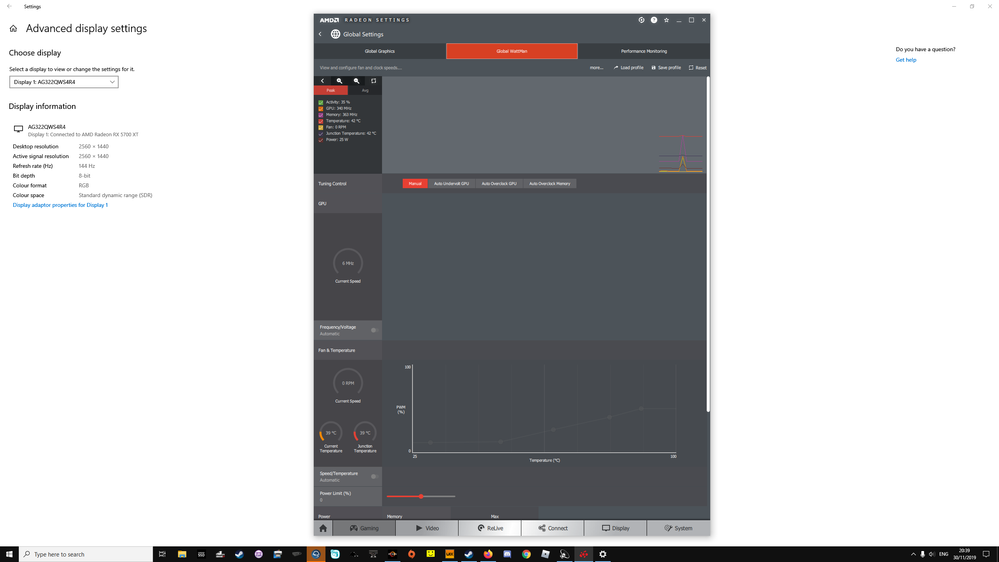

Next problem: I can't even run MSI Afterburner to monitor the card because of screen flicker... What? A monitoring program (an extremely popular one at that) should not cause a GPU to flicker. Whatever, I guess I'll swap to WattMan + GPU-Z to monitor the card.

That's when I noticed a problem once I started playing games: the wattage. What in the ever-loving F were you thinking, AMD? A powerhouse mid/high-range card whose power draw fluctuates at will during gameplay? Why? I've never had that issue on ANY nVidia card. A buddy wanted me to play some PUBG with him. I had the settings on low, but with ultra draw distance and such, to maintain a fluid 120 FPS (since I have to play at 120 Hz).

Everything seemed fine, then I started getting severe 40-50 FPS drops for no reason. I began watching WattMan during this. The card was pulling 60-70 W (for a 180 W card), and it dipped to 30 W during these FPS drops. What? First, why is a 180 W card only pulling 70 W during gaming? For the desktop and basic Windows stuff, I can understand that.

So, I crank PUBG to Ultra. I get 100-120 FPS depending on the situation, and I notice the card now pulling 120 W, but dropping down to 70 W, which also causes the FPS to drop to 50. Why? Why does it use less power for seemingly no reason? Keep in mind that this entire time my temps are under 60 °C because of my cooling settings; it's not throttling due to temperature.

Other things of interest, compared directly to the GTX 1060 (a far inferior card according to benchmarks):

- On the GTX 1060, I can mine crypto and game at the same time without seeing any FPS impact (I did this by accident a few times). I can't on the RX 5700.

- Some information programs keep saying my card has 18 compute units, but AMD clearly states it is supposed to have twice that. What?

- CS:GO gave me lower FPS than the GTX 1060: 250 compared to over 300.

- Single-player games like the RE2 Remake, maxed out, looked great and ran perfectly fine at about 90 FPS.

None of my build is overclocked.

Ryzen 7 1700X

32 GB 3000 MHz

NVMe drive on the board

RX 5700 VisionTek branded 8 GB

600 W 80 Plus rated EVGA PSU

Fresh Windows 10 Pro install 1 week ago.

No temperature issues.

No overclocking.

Nothing.

This RX 5700 has been extremely disappointing, to say the least. Since it's only been a few days, I will very probably return it and go back to my GTX 1060. There's no point in getting upset every time I want to game while I wait for supposedly good drivers to show up. The card has been out since July; we are in month 6 for it. That is really bad.

Love your CPUs. The Ryzen series has been awesome. I just don't know what the hell you did with your 7nm cards. I won't be purchasing your GPUs for a while because of this experience.

No problems at 144 Hz.

> I cannot run 144hz. How does a card ship that cannot run 144hz? Nothing but screen flicker like crazy if I try to use 144hz. I can use 120hz just fine though. It doesn't matter if there is no difference to the eye - a GTX 950/960/and 1060 all ran this same 144hz monitor without issue and with 2 other monitors plugged in as well.

I have this problem too, as do several other users:

That's right! And the driver still has a very slow OpenGL implementation (it was faster in the 2017 Crimson ReLive Edition) and broken Vulkan support (some textures are broken), and AMD keeps ignoring its community's strong appeals to add specific OpenGL and Vulkan extensions (VK_EXT_fragment_shader_interlock, GL_ARB_fragment_shader_interlock) that would help game developers and video game console emulators. Each driver release improves things slightly, but it also greatly worsens the driver's overall efficiency. I'm also considering migrating to NVIDIA, which doesn't go through these situations and fully supports game developers and emulators.

Just use the DDU (Display Driver Uninstaller) program, because not all monitors have gotten new drivers. If you know how to use this program, the problem will disappear.

It looks like you might have an issue, but the wattage is usually just a symptom: the card downclocks because it has nothing to do. So it may be a CPU bottleneck, if the AMD card is more CPU-heavy and your CPU was only just enough for your old card. Things like that can also change with Windows updates.

Actually, I'm having the exact same problem with an RX 590. In old games my card uses 50-60 W maximum, and GPU usage constantly drops to 0-40%; the fans aren't spinning, and temps are at 50-55 °C. Sometimes it works fine: at 80-100% GPU usage, FPS never drops below 60; at 0-40%, FPS drops to 40-50. It's literally impossible to play older games anymore. I had a 1050 Ti, and FPS was fine and constant; GPU utilization never dropped below 60%. I tried 4 different drivers with no luck. This card even struggles with 4K videos on YouTube, pushing my CPU load to 100%. I even get skipped frames at Full HD 1080p!!! It doesn't matter whether hardware acceleration is off or on. Sometimes it does use my RX 590: SOMETIMES I get 30% GPU load while watching videos. SOMETIMES...

Please try updating to 19.12.1, as it contains a fix that may help with your issue.

https://www.amd.com/en/support/kb/release-notes/rn-rad-win-19-12-1

Fixed Issues

- Radeon RX 5700 series graphics products may experience stutter in some games at 1080p and low game settings.

General consensus is the fix isn't a fix.

You might wanna put that back into the Known Issues section... Not trying to be mean here, but it seems people are still having the issue. I wouldn't call it 100 percent fixed, at least.

I still have that issue at 1080p. I've tried clean-installing that driver with DDU, and a clean Windows installation as well; neither worked. The newest drivers don't work either, so 5 months on, the issue AMD claimed to have fixed is still not fixed... Definitely returning my 5700 to buy an RTX 2060.

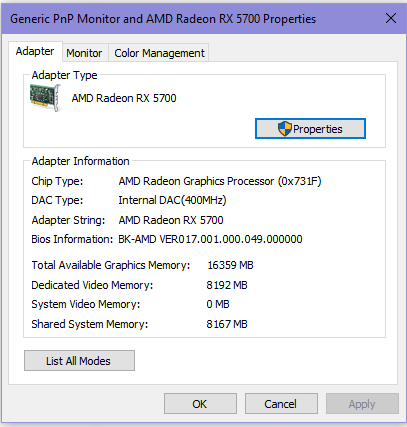

Can you check your BIOS version/date, please?

I think this is where things go wrong... My BIOS is from 2019/06/16 (when they released the card, if I'm not mistaken). They quickly changed something because of these problems. If you're unlucky, you have an old BIOS with the old problems...

Yep, that's the BIOS I'm talking about.

My guess is that those are cards that have been lying around in the store for a couple of weeks/months, and they never took the effort to fix them before selling them...

Knowing that ASUS is recalling some of those cards says enough, right?

So... did you get this fixed?

I'm still having these stutters like crazy, and I'm getting really tired of it.