OpenCL

- AMD Community

- Communities

- Developers

- OpenCL

- Kernel runs slower for local workgroup size greate...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Kernel runs slower for local workgroup size greater than 64

Hi bros, I'm a CS undergraduate student and I recently wrote a GPU path tracer using OpenCL. If you don't know what path tracing it's basically a method to generate photorealistic images by shooting rays through every pixel and applying light transport algorithms.

So the main reason I opened up this discussion is I noticed something strange. From what I gathered over the internet increasing the local workgroup size i.e. the number of work-items in a workgroup usually increases performance, more-so if they are a power of two and if the total work-items within a workgroup is a multiple of the wave-front size. I know the hardware groups up work-items into group of 64 threads called a wavefront.

Before I talk about the behviour i noticed in my path tracer I'd like to know some basic architectural things.

- Can there be more than 1 workgroup active on 1 CU at any given instance?

- The GCN white paper states that all the 4 SIMD lanes can have different wavefront active at any instance. Further it states that all 4 SIMDs can execute 1 operation simultaneously however later it states that out of the 7 different types of instructions each SIMD can execute a unique one. To be more specific here is the quote,

The CU front-end can decode and issue seven different types of instructions: branches, scalar ALU or memory, vector ALU, vector memory, local data share,

global data share or export, and special instructions. Only issue one instruction of each type can be issued at a time per SIMD, to avoid oversubscribing

the execution pipelines. To preserve in-order execution, each instruction must also come from a different wavefront

This means that in one clock cycle only 1 SIMD is allowed to do a memory read/write operation. Am I correct? If the 16 work items in a SIMD or more accurately in a quarter wave-front all access the same memory location, does the read gets coalesced or the memory is accessed 16 times serially?

My path tracer gives me the highest FPS when i set local workgroup size to 64. Increasing it further reduces FPS. I want to really understand why this is the case. I have 2 arrays of spheres and planes in the constant memory. Every work-item within the workgroup needs to perform an intersection test with every sphere 1 by 1. This means Each work-item will be accessing the same index, lets say,

sphere_arr[0]

before trying to check intersection with the next one. The only difference between the 2 cases are for local workgroup size of 64 we would have 1 wave front per workgroup, where as for 256 we would have 4 wavefronts per workgroup. Why does the setting with 4 wavefronts run slower?

- Labels:

-

OCL Performance and Benchmark

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. Yes, it can.

2. Each CU has 4 SIMDs and each SIMD has 16 ALUs. So, each SIMD takes 4 cycles to complete a wavefront (64 work-items). In each cycle, one vector instruction is issued to a SIMD and all the 16-ALUs within the SIMD executes the same instruction over 4 cycles. The instruction scheduler selects each SIMD one by one and issues one instruction per cycle. When there are enough wavefronts available, four SIMDs execute 4 wavefronts simultaneously .

3. Performance impact for accessing the same memory location depends on the memory type i.e. global, local or constant. As the "AMD OpenCL Programming guide" says:

2.1.1.2 Reads Of The Same Address

Under certain conditions, one unexpected case of a channel conflict is that reading from the same address is a conflict, even on the FastPath.

This does not happen on the read-only memories, such as constant buffers, textures, or shader resource view (SRV); but it is possible on the read/write UAV memory or OpenCL global memory.

From a hardware standpoint, reads from a fixed address have the same upper bits, so they collide and are serialized. To read in a single value, read the value in a single work-item, place it in local memory, and then use that location:

Avoid:

temp = input[3] // if input is from global space

Use:

if (get_local_id(0) == 0) {

local = input[3]

}

barrier(CLK_LOCAL_MEM_FENCE);

temp = local

Regarding choosing the right work-group (WG) size, here is an example where smaller sized WGs may be beneficial than larger-sized WGs. Each WG is assigned to a single CU. So, if there are not enough number of WGs to fill all the CUs, then some of the CUs will be idle, hence the GPU will be underutilized as well. In this situation, overall performance of application may be better for smaller sized WGs compared to larger-sized WGs.

Choosing the right WG size depends on many factors like application logic, gpu resource usage pattern, hardware architecture etc. Profiling information can be very useful to select appropriate WG size.

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To be clear on point 2, From what I inferred from the whitepaper,

Four SIMDs can be executing 4 wavefronts simultaneously but each SIMD can only issue a unique instruction in 1 cycle. This means if SIMD 1 issued a memory read or write instruction the other 3 can't issue that instruction in the same cycle?

Regarding the local workgroup size, I have a 640x480 image. The global work items is the same as image dimensions i.e [640,480]. When opting for local workgroup size (LWS) of [8,8] i.e. 64 total. I have 80x60=4800 total workgroups. My card is HD7950 which has a total of 32 Compute Units and considering each CU can have 10 wavefronts at an instance, I can have a total of 32*40 = 1280 wavefronts. Since LWS = hardware wavefront size hence I have a total of 4800 wavefronts.

For case 2 where LWS is [16,8] i.e. I have 40x60 =2400 workgroup. However LWS = 2 * 64 = 128. This means I have 2 wavefronts per workgroup. The total number of wavefronts remain 4800. In both cases the number of workgroups exceed the limit of 32 CUs the device is surely saturated with workgroups. Furthermore the device is also saturated with wavefronts as the Max wavefront at any given instance for my card is 1280. The only difference is that in Case 1, I have 1 wavefront per workgroup where as in Case 2, I have 2. However case 1 gives better performance and I don't understand why?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Four SIMDs can be executing 4 wavefronts simultaneously but each SIMD can only issue a unique instruction in 1 cycle. This means if SIMD 1 issued a memory read or write instruction the other 3 can't issue that instruction in the same cycle?

I think, you are confusing two different operations - 1) issuing an instruction to the SIMD 2) executing an instruction by the SIMD over 4 cycles. These two operation can happen simultaneously. Say, in a particular cycle, when a SIMD is selected to issue one instruction, other 3 SIMDs may be busy executing their own instructions issued in earlier cycles and the instruction(s) can be of similar type but expected to be from different wavefronts.

Regarding the application performance, it would very difficult to point out anything without checking the profiling data and other details The use-case I shared was just as an example. As said, there are many other factors to be considered as well. I would suggest you to profile the application for both the cases and study the profiling data to find out any performance bottle-neck when work-group size is greater than 64.

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

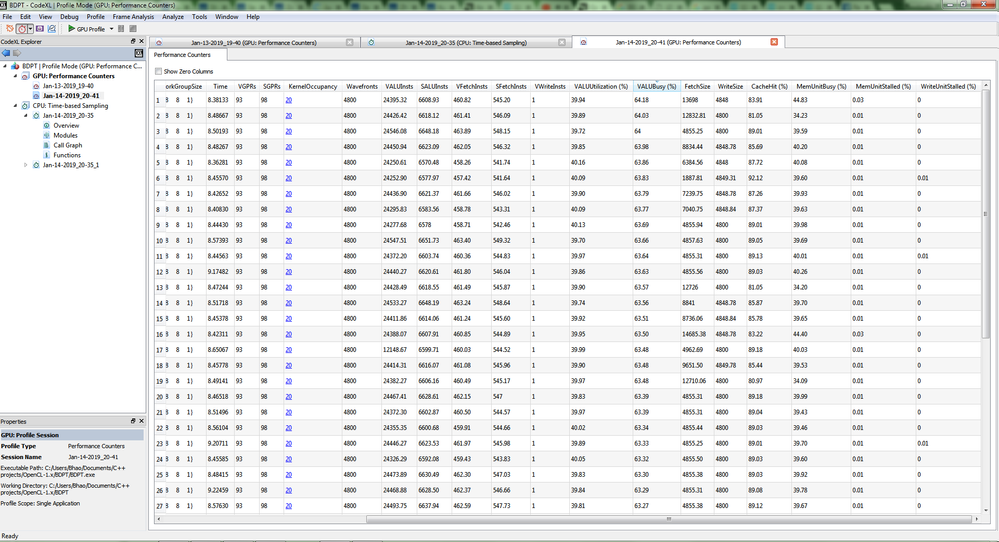

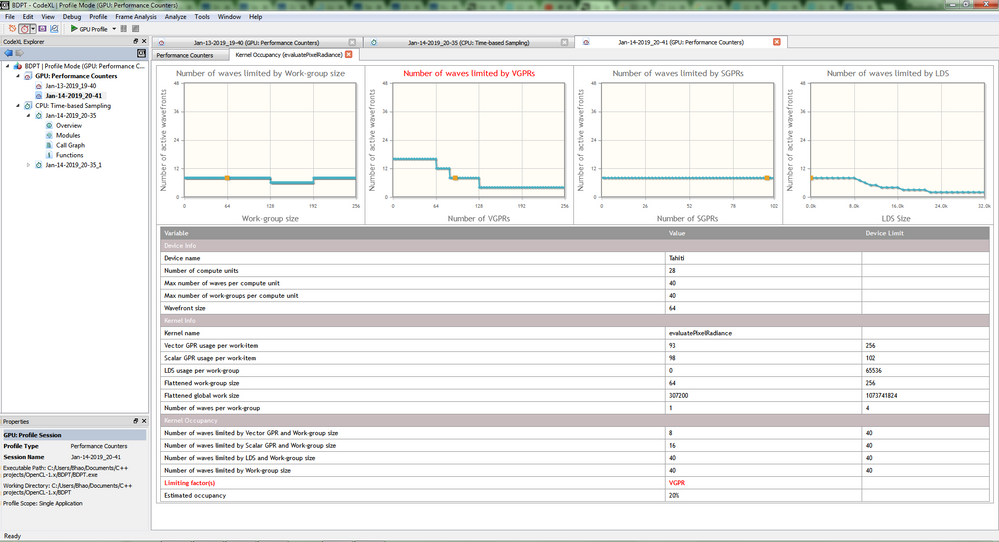

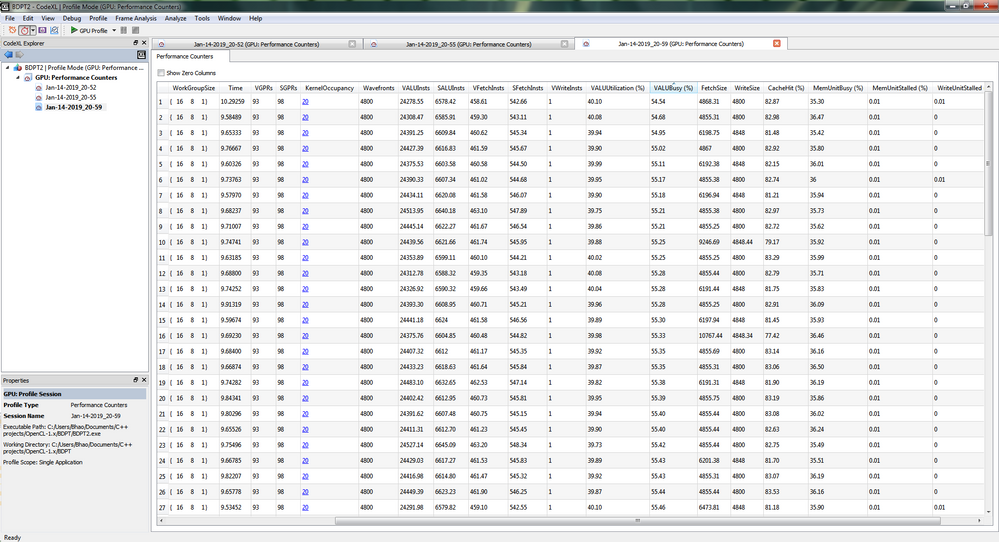

I'm new to kernel profiling. I ran CodeXL and profiled the data. Here are the screenshots.

The first two sets are for workgroup size 8x8=64 and the second one for 16x8 = 128. Not a significant difference from what I can tell but the kernel with 8x8 size had max VALU Busy = 64% while the second one is around 56%. I checked other things like VALU utilizations and Cache Hit % but they are all almost the same. One more difference is the "number of waves limited by LDS and WG size" is 32 for 16x8 and 40 for 8x8. I don't understand what this means tho. Can you give me any tips?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It looks like both the kernel occupancy graphs (i.e. 2nd and 4th snapshots) are same and indicate for workgroup size 64.

"Number of waves limited by LDS and WG size" means the number of active wavefronts is affected by the amount of LDS used by the dispatched kernel. In your case, I don't see any LDS usage in the profiling data.

I have following suggestions:

- Due to high VGPR usage, the kernel occupancy is very low (20% only). Higher occupancy or more number of active wavefronts helps to hide the memory latency thus improve the overall application performance. Try to reduce the register usage to improve the occupancy.

- In general, the compiler takes a conservative approach during VGPR allocation. It assumes that the work-group size is 256 i.e. the largest possible work-group size, hence limits max. number of VGPRs per work-item. To allocate more number of VGPRs, the kernel should use required_work_group_size attribute which specifies to the compiler that the kernel is launched with a work-group size smaller than the maximum, allowing it to allocate more VGPRs. Please try this kernel attribute which may help in your case.

Another point, "CacheHit percentage" values seem little better in case of workgroup size 64(8x8) compared to workgroup size 128(16x8). It may also contribute to the performance gain.

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry about that I accidentally uploaded the wrong screen for the 2nd pic. Corrected it and it shows 2 waves per workgroup for 16x8 as expected now.

I'll try to look into reducing private float4 variables as much as I can. I'll try the attribute you talked about. About Cache HIt, yes the maximum and minimum for 8x8 are between 80-90 while the 16x8 one falls even to 70%. But I still don't understand the reason as to why this is happening.

If a compute unit can work on several wavefronts from different workgroups at a time it doesnt matter anymore if I have 1 or 2 waves per workgroup. Either way it's gonna assign the maximum number of wavefronts it can. My work-items don't access memory based on their IDs instead every work-item accesses memory in a predefined pattern. This means each workgroup is identical in it's access pattern. For example as I said earlier if there is an object array, each work-item traverses from 0 to object size, so I don't understand why would there be such a difference in CacheHit%.

Even if work-items were accessing based on spatial coherence (for e.g pixels closer access same memory) I should be getting a speed up instead, since increasing the size of the workgroup would mean the CU is filled with closer wavefronts first.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

One more difference is the "number of waves limited by LDS and WG size" is 32 for 16x8 and 40 for 8x8. I don't understand what this means tho. Can you give me any tips?

When work-group (WG) has more than one wavefront (WF), there is an upper limit of 16 work-groups (WG) per compute unit (CU).

So, in your case where work-group size is 128 or 2 WFs per WG, max. number of WFs per CU = 16 * 2 = 32

If a compute unit can work on several wavefronts from different workgroups at a time it doesnt matter anymore if I have 1 or 2 waves per workgroup. Either way it's gonna assign the maximum number of wavefronts it can.

Yes, that is true.

In this case, max. 2 WFs per SIMD can be assigned due to register usage limit. When there is small number of active WFs, sometimes WFs from different WGs can help the scheduler to choose independent instructions. I'm not sure if 1 WF per WG has an effect here also.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When work-group (WG) has more than one wavefront (WF), there is an upper limit of 16 work-groups (WG) per compute unit (CU).

So, in your case where work-group size is 128 or 2 WFs per WG, max. number of WFs per CU = 16 * 2 = 32

And why is that? I'd understand limiting due to LDS usage but why would workgroup size limit the number of waves to 32 when increasing WG size doesn't increase LDS usage.

What I mean is 2 wave per workgroup can be thought of the same as 2 different WG with 1 wave per workgroup, Since there is 0 LDS usage i think? The VGPR and SGPR usage remains same in both WG size cases. Then why does it limit the total wave fronts to 32?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That seems an architectural limit. As CodeXL user guide says about how work-group size influences the kernel occupancy calculation:

The first limit to the number of active wavefronts on the compute unit is the work-group size. Each Compute unit (CU), has up to 40 slots for wavefronts. If each work-group is exactly one wavefront, then the maximum number of wavefronts is:

WFmax = 40

Otherwise, if there is more than one wavefront (WF) per work-group (WG), there is an upper limit of 16 work-groups (WG) per compute unit (CU). Then, the maximum number of wavefronts on the compute unit is given by:

WFwgmax = min(16 * WFwg, WFmax)

where WFwg is the number of wavefronts per work group.

For more information, please check: "CodeXL->Help > Using CodeXL > GPU Profiler > Using the GPU Profiler > GPU Profiler Kernel Occupancy"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When work-group size is same as wavefront size (i.e. 64), sometimes few synchronization instructions/commands (for example, a barrier) can be dropped because a wavefront executes in lock-step. The same may not possible for a work-group having multiple wavefronts; otherwise it can produce wrong result. So, in some cases, work-group size as 64 may improve the performance.

Thanks.