Gaming Discussions (AMD Community)

2700X vs 8700K for gaming

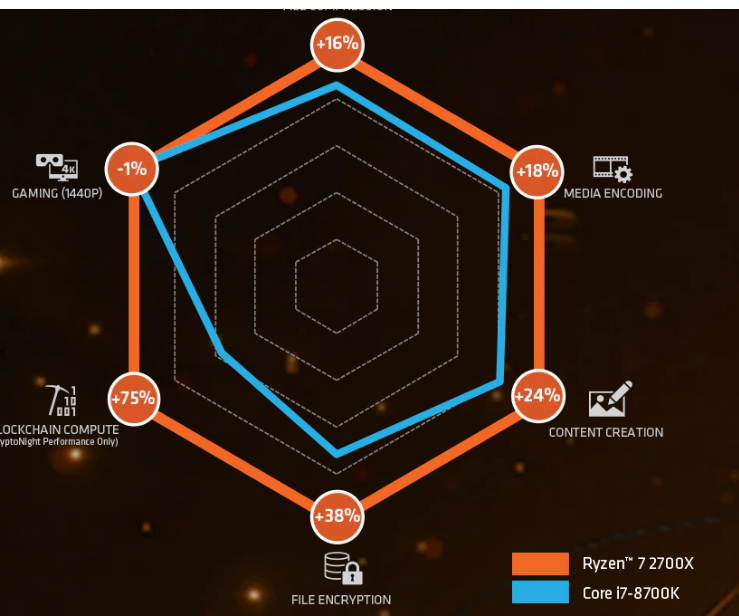

According to AMD's website, the 2700X is 1% weaker than the 8700K for gaming. All independent tests and benchmarks show roughly 10% in favour of the 8700K. So I can't trust any of the numbers in this chart, then?

Fine.


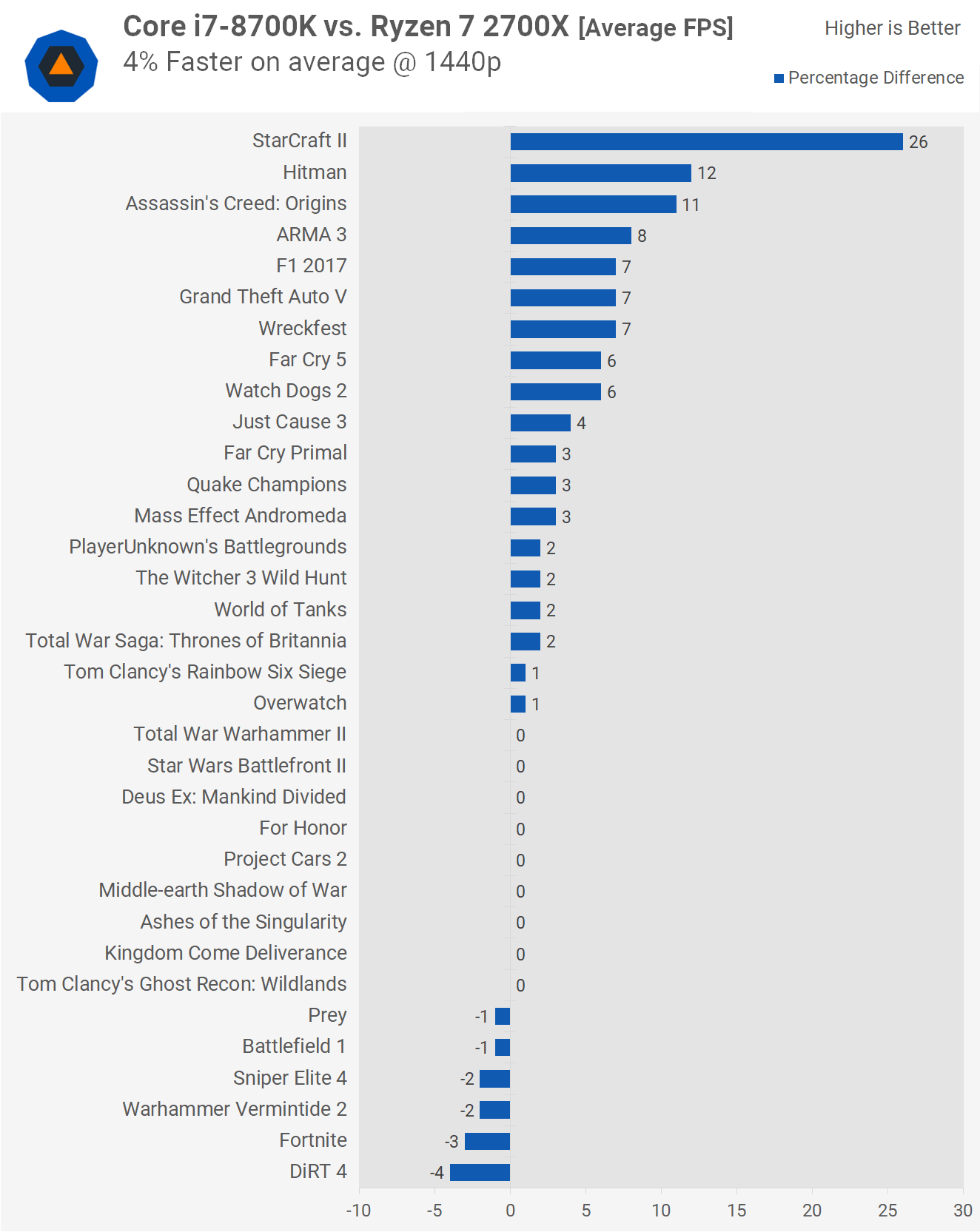

It's wise to always be a little skeptical of any manufacturer's claims, as they likely cherry-pick the results that flatter their product the most. That said, the independent tests I've seen show only a 4% difference at 1440p. The 3% gap between that and AMD's 1% figure is marginal and most likely comes down to the games each side chose to test: maybe AMD tested a game they didn't, or vice versa. As TechSpot's results* show, the 8700K performs only 10% better than the 2700X at 720p and sometimes at 1080p. It's highly unlikely that anybody is gaming at 720p on either of these CPUs. So yeah, believe what you want, but I'd say AMD's numbers are reasonable.

*https://www.techspot.com/review/1655-core-i7-8700k-vs-ryzen-7-2700x/page8.html
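The percentages traded in this thread are ratios of average framerates. Below is a minimal sketch of that arithmetic, assuming the gap is computed as (FPS_A / FPS_B - 1) × 100. The FPS values are hypothetical placeholders picked only to produce a 4% gap; they are not numbers from TechSpot or AMD.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Return how much faster A is than B, in percent, from average FPS."""
    return (fps_a / fps_b - 1.0) * 100.0

# Hypothetical averages (NOT measured data), chosen only to illustrate the math.
cpu_a_1440p, cpu_b_1440p = 104.0, 100.0

gap_independent = percent_faster(cpu_a_1440p, cpu_b_1440p)  # about 4%
gap_claimed = 1.0  # the 1% figure attributed to AMD's chart in this thread

print(f"independent: {gap_independent:.1f}%, claimed: {gap_claimed:.1f}%")
print(f"difference: {gap_independent - gap_claimed:.1f} percentage points")
```

Note that the "3% difference" is a difference of percentage points (4% minus 1%), not a further 3% performance gap.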


Interesting take. So if I pay more than 10% extra, I could get an almost 10% increase for gaming alone. Considering Intel sent the 9900K to a "third-party" benchmarking firm that handicapped the 2700X and twisted the results (confirmed), who cares what Intel has to say or sell? Lying corporate scumbags. You don't muddy the results of your benchmarks to gain customer respect. You do that by selling high-performance, cost-efficient solutions that are reliable and consumer-inspired. Like, say, AMD. I've been using AMD for professional 3D modeling/animation, 2D painting, video editing, and gaming since the K6. I have built many computers in over 25 years of IT, and I always recommend AMD no matter how deep my client's pockets are.


Hello,

It's a bit of an odd question. Why would you pick AMD's slides over the thousands of reviews of the 2700X available now? Did the 2700X just come out?

Companies shame themselves all the time, but who cares in the end? Are you going to stop buying Intel/AMD/Nvidia/tech products from now on?

Since when do customers around the world pay attention to that? We can't even pay attention to simple things like our planet, let alone tech!

Also, your question is a bit misleading and not very well written: the 1% figure is at 1440p, where games are GPU-bound almost all the time.

So yeah, you won't find much difference between the two at 1440p anyway, and even less at 4K.

It's stated almost everywhere that at higher resolutions you are GPU-bound, which is quite logical!
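A rough way to see why higher resolutions hide CPU differences is a simple bottleneck model: assume the CPU and GPU work on frames in a pipeline, so each frame takes about as long as the slower of the two stages. This is a simplification (real engines overlap work unevenly), and all millisecond figures below are hypothetical, not measurements of the 2700X, the 8700K, or any GPU.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Approximate FPS when CPU and GPU work is pipelined: the slower stage sets the pace."""
    return 1000.0 / max(cpu_ms, gpu_ms)

# Hypothetical per-frame CPU costs: the "fast" CPU needs 10% less time per frame.
cpu_fast, cpu_slow = 6.0, 6.6

# Hypothetical GPU costs: GPU work grows with resolution, CPU work mostly does not.
gpu_ms_by_res = {"720p": 3.0, "1440p": 10.0, "4K": 20.0}

for res, gpu_ms in gpu_ms_by_res.items():
    a, b = fps(cpu_fast, gpu_ms), fps(cpu_slow, gpu_ms)
    print(f"{res}: {a:.0f} vs {b:.0f} FPS ({(a / b - 1) * 100:.0f}% gap)")
```

Under these assumptions the CPU gap shows up as about 10% at 720p, while at 1440p and 4K both CPUs are waiting on the GPU and the gap drops to zero.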

And even at 720p, unless you play competitive shooters, you won't see the difference between 80 FPS and 140 FPS as long as both have decent frame times.

As a biological engineer, I already explained how the brain and visual system work in a very long post on OCN.

That post got deleted "because science". I had to fight with the admins to get it restored, and then I left OCN for allowing thread manipulation around new product launches.

Once again, stop referring to FPS; it's meaningless. Your brain doesn't check the FPS counter. It checks whether the time between frames is constant, not the number of frames itself.

Hence all the reviewers, YouTubers, companies, etc. lie simply for marketing purposes, never really able to deliver what would be the optimal frame delivery to trick your brain.

When you go to watch a movie, does it market its FPS, or does it simply deliver a fluid, stutter-free, lag-free series of images for your pleasure?!

Admittedly, manufacturers do market this as a feature, through G-Sync, FreeSync, or other ways of smoothing the frame time between frames at the monitor.

But strangely, I have found no major media outlet or YouTuber using frame time as the main measure of a game's fluidity for your brain, rather than for the hardware.

The bottom line: you can have 120 FPS, and if the frame time were constant it would be 8.3 ms between each frame, but let me state right now that this will never happen!
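The frame-time figures in this post follow from a single conversion: if frames arrived at perfectly even intervals, the gap between them would be 1000 ms divided by the framerate. A minimal sketch (the function name is just an illustrative choice):

```python
def frame_time_ms(fps: float) -> float:
    """Time between frames, in milliseconds, assuming perfectly even frame delivery."""
    return 1000.0 / fps

for rate in (60, 120, 144, 244):
    print(f"{rate:>3} FPS -> {frame_time_ms(rate):.2f} ms per frame")
```

This gives 16.67, 8.33, 6.94 and 4.10 ms respectively, which is where the 8.3 ms figure and the roughly 4 ms and 6.9 ms figures used in this post come from.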

That's why you can still see a difference between 60 FPS, 144 FPS, and 244 FPS: chasing the maximum possible framerate only gives you the illusion of fluidity.

Because even at 244 FPS, with an image on screen every 4 ms, if the next frame is not on time and arrives a bit late, at 6.9 ms, it will still look like 144 FPS before jumping back to 244 FPS.

And yes, your brain will still notice it. Again, your brain doesn't look at FPS; it checks whether there is any temporal difference between frames.
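To make the frame-pacing point concrete, the sketch below compares two hypothetical frame-time traces with the same average framerate: one perfectly even, one running at about 4.1 ms per frame with a late 6.9 ms frame every fourth frame. It reports average FPS, the "instantaneous" FPS of the worst frame, and the population standard deviation of frame times as a simple jitter measure. The traces and the choice of standard deviation as the metric are illustrative assumptions, not an established benchmarking method.

```python
import statistics

def pacing_report(frame_times_ms: list[float]) -> dict[str, float]:
    """Summarise a frame-time trace: average FPS, worst-frame FPS, and frame-time jitter."""
    mean_ms = statistics.fmean(frame_times_ms)
    return {
        "avg_fps": 1000.0 / mean_ms,
        "worst_frame_fps": 1000.0 / max(frame_times_ms),
        "jitter_ms": statistics.pstdev(frame_times_ms),  # 0 means perfectly even pacing
    }

# Hypothetical traces, both averaging 4.8 ms per frame (about 208 FPS).
even = [4.8] * 8
uneven = [4.1, 4.1, 4.1, 6.9] * 2  # mostly ~244 FPS pacing, one late frame in every four

for name, trace in (("even", even), ("uneven", uneven)):
    r = pacing_report(trace)
    print(f"{name:>6}: avg {r['avg_fps']:.0f} FPS, "
          f"worst frame {r['worst_frame_fps']:.0f} FPS, jitter {r['jitter_ms']:.2f} ms")
```

Both traces average about 208 FPS, so an FPS counter shows no difference, yet the uneven trace drops to roughly 145 FPS on every fourth frame and has about 1.21 ms of jitter versus 0 ms for the even trace.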

Why? Because your brain reconstructs images by looking forward in time, based on information already acquired in the past.

Since modern games are still heavy for current hardware, you will never find a game engine capable of rendering at a constant FPS.

Simply because a rendered game scene, where the player is gathering flowers in a calm land when suddenly a huge meteorite strikes, is still too heavy to compute.

Hope this at least clears things up the next time you ask what the FPS difference is between X and Y!