Reasons of glDrawElements(BaseVertex) having huge driver overhead?

I'm currently refactoring a complex renderer that is most likely CPU/driver bound.

In a nutshell:

- all rendering is done on a background thread (consumer) which is fed through a blocking queue of "rendering tasks"

- the main thread (producer) does a lot of small batch draw calls like "moveto(a, b) - lineto(c, d) - lineto(e, f) - ... - flush()"; inserting a task into the queue when necessary

- I designed the abstract interface so that it resembles modern graphics APIs (Metal/Vulkan), so I have buffers, textures, renderpasses and graphics pipelines

- the corresponding GL objects are cached, whenever possible (VAOs, programs, framebuffers), so that GL object creations are minimized

- GL state changes are not managed (with a few exceptions like framebuffer, VAO and program changes)

- buffer data uploads use GL_MAP_UNSYNCHRONIZED_BIT; should the buffer become full, I do a glFenceSync + glWaitSync (this doesn't happen too often, though)
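For illustration, the producer/consumer setup from the first bullet could look roughly like the sketch below. This is not the poster's code: `RenderTask`, `BlockingTaskQueue` and `render_loop` are hypothetical names, and a task is assumed to be a plain closure that the render thread (the one owning the GL context) executes.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <utility>

// Hypothetical task type: a closure executed on the render thread.
using RenderTask = std::function<void()>;

class BlockingTaskQueue {
public:
    // Producer side (main thread): enqueue a task and wake the consumer.
    void push(RenderTask task) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            assert(!closed_ && "push after close");
            tasks_.push_back(std::move(task));
        }
        ready_.notify_one();
    }

    // Signals that no more tasks will arrive; queued tasks are still delivered.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

    // Consumer side (render thread): blocks until a task is available.
    // Returns false once the queue is closed and fully drained.
    bool pop(RenderTask& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return closed_ || !tasks_.empty(); });
        if (tasks_.empty()) return false;
        out = std::move(tasks_.front());
        tasks_.pop_front();
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::deque<RenderTask> tasks_;
    bool closed_ = false;
};

// Body of the render thread: runs tasks in FIFO order until the queue is
// closed and empty. Returns the number of tasks executed.
std::size_t render_loop(BlockingTaskQueue& queue) {
    std::size_t executed = 0;
    RenderTask task;
    while (queue.pop(task)) {
        task();
        ++executed;
    }
    return executed;
}
```

In a real application `render_loop` would run on its own `std::thread`, with the main thread calling `push` for each flushed batch and `close` at shutdown.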

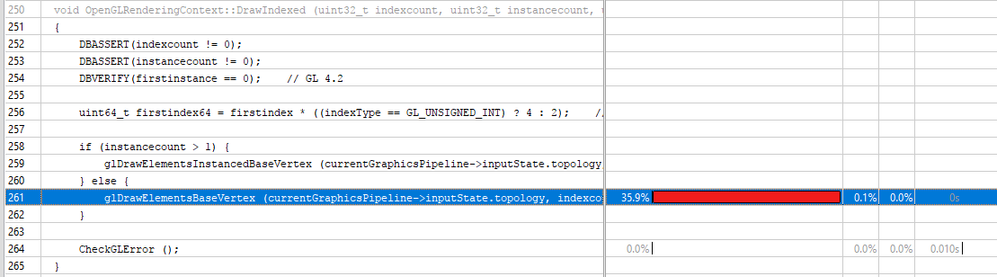

Despite my efforts, so far the rendering is incredibly slow. VTune shows that the majority of the CPU time is spent in glDrawElementBaseVertex:

The same thing happens on Intel cards. It looks very much like a driver-limited case; the funny thing, however, is that the old DX9 implementation is about 200x faster (hell, even GDI is faster).

So my question is: what might cause a drawing command to have such overhead? Or in other words: what state changes should I look out for to avoid this?

UPDATE: I also tried buffer orphaning and the STREAM_DRAW flag... no effect...




The problem is somewhat solved... It looks like the GPU was flooded with draw commands.
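The thread does not say how the flood of draw commands was reduced. One common way, shown here only as a sketch, is to accumulate many small "moveto/lineto" paths into one vertex/index pair and submit them with a single indexed GL_LINES draw. `LineBatch` and `Vertex` are hypothetical names, and `flush` only counts submissions instead of calling into GL.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vertex { float x, y; };

// Collects several polylines so they can be drawn with one indexed call.
// Each segment contributes an index pair, so separate paths stay disconnected
// when the batch is drawn as GL_LINES.
class LineBatch {
public:
    // Starts a new path at (x, y).
    void move_to(float x, float y) {
        vertices_.push_back({x, y});
        has_current_ = true;
    }

    // Adds a segment from the previous point of the current path to (x, y).
    void line_to(float x, float y) {
        assert(has_current_ && "line_to requires a preceding move_to");
        const auto prev = static_cast<std::uint32_t>(vertices_.size() - 1);
        vertices_.push_back({x, y});
        indices_.push_back(prev);
        indices_.push_back(prev + 1);
    }

    // A real renderer would upload both arrays here and issue a single
    // glDrawElements(GL_LINES, ...) call; this sketch only counts the call.
    void flush() {
        if (!indices_.empty()) ++draw_calls_;
        vertices_.clear();
        indices_.clear();
        has_current_ = false;
    }

    std::size_t vertex_count() const { return vertices_.size(); }
    std::size_t index_count() const { return indices_.size(); }
    std::size_t draw_calls() const { return draw_calls_; }

private:
    std::vector<Vertex> vertices_;
    std::vector<std::uint32_t> indices_;
    std::size_t draw_calls_ = 0;
    bool has_current_ = false;
};
```

With this shape, `flush` would be called when the batch is full or when pipeline state has to change, rather than once per path.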

However, after solving this, another problem arose with glMapBufferRange. Obviously I pass GL_MAP_UNSYNCHRONIZED_BIT, and when the buffer gets full I map with GL_MAP_INVALIDATE_BUFFER_BIT (a.k.a. orphan it).

For a few seconds the performance is OK, but then it suddenly drops to 4 fps, the reason being glMapBufferRange (as if it's waiting for something). Perhaps the multiple buffer/fence sync approach would be preferable... : /
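A minimal sketch of the multiple buffer/fence sync idea mentioned above, with the fences simulated by booleans so the logic runs without a GL context. `FencedRing` and `gpu_signal` are hypothetical; the comments name the GL calls a real version would make. It assumes glClientWaitSync for the CPU-side wait, since glWaitSync only stalls the GPU command stream and does not stop the CPU from overwriting data.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Models one buffer split into N regions, each guarded by its own fence.
class FencedRing {
public:
    FencedRing(std::size_t region_count, std::size_t region_size)
        : fence_pending_(region_count, false), region_size_(region_size) {
        assert(region_count > 0);
    }

    // Returns the byte offset of the region to write next. A wait happens only
    // if the fence guarding that region has not been signalled yet.
    std::size_t begin_write() {
        if (fence_pending_[current_]) {
            // Real code: glClientWaitSync(fence, GL_SYNC_FLUSH_COMMANDS_BIT,
            // timeout), then glDeleteSync(fence).
            ++waits_;
            fence_pending_[current_] = false;
        }
        // Real code: glMapBufferRange(target, offset, region_size,
        //     GL_MAP_WRITE_BIT | GL_MAP_UNSYNCHRONIZED_BIT).
        return current_ * region_size_;
    }

    // Call after the draw commands that read the region have been issued.
    void end_write() {
        // Real code: fence = glFenceSync(GL_SYNC_GPU_COMMANDS_COMPLETE, 0).
        fence_pending_[current_] = true;
        current_ = (current_ + 1) % fence_pending_.size();
    }

    // Simulation only: the GPU finished the commands that used `region`.
    void gpu_signal(std::size_t region) { fence_pending_.at(region) = false; }

    std::size_t waits() const { return waits_; }

private:
    std::vector<bool> fence_pending_;
    std::size_t region_size_;
    std::size_t current_ = 0;
    std::size_t waits_ = 0;
};
```

The point of the design is that the CPU only waits when it catches up with a region the GPU has not finished, instead of synchronizing on the whole buffer.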


I mark this answer as correct, but I didn't really "solve" the problem...

The GPU being flooded was "my fault" (or more precisely, the difference between DX and GL implementation logics).

The other problem I mentioned can be traced to the driver ignoring certain GL flags (like GL_MAP_UNSYNCHRONIZED_BIT). Orphaning only made things worse.

So I did the following: I mapped the buffer with the unsynchronized flag, and when it got full I simply used a glFenceSync + glWaitSync. With this approach the performance is still slower than DX9, but at least it doesn't drop...
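The "append until full, then fence and wait" scheme described above boils down to a linear sub-allocator over one buffer. The sketch below models only the offset bookkeeping; `StreamAllocator` is a hypothetical name, and the synchronization point is merely counted where real code would insert a fence and wait on it.

```cpp
#include <cassert>
#include <cstddef>

// Linear sub-allocator over a single streaming buffer.
class StreamAllocator {
public:
    explicit StreamAllocator(std::size_t capacity) : capacity_(capacity) {}

    // Reserves `size` bytes and returns their offset inside the buffer.
    std::size_t allocate(std::size_t size) {
        assert(size <= capacity_ && "allocation larger than the whole buffer");
        if (head_ + size > capacity_) {
            // Buffer full: real code would call glFenceSync and wait on the
            // fence so earlier draws have consumed their data before reuse.
            ++syncs_;
            head_ = 0;
        }
        const std::size_t offset = head_;
        head_ += size;
        return offset;
    }

    // Number of times the allocator had to wrap around and synchronize.
    std::size_t syncs() const { return syncs_; }

private:
    std::size_t capacity_;
    std::size_t head_ = 0;
    std::size_t syncs_ = 0;
};
```

Each returned offset would be passed to glMapBufferRange together with GL_MAP_UNSYNCHRONIZED_BIT; the larger the buffer relative to per-frame traffic, the rarer the synchronization.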

Another thought (and I'm going to open another discussion): uniform buffer objects are INCREDIBLY SLOW (and I really mean it).

What is my reasoning? When I started to refactor the 3D part of the application, I noticed that using uniform buffers with _every_ possible optimization still yields performance about ten times slower than the original GL 2.1 glUniformXXX approach. This is ridiculous. No matter the round-robin approach, no matter the fences, the driver _still_ blocks in glMapBufferRange ...
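For reference, a round-robin uniform buffer layout is usually computed along these lines. This is a sketch with hypothetical names (`UniformRing`, `align_up`); it only shows the offset arithmetic and does not explain or fix the blocking described above. The alignment is assumed to come from querying GL_UNIFORM_BUFFER_OFFSET_ALIGNMENT, and the resulting offsets would be passed to glBindBufferRange.

```cpp
#include <cassert>
#include <cstddef>

// Rounds `value` up to the next multiple of `alignment` (must be non-zero).
constexpr std::size_t align_up(std::size_t value, std::size_t alignment) {
    return (value + alignment - 1) / alignment * alignment;
}

// One large uniform buffer holding `slots_per_frame` per-draw blocks for each
// of `frames_in_flight` frames, reused in round-robin order.
struct UniformRing {
    std::size_t block_size;        // size of one per-draw uniform block
    std::size_t offset_alignment;  // GL_UNIFORM_BUFFER_OFFSET_ALIGNMENT value
    std::size_t slots_per_frame;
    std::size_t frames_in_flight;

    // Distance in bytes between two consecutive slots.
    std::size_t stride() const { return align_up(block_size, offset_alignment); }

    // Total buffer size to allocate up front.
    std::size_t total_size() const {
        return stride() * slots_per_frame * frames_in_flight;
    }

    // Byte offset of `slot` within the section used by frame number `frame`.
    std::size_t offset(std::size_t frame, std::size_t slot) const {
        assert(slot < slots_per_frame);
        return ((frame % frames_in_flight) * slots_per_frame + slot) * stride();
    }
};
```

A fence per frame section, inserted after the frame's last draw, would then guard reuse of that section.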