AMD Community > Communities > Developers > Devgurus Archives > Archives Discussions


Strange fluctuating completion time on APU GPU

Hi,

I'm working on a framework for classification and scheduling of computations on heterogeneous multi-device platforms.

I recently added a simple computation to the training-sample set: the sum-of-cols of a matrix (output[r] = Sum(input[r, i]) over all i, for every row r).

The kernel code is the following (it looks a bit strange because it is generated from an F# function):

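```c
kernel void SumCols(global float *matA, global float *c,
                    int matA_length_0, int matA_length_1, int c_length_0)
{
    /* One work-item per row: c[r] = sum of matA[r, i] over all columns i. */
    int r = get_global_id(0);
    float accum = 0.0f;
    /* As generated, the row stride is matA_length_0. Assuming that is the
       row count, it equals the column count only for square matrices,
       which is all this test uses. */
    for (int i = 0; i < matA_length_1; i++) {
        accum = accum + matA[(r * matA_length_0) + i];
    }
    c[r] = accum;
}
```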
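To validate the GPU output for every size in the sweep, a plain C reference of the same computation can help. This is a minimal sketch, not part of FSCL: the name `sum_cols_ref` is hypothetical, and it assumes the matrix is stored row-major as `rows x cols` floats, with one output element per row as in the kernel.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical CPU reference for SumCols: for every row r,
   c[r] = sum over i of matA[r * cols + i] (row-major storage). */
void sum_cols_ref(const float *matA, float *c, size_t rows, size_t cols)
{
    assert(matA != NULL && c != NULL);
    for (size_t r = 0; r < rows; r++) {
        float accum = 0.0f;
        /* Accumulate left to right, in the same order as the kernel loop. */
        for (size_t i = 0; i < cols; i++)
            accum += matA[r * cols + i];
        c[r] = accum;
    }
}
```

Since the GPU compiler may round or reorder differently, comparing against the GPU buffer with a small relative tolerance is probably safer than exact equality.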

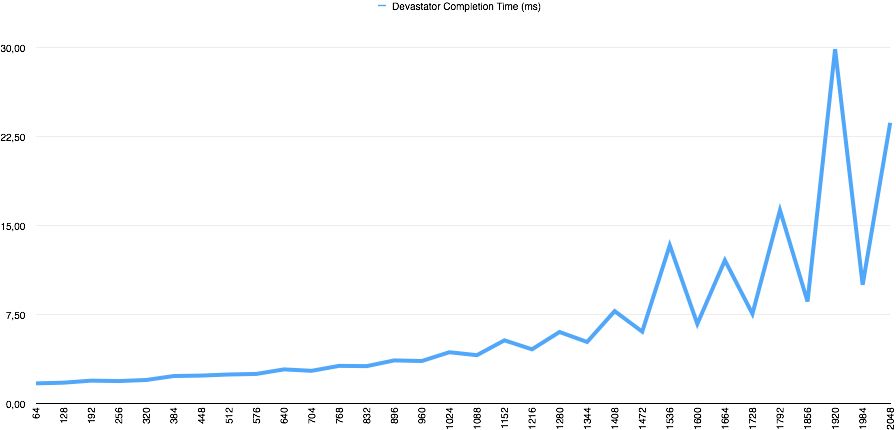

I'm getting strangely fluctuating completion times when varying the input matrix size from 64x64 to 2048x2048 (float32 elements) in steps of 64.

The integrated GPU is a 7660D in the A10-5800K APU.

The following graph shows the completion time as a function of the input size. A CSV with the raw numbers is available here: featureBasedScheduling/Sum By Cols-Table 1.csv at master · morellid/featureBasedScheduling · GitHub.

Any hints about what may cause this strange behaviour?

---

Hi,

The execution time depends on various factors besides the global data size (in this case, the matrix size). Could you please share the complete code (including the host side) so that we can check and test it? As the matrix size increases, the memory-access stride may play an important role. Do you see similar characteristics on other GPU cards, or only on the A10-5800K APU? (The numbers in the above link look confusing. Could you please share them in another format, say Excel?)
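The stride point can be made concrete. In SumCols, work-item r reads matA[r * cols + i] in iteration i, so neighbouring work-items are cols * 4 bytes apart. The sketch below counts the cache lines one wavefront touches per loop iteration; the 64-byte line size, the 64-wide wavefront and the function names are assumptions for illustration, not measured properties of the 7660D.

```c
#include <assert.h>
#include <stddef.h>

/* Byte distance between the floats read by work-items r and r + 1 in the
   same iteration of SumCols: matA[r * cols + i] vs matA[(r + 1) * cols + i]. */
size_t neighbor_stride_bytes(size_t cols)
{
    return cols * sizeof(float);
}

/* Cache lines touched by `wave` work-items that each read one float,
   `stride_bytes` apart, starting at a line-aligned address.
   Assumes stride_bytes and line_bytes are multiples of sizeof(float),
   so no float straddles two lines. */
size_t lines_per_iteration(size_t stride_bytes, size_t wave, size_t line_bytes)
{
    assert(wave > 0 && line_bytes > 0);
    if (stride_bytes >= line_bytes)
        return wave; /* every work-item hits a different line */
    /* Addresses advance by less than a line, so every line between the
       first and the last float is touched. */
    size_t span = (wave - 1) * stride_bytes + sizeof(float);
    return (span + line_bytes - 1) / line_bytes;
}
```

Under these assumptions, a 4-byte stride (adjacent work-items reading adjacent floats) needs 4 lines per iteration, while SumCols at cols = 2048 has an 8192-byte stride and needs 64.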

Regards,

---

Thanks for the response. I'm the developer of FSCL (FSCL/FSCL.Runtime · GitHub) and I'm currently working on it, so I'm not explicitly writing any host-side code. Anyway, I'm now writing the same test in plain OpenCL C; I'm pretty sure I'll get the same behaviour.

For the input matrix I use the CL_MEM_USE_HOST_PTR | CL_MEM_READ_ONLY flags, while the output is allocated with CL_MEM_USE_HOST_PTR | CL_MEM_WRITE_ONLY.

On the other devices of the platform (the A10 CPU and a 7970 Tahiti discrete GPU) I don't see any fluctuating behaviour. It only happens on the APU's GPU (maybe because it uses host RAM as global memory, i.e. a different memory subsystem?).

Here is the data I collected, in Excel format. The first three columns are the completion times on the three devices.

---

Thanks for sharing the Excel file. Indeed, the graph for the AMD A10-5800K APU is completely different from the other two, which have similar characteristics.

It's an interesting observation, and I want to run the same thing on our side. That's why I need the host code: it gives us the remaining information, such as the memory allocation (which you've already mentioned), the kernel configuration, how the kernel is launched and how the time is measured, so that we are on the same page. It would be a great help if you could provide the test project.
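On the "how the time is measured" point, one common approach (an assumption here, not necessarily what FSCL does) is to create the queue with CL_QUEUE_PROFILING_ENABLE, read CL_PROFILING_COMMAND_START and CL_PROFILING_COMMAND_END for each run via clGetEventProfilingInfo (both are nanosecond cl_ulong values), drop warm-up runs and report the median. The hypothetical helper `median_kernel_ms` below covers only the arithmetic, so it needs no OpenCL runtime.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

static int cmp_double(const void *a, const void *b)
{
    double x = *(const double *)a, y = *(const double *)b;
    return (x > y) - (x < y);
}

/* Median kernel time in milliseconds from per-run start/end timestamps in
   nanoseconds. The first `warmup` runs are discarded.
   Returns -1.0 if no runs remain or allocation fails. */
double median_kernel_ms(const uint64_t *start_ns, const uint64_t *end_ns,
                        size_t runs, size_t warmup)
{
    if (runs <= warmup)
        return -1.0;
    size_t n = runs - warmup;
    double *ms = malloc(n * sizeof *ms);
    if (ms == NULL)
        return -1.0;
    for (size_t k = 0; k < n; k++) {
        assert(end_ns[warmup + k] >= start_ns[warmup + k]);
        ms[k] = (double)(end_ns[warmup + k] - start_ns[warmup + k]) / 1e6;
    }
    qsort(ms, n, sizeof *ms, cmp_double);
    double med = (n % 2) ? ms[n / 2] : 0.5 * (ms[n / 2 - 1] + ms[n / 2]);
    free(ms);
    return med;
}
```

Reporting the median rather than the mean keeps a few outlier runs from shifting a single point of the graph.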

Regards,

---

I was discussing this with a friend of mine here at the University of Pisa. It -may- be due to a peculiar interaction between the access pattern and the cache's specific associativity and size.
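The associativity idea can be sketched with a simple set-index model: a line at byte address a maps to set (a / line_bytes) % num_sets. The function below counts how many distinct sets one wavefront of SumCols hits in a single iteration; when many work-items share few sets, conflict misses become likely once the associativity is exceeded. The 64-byte lines, 64 sets and 64-wide wavefront are hypothetical parameters, not the documented 7660D configuration, and the model ignores everything except set mapping.

```c
#include <assert.h>
#include <stddef.h>

/* Distinct cache sets hit when `wave` work-items each read one float in the
   same SumCols iteration: work-item r reads byte offset r * cols * 4 (i = 0;
   a constant i * 4 offset does not change the count when cols is a
   multiple of 16 and line_bytes is 64). Geometry is hypothetical. */
size_t distinct_sets(size_t cols, size_t wave, size_t line_bytes, size_t num_sets)
{
    assert(line_bytes > 0 && num_sets > 0 && num_sets <= 1024);
    unsigned char seen[1024] = {0};
    size_t count = 0;
    for (size_t r = 0; r < wave; r++) {
        size_t addr = r * cols * sizeof(float);
        size_t set = (addr / line_bytes) % num_sets;
        if (!seen[set]) {
            seen[set] = 1;
            count++;
        }
    }
    return count;
}
```

With these assumed parameters, cols = 2048 maps all 64 work-items to one set, cols = 1536 to 2 sets and cols = 64 to 16 sets; whether this correlates with the measured peaks would still have to be checked against the CSV.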

We are trying to do some math on the blackboard. Could you confirm whether you spot the same "issue" on your side whenever you have a chance to run it?

---

I'm going to post the C host-side code later this afternoon.

---

Can you please post the host-side code?

Regards,

---

I happened to spot that the sizes at which we get peaks seem to be multiples of 384, which is the maximum number of work-items that can execute at once on the whole card (64 x 6 CUs). I don't know whether it is related to the performance issue or not.
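The 384 pattern is easy to check mechanically. This sketch (the helper name `sweep_multiples` is hypothetical) lists which sizes of the 64 to 2048 sweep, in steps of 64, are multiples of a given period; those rows can then be compared with the peaks in the CSV.

```c
#include <assert.h>
#include <stddef.h>

/* Writes into `out` the sweep sizes N (64, 128, ..., 2048, step 64) that are
   multiples of `period`, e.g. 384 = 64 x 6. Returns how many were written
   (never more than `cap`). */
size_t sweep_multiples(size_t period, size_t *out, size_t cap)
{
    assert(period > 0);
    size_t n = 0;
    for (size_t size = 64; size <= 2048; size += 64)
        if (size % period == 0 && n < cap)
            out[n++] = size;
    return n;
}
```

For a period of 384 it yields five sizes: 384, 768, 1152, 1536 and 1920.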