Archives Discussions

- AMD Community

- Communities

- Developers

- Devgurus Archives

- Archives Discussions

- Transfer Rate exceed PCI-e bandwith

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Transfer Rate exceed PCI-e bandwith

Hi,

I need your help. I create an OpenCL program using pinned buffer.

The duration to transfer buffer from device to host is fast as I expected.

My question is, is it normal if the transfer rate of pinned buffer (size is 256 MB) exceed the PCI-e bandwidth (PCI-e 2.0 x 16)??

The transfer rate of pinned buffer is 204582 GB/s ( iget the transfer rate from AMD APP Profiler)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can someone help me??

I already search many times and I find that pinned buffer can increase transfer rate but it is limited to PCI-e bandwidth.

In my case, the transfer rate is exceed PCI-e bandwidth (8 GB in each direction)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ya true, pinned buffer can increase the transfer rate but it is limited to PCI-e bandwidth.

For more details check Types of Memory used by Runtime in the "AMD Accelerated Parallel Processing OpenCL Programming Guide" available in AMD APP SDK Reference materials.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for reply.

Is it applied in zero copy buffer too (zero copy buffer transfer rate is limited to PCI-e bandwidth)??

If it is true, why my pinned buffer transfer rate (device to host) exceed PCI-e bandwith (204582 GB/s > 8 GB/s)??

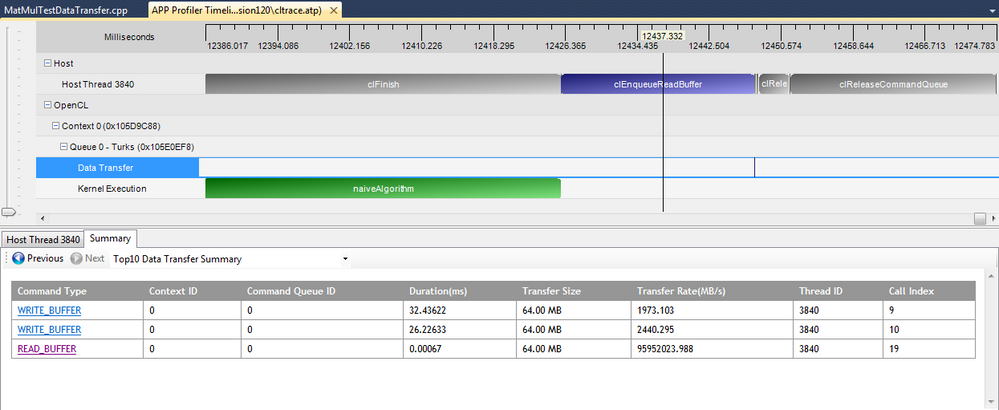

Is it a bug from AMD APP Profiler or there are mistakes in my program (Matrix Multiplication program using 2D work item) ??

I make a new test case with matrix 4096 x 4096 (64 MB) and the pinned buffer transfer rate is 93703 GB/s ( 93703 GB/s > 8GB/s).

Is it because of the asynchronous transfer ??

Here is the code:

#include <Windows.h>

#include <iostream>

#include <fstream>

#include <string>

using namespace std;

#ifdef __APPLE__

#include <OpenCL/opencl.h>

#else

#include <CL/cl.h>

#endif

#define MAX_SOURCE_SIZE (0x100000)

#define SIZE 4096

cl_context context = NULL;

cl_command_queue queue = NULL;

cl_program program = NULL;

//Function to read RandomNumber.txt and fill matrix

void fillMatrix(cl_float* data, int size)

{

//Get text file to read

ifstream myfile("RandomNumber.txt");

if(myfile.is_open())

{

for(int i = 0; i < size*size; ++i)

{

myfile >> data;

if(((i + 1) % size) == 0)

{

//ignore until the next line

myfile.ignore(INT_MAX, '\n');

}

}

myfile.close();

}

else cout << "Unable to open file";

}

//Function to print matrix

void printMatrix(cl_float* matrix, int totalsize, int size)

{

for(int i = 0; i < totalsize; i++)

{

cout<<matrix<<" ";

if(((i + 1) % size) == 0)

{

cout<<endl;

}

}

cout<<endl;

}

void MatrixMul(cl_mem d_A, cl_mem d_B, cl_mem d_C, int size)

{

cl_int err;

cl_kernel naive;

// Create Kernel Object Bound To Kernel Function

naive = clCreateKernel(program, "naiveAlgorithm", &err);

//Set size of global work item and work tem in each work goups

int globalsize = size;

int localsize;

if(globalsize >= 16)

{

localsize =16;

}else

{

localsize = globalsize;

}

size_t global_work_items [2] = {globalsize, globalsize};

size_t local_work_items [2] = {localsize, localsize};

// Setup Kernel Argument

err = clSetKernelArg(naive, 0, sizeof(cl_mem), (void *)&d_A);

err = clSetKernelArg(naive, 1, sizeof(cl_mem), (void *)&d_B);

err = clSetKernelArg(naive, 2, sizeof(cl_mem), (void *)&d_C);

err = clSetKernelArg(naive, 3, sizeof(cl_int), (void *)&size);

// Execute OpenCL kernel for Naive Algorithm

err = clEnqueueNDRangeKernel(queue, naive, 2, NULL, global_work_items, local_work_items, 0, NULL, NULL);

clFinish(queue);

//Release Kernel

err = clReleaseKernel(naive);

}

void Naive(cl_float* matrixA, cl_float* matrixB, cl_float* matrixC, int size)

{

int err;

// OpenCL device memory for matrices

cl_mem d_A;

cl_mem d_B;

cl_mem d_C;

// Allocate Device Memory For Input And Output

d_A = clCreateBuffer(context, CL_MEM_READ_ONLY , sizeof(cl_float)*size*size, 0, &err);

d_B = clCreateBuffer(context, CL_MEM_READ_ONLY , sizeof(cl_float)*size*size, 0, &err);

d_C = clCreateBuffer(context, CL_MEM_WRITE_ONLY | CL_MEM_ALLOC_HOST_PTR , sizeof(cl_float)*size*size, 0, &err);

// Copy Host Memory To Memory Device

err = clEnqueueWriteBuffer(queue, d_A, CL_FALSE, 0, sizeof(cl_float)*size*size, matrixA, 0, NULL, NULL); //Copy matrixA to d_a

err = clEnqueueWriteBuffer(queue, d_B, CL_FALSE, 0, sizeof(cl_float)*size*size, matrixB, 0, NULL, NULL); //Copy matrixB to d_b

MatrixMul(d_A, d_B, d_C, size);

// Copy Device Memory To Host Memory

err = clEnqueueReadBuffer(queue, d_C, CL_TRUE, 0, sizeof(cl_float)*size*size, matrixC, 0, NULL, NULL);

err = clReleaseMemObject(d_A);

err = clReleaseMemObject(d_B);

err = clReleaseMemObject(d_C);

}

//Main Function

int main(int argc, char **argv)

{

cl_int size = 4096;

//Matrix for input and output

cl_float * matrixA;

cl_float * matrixB;

cl_float * matrixC;

//Allocate and init memory for the host

matrixA = (cl_float *) malloc(size*size*sizeof(cl_float));

matrixB = (cl_float *) malloc(size*size*sizeof(cl_float));

matrixC = (cl_float *) malloc(size*size*sizeof(cl_float));

//Fill matrix

fillMatrix(matrixA,size);

fillMatrix(matrixB,size);

//print input for matrix A and B

cout<<"Input for matrix A :"<<endl;

printMatrix(matrixA, size*size, size);

cout<<"Input for matrix B :"<<endl;

printMatrix(matrixB, size*size, size);

cl_int err; // error code

cl_platform_id* platforms;

cl_uint platformCount;

cl_device_id device;

int platformtype = 0; //if 0 using amd app sdk but if 1 using intel sdk

clGetPlatformIDs(0, NULL, &platformCount); //get number of platform

platforms = (cl_platform_id*) malloc(sizeof(cl_platform_id) * platformCount); //create memory allocation for each platform

clGetPlatformIDs(platformCount, platforms, NULL); //get list of platform

clGetDeviceIDs (platforms [platformtype], CL_DEVICE_TYPE_GPU, 1, &device, NULL); //get list of devices

const cl_context_properties contextProperties [] =

{

CL_CONTEXT_PLATFORM,

reinterpret_cast<cl_context_properties> (platforms [platformtype]),

0, 0

};

// Create OpenCL context

context = clCreateContext(contextProperties, 1, &device, NULL, NULL, &err);

// Create a command queue

queue = clCreateCommandQueue(context, device, CL_QUEUE_PROFILING_ENABLE, &err);

//Load Kernel Source

FILE *fp;

const char fileName[] = "./MatMul_Kernel.cl";

size_t source_size;

char *source_str;

fp = fopen(fileName, "r");

if (!fp)

{

fprintf(stderr, "Failed to load kernel.\n");

exit(1);

}

source_str = (char *)malloc(MAX_SOURCE_SIZE);

source_size = fread(source_str, 1, MAX_SOURCE_SIZE, fp);

fclose(fp);

// Create Program Object

program = clCreateProgramWithSource(context, 1, (const char **) &source_str, (const size_t *) &source_size, &err);

// Build Program

err = clBuildProgram(program, 1, &device, NULL, NULL, NULL);

Naive(matrixA, matrixB, matrixC, size);

//Cleanup all memory

err = clFlush(queue);

err = clFinish(queue);

err = clReleaseProgram(program);

err = clReleaseCommandQueue(queue);

err = clReleaseContext(context);

// Display result of matrix multiplication

cout<<"Output for matrix C :"<<endl;

printMatrix(matrixC, size*size, size);

cout<<endl;

free(matrixA);

free(matrixB);

free(matrixC);

free(source_str);

return 0;

}

And here is the image from AMD APP Profiler:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I already read the AMD documentation. Looks like I am misunderstanding.

1. If I use standard buffer (Default Flag), the process of transfer happened two times :

pageable buffer (host) -> page-locked buffer / pinned buffer (host) -> buffer (device)

2. If I use pinned buffer d_C (CL_MEM_ALLOC_HOST_PTR), the transfer rate will increase but it is limited with PCIe bandwidth.

page-locked buffer / pinned buffer (host) -> buffer (device)

3. If I use zero copy, the transfer rate increase greatly since the GPU access kernel directly in the pinned host memory without do any transfer,

but the drawback is: the kernel execution time become slow especially for discrete GPU (because of slower access of GPU to read kernel).

Is my explanation correct??

So, if my explanation is correct, my pinned buffer transfer rate (CL_MEM_ALLOC_HOST) must not exceed PCI-e bandwith (204582 GB/s > 8 GB/s)

unless I use zero copy (CL_MEM_ALLOC_HOST + mapBuffer), is n't it??

Is OpenCL automatically use zero copy if the transfer rate faster than PCIe bandwidth??

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here is the image of GPU-Z that show my PCIe version:

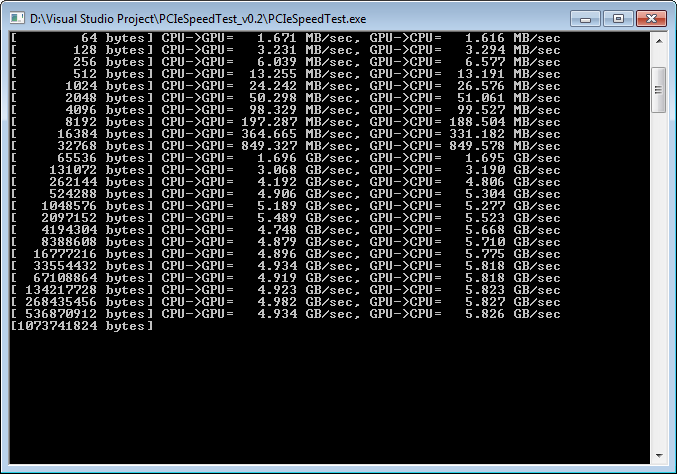

Here is the PCIe speed test result from program that provided by ATI:

From the result, it seems the limit of my PCIe 2.0x16 bandwidth is ~5.8GB/sec (in theory is 8GB/sec) when I copy from GPU to CPU.

Looks like there is no problem in my hardware, so why the transfer rate from device (GPU) to host(CPU) become higher than PCI-e bandwidth

whenever I don't use clEnqueueMapBuffer?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

use of CL_MEM_ALLOC_HOST_PTR cause it to use zero copy buffer. so there are no transfer across PCIe which explains that high transfer rates. refer to table 5.2 in AMD APP OpenCL programming guide.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for reply, Nou.

I become confused because the table just explain the relation between "the flag in clCreateBuffer" with "clEnqueueMapBuffer".

The table does n't explain the relation between "the flag in clCreateBuffer" with "clEnqueueReadBuffer/WriteBuffer".

So,according to the table 5.2 (I already understand in this part):

CL_MEM_ALLOC_HOST_PTR + mapBuffer -> zero copy

DEFAULT FLAG + mapBuffer -> copy (if device CPU, it become zero copy buffer)

And from my experience:

DEFAULT FLAG + enqueueWriteBuffer/ReadBuffer -> copy

From your explanation:

CL_MEM_ALLOC_HOST_PTR + enqueueWriteBuffer/ReadBuffer -> zero copy (this part make me confuse)

In conclusion, what make a buffer is zero copy buffer is the parameter CL_MEM_ALLOC_HOST_PTR and CL_MEM_USE_HOST_PTR although I use enqueueWriteBuffer/ReadBuffer.

Am I correct??

In documentation (5.6.2.3 Pre-pinned Buffers), to make a pinned buffer (it must not exceed PCIe bandwidth), we can use CL_MEM_ALLOC_HOST_PTR and CL_MEM_USE_HOST_PTR.

So, how to make a pinned buffer (not zero copy buffer) without the use of enqueueReadBuffer/WriteBuffer??

If I use enqueueReadBuffer/WriteBuffer with CL_MEM_ALLOC_HOS_PTR, it will become zero copy buffer, is n't it??

Or pinned buffer (with flag CL_MEM_ALLOC_HOST_PTR) always become zero copy buffer??

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oh I overlooked that you are talking about clEnqueueReadBuffer(). It may be a bug in profiler code. Try set it to unblocking read and call clFinish manually. only clEnqueuMap/Unmap can be zero copy so this is clearly a bug in profiler.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

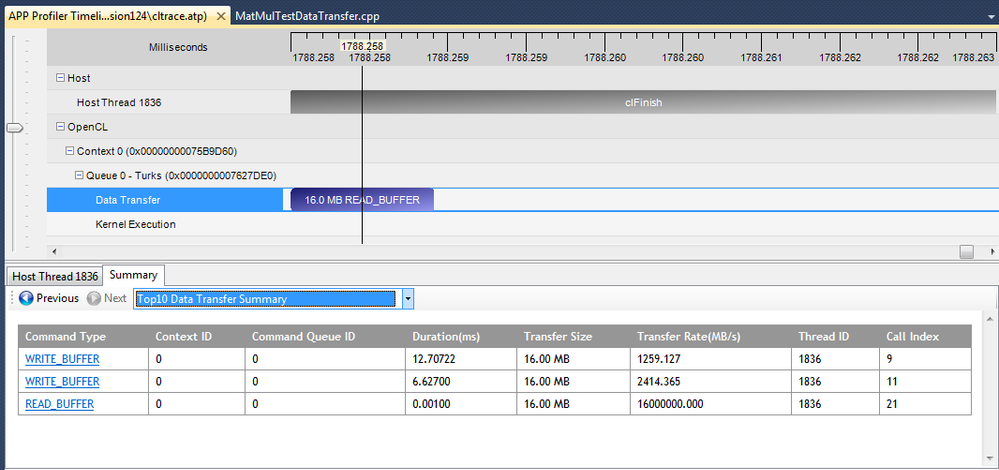

Thanks for your help but I already try that and the result is same,

using CL_MEM_ALLOC_HOST_PTR + enqueuereadbuffer = zero copy (transfer rate ~15000 GB/s)

| // Allocate Device Memory For Input And Output | |

| d_A = clCreateBuffer(context, CL_MEM_READ_ONLY , sizeof(cl_float)*size*size, 0, &err); | |

| d_B = clCreateBuffer(context, CL_MEM_READ_ONLY , sizeof(cl_float)*size*size, 0, &err); | |

| d_C = clCreateBuffer(context, CL_MEM_WRITE_ONLY | CL_MEM_ALLOC_HOST_PTR , sizeof(cl_float)*size*size, 0, &err); |

| // Copy Host Memory To Memory Device | |

| err = clEnqueueWriteBuffer(queue, d_A, CL_FALSE, 0, sizeof(cl_float)*size*size, matrixA, 0, NULL, NULL); //Copy matrixA to d_a | |

| clFinish(queue); | |

| err = clEnqueueWriteBuffer(queue, d_B, CL_FALSE, 0, sizeof(cl_float)*size*size, matrixB, 0, NULL, NULL); //Copy matrixB to d_b | |

| clFinish(queue); |

err = clEnqueueReadBuffer(queue, d_C, CL_FALSE, 0, sizeof(cl_float)*size*size, matrixC, 0, NULL, NULL);

clFinish(queue);

Are there a relation with discrete GPU because my GPU support VM (virtual memory)?? Or are there a relation with buffer size??

I use AMD App SDK 2.6 + AMD App Profiler 2.4

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey, I already use unblocking call in clEnqueueWriteBuffer and using clFinish(queue) but the result is zero copy.

And I already try to install AMD APP SDK 2.5 and 2.7 but using clEnqueueReadBuffer/WriteBuffer with CL_MEM_ALLOC_HOST_PTR still result in zero copy.

I already upgrade to AMD APP Profiler 2.5 and the result is zero copy too.

Can someone help me ?? I just want to get the result of pinned buffer, not zero copy buffer??

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

We tried to compile/run your code. However RandomNumbers.txt and MatMul_Kernel.cl are missing.

Could you please provide them?

Thanks,

AMD Support

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It is not a bug. It is zero-copy according to the documentation.

cl_mem d_C in your code is a buffer on your host because your specify the flag.

When you pass it as a kernel argument, the doc says " If the buffer is used in a kernel, the runtime creates a cached copy on the device, and subsequent copies are not on the fast path." So possibly your kernel will write to cache in device memory and after the execution the memory area is implicitly copied back (not at paged locked rate).

Even if you do not enqueue the read buffer command, the result already reside in your buffer d_C.