Archives Discussions

- AMD Community

- Communities

- Developers

- Devgurus Archives

- Archives Discussions

- Estimate how a kernel will take GPU ressources fro...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Estimate how a kernel will take GPU ressources from KernelAnalyser2 info VS clinfo data ?

Hi,

I programed a quite long kernel and it seems I cannot execute more than 1024 of it in parallel on my Tahiti card. I have a CL OUT OF RESSOURCE error code if my global_work_size parameter is > 1024 !! and I dont understand why....

Tahiti has work group size of 256 and 28 compute units, so basically with a very simple kernel using minimum set of ALUs, does it mean I would expect to run at least 28x256 times the same kernel accross the GPU at same moment ? If then I take into account the wavefront concept, I can run more work items.....

I understand that if my kernel is too big in register usage, I would probably reduce the ressources and thus reduce the ressources available to execute my kernel in "parallel" accross all the work items available and compute units available.

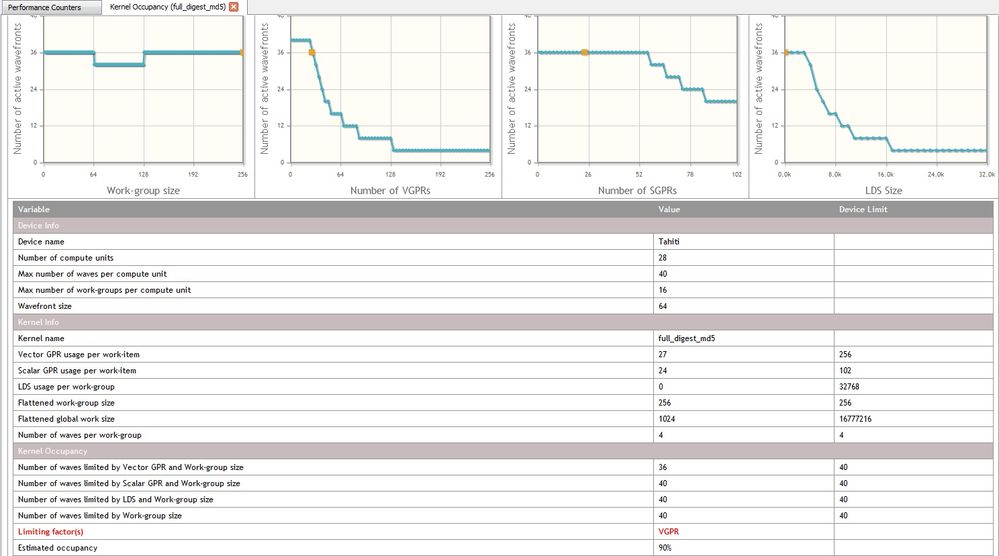

Is there a way with those figures above about Tahiti and the output data from KernelAnalyser2 to predict how many times I can run my kernel code in "parallel" using all the GPU ressources ?

Here is an example of data generated from KernelAnalyser2 for my code (I only extracted non-0 values here...) :

; ----------------- CS Data ------------------------

codeLenInByte = 2596;Bytes

userElementCount = 3;

; userElements[0] = PTR_CONST_BUFFER_TABLE

, 0

, s[2:3]

; userElements[1] = IMM_UAV

, 10

, s[4:7]

; userElements[2] = IMM_UAV

, 11

, s[8:11]

extUserElementCount = 0;

NumVgprs = 27;

NumSgprs = 24;

FloatMode = 192;

IeeeMode = 0;

ScratchSize = 0;

;COMPUTE_PGM_RSRC2 = 0x00000098

COMPUTE_PGM_RSRC2:USER_SGPR = 12

COMPUTE_PGM_RSRC2:TGID_X_EN = 1

Here is the codeXL analysis on Kernel Occupancy:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Global Work Group Size should not pose any problems for out of resource usage.

As I understand, the LDS, Register Usage are the key factors.

If you can run 1 workgroup (with global work group size = 256), it means that LDS and Register Usage are just fine so that compute units can run a workgroup.

I dont understand, why OUT_OF_RESOURCE should occur only when you run more than 4 workgroups. This is not LDS or Register related then.

This looks more like a lazy buffer allocation related error. What is the size of global-memory buffers (cl_mem) that you use for your kernel?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Are you sure, you set the global_work_size parameter and not the local_work_size parameter of the clEnqueueNDRangeKernel() function?

Because on Tahiti (and on any GCN chip) the maximum for local_work_size is exactly 1024. clEnqueueNDRangeKernel() has many parameters, easy to make a mistake.

Although on the sceenshot and on the .isa disasm footer it seems ok 😕

If everything ok, then you should be able to launch millions of this kernel. (In code size you can go up to 32KB to say it's a big kernel for HD7700+ ![]() , because that's the size of the instruction cache)

, because that's the size of the instruction cache)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Sorry, it was finally my bad ... I realized indeed that in order to make memory access optimization during execution, I put my input buffer in constant memory (so in so called GDS) but at the end I am using too much of it when increasing global work items number ..... using __global keyword instead of __constant in the kernel parameter solved my ressource problem....

Indeed 1 kernel is using in constant memory a vector of 16x32bits = 16*4 bytes = 64 bytes, so when using 1024 kernels I am at limit of 64kbytes for GDS.... that explains why I got the OUT OF RESSOURCES error code.

However for the moment my kernel is finally using registers and private memory and only 1 load and 1 store in global memory, so for now usage of constant memory is not justified, so I guess even if I run million of it in "parallel" the use of global memory at start and end of kernels will have no impact on bandwidth rather than using constant/cache memory ?

For info,my kernel number #k is reading in same big big vector memory at position k with a granularity of uint16 size .... so each kernel will extract a 16x4 bytes vector at start of its execution, put it in a local register/private memory and then process it .... at the end of processing, 2 vectors of 64bits are written in global memory.

Just a question: I would like to read the Tahiti HW register called "HW_ID" to see for each kernel work item #k the usage of wavefront, computing unit etc... Do we have intrinsics in AMD APP SDK so that OpenCL kernel compiler could generate appropriate assembly code to access the HW_ID register and store it in a desired variable ? (please refer to Table 5.6 and 5.7 in http://developer.amd.com/wordpress/media/2012/12/AMD_Southern_Islands_Instruction_Set_Architecture.p...)

Obviously my code would not be compatible with other GPU architecture using such kind of code, but it's for benchmark purpose and to understand how my kernel execution is running over time and ressources .....

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

The purpose of constant memory is to provide constants that are the same for all the work_items (or at least all the work_items in a specific wavefront). If you try to access them in a way that you'd access from global memory, then the compiler will go nuts while indexing every single constant for each work_items in a sequential way. So you did it good to place work_item specific data into global memory. Accessing const with an index that can be different on each work_item is slow.

Constant memory had a limit in the older VLIW architecture (32KB dedicated constant memory If I remember well). On GCN constants are read from global memory but through the scalar alu and a dedicated constant cache. So basically there is no limit, but I guess the old VLIW limit still exists. On GCN there are 105 S registers to hold constants before using them and the compiler cannot load 100KBytes of them anyways.

GDS memory is the same thing as LDS memory except that it belongs to all worki_tems, not just to a single work_group. And AFAIK you can't access GDS from OpenCL language.

HW_ID: No OpenCL support for that as well. And I bet no AMD_IL support too. You gotta go down to ISA level to reach it. ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Indeed realhet, I used constant memory in the wrong way, thanks for clarifications.

About the HW_ID and other registers related to Southern Island ISA, I thought OpenCL compiler shall permit to use asm("....."); inlining conventions with clobbering indication ?

I dont want to break the compiler optimization/efficiency, but I at least need to see if I can ask the compiler to store this register in a given variable