Archives Discussions

- AMD Community

- Communities

- Developers

- Devgurus Archives

- Archives Discussions

- Global synchronization inside the kernel

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Global synchronization inside the kernel

Hi,

I'm facing with the following problem: I have to use LDS for a relatively long time and also I need to gather/scatter data across all the LDS memory.

Scheduling more than one kernel is not an option because I'll have to do 1024 [paralell LDS jobs] interleaved with 1024 [LDS gather operations]. In the final thing I gonna need 192K [paralell LDS jobs] per second, so that really isn't the clEnqueue's area.

WorkGroupSize=64, Total WorkItems=4x gpu streams, all WorkItems fit in LDS: guaranteed.

I tried this way:

if(lid==0){

int dstCnt=LoopIdx*cb->GroupCnt; //value to wait for after all workgroups are done with the atomic_incs

atomic_inc(&(out->globalCntr)); //inc for this workgroup

while(out->globalCntr!=dstCnt){} //wait

}

But I'm totally not trusting this (because I don't know if caching can interfere this), and it's kinda slow.

Is there a way to use GDS for this?

Also as a side question: The gather operation will sum up float values. Is it a good idea to convert the floats to integers and sum then with atomic_adds? Or is there a way to atomically sum floats?

Thanks in advance!

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And suddenly: I've found the ds_gws_barrier instructions. Unfortunately I haven't found any documentation about it. If anyone knows it please tell me how it works.I gonna check it soon. What if it can make global synch across ALL the waves present in CUes o.o That gonna be jackpot

Hi realhet,

Yes it does synchronize all waves across all CUs.

I tried many global synch schemes and finally went to (global wave sync) gws_barriers.

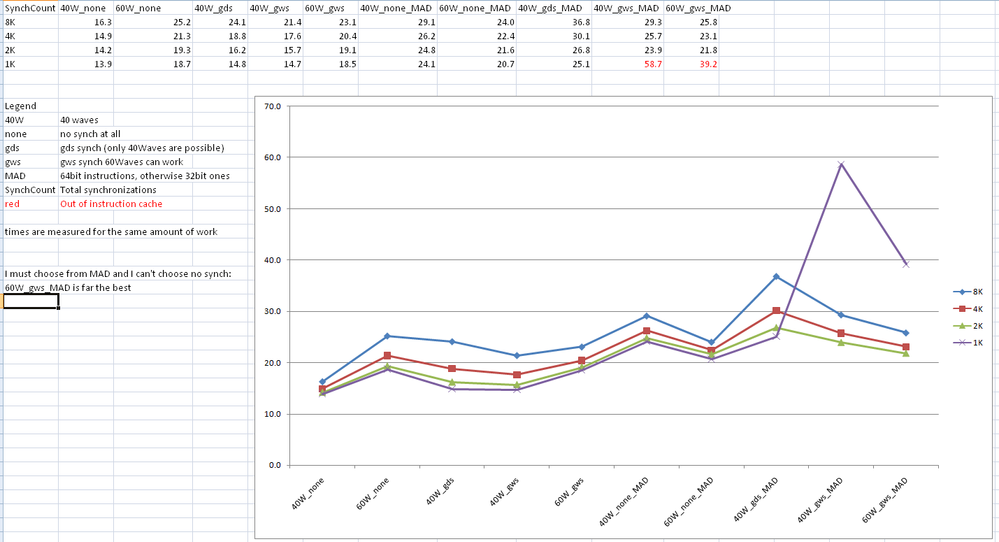

Below is a simple example from my c compiler (gcnc). (opencl doesn't support global synchronization.)![]()

The method can be seen in the C code.

The ISA instruction syntax is shown in the assembly code output just below

The example was cut from a 2D wave function where output A --> B and output B --> A.

global synch is a must unless you re-issue the kernel each time, greatly reducing performance.

Basically

1. Barriers must be initialized each time they are used.

2. Be careful to initialize the first barrier with the first wave to arrive, global id=0 may not work

3. I always use alternating 2 or 3 barriers, initialize barrier n+1 just before hitting barrier n

4. Barriers are initialized with the total number of waves running.

5. This is what I do, no guarantee it's the best way.

Note the assembler allows gs_xxxx insts, just change to ds_xxxx.

#include "../gcnc.h

#define BAR0 0 //define barrier IDs

#define BAR1 1

kernel void lccwave(

__global float *restrict wav0,

__global float *restrict vel0,

__global unsigned int *restrict GLB,

const int ops

)

{

register int gx,gy,gid,i,j,ret;

gx=get_global_id(0);

gy=get_global_id(1);

gid=256*gy+gx;

ret=atomic_inc(&GLB[0],999999); //must find first wave in!!

if(ret==0)gws_init(255,BAR0); //first wave initializes barrier 0

// [some code]

for(j=0;j<32;j++){ //---------------main loop

// [block of code]

if(gid==0)gws_init(255,BAR1); //wave 0 initialzes barrier 1

gws_barrier(BAR0); // hit barrier 0

// [block of code]

if(gid==0)gws_init(255,BAR0); //wave 0 initialzes barrier 0

gws_barrier(BAR1); // hit barrier 1

} //-------------end main loop

gws_barrier(BAR0); //exit hit barrier 0 required

}

//-----------------------------------MARKED UP ASSEMBLY OUTPUT-----------------------

.user elms= 3

.user_dimsi= 2

.user_sgpr = 12

.user_dims = 2

.uax.uav12

.uax.uav10

.uax.uav13

.uax.uav11

.uax.uav9

.ue(0) PTR_UAV_TABLE slot= 0 s[2:3]

.ue(1) IMM_CONST_BUFFER slot= 0 s[4:7]

.ue(2) IMM_CONST_BUFFER slot= 1 s[8:11]

.file 2,"cl\lccwave_2buf.cl"

.nvgpr: 21

.nsgpr: 32

x_set_nvgpr 127 //assembler directives

x_set_nsgpr 104 //assembler directives

x_set_ldsmax 0x400 //assembler directives

s_mov_b32 m0,0xffff

s_movk_i32 s103, 21

s_movk_i32 s102, 32

s_buffer_load_dwordx2 s[0:1], s[4:7], 0x04

s_waitcnt lgkmcnt(0)

s_mul_i32 s0, s12, s0

v_add_i32 v7, vcc, s0, v0

v_mov_b32 v8, v0

s_mul_i32 s1, s13, s1

v_add_i32 v6, vcc, s1, v1

v_mov_b32 v9, v1

.uavp to s[10:11]

s_mov_b64 s[10:11], s[2:3]

s_load_dwordx4 s[16:19], s[10:11], 0x60

s_load_dwordx4 s[20:23], s[10:11], 0x50

s_buffer_load_dword s24, s[8:11], 0x00

s_buffer_load_dword s25, s[8:11], 0x04

s_buffer_load_dword s26, s[8:11], 0x08

s_buffer_load_dword s27, s[8:11], 0x0c

s_buffer_load_dword s28, s[8:11], 0x10

s_buffer_load_dword s29, s[8:11], 0x14

s_buffer_load_dword s30, s[8:11], 0x18

s_buffer_load_dword s31, s[8:11], 0x1c

s_waitcnt lgkmcnt(0)

v_mov_b32 v12, v7

v_mov_b32 v13, v6

v_lshlrev_b32 v18, 8, v13

v_add_i32 v14, vcc, v18, v12

s_load_dwordx4 s[20:23], s[10:11], 0x48

s_waitcnt lgkmcnt(0)

v_mov_b32 v0, 0xf423f

v_mov_b32 v1, s28

buffer_atomic_inc v0, v1, s[20:23], 0 offen glc // initial sync code

s_waitcnt vmcnt(0) // initial sync code

v_mov_b32 v17, v0 // initial sync code

s_mov_b64 s[32:33], exec // initial sync code

v_cmpx_eq_i32 vcc, 0, v17 // initial sync code

s_cbranch_execz label_2 // initial sync code

v_mov_b32 v1, 255 // barrier code before loop

gs_gws_init v1 offset0:0 // barrier code

s_waitcnt lgkmcnt(0) // barrier code

label_2:

s_mov_b64 exec, s[32:33]

s_mov_b64 s[32:33], exec

v_mov_b32 v16, 0

label_4:

s_mov_b64 s[34:35], exec

v_cmpx_eq_i32 vcc, 0, v14

s_cbranch_execz label_10

v_mov_b32 v1, 255 // barrier code mainloop

gs_gws_init v1 offset0:1 // barrier code

s_waitcnt lgkmcnt(0) // barrier code

label_10:

s_mov_b64 exec, s[34:35]

gs_gws_barrier offset0:0

s_waitcnt lgkmcnt(0)

s_mov_b64 s[34:35], exec

v_cmpx_eq_i32 vcc, 0, v14

s_cbranch_execz label_12

v_mov_b32 v1, 255 // barrier code main loop

gs_gws_init v1 offset0:0 // barrier code

s_waitcnt lgkmcnt(0) // barrier code

label_12:

s_mov_b64 exec, s[34:35]

gs_gws_barrier offset0:1

s_waitcnt lgkmcnt(0)

label_5:

v_add_i32 v16, vcc, 1, v16

v_cmpx_gt_i32 vcc, 32, v16

s_cbranch_execnz label_4

s_mov_b64 exec, s[32:33]

gs_gws_barrier offset0:0 // barrier code end

s_waitcnt lgkmcnt(0) // barrier code

label_1:

s_endpgm

.end lccwave

endbye..

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And I just ran into a weird bug:

if(lid==0){

int dstCnt=LoopIdx*cb->GroupCnt;

atomic_inc(&(out->globalCntr));

while(out->globalCntr!=dstCnt){ }

}

out->out[gid]=gid+1; // <- here I check that every workitem is alive.

When checking the out[] array on the host side it contains (0,2,3,4,...63,64,0,66,67,...) meaning that the first workitem in the workgroups are lost. o.O

If I remove the while(){} then there will be no synch but at lest all the workitems are alive, out[] equals (1,2,3,4,...,TotalWorkItems).

Seems like the preditate flags from (lid==0) are inverted and left behind after the if block. But it only occurs when I use that while loop with the empty block.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

May be, the lid0 thread never completed the while....and your kernel timed-out?

Can you check for any error code?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No errors, it runs. And when I invert the while's criteria then it freezes as it have to.

I've checked the amd_il code I think the compiler goes nuts of that empty while ![]()

Look at this, lol:

...

ieq r70.__z_, r71.z, r70.z

if_logicalz r70.z

whileloop

mov r2518, l36 //l36=1,1,1,1 It's a compiled endless loop o.O

break_logicalz r2518

endloop

break

endif

else

endif //I've lost the thread already

...

...

break_logicalnz r66.z

endloop

...

break

endif <---!!!!!!!!!!!!! ???????????????

endloop

else <---I think this causes then lid==0 thread to idle

... <---here is the out->out[gid]=gid+1; part

And miraculously amd_il can pretty much eat this ![]()

Here's my loop, it's not that complicated as it seen from il:

for(int LoopIdx=0; LoopIdx<cb->LoopLen; LoopIdx++) {

//step to next dt

n0=n2; n2=(n0>=2)?0:n0+1; n1=(n2>=2)?0:n2+1; //calculate others from n2

//simulation

private float newy[SegSize];

for(int i=0, j=lid*SegSize*3; i<SegSize; i++, j+=3){ //y[] is LDS, newy is private

newy=a5*(y[j-3+n1]+y[j+3+n1]+y[j+n2])

+a4*(y[j-6+n0]+y[j+6+n0])

+a3*(y[j-3+n0]+y[j+3+n0])

+a2*y[j+n1]

+a1*y[j+n0];

}

//boundary conditions

newy[0]=sBegin?-newy[2]:newy[0];

newy[1]=sBegin?0 :newy[1];

newy[SegSize-1]=sEnd?-newy[SegSize-3]:newy[SegSize-1];

newy[SegSize-2]=sEnd?0 :newy[SegSize-2];

//write back to LDS

for(int i=0, j=lid*SegSize*3; i<SegSize; i++, j+=3) y[j+n2]=newy;

//global synchronization

if(lid==0){

int dstCnt=LoopIdx*cb->GroupCnt;

atomic_inc(&(out->globalCntr));

while(out->globalCntr!=dstCnt){ }

}

//check live workitems

out->out[gid]=gid+1;

} //end loop

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

realhet wrote:

And I just ran into a weird bug:

if(lid==0){

int dstCnt=LoopIdx*cb->GroupCnt;

atomic_inc(&(out->globalCntr));

while(out->globalCntr!=dstCnt){ }

}

out->out[gid]=gid+1; // <- here I check that every workitem is alive.When checking the out[] array on the host side it contains (0,2,3,4,...63,64,0,66,67,...) meaning that the first workitem in the workgroups are lost. o.O

If I remove the while(){} then there will be no synch but at lest all the workitems are alive, out[] equals (1,2,3,4,...,TotalWorkItems).

(first post)realhet wrote:

But I'm totally not trusting this (because I don't know if caching can interfere this), and it's kinda slow

I think it's the cache. lid==0 is not seeing the global memory in the while() statement().

I see the same thing in my globally synchronized kernels, different CUs don't agree on the

content of global memory, which may also be different from what the host sees.

Atomics are guaranteed to go through the cache but global memory references are not.

One option is to use only atomics in the while() statement.

Another way in GCN but maybe not available in opencl, is force global memory references to use the glc bit

in the tbuffer read and write instructions, which is what I do and it works fine and is fast.

(similar to fastpath / complete path in earlier architectures, where they clearly say the compiler decides.)

There may be opencl compiler options or flags to force the cache to global memory.

You might also try volatile, but I doubt it works for this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Rethinking caching: The value I monitor is incremented over time, so caching can't come up with future values.

Neither a crash occurs that could caused because the cache is not refreshed with the atomic_inc.

But OpenCL generates code that I can't even recognize. I think it eliminates that while loop completely. :S But if I invert the criteria it freezes as it should.

GCN: Yea, that glc flag is cool. I planned to the s_sleep instruction at there, so the 64 waves don't have to be so aggressive to the GDS or ram. But I think amd_il will enough for this project, only that frequent sync is the problem so far.

Anyways, I want this virtual instrument to make voices soon as it can ![]() So I go down to amd_il and do it properly with GDS. At least it will work on HD4xxx too. OpenCL is so elegant when it comes to writing expressions, and later I should give it another try...

So I go down to amd_il and do it properly with GDS. At least it will work on HD4xxx too. OpenCL is so elegant when it comes to writing expressions, and later I should give it another try...

It feels like this to me, doing unusual thing on OpenCL, lol -> http://f.kulfoto.com/pic/0001/0015/L80Cl14168.jpg

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How about...

if (lid == 0) {

update global memory();

}

barrier(CLK_GLOBAL_MEM_FENCE);

if (lid == 0) {

while(....);

}

I still dont know about the compiler issue.... If you think, if this is an issue -- Can you make a repro-case and share it with us? We will forward it to the engg team. Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It can't come up with future values but the problem is that it is never guaranteed to come up with any new value at all until the kernel completes. So if you have two workgroups that are spinning like that they may never force an L1 update and never see the updated value from the increment. Under the strict interpretation of the OpenCL standard a fence doesn't help here either and it seems that the AMD toolchain interprets the specification very literally on this point, instead of how the programmer would expect it to behave. The result of that is that because it never has to read that value from memory, it may not generate a read instruction at all and just carry its own value from the atomic.

would switch to use something like:

while(atomic_add(&out->globalCntr, 0)!=dstCnt){ }

which *should* work, if the atomics aren't re-ordered. I'm not 100% sure about atomic ordering, though, the spec is currently not very strict on these things.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Regarding the toolchain not genrating the "read":

Arguments to atomic* functions are always "volatile pointers".

So, as long as the global structure is declared volatile, the read instruction will be generated.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't think the spec is that explicit. volatile has never been intended as a concurrency feature in C, it's no guarantee, and even less so in OpenCL C.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

One another problem with the spinning approach is:

Workgroups are scheduled in batches on the GPU. So, Workgroups in Batch-1 can be infintely spinning waiting for other Batches to complete. The other batches dont get scheduled until Batch-1 completes and thats a classic deadlock.

Unless, One spawns just enough workgroups so that there is only 1 batch actively running -- one cannot solve this problem.

But -- when you are doing so -- You are already outside the boundaries of OpenCL and Portability.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What I've found is that this kind of thing won't work:

while(out->globalCntr!=dstCnt);

Instead, try using atomic_cmpxchg to force read the latest value:

whie(atomic_cmpxchg(&out->globalCntr, -1, -1) != dstCnt);

However as Himanshu mentioned, you need to be aware of how many groups are in flight at the same time. If your logic depends on having N work groups in flight, there is no guarantee the GPU will schedule N groups at the same time. If the GPU is multi-tasking, or fails to allocate all N groups to CUs for some reason, you can deadlock.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

*The forum engine keeps saying to me "An error occurred while trying to submit your post. Please try again." Dunno what's wrong o.o

Wanted to post this http://pastebin.com/TAapD1Rp and the forum engine (not the moderator) throwed it back with an error.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have faced this problem when the forum, for some reason, thinks that it is spam.

In my case, I struggled and finally found that the user-name that I had referenced in the post was considered as spam... Grrr....

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This new Jive platform finally works under IE10, but I for one cannot edit my messages, because it keeps importing my very first post of the topic, and I fear editing it, because I think it will edit my first post, not the one I clicked "Edit" upon. Also the advanced editor keeps saying "Page not Found", so I cannot attach files either.

To say something on topic: Micah said something at least a year ago, that he started implementing GWS and GDS for OpenCL. It seems that his attempts did not mature to a release candidate, but would it be much work to expose these features via proper vendor extensions? I got no clue how much effort it takes to enhance the compiler with these features are, so I'm just asking. Making barrier(CLK_GLOBAL_WAVE_SYNC) and perhaps giving a __share memory namespace that refers to on-die GDS. Can we expect something like this (which I have been since Micah hinted he is working on these features), or there is simply not enough programming capacity to introduce these features? I understand there are a handful of you guys, and there are heaps of features requested and priority must be made in the order in which they are implemented, but I feel this to be a waste that the HW capability has been inside the chips since the HD5000 series, this could be one of those kick@ss features that the competition is not capable of, and it is simply left unimplemented on the SW side and topics like this have to be made in order to get working,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Testing Realhet's pastebin data with Piano information removed...

Thank you all your feedbacks. I can't wait to try those on the weekend.

Until that TODO:

LeeHowes, vmiura: atomic in the while()"Workgroups are scheduled in batches on the GPU. So, Workgroups in Batch-1 can be infintely spinning waiting for other Batches to complete. The other batches dont get scheduled until Batch-1 completes and thats a classic deadlock."

Yea, I'm dealing with this:

- Simply don't let the number of WorkGroups go above 2*NumberOfCUes. (tho' it's weird that it didn't crashed at CU*2+1)

(On GCN I could use the s_sleep() instruction to let other waves increment and poll that flag. And have 'complete path' with the glc flat that drallan mentioned earlier)This time, the bottleneck is LDS memory and not the processing power, so I hope if I will not use that many waves, ther will be no deadlocks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm afraid it's still not very safe (I've tried it). I had this kind of code working, and it would still randomly lock up.

- Simply don't let the number of WorkGroups go above 2*NumberOfCUes. (tho' it's weird that it didn't crashed at CU*2+1)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You were right, it's so unreliable.

Even when I used only 12 waves on the 12 CUes, it can survive only 10-20 synchronizations only, not thousands of it. I tried with GDS this time, and it worked very well that I had so many freezes/crashes lol.

And that workaround when I put an escape limit into the synch whileloop doesn't solve the problem, because it will produce click noises in the sound, at least it not freezes.

Well, I'll try to do it with larger batches and let the clEnqueueNDRange() to synchronize them. It will cost additional delays and have to swap the contents of LDS and regs into memory more often.

Anyways, I mark Himanshu's answer as he was the first who told this will not work well.

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Maybe the engine thought that I'm spamming that specific processor brandname. ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Realhet,

I discovered the issue. We have a list of banned words and our site does stemming so that we don't have to type in all the various tenses/uses of every word. You used "Vibrations" in the piano explanation which turns out to be a variant of one of the banned words - which I can't insert in this post or it won't post. I moved it out of the banned word list and put it in another list, so now your original post should work.

Forums Administrator

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Let me tell my current 'research' in the topic:

On HD6970 I gave up global synchronization because it was so slow, and also a bigger problem that it was so unreliable: sometimes not all the threads started and caused a deadlock.

But on HD7770 it is totally smooth so far ![]()

It's a 10 CU card, so I launch 40 wavefronts and check if all of them are running at the start (by reading the HW_ID hardware register with s_getreg_b32). While testing I launched many hundred kernels and all the 40 waves are placed in well determined SIMD engines (4 SIMD in each CU).

Then I made the global synchronisation with 2 GDS counters: On for counting the loop (there is many thousand global synchs in a kernel), and another one for counting the waves which finished the actual loop.

The first wave is the master: It updates the loop counter when it sees that all other slave waves are ready. The Slave threads are just wait for the loop counter to increment.

There is also a timeout check (s_memtime), I don't let any wave run for more than a second. It's my own gpu watchdog.

I measured how effective is this global synch:

Total active instructions per stream: 3.27M In whole Capeverde card: 3.27M*10{cu}*64{st}= 2.09G

First test: without synch at different number of instructions in the loop

instructions_in_loop * loop_count -> kernel time

400* 8192 -> 16.3ms

800*4096 -> 14.9ms

1600* 2048 ->14.2ms

3200*1024 -> 13.9ms

(This shows how the card prefers big fat loops when minimal number of waves are running)

Here are the times when a global synch occurs in every loop:

400*8192 -> 24.1ms (47% slower)

800*4096 -> 18.8ms (26% slower)

1600*2048 -> 16.2ms (14% slower)

3200*1024 -> 14.8ms (7% slower)

In the loop there was only 32bit long test instructions. Using 64bit only bit ones it can slow down with additional 70%. 😕 That's what I call too dense instruction stream, and this can be avoided only by using 2x more waves (8xStreams amount). But I can't do this because of the synchronisations. But still getting 700GFlops out of an 1120TFlops card is not bad while doing global synch at 70KHz!!

And suddenly: I've found the ds_gws_barrier instructions. Unfortunately I haven't found any documentation about it. If anyone knows it please tell me how it works.

I gonna check it soon. What if it can make global synch across ALL the waves present in CUes o.o That gonna be jackpot ![]() Right now I'm doing the synch with some ifs/loops/gds ops, but maybe ds_gws_barrier is a hardware assisted solution to this.

Right now I'm doing the synch with some ifs/loops/gds ops, but maybe ds_gws_barrier is a hardware assisted solution to this.

Here's the pseudo-code:

gdsAdd(0,1) //increment wave counter

s_cmpk_eq_i32 SIMDId, 0 //only 1 master wave

s_cbranch_scc0 @Slave

@MasterSynch:

gdsRead(0,a)

v_cmp_eq_i32 vcc, grpCount, a

s_cbranch_vccnz @gotcha //when all waves have incremented the wave counter

s_sleep 1

breakOnTimeOut

s_branch @MasterSynch

@gotcha:

gdsWrite(0,0) //reset wave counter

gdsWrite(1,k) //update global loop counter (k=next loop index)

s_branch @Continue

@Slave:

@SlaveSynch:

gdsRead(1,a)

v_cmp_eq_i32 vcc, k, a

s_cbranch_vccnz @gotcha2 //when global loop index = next local loop index

s_sleep 1

breakOnTimeOut

s_branch @SlaveSynch

@gotcha2:

@Continue:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And suddenly: I've found the ds_gws_barrier instructions. Unfortunately I haven't found any documentation about it. If anyone knows it please tell me how it works.I gonna check it soon. What if it can make global synch across ALL the waves present in CUes o.o That gonna be jackpot

Hi realhet,

Yes it does synchronize all waves across all CUs.

I tried many global synch schemes and finally went to (global wave sync) gws_barriers.

Below is a simple example from my c compiler (gcnc). (opencl doesn't support global synchronization.)![]()

The method can be seen in the C code.

The ISA instruction syntax is shown in the assembly code output just below

The example was cut from a 2D wave function where output A --> B and output B --> A.

global synch is a must unless you re-issue the kernel each time, greatly reducing performance.

Basically

1. Barriers must be initialized each time they are used.

2. Be careful to initialize the first barrier with the first wave to arrive, global id=0 may not work

3. I always use alternating 2 or 3 barriers, initialize barrier n+1 just before hitting barrier n

4. Barriers are initialized with the total number of waves running.

5. This is what I do, no guarantee it's the best way.

Note the assembler allows gs_xxxx insts, just change to ds_xxxx.

#include "../gcnc.h

#define BAR0 0 //define barrier IDs

#define BAR1 1

kernel void lccwave(

__global float *restrict wav0,

__global float *restrict vel0,

__global unsigned int *restrict GLB,

const int ops

)

{

register int gx,gy,gid,i,j,ret;

gx=get_global_id(0);

gy=get_global_id(1);

gid=256*gy+gx;

ret=atomic_inc(&GLB[0],999999); //must find first wave in!!

if(ret==0)gws_init(255,BAR0); //first wave initializes barrier 0

// [some code]

for(j=0;j<32;j++){ //---------------main loop

// [block of code]

if(gid==0)gws_init(255,BAR1); //wave 0 initialzes barrier 1

gws_barrier(BAR0); // hit barrier 0

// [block of code]

if(gid==0)gws_init(255,BAR0); //wave 0 initialzes barrier 0

gws_barrier(BAR1); // hit barrier 1

} //-------------end main loop

gws_barrier(BAR0); //exit hit barrier 0 required

}

//-----------------------------------MARKED UP ASSEMBLY OUTPUT-----------------------

.user elms= 3

.user_dimsi= 2

.user_sgpr = 12

.user_dims = 2

.uax.uav12

.uax.uav10

.uax.uav13

.uax.uav11

.uax.uav9

.ue(0) PTR_UAV_TABLE slot= 0 s[2:3]

.ue(1) IMM_CONST_BUFFER slot= 0 s[4:7]

.ue(2) IMM_CONST_BUFFER slot= 1 s[8:11]

.file 2,"cl\lccwave_2buf.cl"

.nvgpr: 21

.nsgpr: 32

x_set_nvgpr 127 //assembler directives

x_set_nsgpr 104 //assembler directives

x_set_ldsmax 0x400 //assembler directives

s_mov_b32 m0,0xffff

s_movk_i32 s103, 21

s_movk_i32 s102, 32

s_buffer_load_dwordx2 s[0:1], s[4:7], 0x04

s_waitcnt lgkmcnt(0)

s_mul_i32 s0, s12, s0

v_add_i32 v7, vcc, s0, v0

v_mov_b32 v8, v0

s_mul_i32 s1, s13, s1

v_add_i32 v6, vcc, s1, v1

v_mov_b32 v9, v1

.uavp to s[10:11]

s_mov_b64 s[10:11], s[2:3]

s_load_dwordx4 s[16:19], s[10:11], 0x60

s_load_dwordx4 s[20:23], s[10:11], 0x50

s_buffer_load_dword s24, s[8:11], 0x00

s_buffer_load_dword s25, s[8:11], 0x04

s_buffer_load_dword s26, s[8:11], 0x08

s_buffer_load_dword s27, s[8:11], 0x0c

s_buffer_load_dword s28, s[8:11], 0x10

s_buffer_load_dword s29, s[8:11], 0x14

s_buffer_load_dword s30, s[8:11], 0x18

s_buffer_load_dword s31, s[8:11], 0x1c

s_waitcnt lgkmcnt(0)

v_mov_b32 v12, v7

v_mov_b32 v13, v6

v_lshlrev_b32 v18, 8, v13

v_add_i32 v14, vcc, v18, v12

s_load_dwordx4 s[20:23], s[10:11], 0x48

s_waitcnt lgkmcnt(0)

v_mov_b32 v0, 0xf423f

v_mov_b32 v1, s28

buffer_atomic_inc v0, v1, s[20:23], 0 offen glc // initial sync code

s_waitcnt vmcnt(0) // initial sync code

v_mov_b32 v17, v0 // initial sync code

s_mov_b64 s[32:33], exec // initial sync code

v_cmpx_eq_i32 vcc, 0, v17 // initial sync code

s_cbranch_execz label_2 // initial sync code

v_mov_b32 v1, 255 // barrier code before loop

gs_gws_init v1 offset0:0 // barrier code

s_waitcnt lgkmcnt(0) // barrier code

label_2:

s_mov_b64 exec, s[32:33]

s_mov_b64 s[32:33], exec

v_mov_b32 v16, 0

label_4:

s_mov_b64 s[34:35], exec

v_cmpx_eq_i32 vcc, 0, v14

s_cbranch_execz label_10

v_mov_b32 v1, 255 // barrier code mainloop

gs_gws_init v1 offset0:1 // barrier code

s_waitcnt lgkmcnt(0) // barrier code

label_10:

s_mov_b64 exec, s[34:35]

gs_gws_barrier offset0:0

s_waitcnt lgkmcnt(0)

s_mov_b64 s[34:35], exec

v_cmpx_eq_i32 vcc, 0, v14

s_cbranch_execz label_12

v_mov_b32 v1, 255 // barrier code main loop

gs_gws_init v1 offset0:0 // barrier code

s_waitcnt lgkmcnt(0) // barrier code

label_12:

s_mov_b64 exec, s[34:35]

gs_gws_barrier offset0:1

s_waitcnt lgkmcnt(0)

label_5:

v_add_i32 v16, vcc, 1, v16

v_cmpx_gt_i32 vcc, 32, v16

s_cbranch_execnz label_4

s_mov_b64 exec, s[32:33]

gs_gws_barrier offset0:0 // barrier code end

s_waitcnt lgkmcnt(0) // barrier code

label_1:

s_endpgm

.end lccwave

endbye..

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

gcnc. What's that and where can we get it? ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

vmiura wrote:

gcnc. What's that and where can we get it?

Hi vmiura,

The best answer is it's my attempt at building a GCN hardware specific C compiler/assembler that can run in AMD's opencl environment. The compiler is not like opencl and is not meant to be. When GCN first came out, I (and a few others) looked at ways of working with this new and amazingly powerful hardware architecture. After an assembler, I thought a C compiler could ease the task of writing assembly code, much like early C.

It's purely a personal project (and daunting task) but might be nice to open up someday as interest in GCN grows.

You can see what it looks like here gcnc_link.

Sorry, no downloads yet ![]()

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Very inspirational post! How good is to have arithmetic expressions and local functions with inline asm. Makes me wanna throw away macros and start to make something out of my pascal parser. Now at least I have a strong asm/end block for the start ![]()

I've made a small, reduced functionality arithmetic optimizer already, which used a pascal script for input and generated a static unrolled instruction stream (no ifs, no loops, just assignments). This combined with a high level compiler would be awesome.

Now I take a deep breath and go back to lowlevel.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi drallan,

Thx for the great example code! And congrats to your compiler! ![]()

But how can it fail even on a simple thing as this: (the result is a deadlock at ds_barrier :S)

AMD disasm tells me that I do the ds_gws encodings correctly. I restrict the whole kernel to the first local lane. The workgroupsize is 64, there are 2 workgroups only and yet it goes into an infinite loop :S

Is there something in the CAL Note Section to enable it?

I've found something called IMM_GWS_BASE // immediate UINT with GWS resource base offset. It's in a _E_SC_USER_DATA_CLASS structure. Is that the key? (Right now I don't fiddle with it because I allways ask the current OpenCL to make me a fresh skeleton kernel)

----------------------------------------------------------------------------------------

var dev:=cl.devices[1], kernel:=dev.NewKernel(asm_isa(

isa79xx

numVgprs 256 numSgprs 104

numThreadPerGroup 64 //workgroupsize=64

oclBuffers 0,0

s_mov_b64 exec,1 //restrict to first local id

s_cmpk_eq_i32 s2,0 //gid=0?

s_cbranch_scc0 @skip

v_mov_b32 v10,1 //I load 1 because there are 2 waves in total

ds_gws_init v10 offset0:1 gds

s_waitcnt lgkmcnt(0)

@skip:

__for__(i:=0 to 999, s_sleep 7) //very long dummy code

ds_gws_barrier v0 offset0:1 gds //v0 is only a dummy 0

s_endpgm

));

writeln(kernel.ISACode);

with kernel.run(64*2 {2 waves}) do begin

waitfor; writeln('elapsed: '&format('%.3f',elapsedtime_sec*1000)&' ms'); free; end;

kernel.free;

---------------------------------------------------------------------------------------

ShaderType = IL_SHADER_COMPUTE

TargetChip = t;

------------- SC_SRCSHADER Dump ------------------

SC_SHADERSTATE: u32NumIntVSConst = 0

SC_SHADERSTATE: u32NumIntPSConst = 0

SC_SHADERSTATE: u32NumIntGSConst = 0

SC_SHADERSTATE: u32NumBoolVSConst = 0

SC_SHADERSTATE: u32NumBoolPSConst = 0

SC_SHADERSTATE: u32NumBoolGSConst = 0

SC_SHADERSTATE: u32NumFloatVSConst = 0

SC_SHADERSTATE: u32NumFloatPSConst = 0

SC_SHADERSTATE: u32NumFloatGSConst = 0

fConstantsAvailable = 0

iConstantsAvailable = 0

bConstantsAvailable = 0

u32SCOptions[0] = 0x01A00000 SCOption_IGNORE_SAMPLE_L_BUG SCOption_FLOAT_DO_NOT_DIST SCOption_FLOAT_DO_NOT_REASSOC

u32SCOptions[1] = 0x00000000

u32SCOptions[2] = 0x20800001 SCOption_R800_UAV_NONARRAY_FIXUP SCOption_R1000_BYTE_SHORT_WRITE_WORKAROUND_BUG317611 SCOption_R1000_READLANE_SMRD_WORKAROUND_BUG343479

u32SCOptions[3] = 0x00000010 SCOption_R1000_BARRIER_WORKAROUND_BUG405404

; -------- Disassembly --------------------

shader main

asic(SI_ASIC)

type(CS)

s_mov_b64 exec, 1 // 00000000: BEFE0481

s_cmpk_eq_i32 s2, 0x0000 // 00000004: B1820000

s_cbranch_scc0 label_0007 // 00000008: BF840004

v_mov_b32 v10, 1 // 0000000C: 7E140281

ds_gws_init v10 offset:1 gds // 00000010: D8660001 0000000A

s_waitcnt lgkmcnt(0) // 00000018: BF8C007F

label_0007:

[tonns of] s_sleep 0x0007 // 00000FA0: BF8E0007

ds_gws_barrier v0 offset:1 gds // 00000FBC: D8760001 00000000

s_endpgm // 00000FC4: BF810000

end

; ----------------- CS Data ------------------------

codeLenInByte = 4040; Bytes

userElementCount = 0;

extUserElementCount = 0;

NumVgprs = 256;

NumSgprs = 104;

FloatMode = 192;

IeeeMode = 0;

ScratchSize = 0;

texResourceUsage[0] = 0x00000000;

texResourceUsage[1] = 0x00000000

... all zeroes

fetch4ResourceUsage[7] = 0x00000000

texSamplerUsage = 0x00000000;

constBufUsage = 0x00000000;

COMPUTE_PGM_RSRC2 = 0x00000084

COMPUTE_PGM_RSRC2:USER_SGPR = 2

COMPUTE_PGM_RSRC2:TGID_X_EN = 1

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

realhet wrote:

But how can it fail even on a simple thing as this: (the result is a deadlock at ds_barrier :S)

s_mov_b64 exec,1 //restrict to first local id

s_cmpk_eq_i32 s2,0 //gid=0?

s_cbranch_scc0 @skip

v_mov_b32 v10,1 //I load 1 because there are 2 waves in total

ds_gws_init v10 offset0:1 gds

s_waitcnt lgkmcnt(0)

@skip [sleep a lot]

ds_gws_barrier v0 offset0:1 gds //v0 is only a dummy 0

Because gid 0 always initializes the barrier. (I have done this sooooo many times...)

What happens if wave 1 arrives before wave 0 and hits the barrier? dead!

As is the code hangs my card but runs fine when I use the first arriving wave to initialize the barrier.

ret=atomic_inc(&p[0],999); //global var set to 0, first wave gets ret=0

execsave=exec;

exec=1UL;

// if(gid==0)gws_init(1,1); //fails on gid = 0

if(ret==0)gws_init(1,1); // works, first wave has ret==0

asm("s_sleep 7");

gws_barrier(1);

exec=execsave;

I think of this as, who syncs the synchronizer?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Finally it works, thank you ![]()

Finding the first thread was only one mistake I've made.

There was a stupid mistype: I typed 'ossfet' instead of 'offset' in one of the macros lol, and my asm just simply ignored it (I should improve it with error checking).

Then I realized that it's also a crash when I reinitialize the same barrier right after the ds_barrier instr. So that overlapped technique is a requirement, not an option.

I've made a chart comparing many things:

The rightmost is the one that the most effective method and that meets the requirements:

- It does a dense MAD stream (64bit instructions everyvhere)

- It can do 60waves (on a 10CU gpu) which is 20% better for the dense instruction stream than the 40 waves version.

With gds it is not possible to achieve more than 4 waves/CU, but with GWS I reached 60 waves. At 61 waves it introduces synch errors (but not crashes). At 80 waves there are so many errors. Up until 60 it seems really stable.

Thanks again, I'll mark your answer as the solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

On Windows, global sync was smooth until I did something like move the window around while the kernels are running. I figured it was partitioning CUs between compute and rendering or something.

Btw, why only 40 waves? It could run up to 400 waves, depending on the vgpr usage.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

40 waves because on a HD7770 that is the total number of SIMD units. (1{ShaderEngines}*2{ShaderArrayElements}*5{CUes/ShaderArrayElements}*4{SIMDes/CUes} this is how identify them with the HW_ID register)

100 waves would be the maximum amount of waves that can stay inside the 10 CUes, and only 40 of those are assigned to the SIMD units at any given time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yep, but that would give you only 10% occupancy which could be slow. But if it's just a test that doesn't care about performance then it doesn't matter.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Please note that these small numbers of waves are for the smallest GCN chip, which has only 10 CUes, not 32.

With 40 threads it is possible to utilize all the 640 streams but without any latency hiding and only with simple instructions. GDS synch is only works for 4 waves per CU, otherwise it's a deadlock.

With 60 threads (thanks for ds_gws_barrier) it was possible to put 6 waves into every CU, and this tolerates better the 'fat' instruction stream I'm planning to give them.

I measured 700 GFlops/s with MADs, while synching all the workitems at 220KHz. This means a synchpoint after every 400 v_mad_f32. On a 1126 GFlops/s card it's not that bad. ![]()

There's also a noticeable kernel launch overhead: I have to launch 100 kernel in every second because it has to be interactive.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

With 60 threads (thanks for ds_gws_barrier) it was possible to put 6 waves into every CU, and this tolerates better the 'fat' instruction stream I'm planning to give them.

Thanks for the data.

Agree, fat instructions do better when you go past full house (4waves/CU). Before GCN, all insns were fat.

For wave barriers (gws), I often use 8 waves/CU and I have not seen a problem. That's GCNs sweet spot for computation (ignoring latency). However, as himanshu points out, its up to your luck as far as when kernels are issued. When I use 8 waves/CU, I almost always use 256 work items/ group, only two groups / CU. Now I wonder if that makes a difference.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oups I had a mistake: forgot to use GLC while checking the synchronization with uav.

So the 8 wavefronts / CU is possible with GWS, and beyond this it is a crash.

w/CU 4 5 6 7 8

MAD 29 37 38 39 39 (exec time, ms)

ADD 21 34 34 34 34

When I raised it from 6 to 8, the exec time was only increased 1ms from 38, so some sleeping units was awaken.

Not the TFlops/s I can get out of it it is 838 (raised from 700, peak is 1126).

(And this leads to a problem in the piano: Faster processing leads to less string lengths given to each of the wavefronts. And it starting to reach lengths of the bass strings. It will be a miracle that how the whole thing will fit into the HD7770... But if it fits, it sits. ![]() )

)

Still there is room for MAD to be faster, but I think it's only can happen when the CU has all 10 waves inside.

Didn't tested for workgroup sizes bigger than 64. A test of that would be interesting 'tho.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oups I had a mistake: forgot to use GLC while checking the synchronization with uav. So the 8 wavefronts / CU is possible with GWS, and beyond this it is a crash.

w/CU 4 5 6 7 8

MAD 29 37 38 39 39 (exec time, ms)

ADD 21 34 34 34 34

When I raised it from 6 to 8, the exec time was only increased 1ms from 38, so some sleeping units was awaken.

Not the TFlops/s I can get out of it it is 838 (raised from 700, peak is 1126).

(And this leads to a problem in the piano: Faster processing leads to less string lengths given to each of the wavefronts. And it starting to reach lengths of the bass strings. It will be a miracle that how the whole thing will fit into the HD7770... But if it fits, it sits. )

Still there is room for MAD to be faster, but I think it's only can happen when the CU has all 10 waves inside.

Didn't tested for workgroup sizes bigger than 64. A test of that would be interesting 'tho.

LOL, now it is too fast ![]() . 838 of 1126 is very impressive, that's 1.5 flops per clock.

. 838 of 1126 is very impressive, that's 1.5 flops per clock.

Can you use MAC instead of MAD in some spots? I think mac is a short insn.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Wow thanks for MAC, now I'm at 960 GFlops/s with 230KHz synch ![]() I do convolution most of the time, so that's the proper instruction.

I do convolution most of the time, so that's the proper instruction.

(Gotta memorize that mad = mad+mac+madak+madmk. Even in my Mandelbrot example there's a spot for MAC.)

I also tried it with workgroup_size=256. And it worked without any slowdown. So the long piano string problem is no more a problem as I can ensure that every 4 adjacent wavefronts are using the same LDS memory.

Here's how it depends on speed: I have to fit the total number of string point into the whole card:

The whole instrument have 55K string points.

Total workitems: 10(cu)*8(wf)*64=5120

String points per workitems=10.74 -> 11

Longest string =11*64*4(wfs) = 2816 -> thats 2-3x more than actually needed, thanks for 256 workitems/workgroup

Iterations = 4096 (comes from 512 samples at 8x oversampling)

Maximum time = 10.6ms (512 samples @ 48KHz)

Estimated instruction count = 183 (based on actual HD6970 simulation, maybe I can optimize better)

Measured time with MAC's = 10.52ms sooooooo close! ![]() (That's 7.1ms on HD6970 which is 2.72 TFlops instead of 1.12)

(That's 7.1ms on HD6970 which is 2.72 TFlops instead of 1.12)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here's how a 10cu HD7770 'instrument' sounds in realtime ![]() https://soundcloud.com/realhet/gcn-piano-moonlight-mvt3-by

https://soundcloud.com/realhet/gcn-piano-moonlight-mvt3-by

(performed by vs120 on prog.hu) And I don't even use the synch yet, all strings are working on their own, separated. (Because I'm lameing to interconnect the strings that they not go to overload haha, but at least it works ![]() )

)