Archives Discussions

- AMD Community

- Communities

- Developers

- Devgurus Archives

- Archives Discussions

- Unexpected Cache Hit statistics

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Unexpected Cache Hit statistics

Hi All,

I was wondering if the cache hit statistics output by the AMD APP Profiler (v2.5) are reliable.

I was experimenting with a very simple kernel:

int iGID = get_global_id(0);

c[iGID] = a[iGID];

When I run the above kernel on AMD 7970, I get the CacheHit as low as 0.4%.

Now, since in this case the global memory accesses are coalesced, I was assuming that I should be getting a very high Cache Hit rate given the global memory accesses are cached.

When I run the following kernel:

int iGID = get_global_id(0);

c[iGID] = a[iGID] + a[iGID+1] + a[iGID+2] + a[iGID+3] +a[iGID+4]; // I am taking care of not overshooting the buffer size by last threads

I get CacheHit as 12%.

Again, I was expecting a very large CacheHit here.

I have played around these around with 4096 Threads and 256 WG Size. The array 'a' should easily fit inside the 16KB L1 cache per CU>

Are these numbers very low because:

The Profiler only samples a particular block, which if happens to be the first one, Cache Hits are low due to cold-misses (I know, it really can not account for these numbers but that is my only guess) ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Caches need to be warmed up first. If you are accessing a variable only once, then it will need to come from global memory for first time. This explains 0.4%. I think this is reasonable

As far as your second code goes, it could be due to the CU executing multiple workgroups.

If the caches are not fully associative, you can expect multiple workgroups to displace each other's data.

Not sure what is the cache architecture on 7970 is.

Do you have any idea?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Well, GCN Whitepaper says it to be 4-way set associative with 64 B cache line size. Per CU L1 cache size is 16KB.

In the second case, I tried to run following kernel with total 1024 threads(total) and the cache hit now is 14%.

int iGID = get_global_id(0);

c[iGID] = a[iGID] + a[iGID+1] + a[iGID+2] + a[iGID+3] + a[iGID+4]+ a[iGID+5]+ a[iGID+6]+ a[iGID+7]+ a[iGID+8];

Now, 1024 threads (total) or 16 Wavefronts is really not a big number for 7970 (it has 32 CUs).

Even if all the threads are somehow scheduled on one CU (7970 allows 40 Wavefronts/CU to be active at a time), the array 'a' of size 4K very nicely fits in the 16K L1 cache.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Usually, the wavefronts are scheduled on CUs before they get stacked up on a particular CU.

So, given 16 wavefronts, each wavefront will posibly go to 1 CU. And 16 CUs will still stall.

Cant you make it at least 32 wavefronts?

Also, I just hope the profiler is smart enough to profile on a CU that is being used 😉

I am assuming the data type of A is "int'.

Also, we dont know how the compiler has scheduled the loads.

Can you try first loading A[gid] and then do a "barrier".

After that, read the rest, add up and then write C

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can't recall precisely (if it was ATi or NV profiler) but there was info that profiling counters work only on first CU in device.

Usually first CU will be loaded most indeed.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Usually first CU will be loaded most indeed.

All CUs get equal load. There is nothing special about CU 0.

But, it could be possible that if there is only one workgroup launched -- it will always go to CU 0. So, profliling CU0 probably is a safe bet because sometihng will be executing there for sure. This is just a guess.

Apart from that, there is nothing that suggests that CU0 will be the most loaded.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you try the "barrier" thing listed above and let us know if that helped?

Also, please increase the number of workgroups.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thanks a lot Himanshu for your suggestions and following up on this.

Very sorry for delay in reply.

I did some new tests:

Just to recall that I am running these on AMD 7970 having 32 CUs

Case 1:

int iGID = get_global_id(0);

c[iGID] = a[iGID];

WorkgroupSize=256, TotalThreads=256*32, CacheHit%=4% // multiplied by 32 for fair load-balancing of blocks on CUs

WorkgroupSize=64, TotalThreads=64*32, CacheHit%=13% // 64 is the wavefront(WF) size

WorkgroupSize=16, TotalThreads=16*32, CacheHit%=37% // 16 bcoz a WF is divided into QuarterWFs while execution

WorkgroupSize=8, TotalThreads=8*32, CacheHit%=66% // no big logic behind 8, just to reduce cache contention

My observations:

* Since GPU tries to have global mem loads cached, so all the threads in WF will have to miss before they access it first, not sure how it is as high as 37% in case of 16 threads per workgroup (quarter WF is the lowest granularity of execution)

*It seems to be the case that even within a WF, cache eviction happens between Quarter Wavefronts. See the 64 and 16 case. But I am not convinced assuming an LRU policy.

Case 2:

int iGID = get_global_id(0);

float tmp = a[iGID];

barrier()

tmp += a[iGID+1] + a[iGID+2]+a[iGID+3]

c[iGID]=tmp

WorkgroupSize=256, TotalThreads=256*32, CacheHit%=7% // multiplied by 32 for fair load-balancing of blocks on CUs

WorkgroupSize=64, TotalThreads=64*32, CacheHit%=23% // 64 is the wavefront(WF) size

WorkgroupSize=16, TotalThreads=16*32, CacheHit%=52% // 16 bcoz a WF is divided into QuarterWFs while execution

WorkgroupSize=8, TotalThreads=8*32, CacheHit%=70% // no big logic behind 8, just to reduce cache contention

* The behavior here is still questionable. There is not much increase in respective numbers from Case1. The loads a[iGiD+ 1,2,3...] should have all hit the cache given we are using a very puny fraction of the large L1 cache per CU.

Well, for all the above, I am assuming that not multiple Workgroups are scheduled on same CU. I can try forcing only one Workgroup per CU by having large local memory usage in the kernels.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just to add some more twist, the same microbenchmarks when run on an Nvidia Fermi :

Case 1:

c[tid] = a[tid];

0% L1 cache hits . Quite expected, cold cache misses

Case 2:

float tmp = a[iGID];

barrier() --> __syncthreads()

tmp += a[iGID+1] + a[iGID+2]+a[iGID+3]

c[iGID]=tmp

~73 to 88% (increasing with the block size) L1 cache hits. Again, as expected.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I hope Case2 meant "c[iGID] = tmp;"

Otherwise, it will be optmized out as dead-code.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That's correct, my bad while typing the post. I have corrected it. (no dead-code in the actual program)

Also the data types of A and C are float.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey,

Nice experiments. I think the L1 cache is shared among 4 CUs.

See below from GCN Architecture paper.

The 16KB scalar data L1 is 4-way associative with 64B lines and LRU replacement; it is also shared between a cluster of up to 4 Compute Units and backed by the L2 cache.

That probably explains why you are getting hits on AMD platform for Case 2 (assuming there is no dead code there).

Case 1 is puzzling.

The same paper also talks about CU specific L1 data cache.

btw, what are the data-types of C and A? I hope they are ints.

On NVIDIA, the L1 cache is tied to the CU. Each CU has its own L1 cache - which can also be configured as "local memory".

Hence you are seeing absolute 0% cache-hits for c[] = a[];

Whereas, the Texture Cache in NVIDIA is shared by more CUs (multiprocessors) and they are read-only in nature.

This is simlar to AMD's L1 scalar caches - whcih I believe are read-only (Correct me if am wrong here)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

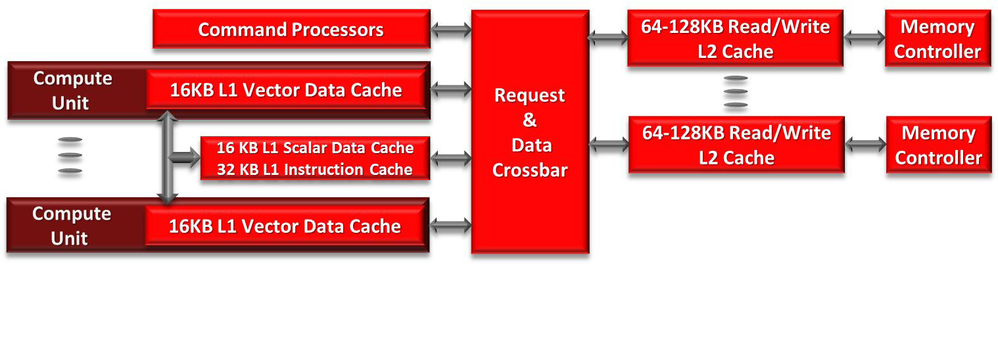

Courtesy: GCN Arch whitepaper.

As you can see, th Vector Data cache is private to CU, Scalar Data Cache is shared by CUs and there is also the instruction cache.

I just hope the cache-usage that you are getting does not factor in instruction cache 😞

Can you get more details of how exactly you are measuing the cache-hit ratio?

Is it specific to L1 or Does it include L2 as well?

Also, I am not sure if the hardware can prefetch data into cache-lines.

I dont see it in the whitepaper.

Another possibility is Say - A Cache miss fetching 4 lines together...

(i have seen similar stuff with Intel -- wherein it brings in 2 cache-lines per cache-miss instead of one)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am measuring the cache hits by the AMD APP profiler, which does not specify it to be L1 or L2. Though it claims it to be data cache and not instruction.

CacheHit : The percentage of fetches that hit the data cache. Value range: 0% (no hit) to 100% (optimal).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's L2 cache.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Lihan,

That was surprising and useful. How do we get L1 cache hit ratio?

Is there any counter for it?

Thanks,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Currently, there is no L1 cache counter exposed.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you Lihan for the insights.

But I really can not get my head around the cache hits I am observing, even after considering it to be L2 cache. Would be really helpful if you can shed some of your expertise (simple microbenchmarks: http://devgurus.amd.com/message/1287539#1287539). Could this be possible because of some weird way of calculating L2 cache hits ?

Himanshu: Thanks! Bringing in multiple lines on a miss could be a possible (though not entirely convincing, given the coalesced accesses). Btw the shared L1 cache is only for scalar data and instructions. Vector L1 Data cache is tied to One per CU.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I hope you know, that the LLVM "compiles" your code, and what runs on the hardware is not always what you can see.

Just with such a simple Kernel, the compiler will make heavy use from registers and so on.

The LLVM is really powerful! Just see it as a GCC mit -O3. When you really want to know what is going on, you have to look at the Assembler-Code.

When you do useless stuff, the LLVM very often just skip this parts of Code ![]()

So it is really not easy to utalize the GPU with useless stuff... I have tried this years ago, and have really often noticed, that it is really difficult to add useless code, to utalize the GPU much better for Testings.

If you want to do something like this, usw branches, where you check your result!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Skysnake,

This is not really a useless code. The compiler cannot ignore a code snippet like below.

C[gid] = A[gid] + A[gid +1] + .... and so on..

This is not a un-reachable code (or) something that can be calculated at compile time.

LLVM or whatever compiler -- has to generate code for this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When you never use this manipulations of Data, the LLVM can delete all this apoerations sometimes. You really have to "use" the results. when you write them into a write buffer in the global memory everything is fine.

I am not able to see if you do this or not with just such a few lines of code. I can you only say, that you have to be careful, because it is not so clear what the GPU really do like the most people think

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks. But I still feel there is no unused code in these small programs as all the operations result in a write to the Global Mem.

I verified it with the disassembled code. So, for the case of :

float tmp = a[iGID];

barrier()

tmp += a[iGID+1] + a[iGID+2]+a[iGID+3]

The disassembled code looks like:

t_buffer_load_format_x v2, v1, s[8:11], 0 offen forma ......

s_barrier

t_buffer_load_format_x v3, v1, s[8:11], 0 offen offs ...

t_buffer_load_format_x v4, v1, s[8:11], 0 offen offs...

t_buffer_load_format_x v1, v1, s[8:11], 0 offen offs...

.

.

.

t_buffer_store_format_x v1, v0, s[0:3], 0 offen....

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, i have it -.-

Remember, what get_global_id(0) makes. You get for 256 Threads [0-255] as result. Because of the form of your index (+x [0-8]) you use the array-elements [0-263]. So you use every data less than 8 times per workgroupe (note, i assume, that you start DIM0=256,DIM1=32,DIM2=0 threads)

So per workgroupe you have to load 263 different elements. In sum 256*8=2048 Elements. Just make ist easy and say, you reuse every element 8 times. Than you have a maximum cachehit of ~0.4% in every Workgroupe, when you never flash your cache!

The rest of the hits comes from the L2 where you have a cachehit ratio of a maximum if 1/32 so ~3.2% (assum, that i have not use the result value, because it is not clear, if the GPU use a register to store the data or not)

To rise the cachehitratio, you have to use get_local_id(0) ![]()