AMD Community > Communities > Server Gurus > Server Gurus Archives > Archives Discussions


EPYC 7551 spontaneously resets after 10 minutes of rendering

I just finished building a system with dual EPYC 7551 CPUs on the Supermicro H11DSi-NT motherboard.

The build went very well, and the system is running just fine, and the performance is extraordinary.

I am using Fedora 27 Linux, but I have access to about 20 different Linux distros, since I maintain cinelerra-5.1 and need to build on these distros to post deliverables periodically.

Everything runs fine, but...

It actually takes a little effort to cook up a way to load it to capacity.

I can build Linus Torvalds' Linux git repo in about 11 minutes with no problems. Using make -j200 saturates the machine for over 10 minutes. Very nice.

However, if you start 50 background render clients and run a batch DVD render using the render farm, the machine nearly always spontaneously resets after about 10 minutes, with no warning or log messages, just as if the reset button was pushed. The motherboard is equipped with IPMI, which lets you monitor "server health" (thermal sensors, voltages, fans). None of the measured parameters is even close to its limits. Everything looks just fine, but the reset is highly reproducible.

This job does not saturate the machine. It runs at about 85% utilization, probably due to I/O delays created by 50 clients accessing media files. The reset is conspicuous because the kernel panic code normally outputs all kinds of logging and tries to resuscitate the machine in a pretty vigorous way. That does not happen here. It is as if the reset button was pushed.

Can a HT sync/reset packet do this?

If anyone in silicon validation would like to try this, I will be glad to help set up a test case. It is somewhat tricky to set up.

I am a skilled Linux developer and I can set up a kdb session to trap the reset, but I suspect it is vectoring to the BIOS reset rather than the kernel, so this may not be of any help. I am open to suggestions.

gg

PS: attached: bill_of_materials, dmidecode, lspci

---

AMD has identified an issue with the Linux cpuidle subsystem whereby a system using a newer kernel (4.13 or newer) with SMT enabled (BIOS default) and global C-state control enabled (also BIOS default) may exhibit an unexpected reboot. The likelihood of this reboot is correlated with the frequency of idle events in the system. AMD has released updated system firmware to address this issue. Please contact your system provider for the status of this updated system firmware. Until this updated system firmware is available, you can work around the issue with the following option:

Boot the kernel with the added command line option idle=nomwait
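A quick way to confirm the workaround is active after a reboot is to look for idle=nomwait in /proc/cmdline, which holds the command line the running kernel was booted with. The Python sketch below does that; the helper names are hypothetical, and the tokenizer is deliberately naive (it does not handle quoted values containing spaces).

```python
from __future__ import annotations

from pathlib import Path


def boot_params(cmdline: str) -> dict[str, str | None]:
    """Split a kernel command line into {name: value}.

    Bare flags such as 'ro' or 'quiet' map to None. Naive sketch: quoted
    values containing spaces are not handled, and a repeated parameter
    keeps its last value.
    """
    params: dict[str, str | None] = {}
    for token in cmdline.split():
        name, sep, value = token.partition("=")
        params[name] = value if sep else None
    return params


def has_idle_nomwait(cmdline: str) -> bool:
    """True if the command line contains idle=nomwait."""
    return boot_params(cmdline).get("idle") == "nomwait"


if __name__ == "__main__":
    proc_cmdline = Path("/proc/cmdline")
    if proc_cmdline.exists():
        print("idle=nomwait active:", has_idle_nomwait(proc_cmdline.read_text()))
```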

Thank you goodguy and abucodonosor for providing us with the workload that allowed us to replicate the issue you were experiencing. Also, I would like to recognize koralle for understanding how to implement a workaround in the meantime, independent of our findings and recommendations.

---

Hello,

I have a similar build, just with 2 x EPYC 7281, and a similar issue.

It seems the system resets itself once the load is >80% and I/O is >50%.

Looking closer, the only hardware pieces that can do that are the watchdogs (which are not working in Linux right now anyway). Maybe the BMC also has some sort of watchdog of its own (I can't find any good documentation for the motherboard). Supermicro's manual for the motherboard is also strange.

It looks to me like it is a matter of the memory configuration in use (4, 8, 12, or 16 RAM modules, which is completely undocumented in the manual) and of which SATA/PCIe/NVMe ports are used.

I use 4 x 32GB right now.

I'm using the internal M.2 port with a 'Samsung SSD 960 EVO 250GB' for the *system* and have a second one in the PCIe x8 slot. There are also 8 x 2TB NAS HDDs, 4 on each CPU's SATA port (using the vendor's cables).

The original configuration looked like this:

- 4 x 32GB RAM modules in D1/F1 (as the motherboard manual suggests)
- second NVMe in the CPU1 PCIe x8 slot
- system NVMe in the CPU1 M.2 slot
- NVME_0 and NVME_1 ports unused
- CPU1-SATA: 4 x 2TB HDD
- CPU2-SATA: 4 x 2TB HDD

No go: with that configuration, stressing the system a bit makes it reboot itself after 5 to 10 minutes.

I also turned on EDAC and MCE in the kernel, and now I see an MCE on CPU24, but I don't think it is real, since it occurs like this:

the BMC reports an error on Disk18, "SMART Assertion" (huh? I don't have 18 disks), followed by a correctable MCE in the kernel, e.g.:

mce: [Hardware Error]: Machine check events logged

[Hardware Error]: Corrected error, no action required.

[Hardware Error]: CPU:24 (17:1:2) MC22_STATUS[Over|CE|MiscV|-|-|-|-|SyndV|-]: 0xd82000000002080b

[Hardware Error]: IPID: 0x0001002e00000002, Syndrome: 0x000000005a00000d

[Hardware Error]: Power, Interrupts, etc. Extended Error Code: 2

[Hardware Error]: Power, Interrupts, etc. Error: Error on GMI link.

[Hardware Error]: cache level: L3/GEN, mem/io: IO, mem-tx: GEN, part-proc: SRC (no timeout)

*And* it only occurs under high load; under normal load I never see it.
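The status word in these reports can be decoded by hand. Below is a Python sketch that reproduces the flag list and the bus-error fields for the MC22_STATUS value above. The bit positions and message tables follow the Linux kernel's AMD MCE decoder (arch/x86/include/asm/mce.h and drivers/edac/mce_amd.c) as they looked around 4.15; treat them as an assumption to check against your own kernel source. It also assumes an SMCA CPU with MCAX enabled, which is what makes the kernel print the trailing TCC field.

```python
# MCA_STATUS bit positions, mirroring the MCI_STATUS_* defines in the
# kernel's arch/x86/include/asm/mce.h (assumed; verify against your tree).
VAL, OVER, UC, EN, MISCV, ADDRV, PCC = 63, 62, 61, 60, 59, 58, 57
TCC, SYNDV, DEFERRED, POISON = 55, 53, 44, 43

# Bus-error field names, following the tables in drivers/edac/mce_amd.c.
LL_MSGS = ["RESV", "L1", "L2", "L3/GEN"]
II_MSGS = ["MEM", "RESV", "IO", "GEN"]
RRRR_MSGS = ["GEN", "RD", "WR", "DRD", "DWR", "IRD", "PRF", "EV", "SNP"]
PP_MSGS = ["SRC", "RES", "OBS", "GEN"]


def bit(status: int, pos: int) -> bool:
    return ((status >> pos) & 1) == 1


def flags_string(status: int) -> str:
    """Rebuild the [..|..] flag list in the order the kernel prints it on SMCA."""
    if bit(status, UC):
        uc_ce = "UE"
    elif bit(status, DEFERRED):
        uc_ce = "-"
    else:
        uc_ce = "CE"
    fields = [
        "Over" if bit(status, OVER) else "-",
        uc_ce,
        "MiscV" if bit(status, MISCV) else "-",
        "PCC" if bit(status, PCC) else "-",
        "AddrV" if bit(status, ADDRV) else "-",
        "Deferred" if bit(status, DEFERRED) else "-",
        "Poison" if bit(status, POISON) else "-",
        "SyndV" if bit(status, SYNDV) else "-",
        "TCC" if bit(status, TCC) else "-",  # printed only when MCAX is enabled
    ]
    return "|".join(fields)


def decode_bus_error(error_code: int) -> str:
    """Decode a 16-bit bus-error code laid out as 0000 1PPT RRRR IILL."""
    if (error_code & 0xF800) != 0x0800:
        raise ValueError(f"{error_code:#06x} is not a bus error")
    rrrr = (error_code >> 4) & 0xF
    mem_tx = RRRR_MSGS[rrrr] if rrrr < len(RRRR_MSGS) else "?"  # out-of-range value
    timeout = " (timed out)" if (error_code >> 8) & 1 else " (no timeout)"
    return (
        f"cache level: {LL_MSGS[error_code & 0x3]}, "
        f"mem/io: {II_MSGS[(error_code >> 2) & 0x3]}, "
        f"mem-tx: {mem_tx}, "
        f"part-proc: {PP_MSGS[(error_code >> 9) & 0x3]}{timeout}"
    )


def decode(status: int) -> dict:
    return {
        "valid": bit(status, VAL),
        "enabled": bit(status, EN),
        "flags": flags_string(status),
        "ext_error_code": (status >> 16) & 0x3F,  # bits 21:16
        "error_code": status & 0xFFFF,            # bits 15:0
    }


if __name__ == "__main__":
    info = decode(0xD82000000002080B)  # MC22_STATUS from the log above
    print(f"MC22_STATUS[{info['flags']}] ext code {info['ext_error_code']}")
    print(decode_bus_error(info["error_code"]))
```

With the value from the log, this yields the same "[Over|CE|MiscV|-|-|-|-|SyndV|-]" flags, extended error code 2, and the same cache level / mem/io / mem-tx / part-proc line the kernel printed.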

Here is what I did as a workaround for now:

First, get at least kernel 4.15-rc6 (this has fixed EDAC for EPYC).

Be sure you have EDAC turned on in the kernel config.

On the hardware side:

Power off the box.

Pull out any PCIe cards and any HDDs you don't need, except the HDD/SSD used to boot the system.

Power on, and in the BIOS turn OFF:

- Watchdog
- IOMMU
- ACS
- SR-IOV
- PCIe Spread Spectrum
- Core Performance Boost
- Global C-state Control
- any PCIe/NVMe OPROMs you don't need

Change:

- Determinism Slider to Performance
- Memory Clock to 2666 MHz
- (if you use UEFI, change the remaining OPROMs to EFI)

Save and perform a power cycle.

Once the box is up, open the IPMI web interface.

Change the fan mode to Heavy IO.

Turn on the extra event features.

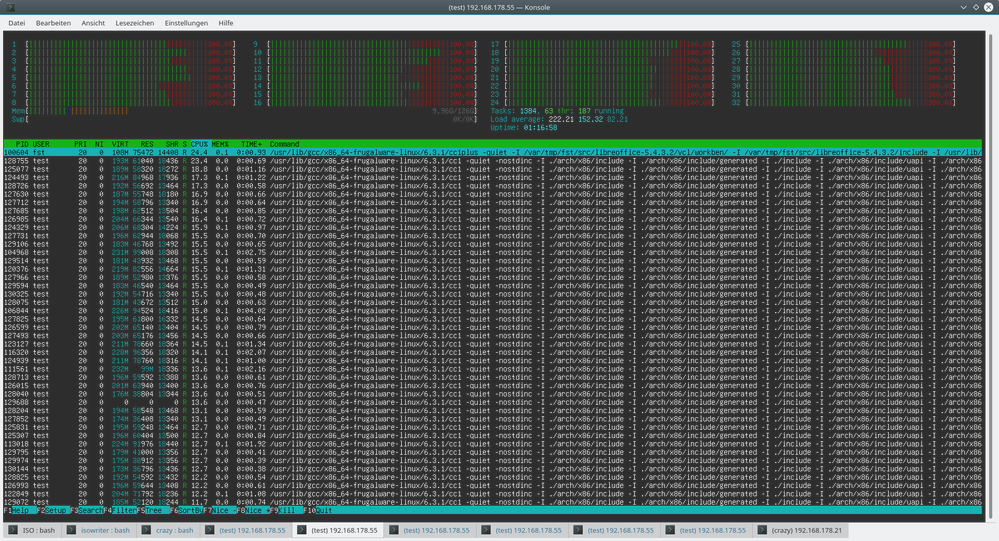

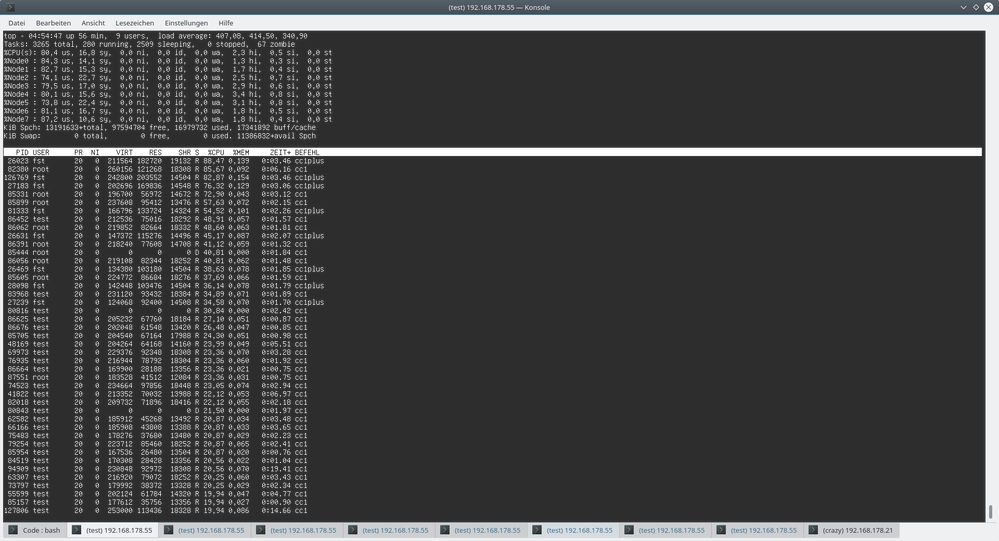

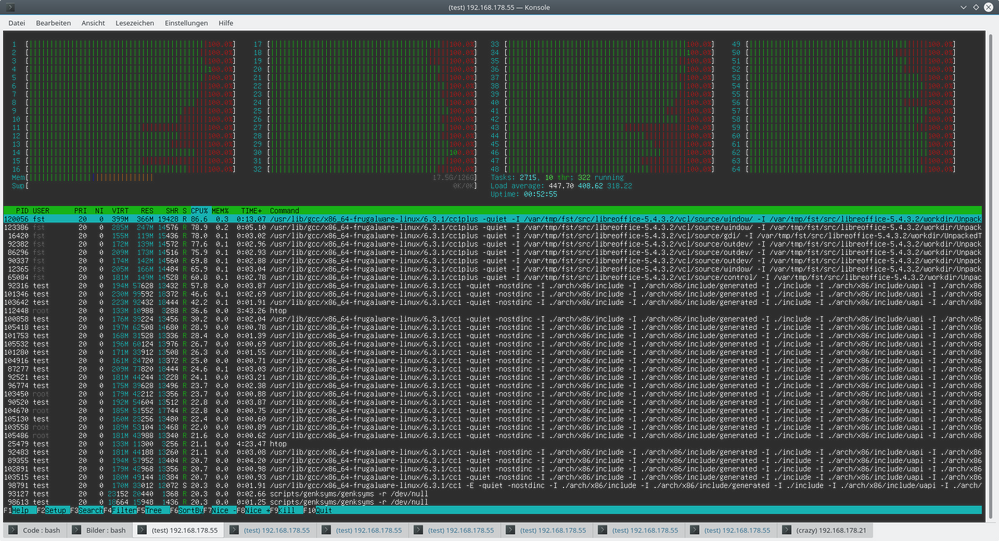

Here it works as a workaround: I have been stressing the box with a loop compiling LibreOffice and the kernel tree with -j$core_count for nearly a day now.

I see the MCE from time to time, and something may be wrong, but right now I'm not sure whom to blame.

(PS: you can find me on freenode, just PM crazy if you wish.)

---

Wow... this is definitely what I see.

Right down to the "Hardware Error".

Thank you for responding!

Dec 24 13:27:06 xray.local.net kernel: mce: [Hardware Error]: Machine check events logged

Dec 24 13:27:06 xray.local.net kernel: [Hardware Error]: Corrected error, no action required.

Dec 24 13:27:06 xray.local.net kernel: [Hardware Error]: CPU:40 (17:1:2) MC22_STATUS[Over|CE|MiscV|-|-|-|-|SyndV|-]: 0xd82000000002080b

Dec 24 13:27:06 xray.local.net kernel: [Hardware Error]: IPID: 0x0001002e00000002, Syndrome: 0x000000005a000009

Dec 24 13:27:06 xray.local.net kernel: [Hardware Error]: Power, Interrupts, etc. Extended Error Code: 2

Dec 24 13:27:06 xray.local.net kernel: [Hardware Error]: Power, Interrupts, etc. Error: Error on GMI link.

Dec 24 13:27:07 xray.local.net kernel: [Hardware Error]: cache level: L3/GEN, mem/io: IO, mem-tx: GEN, part-proc: SRC (no timeout)
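This CPU:40 report carries the same MC22 status word as the CPU:24 report earlier in the thread. To see how often each CPU and bank report in, a small Python sketch can count the decoded lines in a saved kernel log (for example, the output of journalctl -k redirected to a file). The regular expression is an assumption built from the two log excerpts in this thread, and the script name in the usage comment is hypothetical.

```python
from __future__ import annotations

import re
import sys
from collections import Counter

# Matches e.g. "CPU:40 (17:1:2) MC22_STATUS[Over|CE|...]: 0xd82000000002080b"
MCE_LINE = re.compile(
    r"CPU:(?P<cpu>\d+) \([0-9a-fA-F:]+\) MC(?P<bank>\d+)_STATUS"
    r"\[[^\]]*\]: (?P<status>0x[0-9a-fA-F]+)"
)


def count_mces(lines) -> Counter:
    """Count MCE status lines per (cpu, bank, status) tuple."""
    counts: Counter = Counter()
    for line in lines:
        match = MCE_LINE.search(line)
        if match:
            key = (int(match["cpu"]), int(match["bank"]), int(match["status"], 16))
            counts[key] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: journalctl -k > kern.log && python3 count_mce.py kern.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log:
        for (cpu, bank, status), n in sorted(count_mces(log).items()):
            print(f"CPU {cpu:3d}  MC{bank}  {status:#018x}  x{n}")
```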

My current conjecture is that there are actually 2 problems:

1) The machine reboots with no warning or logging.

2) There are single-bit errors on at least one bus (cache bus?).

Near the end of each month, I rebuild a package for about 16 distros. This means that I need to maintain a wide set of Linux versions and have several past kernels available. I noticed that older kernels do not seem to exhibit the "reboot" problem. Since the test procedure is to start a job and wait for a failure, and the time to failure is not predictable, these measurements are "best guess" results. I have not run long-term tests; each test was less than 1 hour of stress.

I started with older Fedora kernels and worked towards the present deliverable:

4.8.14 ok, 4.11.12 ok, 4.13.12 ok, 4.13.15 fails, 4.14.7 fails.
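With results like these, the natural next step is to bisect between the last good and the first bad release. A Python sketch that turns the list above into the endpoints for git bisect start (which takes the bad revision first, then the good one) could look like this. It assumes the stable tags are named vX.Y.Z, as in the linux-stable tree, and that the outcome flips only once. Because the reset is intermittent, an "ok" after less than an hour of stress is only probably good, which can mislead a bisect.

```python
from __future__ import annotations


def parse_results(text: str) -> list[tuple[tuple[int, ...], bool]]:
    """Parse 'X.Y.Z ok, X.Y.Z fails, ...' into sorted [(version, passed), ...]."""
    results = []
    for item in text.split(","):
        version, outcome = item.split()
        results.append((tuple(int(p) for p in version.split(".")), outcome == "ok"))
    return sorted(results)


def bisect_range(results):
    """Return (last_good, first_bad) around the first failure, or None if none failed.

    Assumes a single good->bad transition; later 'ok' results are ignored.
    """
    last_good = None
    for version, passed in results:
        if passed:
            last_good = version
        else:
            return last_good, version
    return None


def tag(version) -> str:
    return "v" + ".".join(map(str, version))


if __name__ == "__main__":
    observed = "4.8.14 ok, 4.11.12 ok, 4.13.12 ok, 4.13.15 fails, 4.14.7 fails"
    boundary = bisect_range(parse_results(observed))
    if boundary and boundary[0] is not None:
        good, bad = boundary
        print(f"git bisect start {tag(bad)} {tag(good)}")
```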

I cannot tell what the controlling variables are. Running a kernel build saturates CPU utilization but does not fail. Running render jobs uses about 80% CPU and fails. Perhaps I/O utilization is a controlling variable.

The MCE errors do not seem to be load related. The coolers on the CPUs are Arctic Freezer 240s, and I have monitored the CPU temperatures with IPMI while running the high-load tests. The top temperature was 50 deg C. The IPMI indicates limits of 100 deg C, not even close.

Once, while trying to configure the X server, not even under load, I left a hung startx up while I chased down log errors. While it was just sitting there, I got an MCE hardware error.

I would like to add that the advertisement I used to buy the CPUs,

AMD PS7551BDAFWOF Epyc Model 7551 32C 3.0G 64MB 180W 2666MHZ

suggests that the CPUs will run at 2666, but the actual rates are about 2000. This is what you see from the BIOS and /proc/cpuinfo, but it is hard to tell for sure what is really being used, since it varies over time. This is a little disappointing, since some steps are serialized, and long jobs perform badly at low CPU clock rates.

> Now here is what I did to workaround for now:

> First get at least an kernel-4.15-rc6 ( this has fixed edac for epyc )

> Be sure you have EDAC turned on on kernel config.

I am currently trying to cobble together a 4.15.x kernel, and I will definitely try this.

Thank you for the suggestions. More to follow (probably).

gg

---

Not sure what distro you are running, but to build the kernel, simply use the distro config.

Download the kernel tarball you'd like, unpack it, cd into it, then:

cp the_location_of_distro_config .config (see /boot and/or /proc for the distro config)

make oldconfig

make prepare

make -j128 V=1

sudo make modules_install

sudo cp System.map /boot/System.map-$kerneluname (take the exact version from the DEPMOD line of the modules_install output)

sudo cp arch/x86/boot/bzImage /boot/vmlinuz-$kerneluname

For the dracut initramfs generator, just run:

sudo dracut -f --kver $kerneluname

And finally, re-create grub.cfg by running:

sudo grub-mkconfig -o /boot/grub/grub.cfg
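The steps above can be collected into a dry-run script. This Python sketch only prints the commands, with $kerneluname filled in from the kernel's own make -s kernelrelease target when run inside a configured source tree. It assumes .config has already been copied from the distro config; the job count and grub.cfg path are copied from the post and may differ on your distro, and the fallback release string is a hypothetical example.

```python
from __future__ import annotations

import shlex
import subprocess


def install_commands(release: str, jobs: int = 128) -> list[list[str]]:
    """The build/install steps above, with $kerneluname replaced by `release`."""
    return [
        ["make", "oldconfig"],
        ["make", "prepare"],
        ["make", f"-j{jobs}", "V=1"],
        ["sudo", "make", "modules_install"],
        ["sudo", "cp", "System.map", f"/boot/System.map-{release}"],
        ["sudo", "cp", "arch/x86/boot/bzImage", f"/boot/vmlinuz-{release}"],
        ["sudo", "dracut", "-f", "--kver", release],
        ["sudo", "grub-mkconfig", "-o", "/boot/grub/grub.cfg"],
    ]


def kernel_release(source_dir: str = ".") -> str:
    """Ask the kernel build system for the exact release string."""
    result = subprocess.run(
        ["make", "-s", "kernelrelease"],
        cwd=source_dir, capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    try:
        release = kernel_release()
    except (OSError, subprocess.CalledProcessError):
        release = "4.15.0-rc6"  # hypothetical fallback when not in a kernel tree
    for cmd in install_commands(release):
        print(shlex.join(cmd))  # print only; nothing is executed
```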

I have now tested offlining CPU24 (the one with the bit errors). The errors seem to have stopped, but I still see the same 'Disk18 SMART error' in the event log of the IPMI web interface, which just cannot be true, since there is no way to even have that many disks here without using the 2 NVMe ports. So that is something very strange.

Maybe we are very unlucky, or there is something else going on?

BTW, if you try offlining on 4.14.x and 4.15-rc6, there is currently a bug in the blk-mq code. Before building, open block/blk-mq.c at line 1209: there is a buggy WARN_ON(), at least for EPYC CPUs. Just comment it out.
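Offlining a CPU goes through the per-CPU online file in sysfs. A minimal Python sketch of the same write, assuming the standard /sys/devices/system/cpu layout and root privileges; the function names are hypothetical, and the root parameter exists only so the logic can be exercised against a scratch directory.

```python
from __future__ import annotations

from pathlib import Path

CPU_SYSFS = Path("/sys/devices/system/cpu")


def set_cpu_online(cpu: int, online: bool, root: Path = CPU_SYSFS) -> None:
    """Equivalent of `echo 0|1 > /sys/devices/system/cpu/cpuN/online` (needs root)."""
    path = root / f"cpu{cpu}" / "online"
    if not path.exists():
        # On x86, cpu0 normally has no 'online' file and cannot be offlined.
        raise FileNotFoundError(f"{path} does not exist; CPU {cpu} is not hot-pluggable")
    path.write_text("1" if online else "0")


def is_cpu_online(cpu: int, root: Path = CPU_SYSFS) -> bool:
    """A CPU without an 'online' file (typically cpu0) is treated as online."""
    path = root / f"cpu{cpu}" / "online"
    return not path.exists() or path.read_text().strip() == "1"
```

For example, set_cpu_online(24, False) run as root mirrors what was done for CPU24 here; the change lasts until the next reboot.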

Also, my test setups are still running with the workarounds I've posted: more than 20 full LibreOffice builds, including checks, dicts, langs, etc., and nearly 54 kernel builds (allmodconfig). Both are built as distro packages, so I generate a lot more I/O by removing/installing in chroots, etc.

---

I don't think it is a cooling problem: my CPUs are around 37 deg C and the system temperature is around 45 deg C.

The only higher temperature is MB_10G Temp, which is sometimes over 63 deg C; everything else is far from reaching 50 deg C.

BTW, do you have any errors in your IPMI event.log and in the SMBIOS log in the BIOS?

It seems I hit 0x90 = Unknown CPU?! in the SMBIOS log...

---

I upgraded the BIOS to the latest 1.0a, and yes, I am getting very similar errors.

The IPMI log errors are:

EID Time Stamp Sensor Name Sensor Type Description

7 2017/12/24 18:47:05 OEM HDD Disk 17 SMART failure - Assertion

8 2017/12/24 20:24:04 OEM HDD Disk17 SMART failure - Assertion

9 2017/12/27 18:49:17 OEM HDD Disk17 SMART failure - Assertion

10 2017/12/28 16:41:05 System Firmware Progress System Firmware Error (POST Error) - Assertion

11 2017/12/28 16:42:50 System Firmware Progress System Firmware Error (POST Error) - Assertion

12 2017/12/29 21:27:00 OEM HDD Disk17 SMART failure - Assertion

13 2018/01/01 20:50:53 OEM HDD DIsk17 SMART failure - Assertion

This data is from the IPMI log, but if you look at the BIOS SMBIOS log, the SMBIOS event log apparently reports entries at the same times (12/29 and 01/01):

12/29/17 21:22:02 SMBIOS 0x90 N/A Description: unspecified processor / unrecognized

01/01/18 20:50:54 (the same, but only 2 of these over days)

It looks like the BMC may be misreporting MCE hardware errors as SMART disk errors.
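One way to check that suspicion is to pair the IPMI SEL timestamps with the SMBIOS ones. The Python sketch below uses the two timestamp formats exactly as they appear in the excerpts above (assumed formats, not a documented export). It shows that the 01/01 entries are 1 second apart, while the 12/29 entries are almost 5 minutes apart, so whether they "match" depends on the window chosen and on how far the BMC and BIOS clocks drift.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def parse_sel(ts: str) -> datetime:
    """IPMI web UI style, e.g. '2018/01/01 20:50:53'."""
    return datetime.strptime(ts, "%Y/%m/%d %H:%M:%S")


def parse_smbios(ts: str) -> datetime:
    """BIOS SMBIOS log style, e.g. '01/01/18 20:50:54'."""
    return datetime.strptime(ts, "%m/%d/%y %H:%M:%S")


def match_events(sel, smbios, window: timedelta):
    """Pair every SEL time with every SMBIOS time at most `window` apart."""
    return [(a, b) for a in sel for b in smbios if abs(a - b) <= window]


if __name__ == "__main__":
    sel = [parse_sel(t) for t in ("2017/12/29 21:27:00", "2018/01/01 20:50:53")]
    smbios = [parse_smbios(t) for t in ("12/29/17 21:22:02", "01/01/18 20:50:54")]
    for window in (timedelta(seconds=5), timedelta(minutes=5)):
        for a, b in match_events(sel, smbios, window):
            print(f"window {window}: SEL {a} ~ SMBIOS {b} ({abs(a - b)} apart)")
```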

There are other messages that seem to be incorrect, such as:

[ 0.789694] pnp: PnP ACPI: found 6 devices

[ 0.799435] clocksource: acpi_pm: mask: 0xffffff max_cycles: 0xffffff, max_idle_ns: 2085701024 ns

[ 0.799689] pci 0000:01:00.0: BAR 7: no space for [mem size 0x00100000 64bit]

[ 0.799843] pci 0000:01:00.0: BAR 7: failed to assign [mem size 0x00100000 64bit]

[ 0.800061] pci 0000:01:00.0: BAR 10: no space for [mem size 0x00100000 64bit]

[ 0.800264] pci 0000:01:00.0: BAR 10: failed to assign [mem size 0x00100000 64bit]

[ 0.800478] pci 0000:01:00.1: BAR 7: no space for [mem size 0x00100000 64bit]

[ 0.800676] pci 0000:01:00.1: BAR 7: failed to assign [mem size 0x00100000 64bit]

[ 0.800946] pci 0000:01:00.1: BAR 10: no space for [mem size 0x00100000 64bit]

[ 0.801228] pci 0000:01:00.1: BAR 10: failed to assign [mem size 0x00100000 64bit]

[ 0.801500] pci 0000:00:01.1: PCI bridge to [bus 01]

[ 0.801683] pci 0000:00:01.1: bridge window [mem 0xef400000-0xef9fffff]

[ 0.801885] pci 0000:00:07.1: PCI bridge to [bus 02]

[ 0.802078] pci 0000:00:07.1: bridge window [mem 0xefa00000-0xefcfffff]

[ 0.802277] pci 0000:00:08.1: PCI bridge to [bus 03]

and

[ 15.079092] ipmi_si dmi-ipmi-si.0: The BMC does not support clearing the recv irq bit, compensating, but the BMC needs to be fixed.

gg

---

Nearly the same here. I don't have the POST errors, and Disk 17 is Disk18 here. Also the same 0x90 in the BIOS log.

Also the same kernel warnings.

If you look closer, the kernel re-assigns the BARs later on, but...

I'll play with the PCIe link options in the BIOS later.

Do you have anything that could be 'Disk17'? :) I don't know what kind of error that is, since here it is very clear: I don't have 18 disks. Something seems very confused in some firmware.

@AMD guys, ping? :) Any ideas what this may be?

---

Hello abucodonosor and goodguy,

Thank you for submitting your issue to the community and helping us understand what is going on. I am currently working on getting you a solution. Please stay tuned.

---

@jesse_amd

Thanks for looking into this. If you need any info, please let me know.

I can test any kind of patches, firmware updates, and so on.

@goodguy

Can you test the following:

From IPMI, power cycle the box.

Go into your BIOS and set 'PCIe Link Training Type' from 1 to 2, save and reboot.

Run your 'kill_the_box_setup' and see whether the MCE still occurs. If yes, add the following to the kernel command line:

isolcpus=40

Also, can you tell me what your disk layout looks like (used and unused disks)?

---

abucodonosor, on your Disk 17 question, this will vary by distribution, but my guess is that the number 17 refers to the logical port the disk is connected to, not to the disk number (starting at 0 or 1).

Regards,

Lewis

---

@linux_monkey

That is clear, but there are 2 x 4 SATA ports using iPass (all in use), plus 2 connected to CPU2 (SATADOM or other devices; one used), 1 x M.2 (used), and 2 x NVMe ports on the motherboard (not used). 8+2+1+2 != 17?

Also, no matter which HDD/SSD/NVMe is connected, and whether only this port, all ports, or some of them are connected, the same error occurs, followed by the kernel MCE.

---

Maybe I can clarify my reply. Many things go into the device number for such error reports: How interfaces are electrically connected on the PCB, how those connections are described to the OS by SMBIOS and ACPI tables, how the OS interprets that data and enumerates devices, and how that enumerated data is reported in error messages. You cannot assume that Disk 17 is a bad error message because you don't have 17 disks connected or because the platform doesn't support 17 disks. You could still have a single disk connected to the same port and still see an error on Disk 17. You will need to research the details on your particular system and Linux distribution and kernel version to know exactly which disk the Disk 17 error message is referring to.

Now that said, I re-read your post and I see that the Disk 17 error is from the IPMI log, which I assume you retrieved from the BMC. The above comments about disk numbering still apply, but indeed it is possible that the BMC is mis-interpreting the MCE22 error as a disk error. You will need to contact SuperMicro about this issue since they control the software in the BMC.

A true disk error would not be related to an MCE22 error in any way.

---

Yes, it is from the motherboard BMC. I have now even tested without any disk attached, booting a live CD-ROM image.

Also, to get a SMART assertion error you need a disk connected, no matter what the firmware calls it. That is why I think the error is not about the disk: it is the MCE error, and the BMC just misreports it.

I need to contact Supermicro anyway and ask about a BIOS update with your new AGESA code.

Maybe the issues will be gone then.

---

Based on your testing, I believe you are correct - the BMC is mis-interpreting the SMCA bank 22 error report (SMCA = Scalable Machine Check Architecture - that is the name for the evolution of MCE on the latest AMD64 processors). Please open a ticket with your SuperMicro contacts to have them address this. AMD does not provide the software running in the BMC. You can provide them with the full SMCA kernel error report earlier and the corresponding BMC error that is logged.

---

Based on your kernel testing, I've built 4.13.12, which was fine here, so I started to bisect the problem.

Unfortunately, the bisect pointed to some commits in staging, things I don't even have enabled.

Probably on older kernels I need a different stress scenario.

Since you seem to trigger it very fast on every broken kernel, could you maybe try to bisect?

---

@goodguy, the most common cause I have seen of rogue reboots is DDR4 DIMMs that are not approved by the PCB manufacturer. If the specific DIMM you are using (Micron 18ASF2G72PDZ-2G6H1R from your dmidecode output) has not been tested and approved by SuperMicro, that is the first change I would suggest.

Beyond that, prudent debug steps to narrow the problem would be the following:

1) Set the memory clock speed to 2400 MHz in the System Settings

2) Disable SMT in System Settings (note this will reduce the number of CPUs so you may have to fix any scripts assigning affinity or a thread count)

3) Boot your system with the added kernel command line parameter processor_idle.max_cstate=0

I am not suggesting these as work-arounds, only as steps to narrow down the problem scope.

Note the MCE22 error applies to the Infinity Fabric interconnect between the two sockets. It is not likely related to or the cause of a rogue reboot. Occasional corrected errors do occur on this interface, however the error itself is not a concern and is not typically a canary of other system problems. That said, if either of the above changes increases or decreases the frequency of the error, that would be interesting to know.

Regards,

Lewis

---

@linux_monkey

I'm using 4 x 32GB Modules :

...

Manufacturer: Micron Technology

Serial Number: 18B3C0E2

Asset Tag: P2-DIMMD1_AssetTag (date:17/37)

Part Number: 36ASF4G72PZ-2G6H1

...

So with C-states and CPB enabled in the BIOS, and processor_idle.max_cstate=0 on the command line?

About the MCE error: it is really not occasional. Once it starts, I get it every few minutes when compiling or generating I/O.

For now I've just offlined CPU24.

I'm also going to do some testing with BIOS defaults and the things you suggested.

---

Hello abucodonosor,

AMD released a new AGESA code at the end of 2017 that should resolve the erroneous MC22's you are experiencing. You should consult your system vendor and ask them when to expect a new BIOS.

---

Sorry for the late update... I upgraded to a release that contains 4.15.0-rc6, but I goofed up the RAID setup, and it took a few hours to nail it down again.

This report is in regard to the "spontaneous reboots" with no logging or warnings.

OK, results from today's testing:

The principal test is to run a 50-job render farm DVD creation.

This typically fails within 10 minutes. A "success" usually requires 3 passing runs.

A successful render takes approximately 18 minutes, so the entire procedure takes an hour if it does not fail.

1) Upgraded to rc6, run render, fails in 3mins.

2) Used BIOS to change PCIe to 2-step training, fails in 9m30s

3) Used kernel cmdline isolcpus=40, (verified in top), fails in 4m50s

4) Used: echo 0 > /sys/devices/system/cpu/cpu40/online, (verified in top), fails in 1m12s

5) Used: for f in `seq 1 64`; do n=$((2*f-1)); echo 0 > /sys/devices/system/cpu/cpu$n/online; done

which turns off all of the odd numbered cpus, fails on second run, after 22m

6) Used BIOS, configured memory clock speed 2400 (NB memory setup), fails in 3m

7) Used BIOS, configured memory clock speed 1600, fails on second run, after 19m10s

This is all I had time for today. I am pleased to see that the problem(s) are being addressed.

BTW: the memory was purchased from the board vendor, and is approved.

BTW: the disk configuration consists of 6 Western Digital 6TB WD6002FRYZ drives.

The partitioning is listed from fdisk -l in the attachment.

The RAID setup is md RAID: 2 mirrors for the system, and 4 RAID5 disks in 8 parts for user space.

The sda drive is divided into 50G partitions, and these are the "build system" partitions.

They contain current and past OS distro installs, which are used in a Xen-driven build harness.

It is worth mentioning that even though this is a bummer of a bug, it is truly an edge case.

The system performs well in almost all normal use scenarios. Still, if you run a render farm, this will ruin your day.

gg

---

Please note that the 4.15 release candidate 6 includes the patch set for Kernel Page Table Isolation (KPTI) as a fix for the disclosures reported yesterday by Google Project Zero labs. Unfortunately that merge did not include the following patch: x86/PTI: Do not enable PTI on AMD processors

You can either apply that patch if you are building your own kernel, or you can disable the PTI work-arounds by adding to the kernel command line pti=off. This will avoid a performance penalty from needlessly applying that work-around on AMD64 CPUs.

---

Tested the following so far:

Reset to the BIOS defaults, only changing the NVMe firmware to AMI so I can boot the test system.

- RAM set to 2400 MHz: reset occurs
- RAM set to 2400 MHz and processor_idle.max_cstate=0: reset occurs
- RAM set to 2666 MHz and processor_idle.max_cstate=0: reset occurs
- RAM set to AUTO and processor_idle.max_cstate=0: reset occurs

Now testing the same, but with SMT off.

---

SMT OFF, 2400 MHz: no reset, no MCE

SMT OFF, 2666 MHz: no reset, lots of MCEs

Let me know if you want me to test different things.

---

@linux_monkey goodguy

I think I've found a solution for both issues (the MCE one is fixed by the new AGESA, but it may take a while until a new BIOS is out).

With:

RAM 2666 MHz

Memory Interleaving from AUTO to 'Channel' <-- this *needs* to be set to Channel; no other value seems to work

No more resets, but lots of MCEs.

(one hour of stress test)

With:

RAM 2400 MHz

Memory Interleaving from AUTO to 'Channel' <-- this *needs* to be set to Channel; no other value seems to work

Memory Interleaving Size from AUTO to 512 <-- this needs to be set too, it seems

No more resets and just one MCE hit so far \o/

As a bonus, the turbo frequency is near 2.7 GHz for all cores, while in the buggy setup, as goodguy already noticed, that didn't really work.

(That stress test is still running, 2 hours so far.)

Can you please test this too?

Here is how I did it:

1) In IPMI (save your settings if needed), go to Maintenance -> Reset to factory, then reset the unit and power cycle the box.

2) Enter BIOS setup and reset to defaults (save your profile first, of course, so you can restore it later if needed).

3) Save & Reset.

4) Enter the BIOS again.

If you boot from NVMe, you need to change the NVMe firmware from Vendor to AMI.

Now, in the memory profile, change the settings as I've explained.

Save & Reset again.

5) Boot and run your kill_the_box setup.

Regards

---

Also, for 2666 MHz, setting 'Memory Interleaving Size' to 512 works fine too.

---

Response to abucodonosor:

Unfortunately, changing memory interleaving from AUTO to Channel ("can you please test this too?") did not fix the problem here.

First test: reset in 10 seconds. Second test: reset in 14 minutes. Third test: reset in 2 minutes.

I also tried the 512 interleave, which also failed in 9m40s.

We have now exhausted all the reasonable tests I can think of.

I am going back to application development until new ideas or new data turn up.

I very much appreciate the hard work you have put into exploring this problem.

Please keep me in the loop if anything breaks loose; I will be available for testing.

gg

---

I tested these settings on 2 boxes that have these kinds of problems, but I don't own EPYC 7551 CPUs.

The second test is still running, without any reset and with just one MCE.

---

goodguy linux_monkey @AMD guys

The situation changed here with the update to BIOS 1.0b.

These RAM workarounds don't seem to work anymore.

They have also added some other options, and until I know what they do, I don't really want to touch them.

I found out, at least on my system, that something is wrong when Global C-state Control is enabled or set to AUTO in the BIOS.

EPYC seems to use the 'acpi_idle' and 'acpi_cpufreq' kernel drivers.

With Global C-state Control enabled and at least 'acpi_cpufreq' loaded, the system _always_ resets sooner or later.

Global C-state Control OFF, kernel driver loaded: no reset occurs (so far).

Global C-state Control ON, acpi_cpufreq blacklisted (not loaded): no reset occurs. Also, disabling C1 and C2 with the cpupower utility while acpi_cpufreq is not loaded seems to work even better.

To sum up: with the BIOS C-state control and the kernel C-state/frequency drivers enabled at the same time, random resets occur. With only ONE of them enabled, BIOS or kernel, no reset occurs.
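The cpupower step can also be done directly through sysfs: each CPU exposes its idle states under /sys/devices/system/cpu/cpuN/cpuidle/stateK/, with a name file and a disable file, and cpupower idle-set -d K writes to the same disable files. The Python sketch below disables states by name on every CPU. The names C1 and C2 are an assumption about what acpi_idle reports on this platform, so check the name files first; writing requires root, and the setting does not survive a reboot.

```python
from __future__ import annotations

from pathlib import Path

CPU_SYSFS = Path("/sys/devices/system/cpu")


def idle_states(cpu_dir: Path) -> dict[str, Path]:
    """Map idle-state names (e.g. 'POLL', 'C1', 'C2') to their stateK directories."""
    states: dict[str, Path] = {}
    for state_dir in sorted((cpu_dir / "cpuidle").glob("state[0-9]*")):
        states[(state_dir / "name").read_text().strip()] = state_dir
    return states


def disable_idle_states(names: set[str], root: Path = CPU_SYSFS) -> int:
    """Write 1 to 'disable' for the named states on every CPU; return files written."""
    written = 0
    for cpu_dir in sorted(root.glob("cpu[0-9]*")):
        for name, state_dir in idle_states(cpu_dir).items():
            if name in names:
                (state_dir / "disable").write_text("1")
                written += 1
    return written


if __name__ == "__main__":
    # Dry run: list the idle states of cpu0 without writing anything.
    cpu0 = CPU_SYSFS / "cpu0"
    if (cpu0 / "cpuidle").exists():
        print({name: str(path) for name, path in idle_states(cpu0).items()})
```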

Also, looking at what changed from kernel v4.13.12 to v4.13.15, there are 5 or 6 commits that may cause such things:

- the 4 ACPI* commits touching and moving around the GPE logic
- one commit touching the TSC
- one commit touching EDAC AMD (but this one basically just moves a 'die on UC errors' check from family 15h CPUs to all CPUs)

If your time permits, can you give that a try?

---

When memory interleave options are set to Auto, the memory training firmware will decide how to configure interleaving. If every channel is populated with an identical size / type of DIMM, interleave will be set to channel, which offers the highest possible memory bandwidth. If every other channel is populated with an identical size / type of DIMM, interleave will be set to channel pair.

Assuming your system has one DIMM per channel, I would expect the Auto setting to result in the interleave set to channel anyway (meaning changing this in BIOS should not make a difference). If it does make a difference, that suggests the memory training may have experienced some issues. This will typically show up if you run numactl -H - one or more nodes will have less than the expected amount of memory. You can also run a Stream benchmark to test if the system is delivering the expected memory bandwidth.
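The numactl -H check can be scripted. The Python sketch below parses the "node N size: M MB" lines and flags nodes that come up noticeably short of what the DIMM population implies. The expected per-node size and the 5% tolerance (to allow for firmware-reserved memory) are assumptions you set from your own dmidecode output. On EPYC each die is its own NUMA node by default, so with only a few DIMMs installed some nodes may legitimately show 0 MB.

```python
from __future__ import annotations

import re

NODE_SIZE = re.compile(r"^node (\d+) size: (\d+) MB$", re.MULTILINE)


def node_sizes_mb(numactl_output: str) -> dict[int, int]:
    """Extract {node: size_in_MB} from `numactl -H` output."""
    return {int(node): int(mb) for node, mb in NODE_SIZE.findall(numactl_output)}


def short_nodes(sizes: dict[int, int], expected_mb: int, tolerance: float = 0.05) -> list[int]:
    """Nodes reporting more than `tolerance` less memory than expected."""
    return [node for node, mb in sorted(sizes.items()) if mb < expected_mb * (1 - tolerance)]
```

Feed it the captured text of numactl -H and the per-node size you expect, for example 65536 MB for two 32GB DIMMs behind one node.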

goodguy, were you able to test with SMT disabled or with processor.max_cstate=0? I noticed that you disabled the odd numbered CPUs. If you were doing this to disable the SMT siblings on each core, you should instead disable the top half of the CPUs (e.g. on a 2P 32 core system, disable CPUs 64-127). However, for the purposes of triaging your problem I would prefer you to disable SMT in BIOS if you are still able to do any further testing. Note if your application is compute / cache bound, it is likely you get little benefit from SMT anyway. What has been your experience with HyperThreading? If it helps you, our SMT should help you for the same reasons. If it does not help you, our SMT will not help you for the same reasons.
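The difference between the two ways of offlining CPUs is easy to check. This Python sketch assumes the numbering described above for a 2P system with 32 cores per socket, where CPU n and CPU n+64 are SMT siblings; on a real system the pairs come from /sys/devices/system/cpu/cpuN/topology/thread_siblings_list, and the SIBLINGS table here is hypothetical. It shows that offlining every odd CPU removes 32 whole cores and leaves SMT running on the other 32, whereas offlining CPUs 64-127 leaves exactly one thread on every core.

```python
from __future__ import annotations

from collections import Counter


def parse_cpu_list(text: str) -> list[int]:
    """Parse a sysfs CPU list such as '0,64' or '0-3,8' into integers."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        elif part:
            cpus.append(int(part))
    return cpus


def siblings_to_offline(sibling_lists: dict[int, str]) -> list[int]:
    """Keep the lowest-numbered thread of each core; return all other threads."""
    offline: set[int] = set()
    for text in sibling_lists.values():
        offline.update(sorted(parse_cpu_list(text))[1:])
    return sorted(offline)


def threads_online_per_core(sibling_lists: dict[int, str], offline) -> Counter:
    """Counter mapping 'threads still online' -> number of cores."""
    cores = {tuple(sorted(parse_cpu_list(t))) for t in sibling_lists.values()}
    return Counter(sum(cpu not in offline for cpu in core) for core in cores)


# Hypothetical topology: 64 cores, CPU n and n+64 share a core.
SIBLINGS = {cpu: f"{cpu % 64},{cpu % 64 + 64}" for cpu in range(128)}

if __name__ == "__main__":
    top_half = siblings_to_offline(SIBLINGS)   # CPUs 64..127
    odd = list(range(1, 128, 2))               # what the seq 1 64 loop offlines
    print("top half:", dict(threads_online_per_core(SIBLINGS, set(top_half))))
    print("odd CPUs:", dict(threads_online_per_core(SIBLINGS, set(odd))))
```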

---

@linux_monkey

I expected that too. However, with the settings on AUTO the box just resets, while after changing the RAM settings as I've explained, no resets occur anymore.

(BIOS bug maybe?)

I'll try to find some time tomorrow and do some more testing; however, since no log or error is written, it is very hard for me to guess what may be wrong.

I already compared memory maps from different kernels etc., but I don't have the tools to access the BIOS/firmware.

Regards


Can you check the output from numactl -H and make sure it matches what you expect based on the DIMM population and sizes in your dmidecode output.
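A minimal sketch of that check, assuming the usual `numactl -H` line format (`node 0 size: 32093 MB`) and an expected per-node size that you derive yourself from the dmidecode DIMM sizes. On a 7551 each socket exposes 4 NUMA nodes with 2 channels each, so with one 16 GB DIMM per channel you would expect roughly 32768 MB per node. The 5% tolerance is an assumption, since firmware and the kernel always reserve some memory. The sample text in the test is made up.

```python
import re
from typing import Dict

# Matches lines such as "node 3 size: 32253 MB" from `numactl -H`.
NODE_SIZE = re.compile(r"^node\s+(\d+)\s+size:\s+(\d+)\s+MB", re.MULTILINE)

def node_sizes_mb(numactl_h_output: str) -> Dict[int, int]:
    """Map NUMA node number -> reported size in MB."""
    return {int(n): int(mb) for n, mb in NODE_SIZE.findall(numactl_h_output)}

def short_nodes(sizes: Dict[int, int], expected_mb: int,
                tolerance: float = 0.05) -> Dict[int, int]:
    """Nodes reporting more than `tolerance` less memory than expected_mb."""
    floor = expected_mb * (1 - tolerance)
    return {n: mb for n, mb in sizes.items() if mb < floor}
```

Any node returned by `short_nodes` would point at the memory-training problem described earlier.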


numactl -H matches, but there is a difference in what the kernel memory map looks like with AUTO vs. manual settings.

(This is just a quick test; I will investigate more tomorrow.)

file t_mem (dmesg) = manual RAM settings

file t1_mem (dmesg) = all AUTO

snip

...

test@ant:~/MEM$ dmesg >dmesg1

test@ant:~/MEM$ head -n200 dmesg1 >t1_mem

test@ant:~/MEM$ diff -Naur t_mem t1_mem

--- t_mem 2018-01-04 19:59:04.669173605 +0100

+++ t1_mem 2018-01-04 19:59:57.140348064 +0100

@@ -131,17 +131,18 @@

[ 0.000000] found SMP MP-table at [mem 0x000fd3b0-0x000fd3bf] mapped at [ (ptrval)]

[ 0.000000] esrt: Reserving ESRT space from 0x00000000c72ceb18 to 0x00000000c72ceb50.

[ 0.000000] Scanning 1 areas for low memory corruption

-[ 0.000000] Base memory trampoline at [ (ptrval)] 98000 size 24576

+[ 0.000000] Base memory trampoline at [ (ptrval)] 59000 size 24576

[ 0.000000] Using GB pages for direct mapping

-[ 0.000000] BRK [0x13772fa000, 0x13772fafff] PGTABLE

-[ 0.000000] BRK [0x13772fb000, 0x13772fbfff] PGTABLE

-[ 0.000000] BRK [0x13772fc000, 0x13772fcfff] PGTABLE

-[ 0.000000] BRK [0x13772fd000, 0x13772fdfff] PGTABLE

-[ 0.000000] BRK [0x13772fe000, 0x13772fefff] PGTABLE

-[ 0.000000] BRK [0x13772ff000, 0x13772fffff] PGTABLE

-[ 0.000000] BRK [0x1377300000, 0x1377300fff] PGTABLE

-[ 0.000000] BRK [0x1377301000, 0x1377301fff] PGTABLE

-[ 0.000000] BRK [0x1377302000, 0x1377302fff] PGTABLE

+[ 0.000000] BRK [0x17142fa000, 0x17142fafff] PGTABLE

+[ 0.000000] BRK [0x17142fb000, 0x17142fbfff] PGTABLE

+[ 0.000000] BRK [0x17142fc000, 0x17142fcfff] PGTABLE

+[ 0.000000] BRK [0x17142fd000, 0x17142fdfff] PGTABLE

+[ 0.000000] BRK [0x17142fe000, 0x17142fefff] PGTABLE

+[ 0.000000] BRK [0x17142ff000, 0x17142fffff] PGTABLE

+[ 0.000000] BRK [0x1714300000, 0x1714300fff] PGTABLE

+[ 0.000000] BRK [0x1714301000, 0x1714301fff] PGTABLE

+[ 0.000000] BRK [0x1714302000, 0x1714302fff] PGTABLE

+[ 0.000000] BRK [0x1714303000, 0x1714303fff] PGTABLE

[ 0.000000] Secure boot could not be determined

[ 0.000000] RAMDISK: [mem 0x3cb72000-0x3e996fff]

[ 0.000000] ACPI: Early table checksum verification disabled

@@ -197,4 +198,3 @@

[ 0.000000] SRAT: PXM 2 -> APIC 0x2b -> Node 2

[ 0.000000] SRAT: PXM 3 -> APIC 0x30 -> Node 3

[ 0.000000] SRAT: PXM 3 -> APIC 0x31 -> Node 3

-[ 0.000000] SRAT: PXM 3 -> APIC 0x32 -> Node 3

....
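The most visible difference in that diff is the number of early page-table pages the kernel allocates from its BRK area while building the direct mapping (9 with manual settings, 10 with AUTO). A small sketch for counting them in two saved dmesg files; the function name is hypothetical, and a different count only reflects a different physical memory layout, not by itself a fault.

```python
import re
from typing import List, Tuple

# Matches e.g. "[ 0.000000] BRK [0x13772fa000, 0x13772fafff] PGTABLE"
BRK = re.compile(r"BRK \[(0x[0-9a-f]+), (0x[0-9a-f]+)\] PGTABLE")

def brk_pages(dmesg_text: str) -> List[Tuple[int, int]]:
    """(start, end) physical addresses of each early BRK page-table page."""
    return [(int(a, 16), int(b, 16)) for a, b in BRK.findall(dmesg_text)]

# Usage, with the files from the post:
#   manual = brk_pages(open("t_mem").read())
#   auto = brk_pages(open("t1_mem").read())
#   print(len(manual), len(auto))   # page counts under each BIOS setting
```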


I tried with SMT off and there are no resets so far. This ran for over 1 hr with no resets. This is only half as many logical CPUs, but surprisingly the throughput went up over 30%... so in SMT mode it is less than "half fast"? Maybe so.

I am still occasionally getting MCE errors, but I did go back to BIOS defaults for memory. I will retest with SMT off, MemClk 2400, interleave channel 512 and see if it is all better.

gg


Good to hear. Fingers crossed.

To understand the throughput difference with SMT on vs. off (and this is very much the same for HyperThreading), you have to understand how it works. There is a fixed set of resources in each physical core (integer execution units or ALUs, load/store units, L1 and L2 caches, TLBs, FPU/AVX2/SIMD, etc.). When SMT is enabled, a second, identical architectural CPU is exposed to the OS; however, both of these logical CPUs share the same physical resources. This is advantageous when your application is memory, disk or I/O bound, as you can run more threads in parallel to take advantage of otherwise idle physical core resources. In some cases this can represent up to a 50% gain in a severely memory or I/O bound scenario, but a gain in the 15% range is more likely. Conversely, when your application is limited by the physical core resources, the second logical CPU pretty much represents overhead that, in a worst-case scenario, can detract from overall performance. Consider also that the OS now has twice as many CPUs to schedule and keep track of.
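The trade-off above can be illustrated with a deliberately crude toy model (not an AMD performance model). Assume each thread running alone keeps the core's shared resources busy a fraction `u` of the time and is stalled on memory or I/O otherwise, that two threads stall independently, and that sharing costs a fixed fractional `overhead`. The names and the model itself are illustrative assumptions.

```python
def smt_gain(u: float, overhead: float = 0.0) -> float:
    """Toy estimate of the throughput gain from running 2 threads per core.

    u:        fraction of time a single thread keeps the core busy (0 < u <= 1)
    overhead: assumed fractional loss from sharing caches/TLBs and extra
              scheduling work (0 <= overhead < 1)
    """
    if not 0 < u <= 1:
        raise ValueError("u must be in (0, 1]")
    if not 0 <= overhead < 1:
        raise ValueError("overhead must be in [0, 1)")
    # With independent stalls, the core sits idle only when both threads stall.
    busy_two = 1 - (1 - u) ** 2
    return busy_two * (1 - overhead) / u - 1
```

With no overhead this reduces to a gain of `1 - u`: a thread that is busy only half the time (`u = 0.5`) gains about 50%, one busy 85% of the time gains about 15%, and a fully compute-bound thread (`u = 1`) gains nothing, so any sharing overhead makes SMT a net loss there.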

That said, while I'm happy that disabling SMT appears to give you more performance, the intent was to see whether disabling SMT might affect the rogue reset issue. Please do update us after more hours of hopefully successful testing.


On 4.15.0-rc6, run with pti=off; that should give you much better results.

That fix is only in git:

https://github.com/torvalds/linux/commit/694d99d40972f12e59a3696effee8a376b79d7c8.patch
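To confirm that a running kernel was actually booted with PTI disabled, you can check the kernel command line for either of the two spellings the kernel accepts (`pti=off` or `nopti`). A minimal sketch; the function names are hypothetical.

```python
from pathlib import Path

def pti_disabled_on_cmdline(cmdline: str) -> bool:
    """True if the kernel command line requests PTI off ("pti=off" or "nopti")."""
    tokens = cmdline.split()
    return "nopti" in tokens or "pti=off" in tokens

def current_cmdline(path: str = "/proc/cmdline") -> str:
    """Command line of the running kernel (Linux only)."""
    return Path(path).read_text()

# Usage on the test machine:
#   print(pti_disabled_on_cmdline(current_cmdline()))
```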

Regards


Response to abucodonosor:

Update on bisecting which Fedora kernel the resets/failures start to occur with:

| Each test was less than 1 hour long. I started with older Fedora kernels and worked towards the present deliverable.

| 4.8.14 ok, 4.11.12 ok, 4.13.12 ok, 4.13.15 fails, 4.14.7 fails.

After more testing this morning, this conclusion looks less solid than originally thought.

4.13.12 kernel THIS TIME FAILED in about a 1-hour test

4.13.13 kernel failed twice in a 1-hour run

4.13.13 kernel failed in about 9 minutes

It does seem that the more up-to-date distros fail more frequently.

I don't think going further down the kernel-version path is going to help isolate the cause, since it may take an exact set of circumstances to trigger the reset.

Perhaps, once the problem is root-caused, that will also explain why.
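Why a single passing run cannot mark a kernel as good when the failure is intermittent: if resets are modeled as a Poisson process (an assumption) with some mean time to reset, the chance of a bad kernel surviving a test is exponential in the test length. The mean times used below are hypothetical, not measured.

```python
import math

def p_survive(test_minutes: float, mean_minutes_to_reset: float) -> float:
    """Probability of seeing no reset during the test, assuming resets
    arrive as a Poisson process with the given mean time between them."""
    return math.exp(-test_minutes / mean_minutes_to_reset)

def minutes_needed(mean_minutes_to_reset: float,
                   confidence: float = 0.95) -> float:
    """Test length after which a 'bad' kernel would have passed with
    probability at most 1 - confidence."""
    return -mean_minutes_to_reset * math.log(1 - confidence)

# If a bad kernel resets on average every 30 minutes (hypothetical),
# it still passes a 1-hour test about 13.5% of the time, and you need
# roughly 90 minutes of clean running to be 95% sure it is good.
```

A kernel whose mean time to reset is a few hours could easily pass several 1-hour runs, which is consistent with 4.13.12 first passing and later failing.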

gg


I have a similar problem on Supermicro AS-1023US-TR4 and dual Epyc 7551.

Kernel 4.9.50 did not experience any random resets in 2 months (12 nodes), although it had other issues with loopback devices and Singularity with overlayfs, e.g.:

[1963815.193100] blk_update_request: I/O error, dev loop4, sector 3751200

With both 4.14.11 and 4.15.0_rc6, the random resets occur. 4.14.11 resets within 2-4 hours for my workload, although the uptime can be prolonged using these boot options:

nopti pcie_aspm=off rcu_nocbs=0-127

4.14.11 running on 3 machines did not survive more than 12h, while 4.15.0_rc6 is a bit better: one reset in 12h (4 machines running).
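The `rcu_nocbs=0-127` option uses the kernel's CPU-list syntax and covers all 128 logical CPUs of a dual 7551 (2 sockets x 32 cores x 2 threads). A minimal sketch for splitting such a boot line and expanding the list; the helper names are hypothetical, and the stride form of CPU lists (e.g. `0-127:2/4`) is deliberately not handled.

```python
from typing import Dict, List, Optional

def boot_params(cmdline: str) -> Dict[str, Optional[str]]:
    """Split a kernel command line into {name: value}; bare flags map to None."""
    params: Dict[str, Optional[str]] = {}
    for tok in cmdline.split():
        name, sep, value = tok.partition("=")
        params[name] = value if sep else None
    return params

def parse_cpulist(spec: str) -> List[int]:
    """Expand a simple CPU list such as "0-127" or "0,2,4-7"."""
    cpus: List[int] = []
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-", 1)
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus

params = boot_params("nopti pcie_aspm=off rcu_nocbs=0-127")
offloaded = parse_cpulist(params["rcu_nocbs"] or "")  # CPUs 0..127
```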


Update:

In my previous response I made a typo in the kernel findings; the corrected entry is in bold:

4.13.12 kernel THIS TIME FAILED in about a 1-hour test

4.13.13 kernel failed twice in a 1-hour run

**4.15.0_RC6** kernel failed in about 9 minutes

----------------------------------------------------------------------------

Latest test results:

BIOS at 1.0b

Kernel 4.15.0-0.rc6.git0.1.fc28.x86_64

BIOS settings at all defaults except "GLOBAL C-STATE = disabled" (note SMT is ON!)

Successfully ran for a little over 10 hours without any resets, looping the render-farm test that, with all defaults, generally fails within 43 seconds to 23 minutes.

In summary: with SMT OFF, I get no resets AND the computer runs measurably faster.

Similarly, with SMT ON and Global C-state OFF, I get no resets. Either option seems to work.

I would recommend that the BIOS defaults be updated to avoid this issue, but I can't say which would be the best choice. It probably depends on the use scenario.


Yes, that is what I wrote in https://community.amd.com/message/2841246#comment-2841246; maybe you didn't see that.

I also set the Determinism Slider to Performance.

Also, since you want full CPU power, install the cpupower utility and then run this:

sudo cpupower frequency-set -g performance

sudo cpupower idle-set -d 1

sudo cpupower idle-set -d 2

(and see how your cores run at full speed when compiling etc.; even Turbo runs near max with 4.15-rcX)
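`cpupower idle-set -d N` disables idle state N, which shows up in each CPU's cpuidle sysfs directory as a `disable` file containing 1. A minimal sketch for verifying the result after running the commands above, assuming the standard `/sys/devices/system/cpu/cpuN/cpuidle/stateM/{name,disable}` layout; the state names you see depend on the idle driver in use, and the function name is hypothetical.

```python
from pathlib import Path
from typing import Dict, Tuple

def idle_states(cpu: int = 0,
                root: str = "/sys/devices/system/cpu") -> Dict[int, Tuple[str, bool]]:
    """Return {state_index: (name, disabled)} for one CPU's cpuidle states."""
    base = Path(root) / f"cpu{cpu}" / "cpuidle"
    states: Dict[int, Tuple[str, bool]] = {}
    # Sort numerically so that state10 comes after state2.
    for d in sorted(base.glob("state[0-9]*"), key=lambda p: int(p.name[5:])):
        name = (d / "name").read_text().strip()
        disabled = (d / "disable").read_text().strip() == "1"
        states[int(d.name[5:])] = (name, disabled)
    return states

# Usage: after `cpupower idle-set -d 1` and `-d 2`, states 1 and 2
# should report disabled=True on every CPU.
#   print(idle_states(0))
```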

Maybe someone @AMD wants to look at idle and cpufreq in Linux, since we now use the generic ACPI drivers for EPYC?

Anyway, the question is what causes the resets. It resets like a 'reset from watchdog', even though the watchdog is (1) disabled in BIOS and (2) set to 'disable (RESET)' on the motherboard. If you ask the BMC for watchdog info, it reports 'reserved', not disabled.

The problem I have is that there is _NO_ watchdog driver in Linux for AMD. OK, there is SP5100, but that one is quite broken even on older platforms: it conflicts with SMBus drivers and won't work on EPYC anyway.

@AMD, are there any plans to add watchdog drivers for Linux?

Regards


Hi Goodguy,

Would you please provide detailed steps to replicate the failure? I'd like to see what I can find. Thanks.


You need to produce high load and I/O; how doesn't seem to matter.

As an example, I use 4 kernel trees and compile them all in a loop, 2 with -j$core_count and 2 with -j$((2*core_count)). Also, one job compresses and decompresses 2GB of data using xz.

Just be sure you have Global C-state Control turned on in BIOS, and you'll hit the reset sooner or later.
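The recipe above can be scripted roughly as follows. This is a sketch under stated assumptions, not the poster's actual script: the tree paths and data file are hypothetical, each tree is assumed to be already configured (e.g. with a `.config`), and each build runs `make clean` first so that repeated iterations keep doing real work.

```python
import os
import shlex
import subprocess
from typing import List, Optional

def build_stress_jobs(trees: List[str], xz_file: str,
                      cores: Optional[int] = None) -> List[List[str]]:
    """Commands mirroring the recipe: the first half of the trees build with
    -j<cores>, the rest with -j<2*cores>, plus an xz compress/decompress job."""
    n = cores or os.cpu_count() or 1
    jobs: List[List[str]] = []
    for i, tree in enumerate(trees):
        j = n if i < len(trees) // 2 else 2 * n
        t = shlex.quote(tree)
        jobs.append(["sh", "-c", f"make -C {t} clean && make -C {t} -j{j}"])
    f = shlex.quote(xz_file)
    # -k keeps the original during compression; -d -f restores it, replacing
    # the old copy and removing the .xz, so the job can loop indefinitely.
    jobs.append(["sh", "-c", f"xz -T0 -k -f {f} && xz -d -f {f}.xz"])
    return jobs

def run_forever(jobs: List[List[str]]) -> None:
    """Start all jobs in parallel, wait for them, and repeat until killed."""
    while True:
        procs = [subprocess.Popen(cmd) for cmd in jobs]
        for p in procs:
            p.wait()

# Hypothetical usage:
#   run_forever(build_stress_jobs(["/src/linux-a", "/src/linux-b",
#                                  "/src/linux-c", "/src/linux-d"],
#                                 "/tmp/2GB.bin"))
```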

Regards