OpenGL UBO performance issue XOR fence sync problem

This topic discusses two separate problems:

- UBO with glBufferSubData is extremely slow

- sync objects seem to ignore that the buffer I would like to reuse is no longer in use by the GPU (same thing happens on Intel, but with somewhat different effects).

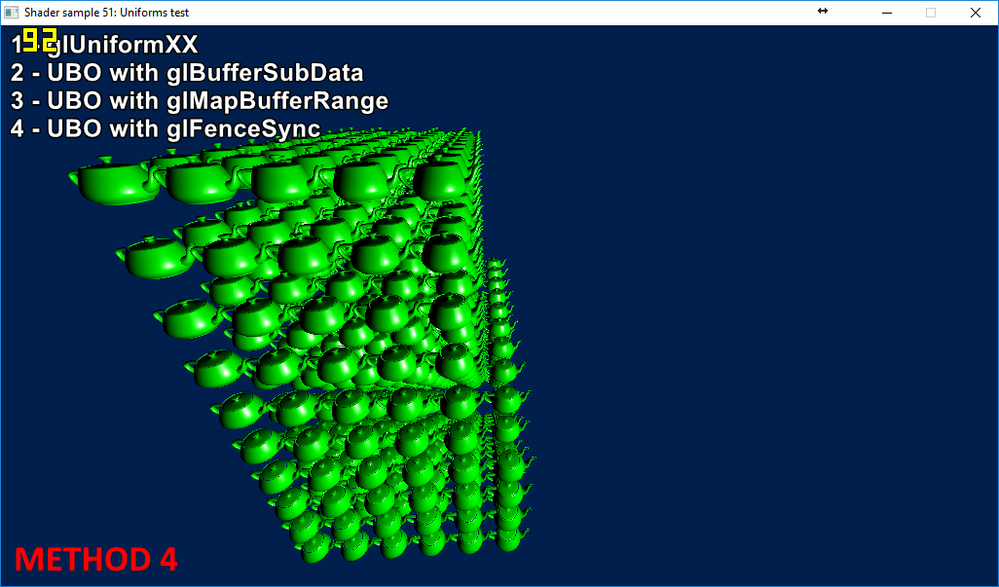

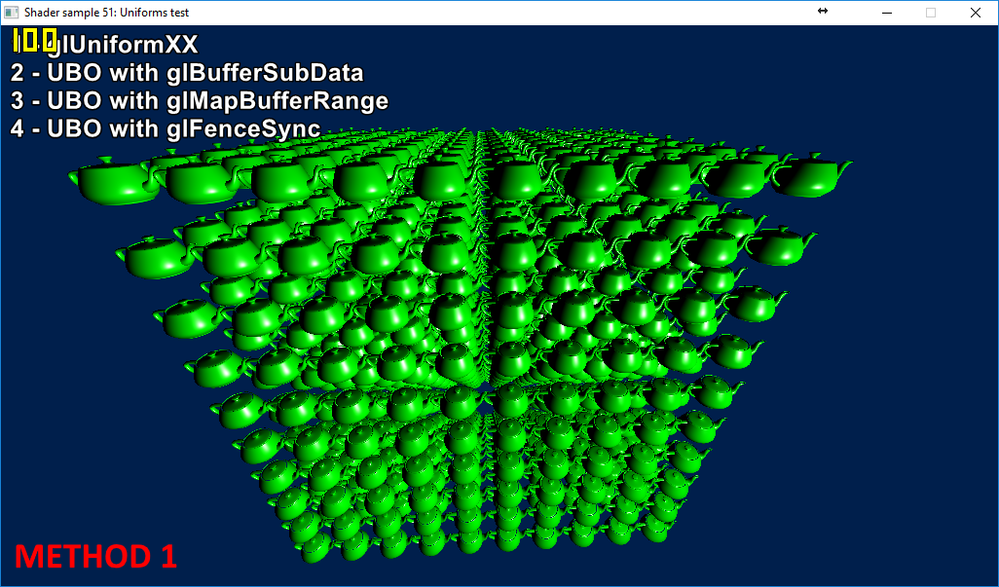

First of all, screenshots from the expected and actual results:

And some measurements on two different cards (I refer to the methods by their number):

Intel HD 4600:

1 - 23 fps

2 - 23 fps

3 - 7 fps

4 - 23 fps and the above bug (camera movement reveals that the missing meshes are drawn to the same place as the visible ones)

AMD R7 360:

1 - 103 fps

2 - 5 fps

3 - 46 fps

4 - 96 fps and the above bug (missing objects are flickering)

Repro source code: Dropbox - FOR_AMD_4.zip

I have two questions:

- why the (huge) difference between methods 2 and 3 with respect to Intel/AMD?

- why are the other teapots missing in method 4 (unless I orphan, which drops performance again)?

UPDATE: the missing teapots are due to frame queueing, which is easily fixed. However, nVidia cards solve it naturally...

Hey Steven, thank you for your post.

4) sounds like a potential app issue, given that you're seeing glitched visuals on more than one GL implementation. Have you tried running your application with debug callbacks enabled? They might help in this case.

As to the other perf difference question you raised, I do not really look after our GL stack so all I can do is to ask one of our GL engineers to get back to you with some further info, once they find some time to look into your case. Thanks.

Hi,

yes, 4) was an "app issue", because I didn't take frame queueing into account (the fix is pretty easy). However, in an OpenGL app I shouldn't even need to be aware of frame queueing, and nVidia cards hide it pretty well (the problem doesn't surface there).
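The thread does not show the actual fix. One common way to account for queued frames is to give each in-flight frame its own region of the buffer and to reuse a region only once the fence issued after its draw calls has signaled. The following is a CPU-only model of that bookkeeping, not the poster's code: `FencedRing` and its method names are hypothetical, no OpenGL calls are made, and the comments only mark where `glFenceSync` and `glClientWaitSync` would sit in a real renderer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU-only model of a ring of buffer regions guarded by fences.
class FencedRing {
public:
    explicit FencedRing(std::size_t regions) : in_flight_(regions, false) {}

    // Try to obtain the region for the next frame. Returns false while the
    // GPU may still be reading it (real code: glClientWaitSync on the
    // region's fence returned GL_TIMEOUT_EXPIRED), so the caller must wait.
    bool try_acquire(std::size_t& region) const {
        if (in_flight_[next_]) return false;
        region = next_;
        return true;
    }

    // Call after issuing the draw calls that read the region (real code:
    // store glFenceSync(GL_SYNC_GPU_COMMANDS_COMPLETE, 0) for this region).
    void submit(std::size_t region) {
        assert(region == next_ && !in_flight_[region]);
        in_flight_[region] = true;
        next_ = (next_ + 1) % in_flight_.size();
    }

    // Models the fence for this region becoming signaled.
    void gpu_finished(std::size_t region) { in_flight_[region] = false; }

private:
    std::vector<bool> in_flight_;  // true while the GPU may read the region
    std::size_t next_ = 0;         // region the next frame will write
};
```

With three regions, the CPU can run up to three frames ahead; a fourth `try_acquire` fails until the oldest region is reported finished, which is the point where a real application would block or skip instead of overwriting data that is still being drawn.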

Debug output doesn't work on AMD cards (perhaps you can tell me why...). It does work on Intel, though it doesn't report anything interesting. So far the only card where I have seen debug output working is nVidia.

As far as I can tell, debug output generated via GL_KHR_debug is expected to work on our drivers, as we do support the extension and pass relevant conformance tests (see VK-GL-CTS/gl4cKHRDebugTests.cpp at bf297b8eec02e4cdfcbd399b33b720a81f81e5dd · KhronosGroup/VK-GL-CTS... ).

Well, I query GL_ARB_debug_output and it returns 1... Is there a difference between that and KHR_debug? Because the former doesn't output anything in my real app (not in the test program, of course, as I didn't implement the callback there).
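For context on the question: these are two separate extensions. GL_ARB_debug_output exposes ARB-suffixed entry points such as `glDebugMessageCallbackARB`, while GL_KHR_debug (core since OpenGL 4.3) exposes unsuffixed ones on desktop GL, such as `glDebugMessageCallback`. A rough sketch of choosing between them from the reported extension list follows; `DebugApi` and `pick_debug_api` are hypothetical names, and the list is passed in as plain strings so the snippet runs without a GL context.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical tag for which set of debug entry points to load.
enum class DebugApi { None, ArbDebugOutput, KhrDebug };

// Pick a debug-output API from the extension names the driver reports.
// In a real app the list would come from looping over
// glGetStringi(GL_EXTENSIONS, i) for i < GL_NUM_EXTENSIONS.
DebugApi pick_debug_api(const std::vector<std::string>& extensions) {
    bool has_arb = false;
    bool has_khr = false;
    for (const std::string& name : extensions) {
        if (name == "GL_KHR_debug") has_khr = true;
        else if (name == "GL_ARB_debug_output") has_arb = true;
    }
    if (has_khr) return DebugApi::KhrDebug;        // glDebugMessageCallback
    if (has_arb) return DebugApi::ArbDebugOutput;  // glDebugMessageCallbackARB
    return DebugApi::None;
}
```

If the extension is reported but no messages arrive, it may also be worth checking that the context was created with the debug flag, since drivers are not required to produce messages in a non-debug context.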