Archives Discussions

- AMD Community

- Communities

- Developers

- Devgurus Archives

- Archives Discussions

- clEnqueueMapBuffer in parallel

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

clEnqueueMapBuffer in parallel

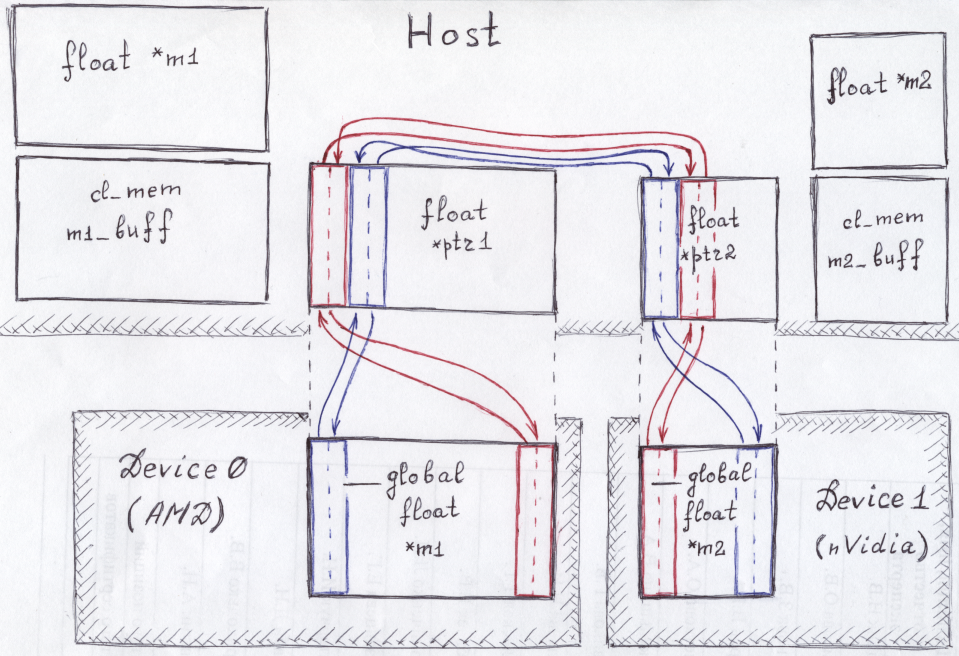

I have two buffers which are processed at two GPUs (AMD and nVidia) in parallel (parallelized with OpenMP),

Part 1:

Active OpenCL platform : AMD Accelerated Parallel Processing

Active OpenCL Device : Cayman OpenCL 1.2 AMD-APP (1800.11)

Part 2:

Active OpenCL platform : NVIDIA CUDA

Active OpenCL device : GeForce GTX 560 Ti OpenCL 1.1 CUDA

The buffers are created with the following flags: CL_MEM_READ_WRITE | CL_MEM_COPY_HOST_PTR.

On each iteration I need to exchange edge values between them. In order to do this, I would like to use map/unmap operations in parallel (as I know, this is faster than read/write).

It should work like this:

Red and blue colors correspond to the different OpenMP threads. Each OpenMP thread should perform map of the corresponding region, memcpy between mapped edges, and unmap to the devices.

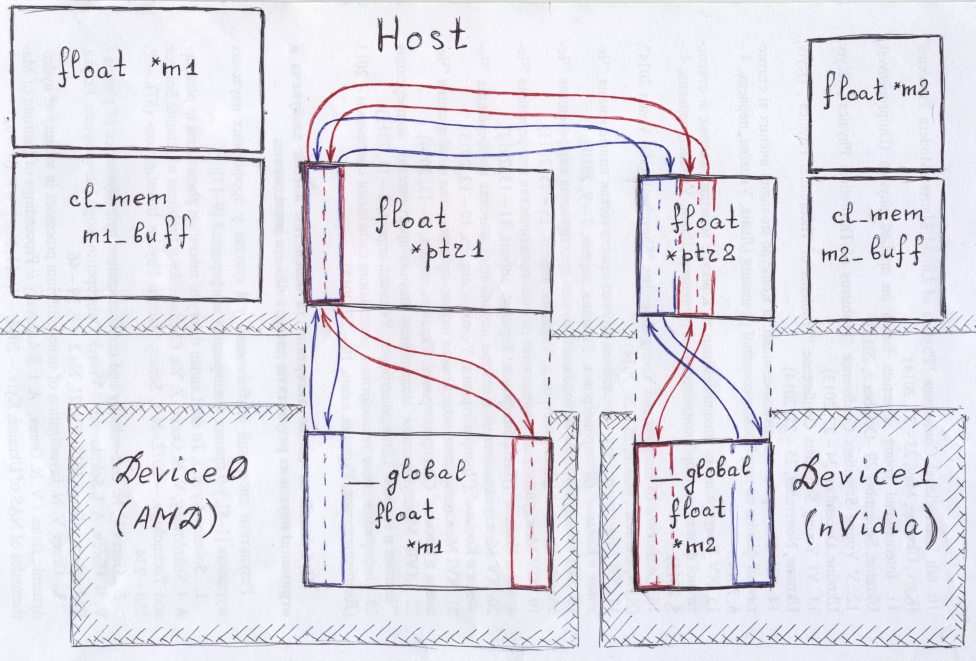

But actually it works like this,

From AMD GPU different parts of data are mapped into the same addresses, so when the program runs in parallel, it leads to wrong result. The nVidia card works correctly. The following sequence of the pointers to the mapped memory for both devices (in the brackets -- number of the device) illustrates this behavior:

[0] 0x7f362a7e3000

[1] 0x7f363c1f5000

>>> [1] 0x7f363c210000

>>> [0] 0x7f362a7e3000

[1] 0x7f363c22b000

[0] 0x7f362a7e3000>>> [1] 0x7f363c246000

>>> [0] 0x7f362a7e3000[1] 0x7f363c261000

[0] 0x7f362a7e3000>>> [1] 0x7f363c27c000

>>> [0] 0x7f362a7e3000[1] 0x7f363c297000

[0] 0x7f362a7e3000>>> [1] 0x7f363c2b2000

>>> [0] 0x7f362a7e3000

[0] 0x7f362a7e3000

[1] 0x7f363c2cd000>>> [0] 0x7f362a7e3000

>>> [1] 0x7f36464aa000

[1] 0x7f36464c5000

[0] 0x7f362a7e3000>>> [1] 0x7f36464e0000

>>> [0] 0x7f362a7e3000

[1] 0x7f36464fb000

[0] 0x7f362a7e3000

>>> [1] 0x7f3646516000

>>> [0] 0x7f362a7e3000[1] 0x7f3646531000

[0] 0x7f362a7e3000

>>> [1] 0x7f364654c000

>>> [0] 0x7f362a7e3000

[1] 0x7f3646567000

[0] 0x7f362a7e3000>>> [1] 0x7f3646582000

>>> [0] 0x7f362a7e3000

[0] 0x7f362a7e3000

[1] 0x7f36463aa000

>>> [1] 0x7f36463c5000

>>> [0] 0x7f362a7e3000

[1] 0x7f36463e0000

[0] 0x7f362a7e3000>>> [1] 0x7f36463fb000

>>> [0] 0x7f362a7e3000

[1] 0x7f3646416000

[0] 0x7f362a7e3000

>>> [1] 0x7f3646431000>>> [0] 0x7f362a7e3000

">>>" signs the "left" sub-buffer in the device (i.e. blue sub-buffer at AMD GPU and red at nVidia). It is seen that from 0-th device data are mapped into the same address, independently of the initial position in the GPU memory.

Is this mapping to the same pointer a bug in the driver, or this is made purposely?

If this is purposely, is it possible to perform map/unmap operations in parallel somehow?

Thank you for help in advance.

Natalia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is there anyone who could help?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As a workaround maybe try guard mapping of the buffer with mutex so it is not performed in parallel or map whole buffer at once and then run parallel on whole region.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for the response.

Yes, it does work sequentially, but I would like to accelerate code by exchanging these edges in parallel... In case of large buffers, map of the whole buffer also slows down program.

Now I do this exchange as follows:

map red sub-buffers in parallel --> exchange data --> unmap in parallel -->

map blue sub-buffers in parallel --> exchange data --> unmap in parallel,

but this is not what I want. It will be also not very efficient in case of more than 2 buffers.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Natalia,

Could you please share the relevant code which manifests parallel mapping/unmapping of two or more sub-buffers is not working on AMD platform?

Regards,

Dipak

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Dipak!

Since actual code is too large I have written a simplified program which demonstrates this behavior. It creates two matrices, performs some actions with their components (just to do something), and performs exchange of edges. The last may be made sequentially or in parallel with OpenMP (defined by flag in the Makefile).

The relevant part of code is in run.cpp file:

float *ptr_rf, *ptr_lf;

int k, k_next;

size_t offset_k, offset_k_next;

size_t size = 2 * K * sizeof(float);

#ifdef USE_OPENMP

omp_set_num_threads(NT);

#pragma omp parallel private(ptr_rf, ptr_lf, k, k_next)

{

k = omp_get_thread_num();

#else

for (k = 0; k < NT; k++){

#endif

k_next = (k + 1) % NT;

offset_k = ((!k) ? (N - 2) : (M - 2)) * K * sizeof(float);

offset_k_next = 0;

ptr_rf = (float*)clEnqueueMapBuffer(queue

, m_buff , CL_TRUE, CL_MAP_WRITE, offset_k, size, 0, NULL, NULL, &err); #pragma omp barrier

printf(">>> [tid %i, matrix %i, ptr_rf] %p\t % f\n", k, k, ptr_rf, ptr_rf[0]);

ptr_lf = (float*)clEnqueueMapBuffer(queue[k_next], m_buff[k_next], CL_TRUE, CL_MAP_WRITE, offset_k_next, size, 0, NULL, NULL, &err);

#pragma omp barrier

printf(">>> [tid %i, matrix %i, ptr_lf] %p\t % f\n", k, k_next, ptr_lf, ptr_lf[0]);

memcpy(ptr_rf + K, ptr_lf + K, K * sizeof(float));

memcpy(ptr_lf, ptr_rf, K * sizeof(float));

#pragma omp barrier

err = clEnqueueUnmapMemObject(queue

, m_buff , (void*)ptr_rf, 0, NULL, NULL); #pragma omp barrier

err = clEnqueueUnmapMemObject(queue[k_next], m_buff[k_next], (void*)ptr_lf, 0, NULL, NULL);

}

As it turned out, it works properly on Radeon HD 6770 (Juniper). Examples of correct and incorrect results are here.

If buffers are created with CL_MEM_USE_HOST_PTR instead of CL_MEM_COPY_HOST_PTR, it works correctly, but it is not what I need.

Regards,

Natalia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Natalia for sharing the code. I'll check and get back to you.

BTW, could you please let me know your OS and catalyst driver version?

Regards,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Dipak!

I have tried the following systems:

HD 7970, HD 5870: openSUSE 13.1 (kernel 3.12.53-40-desktop, fglrx 1800.11)

HD 6970, GTX 560Ti: openSUSE 12.3 (kernel 3.7.10-1.45-desktop, fglrx 1800.11)

HD 8750M, Intel Core i5-3230M: Windows 8.1 (Catalyst 1268.1, processor works correctly)

HD 6770, HD 7990, TITANs: Scientific Linux 6.6 (kernel 2.6.32-504.23.4.el6.x86_64, fglrx 1800.5).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

FYI:

clEnqueueMapBuffer says:

If a memory object is currently mapped for writing, the application must ensure that the memory object is unmapped before any enqueued kernels or commands that read from or write to this memory object or any of its associated memory objects (sub-buffer or 1D image buffer objects) or its parent object (if the memory object is a sub-buffer or 1D image buffer object) begin execution; otherwise the behavior is undefined.

A memory object is considered as mapped if there are one or more active mappings for the memory object irrespective of whether the mapped regions span the entire memory object.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is for OpenCL 2.0. But non of mentioned by me AMD devices supports this standard. CL_PLATFORM_VERSION is OpenCL 2.0 AMD-APP (. . .), however, "OpenCL 2.0" stays just formally here, doesn't it?

Or this comment was simply explicitly added into the newer specification, while it applies to all standards?

Anyway, why this parallel mapping does systematically work with old Radeon HD 6770 and HD 5870?

And why does setting CL_MEM_USE_HOST_PTR flag help?

Is it true that mapping of independent parts of the same buffer cannot be implemented in parallel in principle on AMD GPUs (at least with OpenCL 2.0 standard)?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Natalia,

After some experiments, it seems that multiple mapping (with different offset) of same buffer is working as expected if the buffer is a zero-copy memory object (i.e. created with CL_MEM_USE_HOST_PTR or CL_MEM_ALLOC_HOST_PTR or CL_MEM_USE_PERSISTENT_MEM_AMD). Otherwise, i.e. when it's a normal device buffer, the buffer is mapped to same memory location irrespective of "offset" or "size" for both read and write. As I checked, the following code gave me similar observation as yours.

float *mapPtr1 = (float *)queue.enqueueMapBuffer(buffer, CL_TRUE, CL_MAP_READ, 0, size); float *mapPtr2 = (float *)queue.enqueueMapBuffer(buffer, CL_TRUE, CL_MAP_READ, size / 2, size / 2);

for (auto i = 0; i < N; i++) std::cout << mapPtr1 << " ";

for (auto i = 0; i < N; i++) std::cout << mapPtr2 << " ";

queue.enqueueUnmapMemObject(buff, mapPtr1);

queue.enqueueUnmapMemObject(buff, mapPtr2);

Anyway, I need to check with the runtime team before I can share more information regarding this.

BTW, in your case you may try CL_MEM_USE_PERSISTENT_MEM_AMD flag as an alternative of CL_MEM_USE_HOST_PTR. Because it will give you better performance if the buffer is heavily accessed by the GPU.

Regards,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

HI Natalia

As I've come to know, this is indeed a runtime bug and they are working on the fix.

Regards,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Dipak!

Thank you. I will wait for fixes.

Relative CL_MEM_USE_PERSISTENT_MEM_AMD, it doesn't work at any available for me machine. I have included CL/cl_ext.h, the code is compiled successfully, however, the correspondent clCreateBuffer returns -37-th error... I think, I will try to read some more about this... If everything is bad, I will write in a corresponding topic (I saw some of them, but they didn't help with -37-th error).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Update:

The issue has been resolved in the latest internal builds and the fix is expected to be released soon.