Driving the Industry into the Exascale Era with AMD Instinct™ Accelerators

AMD Instinct™ accelerators have been gaining traction and publicity thanks to their growing adoption across major HPC centers. This is evident from the recently published Top500 list of the world's supercomputers from Top500.org, where AMD Instinct accelerators power the fastest supercomputer in the world.

Driving much of the success of AMD Instinct accelerators is the ability to deliver outstanding performance at scale. The Department of Energy selected AMD Instinct accelerators and AMD EPYC™ CPUs for two of the three planned exascale supercomputers in the United States. That choice reflects high confidence in both the product capability and the ability of AMD to deliver exceptional performance and user experience for a broad set of HPC users.

The Frontier supercomputer at Oak Ridge National Laboratory, powered by AMD Instinct MI250X accelerators and AMD EPYC CPUs, was the first supercomputer to officially pass the exascale barrier and, according to the latest Top500 list, is the fastest in the world. AMD Instinct MI250X accelerators also power the #3 supercomputer, LUMI at CSC in Finland, and the #10 supercomputer, Adastra at CINES. [1] For reference, systems using AMD Instinct MI250X GPUs and EPYC CPUs deliver over 30% of all FLOPs on the June ’22 Top500 list. [1] This strong preference for AMD Instinct GPUs among HPC users is driven by several factors. Key among them are:

- A dedicated compute architecture (AMD CDNA™ 2) targeted at HPC and AI/ML use cases that not only provides roughly 4.9x the peak theoretical FP64 FLOPs of the other GPU vendor but also delivers about 96 GFLOPs/W, making AMD Instinct MI250X the most powerful and most efficient GPU for HPC acceleration. [3]

- An industry-first open and portable software stack for GPUs called ROCm™ that easily unlocks the power of AMD Instinct accelerators for both software developers and users. We strongly believe that an open and portable ecosystem is critical for unrestricted research in the HPC community.

- AMD HIP, an open-source C++ runtime API and kernel language that expands the reach of GPU-optimized code. HIP is the native format for the AMD ROCm platform, compiles seamlessly with the HIP/Clang compiler, and is designed to be portable across GPU platforms. AMD supports the broad HPC user base with easy-to-use tools for porting CUDA® and OpenACC codes to HIP, which opens up researchers’ choice of accelerator hardware.
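To give a feel for why porting CUDA code to HIP is often mostly mechanical, here is a toy Python sketch that renames a few CUDA runtime calls to their HIP counterparts. It is an illustration only and not AMD's porting tool: the function name `toy_hipify` and the sample snippet are hypothetical, and a real port should use the HIPIFY tools shipped with ROCm, which handle far more than simple renaming.

```python
import re

# Toy illustration only: real ports should use AMD's HIPIFY tools.
# The header mapping below covers just the one header used in the sample.
HEADER_MAP = {"cuda_runtime.h": "hip/hip_runtime.h"}


def toy_hipify(source: str) -> str:
    """Rename CUDA runtime identifiers to their HIP counterparts (hypothetical helper)."""
    for cuda_header, hip_header in HEADER_MAP.items():
        source = source.replace(cuda_header, hip_header)
    # cudaMalloc -> hipMalloc, cudaMemcpyHostToDevice -> hipMemcpyHostToDevice, ...
    return re.sub(r"\bcuda([A-Z]\w*)", r"hip\1", source)


# Hypothetical CUDA host-side snippet, held as text.
cuda_snippet = """#include <cuda_runtime.h>
cudaMalloc(&d_x, n * sizeof(double));
cudaMemcpy(d_x, h_x, n * sizeof(double), cudaMemcpyHostToDevice);
cudaFree(d_x);
"""

hip_snippet = toy_hipify(cuda_snippet)
print(hip_snippet)
```

The renamed calls (`hipMalloc`, `hipMemcpy`, `hipFree`) are real HIP runtime functions; everything else in the sketch is an assumption made for illustration.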

AMD made a major design shift in GPU architecture starting with AMD Instinct MI100 products, with a new focus on compute-intensive use cases like HPC and AI/ML training. The needs of HPC and AI/ML were shifting rapidly, and AMD was the first GPU vendor to respond to this trend with a dedicated GPU architecture. The result was the AMD CDNA™ architecture.

The latest AMD CDNA™ 2 architecture builds on the tremendous core strengths of the original AMD CDNA architecture to deliver a leap forward in accelerator performance and usability while using a similar process technology. The AMD CDNA architecture is an excellent starting point for a computational platform. However, to deliver exascale performance the architecture was overhauled with enhancements from the compute units to the memory interface, with a particular emphasis on radically improving the communication interfaces for full system scalability.

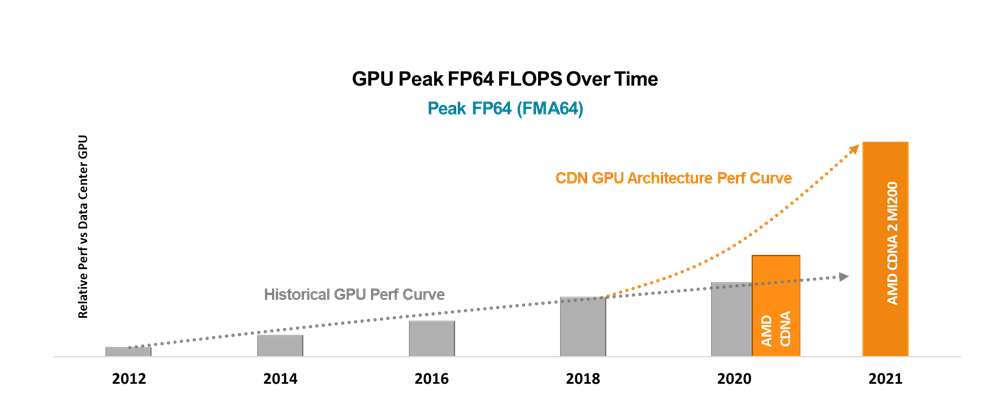

The below image puts in stark contrast the benefits of the approach to building a dedicated architecture like AMD CDNA. The performance improvement of AMD Instinct GPUs over time and its performance in Flops vs the other vendor validates the reason for the strong interest from key HPC users.

Figure 1: Historical FP64 GPU performance over time (For illustration only).
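The peak FP64 comparison can be checked with simple arithmetic from the figures quoted in the endnotes (MI200-40). The sketch below assumes that efficiency is computed as peak theoretical FP64 throughput divided by the listed board power; the source does not spell out the formula, so treat that as an assumption.

```python
# Peak theoretical figures quoted in the endnotes (MI200-40).
MI250X_FP64_VECTOR_TFLOPS = 47.9
MI250X_FP64_MATRIX_TFLOPS = 95.7
MI250X_POWER_WATTS = 500
A100_FP64_VECTOR_TFLOPS = 9.7
A100_FP64_TENSOR_TFLOPS = 19.5
A100_POWER_WATTS = 400


def gflops_per_watt(tflops: float, watts: float) -> float:
    """Assumed efficiency metric: peak GFLOPS divided by board power."""
    return tflops * 1000.0 / watts


vector_ratio = MI250X_FP64_VECTOR_TFLOPS / A100_FP64_VECTOR_TFLOPS
matrix_ratio = MI250X_FP64_MATRIX_TFLOPS / A100_FP64_TENSOR_TFLOPS
mi250x_efficiency = gflops_per_watt(MI250X_FP64_VECTOR_TFLOPS, MI250X_POWER_WATTS)
a100_efficiency = gflops_per_watt(A100_FP64_VECTOR_TFLOPS, A100_POWER_WATTS)

print(f"FP64 vector ratio: {vector_ratio:.2f}x")
print(f"FP64 matrix ratio: {matrix_ratio:.2f}x")
print(f"MI250X: {mi250x_efficiency:.1f} GFLOPS/W, A100: {a100_efficiency:.2f} GFLOPS/W")
```

These are peak theoretical rates, so they bound what an application can reach rather than predict delivered performance.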

The AMD ROCm software stack was built on three key principles. First, accelerated computing requires a platform that unifies processors and accelerators when it comes to system resources. While they play different roles for different workloads, they need to work together effectively and have equal access to resources such as memory. Second, a rich ecosystem of software libraries and tools should enable portable and performant code that can take advantage of new capabilities. Last, an open-source approach empowers vendors, customers, and the entire community.

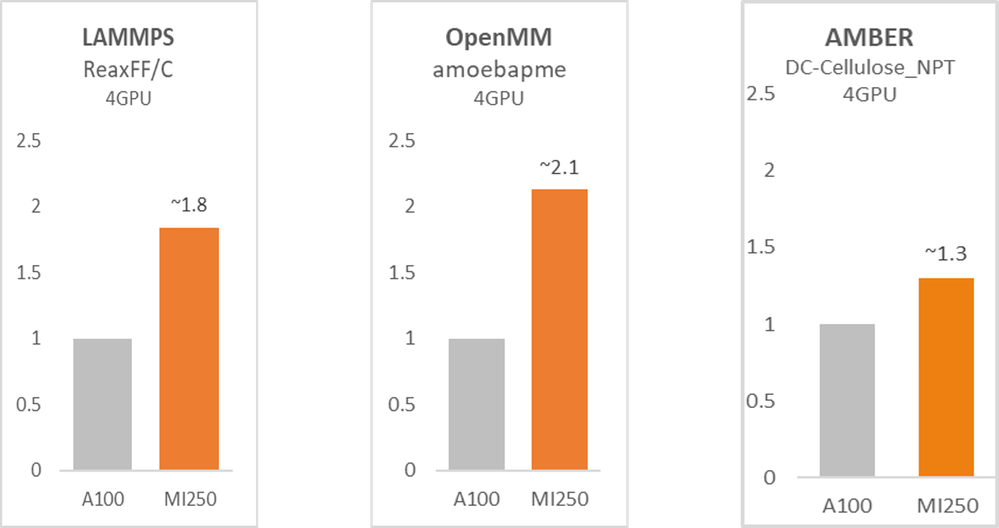

A strong hardware and software foundation gives AMD Instinct accelerators an ideal launchpad to accelerate HPC applications across several verticals. The AMD Infinity Hub is a growing collection of HPC and AI frameworks and HPC application containers across domains such as Life Science, Physics, Quantum Chemistry, and more. To showcase the performance of AMD Instinct accelerators, we selected a set of HPC applications and compared the results against another GPU vendor. Performance was measured on a Gigabyte server with four AMD Instinct™ MI250 GPUs and compared against the other data center accelerator vendor’s publicly released data from its benchmark site, or against AMD testing lab results where published benchmarks were not available.

We believe results should reflect actual delivered performance, replicable by third parties for validation and should be

All performance results for AMD Instinct MI250 were calculated as a geometric mean (geomean) across multiple datasets and compared with published results from the other vendor. The exceptions are OpenMM and HPCG, for which the comparison was performed in the AMD testing labs, again using a geomean across multiple datasets.
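As a minimal sketch of how a geomean summary across datasets works, the Python snippet below computes per-dataset speedups and then their geometric mean. The dataset names and throughput numbers are made up for illustration and are not measured results; the exact procedure AMD used is not described beyond "geomean across multiple datasets".

```python
from statistics import geometric_mean

# Hypothetical throughputs (e.g. ns/day) per dataset; NOT measured data.
system_a = {"small": 120.0, "medium": 40.0, "large": 9.0}
system_b = {"small": 100.0, "medium": 32.0, "large": 6.0}

# Per-dataset speedup of system A over system B.
speedups = [system_a[name] / system_b[name] for name in system_a]

# Geometric mean of the speedups: the n-th root of their product.
geomean_speedup = geometric_mean(speedups)

# The same value falls out of dividing the two per-system geomeans.
ratio_of_geomeans = (
    geometric_mean(list(system_a.values())) / geometric_mean(list(system_b.values()))
)

print(f"per-dataset speedups: {speedups}")
print(f"geomean speedup: {geomean_speedup:.3f}")
```

A geometric mean is a common choice for summarizing ratios because no single dataset with a large absolute throughput dominates the result, and the summary is the same whether the ratios or the raw scores are averaged first.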

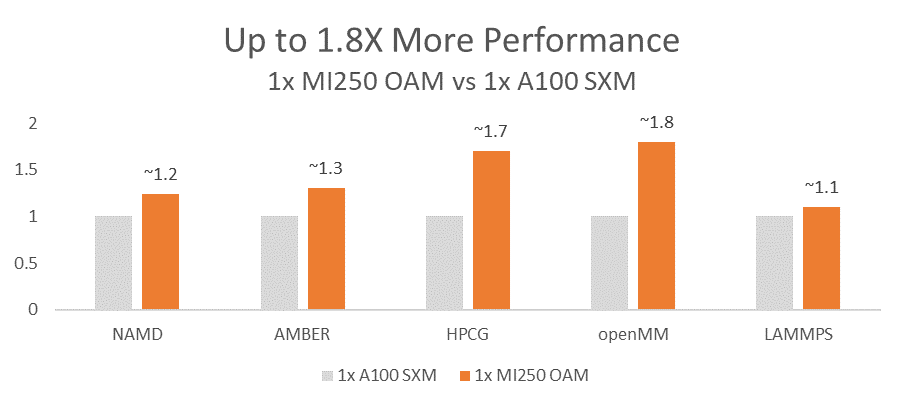

In test runs using one GPU, AMD Instinct MI250 outperformed an A100 80GB SXM across all applications tested, delivering ~1.3x higher performance on AMBER and ~1.8x higher performance on OpenMM. [5,6,7,8,9]

Figure 2: 1x AMD Instinct™ MI250 GPU Performance. [5,6,7,8,9]

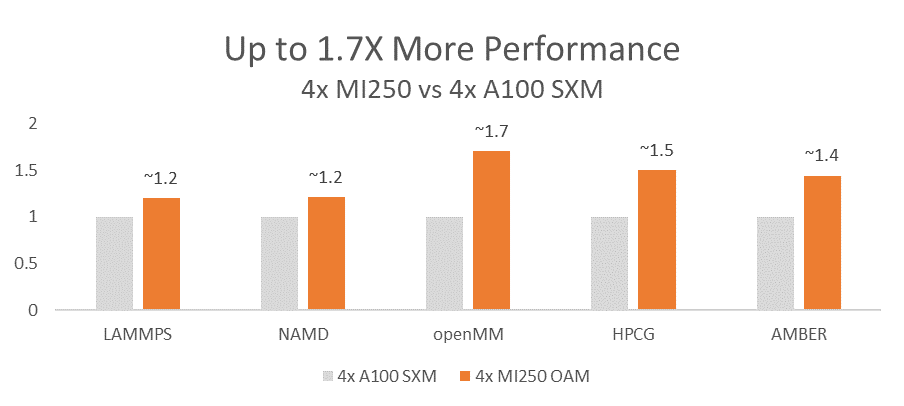

In the multi-GPU runs, AMD Instinct MI250 showcases both the compute capability of Instinct GPUs and the peer-to-peer interconnect performance of high-speed AMD Infinity Fabric™ technology, which provides up to 400 GB/s of total theoretical I/O bandwidth per MI250 in this test platform. [10] The result is up to 1.7x higher performance on OpenMM, with AMBER following at ~1.4x. [6,8]

Figure 3: 4x AMD Instinct™ MI250 GPU Performance. [5,6,7,8,9]

The individual modules within these major applications highlight the performance advantage AMD Instinct MI250 has over its nearest GPU competitor. For example, running OpenMM amoebapme with 4x MI250 GPUs provides up to 2.1x higher performance than A100, as shown in Figure 5 below. [6]

Figure 4: LAMMPS Perf [9]

Figure 5: OpenMM Perf [8]

Figure 6: AMBER Perf [6]

Conclusion:

The era of exascale computing is here, and the requirements for HPC have taken a massive leap forward. AMD Instinct accelerators, along with EPYC CPUs and the ROCm open software platform, are the first accelerated solution to power an exascale supercomputer, with the Frontier system at ORNL unlocking a new era of computing capabilities for HPC users. The AMD Instinct MI200 series exascale-class products and the ROCm software stack are now readily available for customers and the entire HPC and AI community. The application performance delivered by AMD Instinct GPUs helps explain their growing adoption by a broad set of HPC users and shows what a dedicated compute-focused GPU architecture and an open platform can deliver. AMD Instinct MI250 provides outstanding theoretical peak performance, performance per watt, and HPC application performance on several key modules across these HPC applications.

We encourage users to verify these results by running the tests for themselves. AMD benchmark code can be found on the AMD Infinity Hub. [4]

For more information about AMD Instinct™ MI250 GPU performance, please click here.

Making the ROCm platform even easier to adopt

For ROCm users and developers, AMD is continually looking for ways to make ROCm easier to use and easier to deploy on systems, and to provide learning tools and technical documents that support those efforts.

Helpful Resources:

- Learn more about our latest AMD Instinct accelerators, including the new Instinct MI210 PCIe® form factor GPU recently added to the family of AMD Instinct MI200 series of accelerators and supporting partner server solutions in our AMD Instinct Server Solutions Catalog.

- The ROCm web pages provide an overview of the platform and what it includes, along with markets and workloads it supports.

- ROCm Information Portal is a new one-stop portal for users and developers that posts the latest versions of ROCm along with API and support documentation. This portal also now hosts the ROCm Learning Center to help introduce the ROCm platform to new users, as well as to provide existing users with curated videos, webinars, labs, and tutorials to help in developing and deploying systems on the platform. It replaces the former documentation and learning sites.

- AMD Infinity Hub gives you access to HPC applications and ML frameworks packaged as containers and ready to run. You can also access the ROCm Application Catalog, which includes an up-to-date listing of ROCm enabled applications.

- AMD Accelerator Cloud offers remote access to test code and applications in the cloud, on the latest AMD Instinct™ accelerators and ROCm software.

Mahesh Balasubramanian, Guy Ludden, & Bryce Mackin are in the AMD Instinct™ GPU product Marketing Group for AMD. Their postings are their own opinions and may not represent AMD’s positions, strategies or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied.

Endnotes:

- Source as of June 2022 Top500 list: https://www.top500.org/lists/top500/2022/06/

- World’s fastest data center GPU is the AMD Instinct™ MI250X. Calculations conducted by AMD Performance Labs as of Sep 15, 2021, for the AMD Instinct™ MI250X (128GB HBM2e OAM module) accelerator at 1,700 MHz peak boost engine clock resulted in 95.7 TFLOPS peak theoretical double precision (FP64 Matrix), 47.9 TFLOPS peak theoretical double precision (FP64), 95.7 TFLOPS peak theoretical single precision matrix (FP32 Matrix), 47.9 TFLOPS peak theoretical single precision (FP32), 383.0 TFLOPS peak theoretical half precision (FP16), and 383.0 TFLOPS peak theoretical Bfloat16 format precision (BF16) floating-point performance. Calculations conducted by AMD Performance Labs as of Sep 18, 2020, for the AMD Instinct™ MI100 (32GB HBM2 PCIe® card) accelerator at 1,502 MHz peak boost engine clock resulted in 11.54 TFLOPS peak theoretical double precision (FP64), 46.1 TFLOPS peak theoretical single precision matrix (FP32), 23.1 TFLOPS peak theoretical single precision (FP32), and 184.6 TFLOPS peak theoretical half precision (FP16) floating-point performance. Published results on the NVIDIA Ampere A100 (80GB) GPU accelerator, boost engine clock of 1410 MHz, resulted in 19.5 TFLOPS peak double precision tensor cores (FP64 Tensor Core), 9.7 TFLOPS peak double precision (FP64), 19.5 TFLOPS peak single precision (FP32), 78 TFLOPS peak half precision (FP16), 312 TFLOPS peak half precision (FP16 Tensor Flow), 39 TFLOPS peak Bfloat16 (BF16), 312 TFLOPS peak Bfloat16 format precision (BF16 Tensor Flow), theoretical floating-point performance. The TF32 data format is not IEEE compliant and not included in this comparison. https://www.nvidia.com/content/dam/en-zz/Solutions/Data-Center/nvidia-ampere-architecture-whitepaper..., page 15, Table 1. MI200-01

- Calculations conducted by AMD Performance Labs as of Sep 15, 2021, for the AMD Instinct™ MI250X (128GB HBM2e OAM module) 500 Watt accelerator at 1,700 MHz peak boost engine clock resulted in 95.7 TFLOPS peak theoretical double precision (FP64 Matrix) and 47.9 TFLOPS peak theoretical double precision (FP64 vector) floating-point performance. The NVIDIA A100 SXM (80 GB) accelerator (400W) with boost engine clock of 1410 MHz results in 19.5 TFLOPS peak theoretical double precision (FP64 Tensor Core) and 9.7 TFLOPS peak theoretical double precision (FP64 vector) floating-point performance. MI200-40

- Due to application licensing restrictions, some codes used in benchmarking may need to be downloaded directly from their software repository sources. In addition, the benchmarks include AMD optimizations to LAMMPS (EAM, LJ 2.5, ReaxFF/C, and Tersoff) and HPL that are not yet available upstream.

- Testing Conducted by AMD performance lab 8.2.22 running NAMD v3 APOA1 NVE and STMV NVE. Comparing: 2P EPYC™ 7763 CPU powered server, SMT disabled, with 1x and 4x AMD Instinct™ MI250 (128 GB HBM2e) 560W, ROCm 5.1.3 using amdih/namd3:3.0a9 container vs. NVIDIA Public Claims as of 8.2.22: https://developer.nvidia.com/hpc-application-performance Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-65

- Testing Conducted by AMD performance lab 8.2.22 using AMBER: DC-Cellulose_NPT, DC-Cellulose_NVE, DC-FactorIX_NPT, DC-FactorIX_NVE, DC-JAC_NPT, DC-JAC_NVE, and DC-STMV_NPT comparing two systems: 2P EPYC™ 7763 powered server, SMT disabled, with 1x and 4x AMD Instinct™ MI250 (128 GB HBM2e) 560W GPUs, ROCm 5.0.0, Amber container 20.amd_84 vs. NVIDIA Public Claims: https://developer.nvidia.com/hpc-application-performance, Amber version 20.12-AT_21.12 as of 8.2.22. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-68

- Testing Conducted by AMD performance lab 8.22.2022 using HPCG 3.0 comparing two systems: 2P EPYC™ 7763 powered server, SMT disabled, with 1x, 2x, and 4x AMD Instinct™ MI250 (128 GB HBM2e) 560W GPUs, ROCm 5.0.0.50000-49. HPCG 3.0 Container: docker pull amdih/rochpcg:3.1.0_97 at https://www.amd.com/en/technologies/infinity-hub/hpcg vs. 2P AMD EPYC™ 7742 server with 1x, 2x, and 4x Nvidia Ampere A100 80GB SXM 400W GPUs, CUDA 11.6 HPCG 3.0 Container: nvcr.io/nvidia/hpc-benchmarks:21.4-hpcg at https://catalog.ngc.nvidia.com/orgs/nvidia/containers/hpc-benchmarks. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-70

- Testing Conducted by AMD performance lab 8.2.22 using OpenMM 7.7.0: gbsa, rf, pme, amoebagk, amoebapme, apoa1rf, apoa1pme, apoa1ljpme, amber20-dhfr, amber20-cellulose, amber20-stmv benchmark comparing two systems: 2P EPYC™ 7763 powered server, SMT disabled, 1x and 4x AMD Instinct™ MI250 (128 GB HBM2e) 560W GPUs, ROCm 5.0.0 vs. 2P AMD EPYC™ 7742 server 1x and 4x Nvidia Ampere A100 80GB SXM (400W) GPUs, CUDA 11.6. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-66

- Testing Conducted by AMD performance lab 8.2.22 using LAMMPS 2021.5.14_130: EAM, LJ 2.5, ReaxFF/C, and Tersoff, plus AMD optimizations to LAMMPS (EAM, LJ 2.5, ReaxFF/C, and Tersoff) that are not yet available upstream. Comparing two systems: 2P EPYC™ 7763 powered server, SMT disabled, with 1x and 4x AMD Instinct™ MI250 (128 GB HBM2e) 560W GPUs, ROCm 5.1 2022.5.04 versus NVIDIA Public Claims: https://developer.nvidia.com/hpc-application-performance as of 8.2.22, Version patch 4May2022. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-67

- Calculations as of Sep 18, 2021. AMD Instinct™ MI250 accelerators, built on AMD CDNA™ 2 technology, support AMD Infinity Fabric™ technology providing up to 100 GB/s peak total aggregate theoretical transport data GPU peer-to-peer (P2P) bandwidth per AMD Infinity Fabric link, and include up to eight links providing up to 800 GB/s peak aggregate theoretical GPU P2P transport rate bandwidth performance per GPU OAM card. AMD Instinct™ MI100 accelerators, built on AMD CDNA technology, support PCIe® Gen4 providing up to 64 GB/s peak theoretical transport data bandwidth from CPU to GPU per card, and include three links providing up to 276 GB/s peak theoretical GPU P2P transport rate bandwidth performance per GPU card. Combined with PCIe Gen4 support, this provides an aggregate GPU card I/O peak bandwidth of up to 340 GB/s. Server manufacturers may vary configuration offerings yielding different results. MI200-13
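The interconnect totals in the last endnote (MI200-13) follow from simple multiplication and addition. The sketch below reproduces them in Python using only the peak theoretical figures quoted there; the helper name `aggregate_p2p` is hypothetical, and the per-link MI100 rate is derived here by dividing the quoted total by three rather than taken from the source.

```python
# Peak theoretical figures (GB/s) quoted in the Infinity Fabric endnote (MI200-13).
MI250_GBPS_PER_LINK = 100
MI250_MAX_LINKS = 8
MI100_P2P_GBPS = 276   # total across three links
MI100_LINKS = 3
MI100_PCIE_GBPS = 64   # PCIe Gen4, CPU to GPU


def aggregate_p2p(gbps_per_link: float, links: int) -> float:
    """Peak aggregate P2P bandwidth if every link runs at its peak rate."""
    return gbps_per_link * links


mi250_p2p = aggregate_p2p(MI250_GBPS_PER_LINK, MI250_MAX_LINKS)
mi100_per_link = MI100_P2P_GBPS / MI100_LINKS       # derived, not quoted
mi100_card_io = MI100_P2P_GBPS + MI100_PCIE_GBPS    # P2P plus PCIe

print(f"MI250 peak P2P per OAM card: {mi250_p2p} GB/s")
print(f"MI100 derived per-link rate: {mi100_per_link} GB/s")
print(f"MI100 aggregate card I/O: {mi100_card_io} GB/s")
```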