AMD Community > Support Forums > Graphics Cards

Getting 10 bit color from a W5500 with HDMI adapter

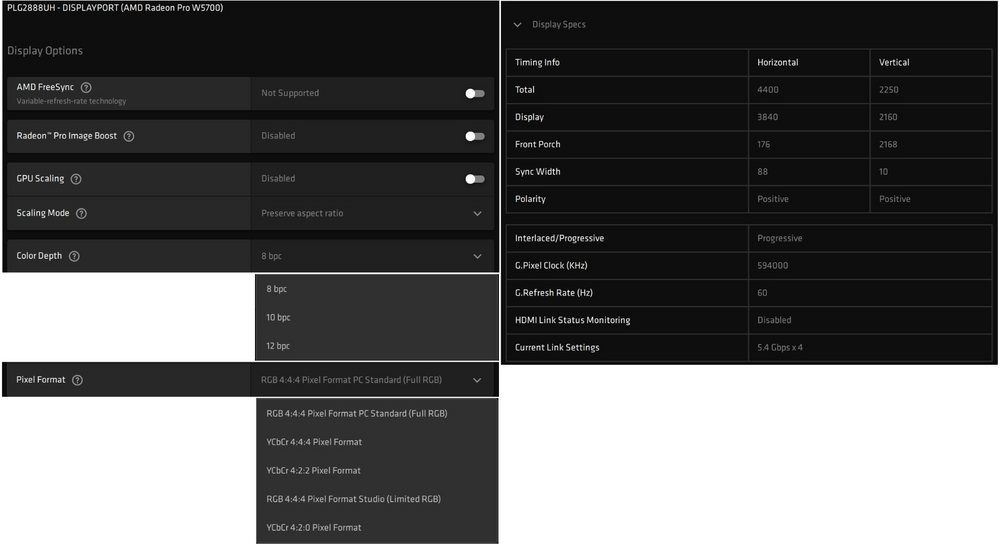

Is there any way to enable 10-bit (HDR) color on a Radeon Pro W5500 when connecting its DP output to the HDMI inputs of 4K TVs? I can't get it to accept anything but the 4:4:4 pixel format in Radeon Pro Settings.

Any help is appreciated.

---

Depending on your display, you should be able to get RGB and YCbCr. Your TV reports its supported formats back to the GPU (in its EDID), and the driver populates the available options from that information. Which pixel format are you expecting?

- Please send a screenshot from Radeon Pro Settings

- OS and driver version used

- TV make and model

---

With my RX580, I need to use either YCbCr 4:2:2 or YCbCr 4:2:0 to get 10 or 12 bit color on the screen. With the Radeon Pro, I only have 4:4:4 RGB or YCbCr available, and so I am limited to 8 bit color.

I'm currently running Windows 10 with:

Radeon Pro Settings Version - 2020.0811.0958.17961

View Release Notes - Not Available

Driver Packaging Version - 20.20.01.16-200811a-358081E-RadeonSoftwareAdrenalin2020

Provider - Advanced Micro Devices, Inc.

2D Driver Version - 8.1.1.1634

Direct3D® Version - 9.14.10.01451

OpenGL® Version - 26.20.11000.14736

AMD Audio Driver Version - 10.0.1.16

Vulkan™ Driver Version - 2.0.145

Vulkan™ API Version - 1.2.139

Since the driver was auto-installed when the machine booted with the W5500 in it, I have to go by the Settings version, and it looks like it is the 20.Q3-Aug12 driver.

The TV in question is an LG 65UK6090PUA 65" 4K HDR TV.

---

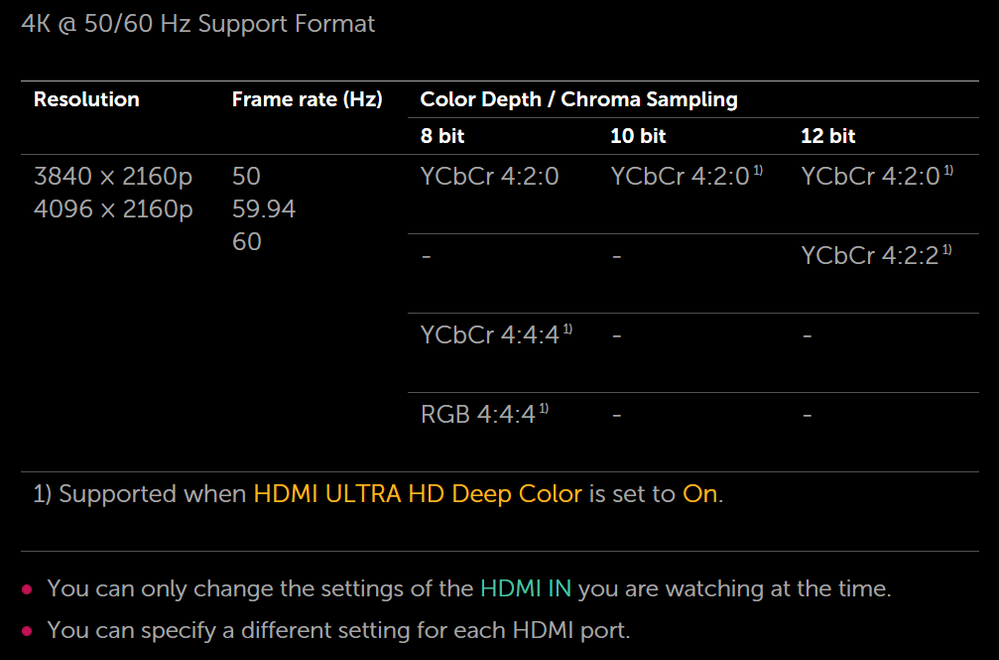

From your LG TV manual, concerning the colors:

I have attached the LG user's manual, since I needed to download it to take a look at it anyway. I don't know if this helps either fsadough or you with the issue you are having.

EDIT: If you are using a DP>HDMI adapter, that could also be the reason you are not getting 10-bit color. I found this DP>HDMI UHD adapter on Amazon that supports 8-bit to 16-bit color: https://www.amazon.com/Club3D-Displayport-1-2-HDMI-CAC-1070/dp/B017BQ8I54

---

My display definitely supports it, as I can go to 12bit color with my RX 580 with no problem. But I can't get it with the W5500 with an Active 4K@60Hz adapter.

---

Just edited my previous reply concerning the adapter.

Okay, if you were getting 12-bit color from the RX580 output, that rules out the DP>HDMI adapter.

fsadough will need to keep assisting you, since he is the expert on professional GPU cards.

But it is strange that a consumer GPU can output 10-bit color to your UHD LG television and a professional GPU can't.

Take care.

---

- The statement "My display definitely supports it, as I can go to 12bit color with my RX 580 with no problem" is not clear. The RX580 has HDMI and DP output. How exactly are you connecting the RX580 to your LG TV?

- Are you using the exact same HDMI cable and DP-HDMI adapter connected to DP-Output of RX580, or are you using the HDMI output of RX580 and eliminating the DP-HDMI Adapter? Are you using the exact same HDMI input on your LG while testing RX580 vs W5500?

- Send me the EDID of your display.
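For context on what the EDID tells the GPU about 10-bit support over HDMI: in the CTA-861 extension block, the HDMI Vendor-Specific Data Block (IEEE OUI 00-0C-03) carries deep-color flags such as DC_30bit (10 bpc). Below is a minimal Python sketch, assuming you already have the raw 128-byte extension block dumped with an EDID tool; the function names and the synthetic test block are hypothetical. It deliberately ignores the HDMI Forum VSDB, where HDMI 2.0 sinks advertise 4:2:0 deep color separately.

```python
from typing import Dict, Optional

HDMI_LLC_OUI = 0x000C03   # IEEE OUI of HDMI Licensing, LLC (HDMI 1.x VSDB)
VENDOR_SPECIFIC_TAG = 3   # CTA-861 data block tag code for vendor-specific blocks


def hdmi_deep_color_flags(ext: bytes) -> Optional[Dict[str, bool]]:
    """Read the deep-color flags from the HDMI VSDB of one CTA-861 extension block.

    Returns None when the block has no HDMI VSDB, or the VSDB is too short
    to contain the flags byte.
    """
    if len(ext) != 128 or ext[0] != 0x02:
        raise ValueError("not a 128-byte CTA-861 extension block")
    if sum(ext) % 256 != 0:
        raise ValueError("extension block checksum mismatch")

    dtd_offset = ext[2]  # data blocks live in bytes 4 .. dtd_offset - 1
    pos = 4
    while pos < dtd_offset:
        tag, length = ext[pos] >> 5, ext[pos] & 0x1F
        payload = ext[pos + 1 : pos + 1 + length]
        if tag == VENDOR_SPECIFIC_TAG and length >= 3:
            # The OUI is stored least-significant byte first.
            oui = payload[0] | (payload[1] << 8) | (payload[2] << 16)
            if oui == HDMI_LLC_OUI:
                if length < 6:
                    return None
                flags = payload[5]  # follows the 3-byte OUI and 2-byte physical address
                return {
                    "DC_48bit": bool(flags & 0x40),  # 16 bpc RGB
                    "DC_36bit": bool(flags & 0x20),  # 12 bpc RGB
                    "DC_30bit": bool(flags & 0x10),  # 10 bpc RGB
                    "DC_Y444": bool(flags & 0x08),   # deep color also in YCbCr 4:4:4
                }
        pos += 1 + length
    return None


def synthetic_extension_block(flags_byte: int) -> bytes:
    """Hypothetical test data: a CTA-861 block holding only an HDMI VSDB."""
    block = bytearray(128)
    block[0], block[1] = 0x02, 0x03                 # extension tag, revision
    vsdb = bytes([(VENDOR_SPECIFIC_TAG << 5) | 6,   # header: tag 3, length 6
                  0x03, 0x0C, 0x00,                 # OUI 00-0C-03, LSB first
                  0x10, 0x00,                       # physical address 1.0.0.0
                  flags_byte])
    block[4:4 + len(vsdb)] = vsdb
    block[2] = 4 + len(vsdb)                        # DTD area starts here (all zero padding)
    block[127] = (-sum(block[:127])) % 256          # make the byte sum 0 mod 256
    return bytes(block)


if __name__ == "__main__":
    print(hdmi_deep_color_flags(synthetic_extension_block(0x38)))
```

With a real dump you would pass the actual extension block instead of the synthetic one; if DC_30bit is clear, the TV is not advertising 10 bpc RGB over that HDMI input.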

---

Indeed it is a bug. I reproduced it. With DP cable you get 10 bpc but not with HDMI. Please stand by.

---

fsadough wrote:

> Indeed it is a bug. I reproduced it. With DP cable you get 10 bpc but not with HDMI. Please stand by.

Will a hotfix be forthcoming, or will a fix be in the 2020.Q4 or 2021.Q1 package?

Thanks.

---

My apologies. It seems to me there is a limitation imposed by the HDMI version. For any 4:4:4 10bpc you would need a DP-HDMI 2.1 adapter and cable.

---

fsadough wrote:

> My apology. Seems to me there is a limitation by HDMI version. For any 4:4:4 10bpc you would need a DP-HDMI 2.1 adapter & Cable.

So does that mean that in order to simply get 10 bit color, I would also need to be connected to an HDMI 2.1 capable TV or monitor?

---

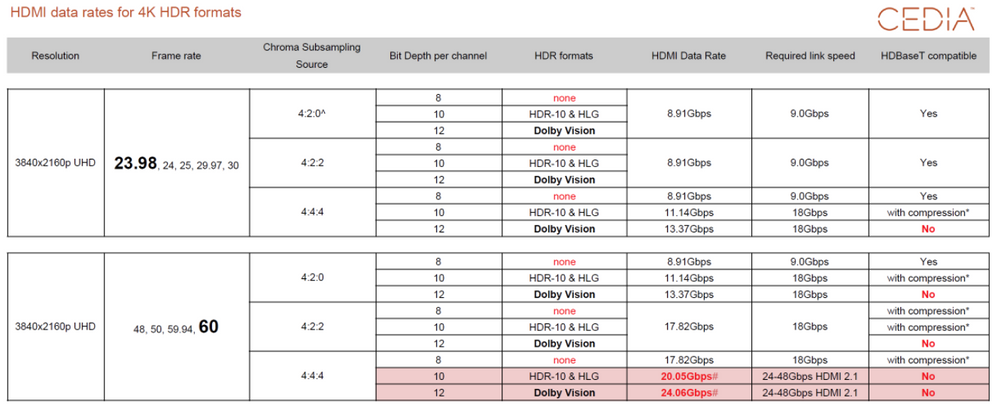

To be more accurate:

If you need 4K@60Hz at a color depth of 10bpc with any 4:4:4 pixel format (RGB or YCbCr), then you would need a DP-HDMI 2.1 adapter. Club 3D has one for almost $60. 10bpc at YCbCr 4:2:2 or 4:2:0 should be supported with a proper DP-HDMI adapter.

---

To be more accurate, 10bpc at YCbCr 4:2:2 or 4:2:0 should be supported with a proper DP-HDMI 2.0b adapter.

---

The newer Club 3D 2.1 adapter has some very mixed reviews.

If the OP is okay with 10-bit 4:2:2 or 4:2:0 at 4K 60Hz, I can attest that this adapter has been fantastic for me, with none of the issues reviewers complain about with the newer version:

https://www.club-3d.com/en/detail/2442/displayportt_1.4_to_hdmit_2.0b_hdr_active_adapter/

---

I believe the question here is 4K@60Hz support with 4:4:4 color format at 10bpc. For this configuration an HDMI link speed of 24-48 Gbps is required, which translates ONLY to HDMI 2.1.

Source: HDMI Data Rates for 4K HDR
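A back-of-the-envelope version of this bandwidth argument, as a Python sketch. It assumes the standard CTA-861 4K@60Hz timing (594 MHz pixel clock) and HDMI 2.0-style TMDS signalling with a 600 MHz character-rate ceiling (18 Gbps). HDMI 2.1 FRL uses a different encoding (16b/18b), so its link rates, like the 24-48 Gbps quoted above, are not directly comparable to these numbers. Function names are illustrative.

```python
# Assumption: CTA-861 timing for 3840x2160 @ 60 Hz, 4400 x 2250 total pixels per frame
PIXEL_CLOCK_4K60_HZ = 4400 * 2250 * 60   # 594,000,000 Hz
HDMI20_MAX_TMDS_HZ = 600_000_000         # HDMI 2.0 TMDS character-rate limit


def tmds_character_rate_hz(pixel_clock_hz: int, pixel_format: str, bpc: int) -> int:
    """TMDS character rate HDMI needs for a given pixel format and color depth."""
    if pixel_format in ("RGB", "YCbCr444"):
        # Deep color scales the clock: 10 bpc runs 10/8 = 1.25x faster than 8 bpc.
        return pixel_clock_hz * bpc // 8
    if pixel_format == "YCbCr422":
        if bpc > 12:
            raise ValueError("HDMI 4:2:2 tops out at 12 bpc")
        # 4:2:2 up to 12 bpc is packed into the same 24-bit container as 8 bpc 4:4:4.
        return pixel_clock_hz
    if pixel_format == "YCbCr420":
        # 4:2:0 carries half the data per pixel, so the base clock is halved.
        return pixel_clock_hz * bpc // 16
    raise ValueError(f"unknown pixel format: {pixel_format}")


def link_rate_gbps(tmds_hz: int) -> float:
    """Total bit rate over the three TMDS data channels (10 bits per character)."""
    return tmds_hz * 3 * 10 / 1e9


if __name__ == "__main__":
    for fmt, bpc in [("RGB", 8), ("RGB", 10), ("YCbCr422", 10), ("YCbCr420", 10)]:
        rate = tmds_character_rate_hz(PIXEL_CLOCK_4K60_HZ, fmt, bpc)
        fits = rate <= HDMI20_MAX_TMDS_HZ
        print(f"{fmt:9} {bpc:2} bpc: {link_rate_gbps(rate):6.2f} Gbps, fits HDMI 2.0: {fits}")
```

Under these assumptions, 10 bpc RGB needs about 22.3 Gbps, above HDMI 2.0's 18 Gbps, while 10 bpc YCbCr 4:2:2 (17.82 Gbps) and 4:2:0 (about 11.1 Gbps) fit, which matches the advice in this thread.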

---

I get that, which is why I said "if the OP is okay with 10 bit 4:2:2 or 4:2:0 4k 60hz". The adapters that do work at 4:4:4 are very new to the market, and if you read what reviewers say about them, they don't work well: they are problematic, and users have to unplug and replug them to get them working again, etc. I was only pointing out that if a slight step back works for you, it might be worth avoiding all the headache. You would still get the 10-bit color and HDR you want with that adapter, which gets great reviews and, in my experience owning one, works great.

---

I'd settle for any HDR.

I guess my original post wasn't completely clear, even after all this time, because I am not really familiar with how pixel format and color depth relate. With my current 4K@60Hz HDMI adapter, I am limited to 4:4:4 8-bit color in Radeon Pro Settings. I can't get 4:2:2 or 4:2:0 at any color depth; they are just not offered as options.
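Pixel format and color depth are independent settings: the pixel format says how many chroma samples are kept (RGB is always 4:4:4), and the color depth says how many bits each sample gets. Multiplying the two gives the raw bits per pixel, which is why stepping down to 4:2:2 or 4:2:0 frees room for 10 bpc. A minimal Python sketch, assuming raw sample counts; actual HDMI packing differs (4:2:2, for example, is carried in the same 24-bit container as 8 bpc 4:4:4).

```python
from fractions import Fraction

# Average samples per pixel for each pixel format (RGB is always 4:4:4).
SAMPLES_PER_PIXEL = {
    "4:4:4": Fraction(3),     # every pixel keeps its own Y, Cb and Cr (or R, G, B)
    "4:2:2": Fraction(2),     # Cb/Cr shared by 2 horizontal neighbours
    "4:2:0": Fraction(3, 2),  # Cb/Cr shared by a 2x2 block
}


def bits_per_pixel(pixel_format: str, bpc: int) -> Fraction:
    """Raw (unpacked) bits per pixel: samples per pixel x bits per sample."""
    return SAMPLES_PER_PIXEL[pixel_format] * bpc


if __name__ == "__main__":
    for fmt in SAMPLES_PER_PIXEL:
        row = ", ".join(f"{bpc} bpc = {bits_per_pixel(fmt, bpc)} bpp" for bpc in (8, 10, 12))
        print(f"{fmt}: {row}")
```

For example, 4:4:4 at 10 bpc is 30 bits per pixel, while 4:2:2 at 10 bpc is 20 and 4:2:0 at 10 bpc is 15, both below the 24 bits of 8 bpc 4:4:4.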

---

The only trade-off with that step down is that the color information is chroma-subsampled, a lossy reduction of the signal. Most content you will be watching has already been through compression itself (consumer video is typically stored as 4:2:0), so it likely won't make a visible difference. Honestly, even with the best over-the-air or uncompressed source you likely wouldn't see much difference, although I am not as finicky as videophiles and audiophiles. All I know is that I spent a lot of time and money to arrive at the only stable solution I could find that works all the time, and that adapter does work well.

Worth pointing out: I don't have your TV or your GPU, and either of those variables could easily change the equation. Also worth mentioning: I buy from places that let you return purchases without penalties in case it doesn't work out. Micro Center and Amazon (as long as you buy from Amazon itself and not the marketplace) are really good about taking returns. Amazon even pays return shipping. I'm a cheapskate, so it matters to me. LOL
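To make that trade-off concrete, here is a minimal Python sketch of what 4:2:0 subsampling does to one chroma plane. Assumptions: each 2x2 block is replaced by a plain average and rebuilt by simple repetition; luma stays at full resolution and is not modeled; real pipelines may use other filters and chroma siting. Flat color areas come back unchanged, while sharp color edges that don't line up with the 2x2 grid get smeared, which tends to be more noticeable on desktop text than in video.

```python
from typing import List

Plane = List[List[float]]


def subsample_420(chroma: Plane) -> Plane:
    """Replace each 2x2 block of a chroma plane with its average.

    Assumption: a plain box average; width and height must be even.
    """
    h, w = len(chroma), len(chroma[0])
    if h % 2 or w % 2:
        raise ValueError("plane width and height must be even")
    return [
        [
            (chroma[y][x] + chroma[y][x + 1] + chroma[y + 1][x] + chroma[y + 1][x + 1]) / 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


def upsample_nearest(small: Plane) -> Plane:
    """Rebuild a full-size plane by repeating each sample into a 2x2 block."""
    full: Plane = []
    for row in small:
        wide = [value for value in row for _ in range(2)]
        full.append(wide)
        full.append(list(wide))
    return full


if __name__ == "__main__":
    # A chroma edge between columns 0 and 1, i.e. not aligned to the 2x2 grid.
    plane = [[0.0, 1.0, 1.0, 1.0] for _ in range(4)]
    restored = upsample_nearest(subsample_420(plane))
    print(restored[0])  # the sharp 0 -> 1 edge becomes 0.5, 0.5, 1.0, 1.0
```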

Another thing I will mention: when you turn on HDR on the Windows 10 desktop, IMHO it looks like junk, dark and washed out. Windows gives you some adjustments, but I have had no luck getting it back to the way it looked with regular RGB. This is a well-known Windows issue, and I mention it because it has nothing to do with cables, adapters, GPU, etc.

I just toggle back and forth as needed.

Note that many games can turn HDR on without it being enabled on the desktop.

Support varies a lot from game to game. Some games only look good in 8-bit with HDR and others in 10-bit. Some won't even run HDR at one setting versus the other, so again, you are doing nothing wrong.

This is all very new, and it's a shame everyone involved didn't think it through better before releasing it to end users. It is like Betamax vs VHS on steroids. Hope you're old enough to get that reference. LOL

Hope this helps you and good luck!

---

I don't play any games that use HDR. It's mostly about watching 4K HDR via Windows desktop clients so that the film doesn't "wash out" due to tone mapping, or the lack of it.
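Part of why HDR video wants 10 bits: HDR10 uses the PQ transfer function (SMPTE ST 2084), which spreads 0 to 10,000 nits across the whole signal range, so with only 8 bits each code step is coarse enough that banding can become visible. This Python sketch computes the luminance step between adjacent code values near 100 nits at 8 vs 10 bits. It assumes full-range code values for simplicity, and the helper names are hypothetical.

```python
# SMPTE ST 2084 (PQ) constants, as used by HDR10
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_encode(nits: float) -> float:
    """Inverse EOTF: absolute luminance in cd/m^2 -> normalized signal in [0, 1]."""
    y = min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS
    ym1 = y ** M1
    return ((C1 + C2 * ym1) / (1 + C3 * ym1)) ** M2


def pq_decode(signal: float) -> float:
    """EOTF: normalized signal in [0, 1] -> absolute luminance in cd/m^2."""
    e = min(max(signal, 0.0), 1.0) ** (1 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def step_near(nits: float, bits: int) -> float:
    """Luminance jump from the code value nearest `nits` to the next code up.

    Assumption: full-range code values (0 .. 2**bits - 1) for simplicity;
    HDMI video normally uses limited range, which makes the steps slightly larger.
    """
    max_code = 2 ** bits - 1
    code = round(pq_encode(nits) * max_code)
    return pq_decode((code + 1) / max_code) - pq_decode(code / max_code)


if __name__ == "__main__":
    for bits in (8, 10):
        print(f"{bits}-bit PQ step near 100 nits: {step_near(100, bits):.2f} nits")
```

The 8-bit step comes out roughly four times larger than the 10-bit step, since there are about a quarter as many code values covering the same PQ range.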

---

- What is your DP-HDMI adapter?

- What is your HDMI Cable?

---

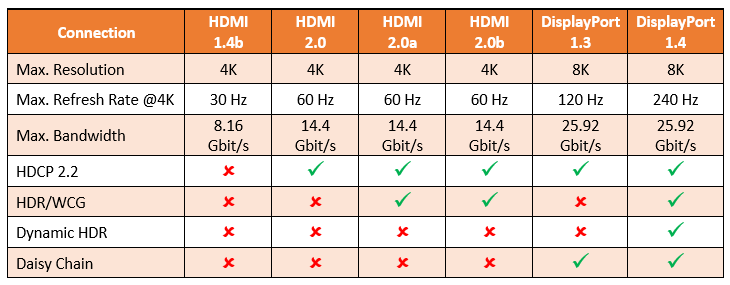

My HDMI adapter is a QVS DPHD-AMF from Micro Center. Looking at its official page and comparing it to the table you provided a week ago (reposted here), it must be the adapter: while it handles 4K@60Hz, it is only HDMI 2.0 compliant, not 2.0a, which your table shows is necessary for "HDR/WCG" support.

I will go back to Micro Center and get an adapter that is 2.0a compliant.

I would assume my HDMI cable is fine, as I can do 4K HDR on this same display with just the HDMI cable connected to my RX 580's HDMI port.
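The weakest-link reasoning in this exchange (GPU → adapter → cable → TV) can be sketched as a version check in Python. The feature table is deliberately small and is an assumption built from this thread and the HDMI version history (4K60 at 4:2:0, 4:2:2 or 8 bpc RGB in HDMI 2.0; HDR static metadata from 2.0a; 10 bpc RGB at 4K60 only with 2.1). Meeting the minimum version is necessary but not sufficient, since the adapter's own capabilities still matter. The adapter's 2.0 rating is the one reported above; the TV input version is hypothetical.

```python
from typing import Dict

# HDMI versions from oldest to newest (only the ones relevant here).
VERSION_ORDER = ["1.4", "2.0", "2.0a", "2.0b", "2.1"]

# Assumed minimum HDMI version per feature. Necessary, not sufficient:
# adapter firmware, cable and TV still have to cooperate.
MIN_VERSION_FOR = {
    "4K60 RGB 8 bpc": "2.0",
    "4K60 YCbCr 4:2:2 10 bpc": "2.0",
    "4K60 YCbCr 4:2:0 10 bpc": "2.0",
    "HDR static metadata (HDR10)": "2.0a",
    "4K60 RGB 10 bpc": "2.1",
}


def rank(version: str) -> int:
    return VERSION_ORDER.index(version)


def chain_version(components: Dict[str, str]) -> str:
    """The chain is only as capable as its oldest HDMI component."""
    return min(components.values(), key=rank)


def chain_supports(components: Dict[str, str], feature: str) -> bool:
    return rank(chain_version(components)) >= rank(MIN_VERSION_FOR[feature])


if __name__ == "__main__":
    # Hypothetical chain: adapter version as reported in this thread,
    # TV input version assumed for illustration.
    chain = {"QVS DPHD-AMF adapter": "2.0", "TV HDMI input": "2.0b"}
    for feature in MIN_VERSION_FOR:
        print(f"{feature}: {chain_supports(chain, feature)}")
```

With the HDMI 2.0 adapter in the chain, the HDR metadata check fails even if the TV input is newer, which is the conclusion reached above.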

---

Before these couple of more recent adapters came out, the Club 3D adapter I mentioned was the only adapter on the market that would actually do 4K 60Hz 10-bit and HDR. Just getting a 2.0a-compliant adapter won't necessarily give you HDR, and you will want 2.0b at least. I'm not sure whether any others actually work since I got my Club 3D, but that information was valid about 7 months ago when I bought mine.

---

I may just go for broke and have a Philips 558M1RY imported to solve the whole issue, since it has a DP input and AMD FreeSync Premium Pro.

---

Not a bad idea. Honestly, I think DisplayPort is better. I wish it were the standard on TVs, but they have to have jacks they can charge a license fee for, even though those are technologically inferior.

---

You need to obtain the proper adapter. Using the following adapters and cable on a W5700, additional pixel formats and color depths become available:

- Club 3D Adapter DisplayPort 1.4 > HDMI 2.1 HDR 4K120Hz, active, retail
- Delock Mini DisplayPort 1.4 to DisplayPort adapter with latching function, 8K 60 Hz
- 2.00 m Club 3D HDMI 2.1 connection cable, Ultra High Speed, HDMI male to HDMI male, black, 10K / 120Hz / DSC 1.2 / eARC / QFT / QMS