AMD fires back at 'Super' NVIDIA with Radeon RX 5700 price cuts

AMD unveiled its new Radeon RX 5700 line of graphics cards with 7nm chips at E3 last month, and with just days to go before they launch on July 7th, the company has announced new pricing. In the "spirit" of competition that it says is "heating up" in the graphics market -- specifically NVIDIA's "Super" new RTX cards -- all three versions of the graphics card will be cheaper than we thought.

The standard Radeon RX 5700 with 36 compute units and speeds of up to 1.7GHz was originally announced at $379, but will instead hit shelves at $349 -- the same price as NVIDIA's RTX 2060. The 5700 XT card that brings 40 compute units and up to 1.9GHz speed will be $50 cheaper than expected, launching at $399. The same goes for the 50th Anniversary with a slightly higher boost speed and stylish gold trim that will cost $449 instead of $499.

That's enough to keep them both cheaper than the $499 RTX 2070 Super -- we'll have to wait for the performance reviews to find out if it's enough to make sure they're still relevant.

black_zion wrote:

It depends, if Navi Refresh launches at $150-$300 price range, taking the place of Polaris through existing Navi cards in the hierarchy, with RDNA2 going from above the 5700XT to however high they can get, it's going to be a big seller. Remember AMD said Navi Refresh and RDNA2 would bring lower prices, and they simply cannot afford to START their GPUs at $250, no matter how strong Renoir may be.

My RTX 2080 set me back substantially more than $250.

RE: Remember AMD said Navi Refresh and RDNA2 would bring lower prices.

I missed that.

All I have seen was an investor presentation where they stated they were going to increase ROI.

You do that by various means.

Easiest way is to use Marketing to "big up" the existing product stack and sell it for a higher price than it deserves.

All I have seen in the past year from AMD is examples / attempts:

GPU: Radeon VII dropped like a hot potato, and large price increases on remaining cards.

Adrenalin 2020 = tarted up Adrenalin 2019 software to "look better" but a definite move in reverse.

RX5700XT = higher performance but unstable and stuttering RX690. Reality = 3 years later RX480 Polaris replacement with a cheap cooler and too many poor quality AIB cards.

Renamed as RX5700XT to hide what it really is. Try to sell it for 2X price uplift. Slower than RX Vega 64 Liquid at 4K even after 2 years of R&D and moving from GF14nm to TSMC 7nm. No HBCC. Still higher power requirement than Nvidia. Drivers still unstable. Nvidia 2060/2070 Super cards beat them at similar price.

Lower end RX5000 models that are now "supposed" to replace Polaris cost more and perform worse. Apparently more Performance for same cost is something in the past.

Compute performance slashed versus Vega 64/56.

AMD down to only supporting Windows 10 64 bit OS in practice.

Linux Drivers forgotten.

Blender forgotten.

Bad VBIOS at launch and user VBIOS flash the "new normal".

CPU: Tweaked silicon in the case of the Ryzen XT offering 1-2% performance improvement in a few games at a whopping cost, and obsoleted at launch by older existing CPUs in AMD's own product stack.

Motherboard: Twisty turny rewrite of history about AM4 socket support for X470, B450 and X370 with Zen 3 support.

Try to claim X570 motherboards are so ridiculously expensive due to PCIe 4.0 support.

Mobile CPU: AMD Ryzen 4000H https://www.amd.com/en/products/apu/amd-ryzen-9-4900h

Good APU processor for laptop but still using Vega iGPU.

Paired with weak Nvidia Discrete Graphics and no attempt at Thunderbolt 3.

I am looking for a new gaming laptop but nothing from AMD is good enough.

AMD history with Switchable Graphics and Laptop Drivers not working just tells me not to even go there.

bet it still overheats. LOL

Apple says bye bye to AMD GPUs.

However good Big Navi is AMD GPUs are being cut from future Apple Macs too | PC Gamer

I don't get Apple and more importantly they don't care. They are happy to just keep gouging the very few percent that will buy whatever they release.

I have used Macs since their inception for graphic arts. I still like them for production work.

I however am not happy with their decision to change architecture again.

It has been very rough transitioning the last couple of times but at least it seemed it was because they were moving forward with what is good for the end user.

I don't know that I think this is the case this time.

I see it as more of a regression, at least in the short term. Time will tell.

I think one thing they very much are underestimating is that Macs became more mainstream popular than they had been when they went to x86 compatible, and IMHO this was because they could now also run Windows.

With this new change I don't think the ARM chips will do the same. Unless maybe Apple is planning some bootcamp emulation layer for that. If so I have not read that.

I can tell you that over the past 6-7 years I have seen a large increase in our customers' Adobe files moving from having been created on the Mac to being now PC files. I think Apple's change here will drive a lot more users to the PC side.

I don't think Apple will care as they are happy making a ton of money on the ridiculous margins they charge to a small percentage of the market.

I just refuse to use Apple products.

colesdav wrote:

Apple says bye bye to AMD GPUs.

However good Big Navi is AMD GPUs are being cut from future Apple Macs too | PC Gamer

Apple users have known this for a long time. Apple has wanted to standardize their hardware to make development easier.

That is why I linked the video I watched. They are the ones that said it shut down with heat. Looks like Dave also referenced the same video.

Blowers are still preferred by OEMs. That way, they can drop the card into their various offerings without having to worry about the amount of heat being added to the case, blowers just "blow" it outside.

Unless they thermal shutdown in the menu of Shadow of the Tomb Raider ...

Is that a known issue with all 5700 XT models and that game, or an isolated incident?

Really? I just googled about 2 dozen OEM gaming computers that have reviews online with internal pictures and did not find one of them that had cards with blower models. Not saying there aren't any but they sure were not in the top search results I saw. I know of no non-reference designs on anyone's cards in many, many years that choose blowers. What are you referencing to come to your conclusion?

I searched by OEM graphics cards and Gaming Desktops. Then looked at Dell, HP, Lenovo and Acer's websites.

My educated guess would be that even when an OEM does use one it isn't a heat based decision and is more likely cost, as the reference designs are often cheaper at least on launch and in the early months after release.

I would also add that ZERO nVidia cards come with blowers anymore and have not for at least a couple generations as they are not preferred by most and you can find many videos out there proving they are the worst solution in general.

It is actually an engineering standard. When developing a case, there are actually compliance standards that need to be met.

If you add in something like a GPU, you need to account for the increased heat in your case design, unless the excess heat is exhausted out, then you're golden with a design that already meets specifications.

Sorry not sure what you are speaking to here.

Nice and helpful tips. I have an HP laptop with an AMD processor. I love this processor, it works really well.

Now that you mention it, it does look like that.

I was really looking forward to the Navi launch this time, because I thought after Vega launch, after the Radeon VII launch, that AMD would get this one correct.

Sadly not.

It looks like Navi is actually a good leap in performance versus an RX480 Polaris which is the chip it should be compared with.

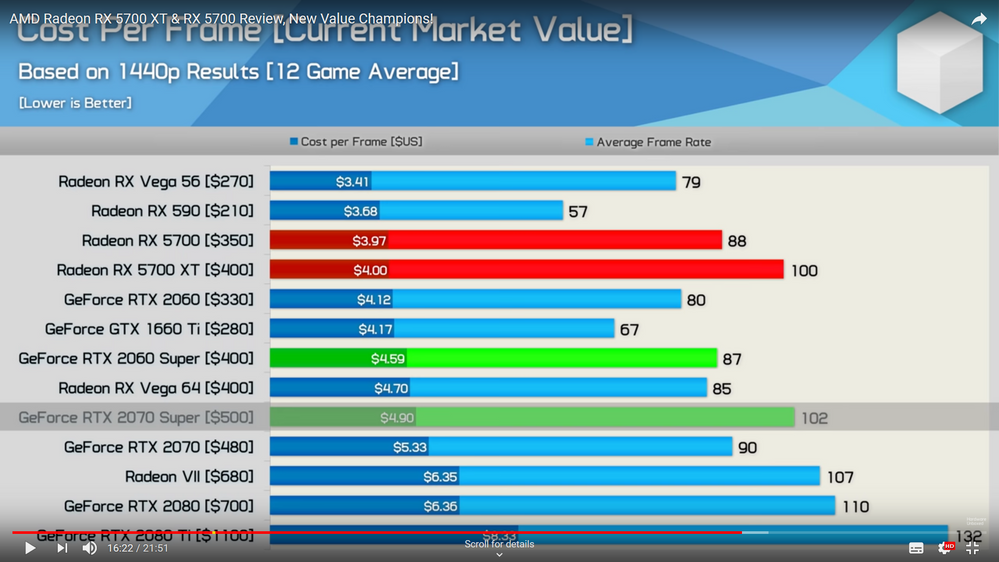

I watched all the reviews I could find. Hardware Unboxed review shows the RX5700XT solidly beating the Nvidia 2060 Super at same price point. AMD Radeon RX 5700 XT & RX 5700 Review, New Value Champions! - YouTube

This slide is interesting to me:

However it does not take note of the following:

(1). Nvidia are offering this game deal: Wolfenstein: Youngblood and Control in the Latest Nvidia Offer | gamepressure.com which will demo RTX. You get to keep those games. They look like good titles. They are worth much more than the Xbox Game Pass for PC which is $15 for 3 months. I recently subscribed to Xbox Game Pass for PC to try it out and it isn't great. Very long download times, and ties me to Windows 10 which I would prefer not to use at all.

(2). No Raytracing / DXR solution at all - and before anyone says anything - I know that there are still very few games released where it is supported. I own an RTX2080 and I run the games that support RayTracing at 4K or DLSS 4K (2K+DLSS) and I see the value in it. Others may not which is fine. An RTX2060 can run BFV at 1080p with RTX on at 60 FPS so RTX is not "only for RTX2080 and above".

(3). The Vega legacy and driver stability.

RX Vega 64 for gaming was a failure. Even though I own an RX Vega 64 Liquid purchased brand new November 2018 and I just purchased a PowerColor Vega 56 Red Dragon last week. I bought them for a project I am working on that needs AMD cards. I would not have purchased either for gaming.

The Vega 64 reference was a recommended "do not buy". The AIB cards took ages to turn up with working Drivers and BIOS. None of them were attractive options to me. All 2.2-2.5 slot designs. Heavy. Large. Needed GPU Support bracket in some cases. Hard to get waterblocks.

Those Vega cards are unstable in gaming. They frequently crash. They do have bad drivers. I almost returned the RX Vega 64 Liquid because it was so unstable. ReLive recording and the Radeon Performance Overlay didn't even work, whereas they worked on my R9 Fury X/Fury/Nano cards. I spent time to report many issues and I thank AMD for fixing them but that RX Vega 64 Liquid had been out on the GPU market for years. I should not have had to spend time reporting bugs on that GPU.

Auto-Overclocking on the RX Vega 64 Liquid blackscreened my PC and took out my Windows 10 OS. I am still genuinely "afraid" to hit those Auto Overclocking Wattman Options on that GPU. I wish there was an option to completely disable them.

FYI The brand new PowerColor Vega 56 Red Dragon is showing similar stability issues / Driver bugs to the ones I currently see on the RX Vega 64 Liquid. I have tried different PCs and fresh Windows 10 and AMD Driver DDU clean installations.

The FuryX/Fury/Nano cards I own also had a bad time with stability for about 3 months after the Adrenalin Driver launch and again for a few months after Adrenalin 2019 launch.

That is why I bought the RTX2080. I had enough of driver crashes and bad performance. The RTX2080 was purchased in December 2018 and no driver crashes yet. It is the best GPU I have ever owned. It was similar cost to the RX Vega 64 Liquid.

So as you can guess, even though I see those great performance and performance/dollar numbers for the RX5700XT I am reluctant to go back to AMD again for gaming unless driver stability improves. This is not helped by broken launch drivers again (same story on Vega and Radeon 7, see: AMD Radeon RX 5700 XT Review: Thermals, Noise, Gaming, & Broken Drivers - YouTube).

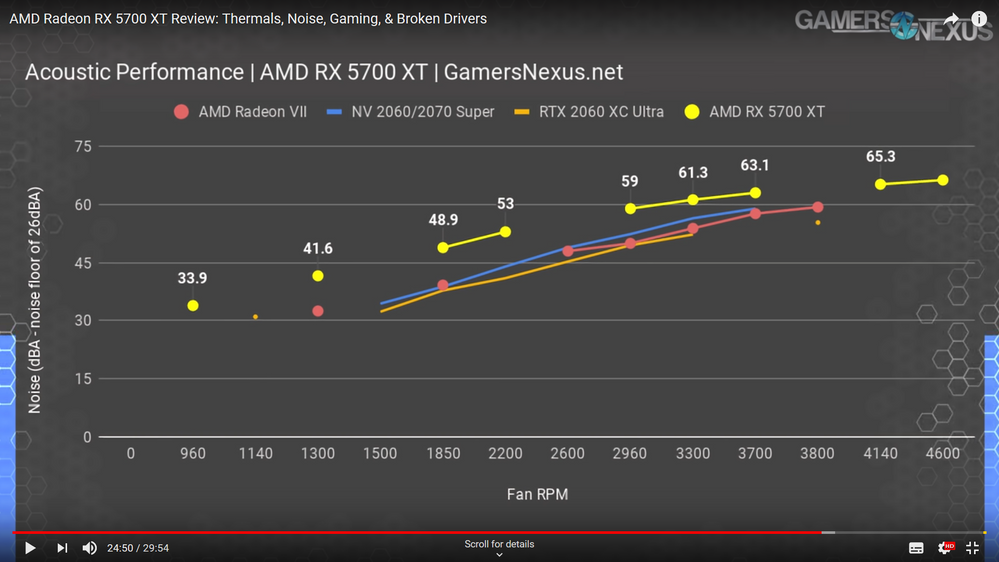

(4). That RX5700XT reference blower design. I thought AMD had learnt the lesson with the Radeon 7 triple fan cooler which is much better than a blower design. These are gaming cards. They will go in gaming PCs. They need a dual fan cooler option at launch. Also I think the RX5700XT "dent" makes the GPU look broken. The RX5700 shroud looks cheap and lack of a backplate is just stupid. It will cost the user another $20-30 + time to order and fit a backplate design for the card.

AMD said the new blower cooler design would be quiet. It appears not:

(5). Feature differentiation.

Radeon Chill needs working on to fix it so it is usable. I have made many posts and run many tests. I cannot use it to save power and be able to hit 60 FPS with keyboard only input FPS. The interface for Chill is broken in the Radeon Overlay. Chill_Max is tied to Local FRTC; it should not be that way. Chill_Max is just an FPS scaling factor. Global FRTC should always be available and not tied to Chill_Max. I have made many posts about this pleading for it to be fixed. New Chill feature to limit rapid mouse movement to Chill_Min to save power does not work consistently.

Radeon Image Sharpening - there is already a contrast aware sharpening algorithm in ReShade. This is not a big deal. An Nvidia or AMD user can use ReShade to get this feature.

GPU scaling - I do not know if the new RX5700XT implements new integer scaling or not. I think this was down as a new feature in next big Adrenalin Update mentioned here: Vote for Radeon Software Ideas - Feb 2019

Since there is not even a brief spec describing the feature I do not know.

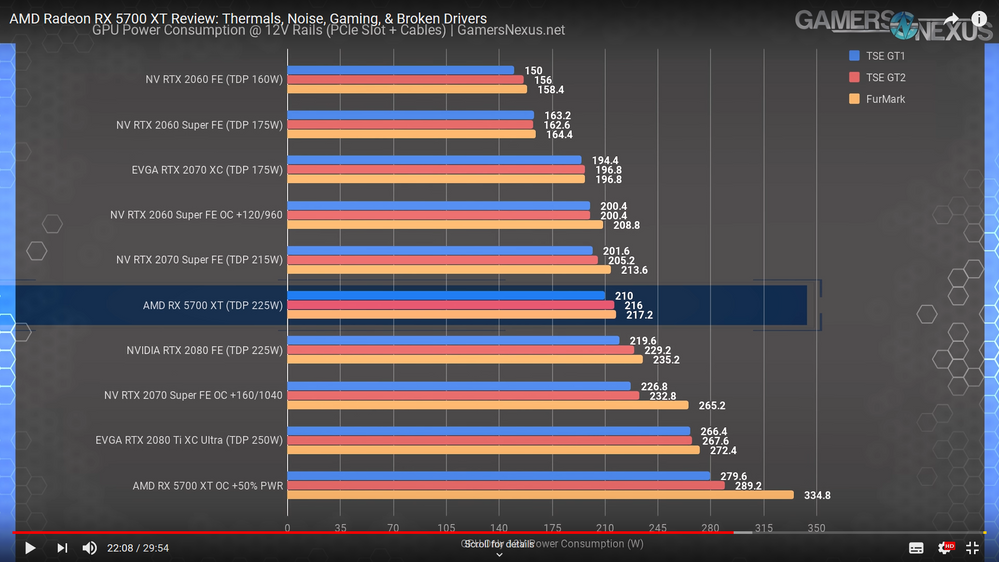

(6). Power consumption and thermals in that blower cooler.

Look at this graph:

Wattman is broken and so the reviewer set the power limit to +50% on the AMD GPU.

It is pulling ~ 335 Watts with a +50% power limit in a blower cooler. That is interesting.

It is telling me that the RX5700XT in the review is operating near the edge of thermal stability at 0% power (default) settings.

That 335 Watts is a similar power draw to that I see reported on an RX Vega 64 Liquid running BFV DX12 in turbo mode settings with power slider then set to +50% and using an undervolt on the GPU core and the HBM overclocked to 1120MHz. I was attempting to hit 60FPS at 4K Ultra. No chance.

I recently saw my RX Vega 64 Liquid pull 350 Watts in Far Cry 5. That's on a liquid cooled card. It crashes when the GPU temp gets to about 58°C.

So now I wait to see if AIBs can launch a dual fan 2 PCIe slot high (40mm) Navi GPU in a standard reference card form factor, but even then it would have to be cheaper than the Nvidia card because of the above points.

Sorry but that is my honest opinion. I wish I could be more positive about it.

The Navi GPU itself looks good as an RX 480/580/590 replacement but the price is too high, the blower cooler decision and design is bad. AMD drivers are not stable enough and feature differentiation is insufficient.

AIB cards must turn up quickly with working BIOS and Drivers this time, not take six months. Even then when they do the price will likely be 50-100 higher than AMD reference cards which puts the RX5700XT directly against the RTX2070 Super in which case no chance.

I think one thing to remember when comparing cards speed wise is not to just compare stock speed. For instance my RTX 2060 overclocks like crazy. I get very close to 2070 speed. I bring this up as it is said that a stock 5700 XT is faster than a stock 2060 Super. Question is how do they compare at a reasonable overclock? Plus AIB makers will release factory OC Supers too. So far it is looking like Navi doesn't have much headroom for an overclock so we will see if AIB partners can change that. So if you can easily bridge the gap with a little OC, get a couple of wanted games, have ray tracing support and IMHO a better driver experience, it would still be hard for me to want a Navi at this point. Me personally I will be more interested in what happens when they finally bring ray tracing support to the cards. By then Intel will likely have their card out too. The great thing about all this though is we once again have real competition in the market so that is always good for us, the consumer.

I agree. Already said it here: https://community.amd.com/thread/240214

Auto Overclocking actually works on Nvidia GPUs. I can run Thundermaster or Asus GPU Tweak II on my Palit RTX 2080 OC (it's already an overclocked RTX 2080) and I consistently get an additional 10% overclock on the GPU core and the power consumption does not go through the roof. I have manually overclocked the GDDR6 on it by 5%. It definitely improves FPS performance no problem.

An RTX2060 will auto overclock by ~ 9%. It is nice to see Navi is clearly competition to a 2060 and 2070 and has resulted in these new Super cards.

I would like to be able to support AMD by buying a Navi GPU, but they have messed this launch up and running the Nvidia GPU's I own is just more reliable and less hassle for me.

I actually get just over a 20% OC on my RTX 2060. Probably hit the silicon lottery there but it runs great. The big thing for me is no longer having to tweak my card to get things stable. Just plug and play and so far it all works. On the AMD side I really only have the one computer I game on anymore and the others are all browser, office and video playback but these latest drivers screwed them up again. Had to revert to 19.5.1. That to me has been the most continuing trend for going on 2 years now: how bad the drivers are. It's a shame because before Wattman they truly were rock solid.

Over 24 hours since they first appeared, there are still plenty of 5700 XT and even more 5700 cards on Newegg, which shows that, unlike the Ryzen 3000 series, demand isn't exactly there, though some of it no doubt comes from the RTX 2060 and 2070 liquidations. Hopefully the board partners, especially Sapphire and XFX, will pressure Lisa Su heavily into issuing rebates when the custom cards hit the market next month. Cryptocurrency won't bail AMD out of this one, only a price war will.

Seems to me that the biggest mistake AMD made with this launch is not including even one AAA game with it. The 3 months of game pass would be nothing to me. The Super is coming with 2 brand new games that many people will want to play. So that will make up the price difference in many cases. I have also read now that the new 2060 does in fact OC enough to make up the speed difference, at least on the reference designs. The numbers I saw had the overclocked 2060 super literally only a few frames behind the Vega II in many benchmarks. So a pretty significant result. I will want to see more benchmarks on other sites to confirm that. That result was on Guru3d.

Now that I have found an affordable X570 motherboard, I can still afford a video card.

Right now the Radeon VII is the best card AMD has for < $1000 Canadian.

I disagree on the games, but you know my stance, a $400 card with 2 "free games worth $120" is still a $400 card, especially because I'm one of those people who wait a year or so before getting new games, both because they're significantly cheaper and because they're bug fixed, but it would be better than 3 months of something you have to keep paying for or you lose. In this case though I doubt even 3 AAA games could make Navi the better choice over the 2060 or 2070 at the current price point and upcoming price hike with the custom editions, they just need to be $100-$150 cheaper than they currently are.

And with all this talk about the 2060 and 2070, don't forget there's the GTX 1660 sitting pretty at $220 and the 1660 Ti at $280. The RX 5700 as it stands is only, at 1920x1080 (the 1660s' target res), 29% faster than the 1660 and 19% faster than the 1660 Ti, but costs 59% and 25% more, and again it's a reference edition vs a custom edition so that gap will grow. This is going to become even more of a problem with the way Navi is priced because AMD has to derive the 5600 from it, which means there is going to be a massive price gap between the 5700 and 5600. That is another reason why AMD needs to axe $100 and $150 off the 5700 and 5700XT's price right now, and give the people who bought one already game vouchers or gift cards, else they'd lose them to nVidia guaranteed.
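
For anyone who wants to check the value comparison, here is a minimal sketch of the arithmetic. The prices and the 29% / 19% speed figures are the ones quoted in this post; the function names and the "performance per dollar" ratio are just one illustrative way to frame it, not a benchmark result.

```python
# Launch prices quoted in this thread (USD).
PRICES = {"RX 5700": 349, "GTX 1660": 220, "GTX 1660 Ti": 280}

# 1080p speed advantage of the RX 5700 over each card, as quoted in the post.
RX5700_SPEEDUP = {"GTX 1660": 0.29, "GTX 1660 Ti": 0.19}


def price_premium(card: str, baseline: str) -> float:
    """Fractional extra cost of `card` over `baseline`."""
    return PRICES[card] / PRICES[baseline] - 1.0


def relative_value(speedup: float, premium: float) -> float:
    """Performance per dollar relative to the baseline card (1.0 means equal value)."""
    return (1.0 + speedup) / (1.0 + premium)


for baseline, speedup in RX5700_SPEEDUP.items():
    premium = price_premium("RX 5700", baseline)
    value = relative_value(speedup, premium)
    print(f"RX 5700 vs {baseline}: {speedup:.0%} faster, "
          f"{premium:.0%} more expensive, {value:.2f}x the performance per dollar")
```

A ratio below 1.0 means the RX 5700 delivers less performance per dollar than the card it is compared with, which is the point being made above.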

I'm just saying that if you are on the fence between 2 comparable products at near the same price point then the game bundles for many will make the choice for you.

I'm pretty sure Raja wasn't given free rein, knowing Intel they likely said, "We want X Y and Z by this date, and if you don't do it, your ßutt is fired, and good luck finding a job at nVidia". If it's true that Raja went both overbudget and overschedule on Vega, Intel isn't going to tolerate that, not when they're looking to make up a lot of lost CPU revenue with GPU, especially in the enterprise market. I'm assuming with the lack of articles about Intel using TSMC or Samsung that Intel will be fabbing their own GPUs, and right now that means they're going to be competing with 10nm (or even 14+++++++ if there are any more delays) against TSMC and Samsung made 7nm chips for AMD and nVidia, and unless their 10nm process is as efficient out of the gate as TSMC's more mature 7nm EUV is, they're going to be facing an uphill climb.

I have no doubt on any of that. I'm sure he is answering to them, I just bet he had a lot more R&D budget to work with. I do kinda wish Intel some luck on the GPU front if only because real 3 way competition again would just be good for pricing and moving technology forward. Gotta say I did LOL when I read the articles about the losses they are taking. Karma is a b*tch.

Like this: Intel's Embree is enabling ray tracing on all DirectX 11 graphics cards. This sort of implementation will go along with AMD's RT implementation as shown in their patents where some of the work is done in software, and some in dedicated hardware vs nVidia's all hardware approach. It's not as full fledged as nVidia's approach yet (considering nVidia is throwing a ton of resources behind their RT tech), but this is how Intel is helping move gaming tech forward already.

https://www.wccftech.com/world-of-tanks-encore-rt-demo-lets-you-test-ray-traced-shadows-on-all-dx11-gpus-made-with-intels-embree/

Plus it's not really just software. AMD has lots of general compute units and utilizing them through a different approach in software is just smart.

A DXR shader has been available for quite some time.

I do not know how many here have seen what radiosity can do for a scene?

Which was supposed to be a benefit of unified, programmable shaders, though it'd be impossibly complex to instruct a program to use X% of a CU to do computations and the remainder for graphical processing. Combine that with AMD having steadily lost user share for the last, what, 5 years, as they've stagnated, and it's just not been utilized.

Remember when the Radeon 4000 series was released with VLIW5 architecture? So much of it was underutilized that the Radeon 6000 series pared it back to VLIW4 and gained performance.

Unfortunately that's kinda been AMD's MO over the years: developing hardware and APIs that would be awesome if they had the market share for them to get adopted in a meaningful way. Even with Nvidia being as big as it is, I would still say very few coming games have announced RTX support overall. It is hard to get developers to do new tricks.

Thing about nVidia is that they have the money to have been in developers' pockets for ages through the TWIMTBP program to push nVidia performance and deny AMD the ability to optimize easily, and now with nVidia Studios they are remastering classics to include ray tracing. Also as AMD powered PS and XBOX will be supporting RT next year, RT will be a standard feature, though it will be interesting to see if it really starts to take off, or if it's like AMD's TressFX or nVidia HairWorks...

https://www.techspot.com/news/82326-nvidia-bringing-ray-tracing-support-more-remastered-pc.html

The question still though is at what level and how they implement the Ray Tracing. They don't have to take such a closed dedicated hardware path with it like Nvidia. I think the fact that they will have it on consoles will likely help bring it to more PC games ultimately even though on PC they have less market share, but time will tell.

Which is a good question. We know that AMD filed this patent application long ago (in 2017) for hybrid Ray Tracing setup, and we also know that AMD drivers now support Microsoft's DXR Ray Tracing on RX 5000 series cards. The current rumors are that AMD's annual feature update in December will bring RT to Navi, but there's still that question as to what level they will allow it to run at considering there's not any hardware acceleration.

I guess it just depends on the performance impact. We sure know that on the Nvidia side with their dedicated hardware they still take a huge performance hit turning it on. Time will tell....

I remember when ambient occlusion surfaced and it was about a 250 GFLOPS load on the GPU at 1920x1080 and worse at 2560x1440 and 3840x2160.

Crysis uses ambient occlusion which made the game rather demanding.
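
As a rough illustration of why the higher resolutions are "worse", here is a small sketch that scales the 250 GFLOPS figure by pixel count. It assumes the cost of a screen-space effect grows linearly with the number of pixels, which is a simplification; the function name and that linear model are assumptions for illustration, not measured numbers.

```python
BASE_RESOLUTION = (1920, 1080)
BASE_GFLOPS = 250.0  # figure quoted in the post above for 1920x1080


def scaled_load(width: int, height: int) -> float:
    """Estimate the load at another resolution, assuming cost is proportional to pixel count."""
    base_pixels = BASE_RESOLUTION[0] * BASE_RESOLUTION[1]
    return BASE_GFLOPS * (width * height) / base_pixels


for width, height in [(1920, 1080), (2560, 1440), (3840, 2160)]:
    print(f"{width}x{height}: ~{scaled_load(width, height):.0f} GFLOPS")
```

Under that assumption 2560x1440 has about 1.78x the pixels of 1920x1080 and 3840x2160 has exactly 4x, so the load would grow by the same factors.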

To be fair, the methods don't really seem that different. The RT cores accelerate the BVH search for the rays, but it sounds like AMD will just reuse the texture units for this purpose, so both are effectively hardware accelerated.

Based on how I read that patent blurb, the big advantage here is that it requires way fewer additional transistors because much of the existing hardware is being reused. BVH can be cached using the texture cache instead of dedicated memory in the RT hardware, and the traversal stack can be stored in the VGPR registers. The denoising then is done by existing shader cores which, due to rapid packed math, already can execute FP16 instruction sets (NVidia has the Tensor cores do FP16 instructions). Also, it doesn't really require the developer to do anything. The NVidia approach requires direct programming of the shader, RT, and tensor cores to be effective, while the AMD implementation can just happen on its own with little developer intervention which should help with uptake.

That is most likely the software side of this equation. The AMD implementation allows the shader to bypass the texture unit ray call. So if the TMUs are busy, or the compute units are busy rather than wait for a ray intersect data or denoising data, the shader can just opt not to do it. So in ray-tracing enabled games with heavy TMU use, the image quality of the AMD solution may decrease compared to NVidia. In the NVidia implementation, the RT cores will always do the BVH calc and the tensor cores always do the denoising because they don't do anything else. The AMD approach reuses existing hardware, and then uses a bit of software overhead to "decide" when to do it.
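
For readers who have not seen a BVH search before, here is a minimal generic sketch of what the "BVH search" and "traversal stack" mentioned above refer to. It is a textbook-style stack-based traversal with a slab test for ray/box intersection, written in plain Python; it is not AMD's patented method or NVidia's RT core logic, and the `Node`, `ray_hits_box` and `candidate_primitives` names are made up for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec3                        # minimum corner of the bounding box
    hi: Vec3                        # maximum corner of the bounding box
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    prim: Optional[int] = None      # leaf only: index of the primitive it contains


def ray_hits_box(origin: Vec3, direction: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Slab test: does the ray (t >= 0) pass through the axis-aligned box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            # Ray is parallel to this pair of slabs: it must start between them.
            if o < a or o > b:
                return False
            continue
        t0, t1 = (a - o) / d, (b - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def candidate_primitives(root: Node, origin: Vec3, direction: Vec3) -> List[int]:
    """Walk the BVH with an explicit stack and collect leaves whose boxes the ray hits."""
    stack = [root]                  # the traversal stack
    hits: List[int] = []
    while stack:
        node = stack.pop()
        if not ray_hits_box(origin, direction, node.lo, node.hi):
            continue                # whole subtree is skipped
        if node.prim is not None:
            hits.append(node.prim)  # leaf: would need an exact ray/triangle test next
        else:
            if node.left is not None:
                stack.append(node.left)
            if node.right is not None:
                stack.append(node.right)
    return hits


# Two leaf boxes under one root; a ray along +x through the first box only.
leaf_a = Node(lo=(0, 0, 0), hi=(1, 1, 1), prim=0)
leaf_b = Node(lo=(5, 5, 5), hi=(6, 6, 6), prim=1)
root = Node(lo=(0, 0, 0), hi=(6, 6, 6), left=leaf_a, right=leaf_b)
print(candidate_primitives(root, origin=(-1.0, 0.5, 0.5), direction=(1.0, 0.0, 0.0)))
```

The box tests and the stack handling in the loop are the parts that either dedicated hardware or reused existing units would have to carry out; which unit does what on real GPUs is as described in the posts above, not something this sketch settles.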

The Crytek raytraced demo used a Vega 56 card to rub it in nVidia's face that their overpriced hardware was not needed to have better lighting and reflections.

NEON NOIR: Real-Time Ray Traced Reflections - Achieved With CRYENGINE - YouTube

Also have to remember that while nVidia was first in the game with ray tracing, it was horridly inefficient and incurred a large performance penalty, and that they have gained massive performance improvements as ray tracing has matured and they figure out how to make it more efficient, and there are now more API options for ray tracing as well. If AMD has the guts to enable ray tracing support on Navi cards in December (still rumor of course), they have to feel confident that the 5700XT and 5700 will be able to compete against the 2060S and 2070S as far as ray tracing performance goes.