General Discussions

AMD fires back at 'Super' NVIDIA with Radeon RX 5700 price cuts

AMD unveiled its new Radeon RX 5700 line of graphics cards with 7nm chips at E3 last month, and with just days to go before they launch on July 7th, the company has announced new pricing. In the "spirit" of competition that it says is "heating up" in the graphics market -- specifically NVIDIA's new "Super" RTX cards -- all three versions of the graphics card will be cheaper than we thought.

The standard Radeon RX 5700, with 36 compute units and speeds of up to 1.7GHz, was originally announced at $379 but will instead hit shelves at $349 -- the same price as NVIDIA's RTX 2060. The 5700 XT, which brings 40 compute units and speeds of up to 1.9GHz, will be $50 cheaper than expected, launching at $399. The same goes for the 50th Anniversary edition, which has a slightly higher boost speed and stylish gold trim and will cost $449 instead of $499.

That's enough to keep them both cheaper than the $499 RTX 2070 Super -- we'll have to wait for the performance reviews to find out if it's enough to make sure they're still relevant.

Really, if you think about it, the gaming market hasn't advanced much at all in the last... 5 years, yeah. As GPU power has increased, game detail levels and complexity have increased with it, and since AMD has not really been pushing the envelope since the HD 7000 series, nVidia follows suit and settles for minor performance increases with some new features on top. nVidia has the resources at its disposal and could easily have crushed AMD by now, but the money is in small incremental updates, and Intel is king of that strategy. Conversely, AMD could have shown it was back in the game by pricing the 5700 series as aggressively against the 2070 as it did with the HD 4000 series against whatever card nVidia had at the time; I can't think of it off the top of my head.

All of that results in 4K, and really 2560x1440, still being relegated to the high end, except in the case of older games and some lower detail levels, which gives game studios very little incentive to optimize their games for those resolutions. When the next Xbox and PlayStation are released, both targeting 4K60, I believe we might start seeing better overall 4K performance, but it's still going to be relegated to the high end as long as prices stay where they are, since, as you know, the mid range is what drives the market.

I think the most surprising thing is that the AIBs are not screaming at both AMD and nVidia. Revenues are down, PC shipments continue to fall by the millions, ASUS's stock, for example, is down 25% in the last year, and they're faced with prices so high that GPUs are not in demand, especially when you consider the impending tariffs, which will push US prices up to about what they are in the UK.

Though look on the bright side: when China invades Hong Kong and then sets its sights on Taiwan, we won't have to worry about GPU prices, because we'll all glow in the dark.

pokester wrote:

No doubt customers are not stupid. They are not going to flock to replace cards with new ones that are only incrementally faster. RTX should have been a value-added addition to the product line, not the driving force behind the reason to upgrade, especially when demand for the feature would be at best a few years down the line. It is great they could add it, but they should have made a larger performance improvement with the release. These graphics card prices are just too much; they act like the mining boom is still on. I would bet that the high failure rate Nvidia has had with the 2070 and above has not helped sales. The reviews on sites like Amazon and Newegg are riddled with people complaining of those cards failing within months. It seems that AMD and Nvidia have opposite problems: Nvidia has more hardware problems than software ones, and it is kind of the opposite for AMD. I think both companies have honestly been expected to produce too much too fast when it comes to capability. 4K requires so much power to render. In many ways, when you look at where things were just 5 years ago, with all the shortcomings, it is pretty amazing where we are now, but not that surprising that a lot of issues exist because of it.

The failure rate for the Turing cards was above average early on, but it was not that bad. Once the dual-fan models came to market, the cards ran cooler and were more durable.

With my GTX 1060, an RTX 2070 SUPER would be a strong upgrade. Still, I am wondering what Navi 2 and Ampere are going to offer to perk up games.

I agree; the issue is that all current tech has been pushed far past what the current architectures were really intended to do, all for the sake of supporting 4K. Even with the upcoming console generation, if it follows what has been done in the past, I really doubt it will match ultra settings at 4K on a PC with the most capable hardware. They just can't afford to put that in a console. It will likely be more like 4K at medium settings at between 30 and 60 fps, which will still be a huge increase. On the PC, the next gen will struggle to deliver 4K ultra settings on mid-level cards at that same 30 to 60 fps. Again, this is the next gen to come, not what we have now. I think we are still at least a couple of generations away from real high-refresh-rate gaming at 4K. That is why the high failure rates exist. Heat and high power just kill processors, and the only way we have to do 4K and high-refresh 1440p is to run architectures way beyond the point where they run efficiently.

I get your thoughts on China too. It would be funny if it were not so accurate.

You are absolutely correct, and the Super cards really rectify those shortcomings and raise performance to a point that starts to justify upgrading from GTX cards.

gotta link?

High refresh rates really aren't an issue unless you play a certain old game that requires 240Hz, and adaptive sync technologies have helped mitigate the need to hold 60/75 FPS at all times, but when a 2080 Ti can't even achieve that across the board... Considering the likely development cycles, it will probably be 2022 before uncompromised 4K60 gaming at the HIGH END becomes a reality, trickling down to the mid range in 2025. nVidia will no doubt achieve this first, with Intel probably second, as they have the money to spend on R&D and Intel is going to want to come in like a hurricane, but the question is how fast AMD will adapt to a three-manufacturer market. Next year's cards are going to be a bust by all early accounts. They're going to be Navi based, not next gen, so quite likely they'll be pushing 250-300W if they're an "nVidia killer", UNLESS Navi has a glaring inefficiency that AMD can rectify, since we know AMD will be implementing its hybrid ray tracing technology. Then there's HBM2E, which we know those cards will use and which will add a large cost; on Vega it added $160 plus the cost of the packaging... Plus, if the mid-range cards cost $400-$500, you figure AMD's high-end cards will be $750-1000 like nVidia's...

Next year is going to be very interesting for sure. nVidia is releasing its RTX 3000 series, which will likely be a refinement of the RTX 2000 series, so I think we can expect 25% higher performance with a larger jump in ray tracing. Intel is going to release its new cards next year starting at $200 and will likely target the entire mid range. And, let us not forget, AMD will be releasing its 4000 series processors, which should hopefully fix the 3000 series' shortcomings and will be the final processors for Socket AM4, and Intel will release its long-awaited 10nm parts.

I consider ray tracing to be more of a gimmick than anything else.

I am more interested in Halo: MCC coming to Steam, hopefully this fall. I still have my original Halo disc.

I am also still annoyed at not being able to use my X570 motherboard.

pokester wrote:

you are absolutely correct and the Super cards really rectify those shortcomings and up the performance to a point that starts to justify upgrading from GTX cards.

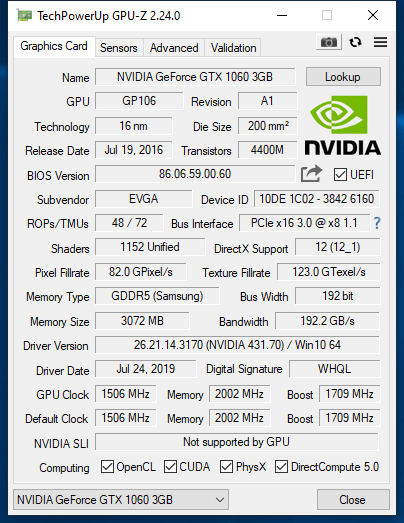

I consider my GTX 1060 to be an example of 16 nm hardware but recent marketing nonsense makes that metric dubious

I am starting to look at number of transistors per square millimeter and maybe a better metric. Simple calculation will clarify the real density of the logic.

so with 4400M transistors into a 200mm chunk of silicon, i get 22M per square mm
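
The density figure is just transistor count divided by die area. A minimal Python sketch using only the numbers quoted above (the function name is my own); to compare another chip, plug in its published transistor count and die area.

```python
def density_mtr_per_mm2(transistors_millions: float, die_area_mm2: float) -> float:
    """Transistor density in millions of transistors per mm^2."""
    if die_area_mm2 <= 0:
        raise ValueError("die area must be positive")
    return transistors_millions / die_area_mm2

# Figures quoted above for the GTX 1060 (GP106): 4400M transistors, ~200 mm^2 die.
gp106 = density_mtr_per_mm2(4400, 200)
print(f"GP106: {gp106:.1f}M transistors per mm^2")
```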

Will the 3000 series also include a die shrink? I would think they would see quite a jump if they get down to 7nm too.

I sure don't think it is a gimmick. I have used it in a few games now, and it honestly is pretty awesome. It just gives you another layer of realism that was not there before. Now the trick is having enough power to run it without sacrificing FPS; that is the current issue. If the next gen adds enough shader cores to upper-mid-level cards to make 1440p gaming with ray tracing on a possibility, then we are really going to start seeing faster card adoption. Plus, by that time most GTX users will definitely be looking to upgrade, as will owners of RX 480s and up who might convert. It will be interesting to see the three-way race at that point for those replacement card purchases, with red, blue and green competing.
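
The FPS cost comes from how ray tracing works: for every pixel you fire a ray and test it against the scene. Below is a toy, CPU-only Python sketch with one sphere and one light; the scene, names and shading are my own illustration, not how RTX hardware or any game implements it. Real games trace rays against scenes with millions of triangles, using acceleration structures to cut down the tests per ray, and that traversal and intersection work is what the dedicated RT hardware speeds up.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Distance t along a unit-length ray to the nearest hit on the sphere, or None.

    Solves |o + t*d - c|^2 = r^2, a quadratic in t (a = 1 because d is unit length).
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None  # the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    for t in ((-b - sqrt_disc) / 2.0, (-b + sqrt_disc) / 2.0):
        if t > 1e-9:
            return t  # nearest intersection in front of the ray origin
    return None

def render_ascii(width=40, height=20):
    # Camera at the origin looking down -z; one sphere of radius 1 at z = -3.
    center, radius = (0.0, 0.0, -3.0), 1.0
    light = (-1.0, 1.0, 1.0)  # direction toward the light, normalised below
    ln = math.sqrt(sum(v * v for v in light))
    light = tuple(v / ln for v in light)
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # One primary ray per character cell.
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            n = math.sqrt(x * x + y * y + 1.0)
            d = (x / n, y / n, -1.0 / n)
            t = ray_sphere_hit((0.0, 0.0, 0.0), d, center, radius)
            if t is None:
                row += " "
                continue
            hit = tuple(t * v for v in d)
            normal = tuple((h - c) / radius for h, c in zip(hit, center))
            # Simple diffuse (Lambert) shading from a single light.
            shade = max(0.0, sum(a * b for a, b in zip(normal, light)))
            row += ".:-=+*#%@"[min(8, int(shade * 9))]
        rows.append(row)
    return "\n".join(rows)

print(render_ascii())
```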

Shaders are parallel-friendly, which favors adding more and more logic to speed up the work.

The top cards now have some 4,000 shader cores, so they can do a lot of number crunching.
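
"Parallel-friendly" here means each pixel's result depends only on its own inputs, so the work can be split across as many cores as you have without changing the answer. A minimal Python sketch of that property; `shade()` is a made-up stand-in for a pixel shader. Python threads will not actually speed this up (the GIL serialises them); the point is only that splitting the work by row gives exactly the same image, which is what lets a GPU run thousands of shader invocations at once.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 64, 32

def shade(x: int, y: int) -> float:
    """Toy 'pixel shader': the result depends only on this pixel's coordinates."""
    return ((x * 7 + y * 13) % 256) / 255.0

def render_serial() -> list:
    return [[shade(x, y) for x in range(WIDTH)] for y in range(HEIGHT)]

def render_row(y: int) -> list:
    return [shade(x, y) for x in range(WIDTH)]

def render_parallel(workers: int = 4) -> list:
    # Rows share no state, so they can go to any worker in any order;
    # pool.map() still returns the results in row order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_row, range(HEIGHT)))

print(render_parallel() == render_serial())
```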

Intel has improved its integrated graphics very slowly; they need to put more effort into that.

I would imagine you will see a new mobile lineup in conjunction with their new desktop GPU cards when they release.

I expect Nvidia will quickly re-spin the RTX2080 (Turing) to 7nm, or just drop prices of the RTX2080/2070 Super, if they start to see significant uptake of the RX5700/XT.

I expect they will provide RTX and non-RTX versions.

That should bring down power consumption and die size and give two lower-cost options.

Meantime, I hope they spend more effort on getting new RTX games onto the market, because there are only ~3 games (BFV, Metro Exodus, and Shadow of the Tomb Raider) that are established and working ~OK right now.

Powercolor.com does not have the specs up for the Red Dragon or the basic dual-fan RX5700XT yet.

I will post them as soon as I see them.

Some UK and German retailers have them advertised, but I have not seen them at any US retailer yet.

Actually, yes, it is old, but the RTX version of Quake 2 is pretty darn cool. I played through the whole campaign again and it was really fun, and not just in a retro way. The ray tracing really adds to the experience IMHO.

Thanks - I saw a "review" of the RTX version of Quake 2 on YouTube from "Joker Productions" some time ago.

He was really harsh about it, and I didn't really think he gave it a fair review.

I do intend to try it out when I get time.

Well, I think they think it is somehow going to overhaul all the graphics too. It does not, but the lighting and water difference is awesome. The global lighting IMHO is night-and-day different. But to each his own, as they say, and you can't please everyone. I honestly did not mind a reason to play an old favorite again. Plus it comes with an interface that works great in Windows 10. I think if you already have the files for it, you can just load the RTX version in Steam and import the full-version game files into the RTX demo.

colesdav wrote:

I expect Nvidia will quickly re-spin the RTX2080 (Turing) to 7nm, or just drop prices of the RTX2080/2070 Super, if they start to see significant uptake of the RX5700/XT.

I expect they will provide RTX and non-RTX versions.

That should bring down power consumption and die size and give two lower-cost options.

Meantime, I hope they spend more effort on getting new RTX games onto the market, because there are only ~3 games (BFV, Metro Exodus, and Shadow of the Tomb Raider) that are established and working ~OK right now.

All I know is that my GTX 1060 has not been fired yet. This card has blown away my expectations for what can be done.

For the cost of an RTX 2070 SUPER, I could buy a new CPU for my X570 along with a 750D chassis and a water cooler.

I have an HX1000i PSU, so no worries about any power-pig hardware, but my R5 2400G uses so little power and still does wonders for gaming.

The 3000 series is already 7nm, but TSMC has two variants that the 4000 series could be based on. 5nm will also be released next year, so it is -possible- AMD will use it to stay ahead of Intel's process, but one would imagine it would be much more costly to redesign for that node, especially when a noticeable performance gain can be had simply by perfecting yields to eliminate the "one fast, many slow" arrangement they use now. Holding off on 5nm would also give TSMC time to perfect the node for the new-architecture 5000 series, so AMD will not have the issues with it that they have now with the 3000 series. And the fact that 5nm isn't going to ramp up until the middle of next year would put AMD behind their "7" launch strategy and would delay the 5000 series as well.

My CPU is 4 cores/8 threads, which is all I need; there's no need for more cores to run one gaming card. More cores are going to bottleneck main memory.
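
The memory argument comes down to every core sharing the same DRAM bandwidth. A rough Python sketch of the theoretical peak and each core's share, assuming dual-channel DDR4-3200 with a 64-bit (8-byte) bus per channel; that memory setup is my assumption, not anyone's actual build. It only frames the argument: whether a given game is actually limited by this depends on the workload.

```python
def peak_bandwidth_gbps(mt_per_s: int, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    return mt_per_s * 1e6 * bus_bytes * channels / 1e9

def per_core_share(total_gbps: float, cores: int) -> float:
    """Even split of peak bandwidth when every core is streaming from memory at once."""
    return total_gbps / cores

# Assumed memory setup: dual-channel DDR4-3200.
total = peak_bandwidth_gbps(3200, channels=2)
for cores in (4, 8, 16):
    print(f"{cores:2d} cores: {per_core_share(total, cores):.1f} GB/s each of {total:.1f} GB/s")
```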

And now, with ray tracing coming to Minecraft, it really does seem that this once-niche feature is becoming ubiquitous, no doubt due to massive amounts of cash from nVidia...

https://www.engadget.com/2019/08/19/nvidia-ray-tracing-on-minecraft/

I was into ray tracing back in the 1980s, when a PC was 100x more expensive and 1000x less powerful.

Not AMD 3000, the upcoming Nvidia 3000 series next year. I was wondering if you knew about that? Great info, though. Nice read; I learned a lot I did not know, so thanks. You and Dave humble me and make me realize how much I don't know all the time!

Oh, those... I would say it's going to depend on how real they feel the threat from Intel is. If they think Intel has a chance at taking a measurable amount of market share, they'll go to one of the more optimized 7nm processes, but if they don't consider Intel a threat, then I think they could hold off until 5nm. Their architecture is more efficient than AMD's, and when there's no threat of a price war, stagnation is the result.

Nvidia seem to have responded to, or pre-empted, a few more things versus the AMD RX5700XT with their latest driver update.

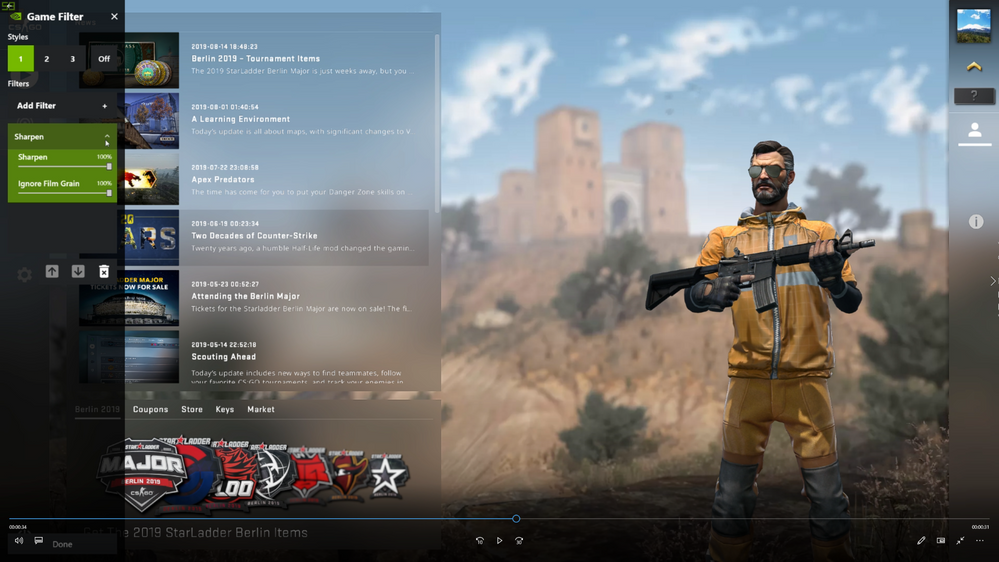

(1) They have added a new Sharpening Filter and increased the number of games supported by Nvidia Freestyle. That seems to be a response to AMD Contrast Adaptive Sharpening (Radeon Image Sharpening). I just took a look at it. The new Sharpening menu appears above the SpecialFX one in the Game Filter, and they have added support for many more games. Use of ReShade is blocked on CSGO, so I am unable to use CAS.fx or Smart_Sharp.fx, or even software-based ray tracing, on CSGO with my AMD GPUs.

It provides two slider controls: Sharpen and Ignore Film Grain.

Here are a couple of screenshots showing the filter in action:

Default:

New Sharpening:

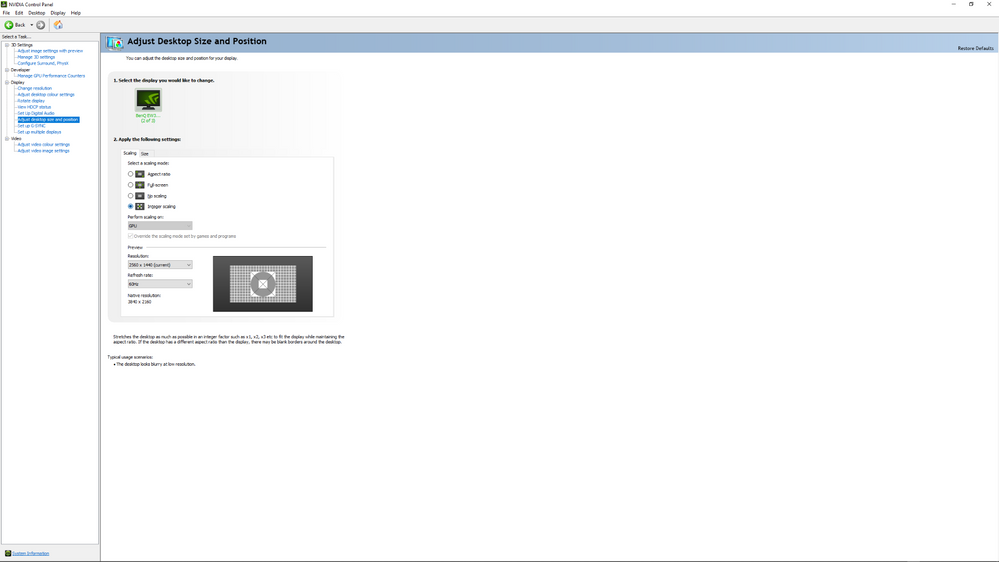
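
The idea behind contrast-adaptive sharpening is to push each pixel away from its neighbours, but by less where the neighbourhood is already close to black or white, which limits clipping and halos. A simplified Python sketch, assuming a grayscale image with values in [0, 1] and using only the four direct neighbours; it follows the general shape of AMD's published CAS shader as I understand it, but it is not AMD's code and says nothing about how Nvidia's new filter is implemented. `cas_sharpen` and its `sharpness` parameter are my own names.

```python
import math

def cas_sharpen(img, sharpness=0.5):
    """Simplified contrast-adaptive sharpen of a grayscale image.

    img: list of rows of floats in [0, 1]; sharpness: 0.0 (mild) to 1.0 (strong).
    Uses only the four direct neighbours, clamping at the image border.
    """
    h, w = len(img), len(img[0])
    # Most negative neighbour weight: -1/8 at sharpness 0, -1/5 at sharpness 1.
    peak = -1.0 / (8.0 + (5.0 - 8.0) * sharpness)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            c = img[y][x]
            n = img[max(y - 1, 0)][x]
            s = img[min(y + 1, h - 1)][x]
            west = img[y][max(x - 1, 0)]
            east = img[y][min(x + 1, w - 1)]
            lo = min(c, n, s, west, east)
            hi = max(c, n, s, west, east)
            if hi <= 0.0:
                row.append(c)  # all black: nothing to sharpen
                continue
            # Less headroom to black (lo) or white (1 - hi) gives a smaller amount.
            amount = math.sqrt(max(0.0, min(1.0, min(lo, 1.0 - hi) / hi)))
            wt = amount * peak
            # Negative neighbour weights push the centre pixel away from its neighbours.
            row.append((wt * (n + s + west + east) + c) / (1.0 + 4.0 * wt))
        out.append(row)
    return out

print(cas_sharpen([[0.2, 0.2, 0.6, 0.6]]))
```

On the one-row test image, the dark pixel next to the edge gets darker and the bright one gets brighter, while flat areas are left unchanged.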

(2) They have added Integer Scaling support in the Control Panel.

It looked like AMD was going to be first to deliver integer scaling; it is on the "Vote for new features" list for Adrenalin 2019.

Intel implemented it for their new GPUs, and now Nvidia has it.

I do not know if integer scaling is implemented on the RX5700XT.
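
Integer scaling upscales by the largest whole-number factor that fits the display and duplicates each pixel instead of filtering, so pixel art and low-resolution games stay sharp; any leftover space becomes black borders. A minimal Python sketch of that idea (function names are my own; this is not how Intel's or Nvidia's drivers implement it):

```python
def integer_scale(src_w, src_h, dst_w, dst_h):
    """Largest whole-number scale that fits, plus centring offsets (black borders)."""
    factor = min(dst_w // src_w, dst_h // src_h)
    if factor < 1:
        raise ValueError("source is larger than the display")
    out_w, out_h = src_w * factor, src_h * factor
    return factor, (dst_w - out_w) // 2, (dst_h - out_h) // 2

def upscale_nearest(pixels, factor):
    """Repeat every pixel factor x factor times: no filtering, so no blur."""
    return [
        [value for value in row for _ in range(factor)]
        for row in pixels
        for _ in range(factor)
    ]

print(integer_scale(1920, 1080, 3840, 2160))  # (2, 0, 0)
print(integer_scale(1600, 900, 3840, 2160))   # (2, 320, 180)
print(upscale_nearest([[1, 2], [3, 4]], 2))
```

The 1600x900 case shows the trade-off: a 2x scale only fills 3200x1800, so the image is centred with 320-pixel and 180-pixel borders rather than being stretched and blurred.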

Thanks for the heads-up. Downloading the latest update. Integer scaling, if it works as intended, should be the best method yet. Very excited to see it in action!

If I read the press release correctly, the new sharpening filter is an addition to nVidia Freestyle and not a Turing-specific feature, which means that any currently supported nVidia card, from the 600 series to the new RTX series, should be able to use it in any of the currently supported 600 games. That is in contrast to AMD's requirement of a Navi card, although RIS works in any game. That's a large win for nVidia, as AMD has no way of delivering an image sharpening filter to Vega and earlier products.

The above screenshots are from a Palit RTX2080 OC (Turing) card.

I have a PC with an ASUS GTX780Ti OC. It is Kepler architecture, the oldest currently supported generation.

I will let you know today if that filter works on the GTX780Ti when I update the drivers.

FYI, I can run ReShade.me + Contrast Aware/Adaptive filters on that GPU.

Also from the AMD side:

I have tested ReShade.me with Contrast Aware and Contrast Adaptive filters on the following GPUs and connections on older GCN hardware, and they all work.

Fiji XT - GCN 3.0:

- R9 FuryX on PCIe 3.0 x8.
- R9 Nano on PCIe 3.0 x4.
- R9 Nano on PCIe 2.0 x1 mining adapter.

Hawaii XT - GCN 2.0:

- R9 390X on PCIe 2.0 x1 mining adapter.

Tahiti XTL - GCN 1.0:

- R9 280X in Razer Core X eGPU box via Thunderbolt 3 at reduced PCIe 2.0 x4 bandwidth.

Based on testing the above GPUs, I do not see any reason why AMD would not enable Radeon Image Sharpening (CAS.fx) on all GPUs from GCN 1.0 up.

Now that nVidia has a sharpening feature for pre-Turing cards, AMD is going to have to follow suit or risk further alienating owners, but given AMD's long history of abandoning cards after only a year or two, I'm not holding my breath.

I hope AMD does enable Radeon Image Sharpening on all GPUs from GCN 1.0 up, if they can do it.

ReShade is blocked completely on some very popular multiplayer games for fear that customised compiles of ReShade could be used.

This means that Contrast Aware/Adaptive Sharpening filters cannot be used in those cases, so no Radeon Image Sharpening substitute on those games.

Those customised compiles of ReShade can allow the use of depth-buffer-based shaders to cheat in online multiplayer games by revealing enemies.

Standard pre-compiled ReShade automatically disables depth buffer access in online multiplayer games to prevent cheating.

I was really hoping Integer Scaling would be implemented on the GCN cards as well.

Yep, I can confirm it works all the way down to my 1050 Ti. It does not seem to affect performance in any way either. Pretty cool!

My old HD 7870 is first-gen GCN, but I retired that card long ago for more recent and more powerful cards.

I just updated the drivers on the GTX780Ti.

The new sharpening filter is available and works using Nvidia Freestyle overlay. I tested it running on BFV.

Unfortunately, the Integer Scaling option is not available for the GTX780Ti.

I will put in a support request with Nvidia.

There are still technical reasons to use an HD7970 GHz OC 6GB GPU.

But sure, RX580/590/Vega56/64 prices will soon have to drop significantly.

I wasn't suggesting anyone goes out and buys an HD7970 now unless they have a very good reason.

colesdav wrote:

There are still technical reasons to use an HD7970 GHz OC 6GB GPU.

But sure, RX580/590/Vega56/64 prices will soon have to drop significantly.

I wasn't suggesting anyone goes out and buys an HD7970 now unless they have a very good reason.

Polaris cards are selling at around 1/2 of the new price on eBay, and price rot is gnawing away at them fast.

Pascal cards are now also around 1/2 of the new price on eBay.

No reason to get a 28nm-class card with the low prices for 16nm hardware.

RE: No reason to get a 28nm class card with the low prices for 16nm hardware

I wouldn't be so sure about that.

Go look at this FP64 table.

AMD Radeon and NVIDIA GeForce FP32/FP64 GFLOPS Table | Geeks3D

Thanks.
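
The FP64 point comes down to each chip's FP64:FP32 rate. A sketch of the usual peak-throughput arithmetic (shaders x 2 FLOPs per clock for a fused multiply-add x clock); the shader counts, reference boost clocks and FP64 ratios below are figures I am assuming from memory, so check them against the Geeks3D table before relying on them, and note that factory-OC cards run higher clocks.

```python
def peak_gflops(shaders: int, clock_mhz: float, fp64_ratio: float = 1.0) -> float:
    """Theoretical peak GFLOPS: shaders x 2 FLOPs per clock (one FMA) x clock,
    scaled by the chip's FP64:FP32 rate when fp64_ratio < 1."""
    return shaders * 2 * clock_mhz / 1000.0 * fp64_ratio

# Assumed reference figures: (shaders, boost clock in MHz, FP64:FP32 rate).
cards = {
    "HD 7970 GHz (Tahiti)": (2048, 1050, 1 / 4),
    "GTX 1060 6GB (GP106)": (1280, 1708, 1 / 32),
}
for name, (shaders, mhz, ratio) in cards.items():
    fp32 = peak_gflops(shaders, mhz)
    fp64 = peak_gflops(shaders, mhz, ratio)
    print(f"{name}: FP32 {fp32:.0f} GFLOPS, FP64 {fp64:.0f} GFLOPS")
```

With these inputs the two cards land at similar FP32 peaks, but the 28nm Tahiti card has roughly eight times the FP64 throughput, which is the kind of workload where an old card can still make sense.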

The new sharpening filter does throw a wrench into things. AMD's higher-end Polaris card, the RX 590, is now $190-$200, and the GTX 1660 can be found for $215-$220. The 1660 already consumes nearly half as much power as the 590 and is much quieter, two things very much in nVidia's favor considering the very small price difference, but add in image sharpening and it becomes very difficult to recommend an AMD card even for the most popular resolution, 1920x1080.

I still have an HD 7870 and a 7950 in service, and they still work great. I have 3 cards newer than those, later GCN versions, sitting on a shelf, replaced by green cards because they would no longer work properly with current drivers. Since I do not game on the older cards, I leave them running older drivers, and they are and always have been flawless. So for me they are the better cards: at their age they have pre-WattMan drivers that make them more stable and trouble-free, and thus BETTER CARDS. It certainly isn't all about gaming when it comes to GPUs. I use one machine solely for streaming, and that GPU is needed for it; on the other I still do some video editing.

I would add, though, that BF1 was the first game I ran into that the 7950 could not run well enough for my liking. Out of my many hundreds of games, there are still fewer than about 10 that I cannot run great at 1080p on that card.

Plus, add in what your experience will be with driver features actually working as they should. Most users still prefer a card that works as it should out of the box, without having to so-called TWEAK it to get it running. If the two card choices are about the same price, or even if the one with the better ease-of-use reputation is slightly more expensive, I know which one I would choose.