Archives Discussions

- AMD Community

- Communities

- Developers

- Devgurus Archives

- Archives Discussions

- Re: Erroneous GPU behavior for atomic_add and atom...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Erroneous GPU behavior for atomic_add and atomic_sub

While running some OpenCL kernels on R7-240 and RX-550, I see this behavior:

float w_rnd(__global unsigned int * intSeeds,int id)

{

uint maxint=0;

maxint--;

uint rndint=w_hash(intSeeds[id]);

uint tmp0=atomic_add(&intSeeds[id],0);

atomic_sub(&intSeeds[id],tmp0);

atomic_add(&intSeeds[id],rndint);

//intSeeds[id]=rndint;

return ((float)rndint)/(float)maxint;

}

here, the commented-out line instead of 3 atomic functions works fine on a R7-240 but does not work fine on a RX550 so I tried these 3 atomics to make sure"value update" but this caused wrong values for the last 75 percent of buffer. I need the values updated because some kernels use random number generator more than once and I thought atomics could do this but they can not.

R7-240 works with both atomics version and simple version but Rx550 can't work with either version.

whash function is just a randomizer.

uint whash(uint seed)

{

seed = (seed ^ 61) ^ (seed >> 16);

seed *= 9;

seed = seed ^ (seed >> 4);

seed *= 0x27d4eb2d;

seed = seed ^ (seed >> 15);

return seed;

}

a kernel uses random number generator to pick a random value and then depending on that random value, it does unit-positioning on a map using random number generator two more times which makes 75% of units on a zigzag line instead of normally distributed on map. R7-240 uniformly distributes all units but RX-550 distributes only to 25% (upper-left quadrant) and leaves all remaining units on an inclined line. This is for atomics version.

Non-atomic version distributes better for RX550 but this time distributions are dependent on first usage of random number generator such that if first value is between 0 and 0.33 then it distributes right but all 0.34 and up to 0.99 (randomized) values cause map positionings wrong places and more narrow area of map instead of uniformly to whole map. So its like first usage of generator makes second and third usages generate a narrower range of values but works totally ok for R7-240. I suspect this is due to R7-240 not caching these values for read-only in kernel but RX-550 is caching and reading non-updated values, I may be wrong. Since OpenCL uses a relaxed-memory hierarchy, this is possible right?

What could be the hardware dependency affecting this?

Also If I generate random numbers 10 times more, it works for RX550 too. Is this working due to 10 times running same function forces a value update or is it simply a randomness issue? I use only thread-id values as seeds to start each random number generation independently so both R7-240 and RX-550 should do same with whash() function from same thread-id values and same device buffers.

Here is a kernel using this generator(commented-out for loop fixes the erroneous behavior, also I asked someone with a Vega card to try, same thing happened as RX550(and also this for-loop fix worked!)):

__kernel void initShips(__global float * shipX, __global float * shipY,

__global uchar * shipState, __global float * shipRotation,

__global uchar * shipTeam, __global unsigned int * randSeeds,

__global int * shipHp, __global int * shipShield)

{

float mapWidth=@@mapWidth@@;

float mapHeight=@@mapHeight@@;

int i=get_global_id(0); // ship id

uchar team = (int)((w_rnd(randSeeds,i)*2.9999f));

/*

for(int j=0;j<10;j++)

{

team = (int)((w_rnd(randSeeds,i)*2.9999f));

}

*/

shipTeam=team;

if(team==0)

{

shipX=0.001f+w_rnd(randSeeds,i)*mapWidth*0.498f;

shipY=mapHeight*0.05f + wa_rnd(randSeeds,i)*mapHeight*0.90f;

shipRotation=w_rnd(randSeeds,i)*30.0f-15.0f;

}

else if(team==1)

{

shipX=mapWidth*0.501f+ w_rnd(randSeeds,i)*mapWidth*0.498f;

shipY=mapHeight*0.5f + w_rnd(randSeeds,i)*mapHeight*0.5f;

shipRotation=w_rnd(randSeeds,i)*30.0f+165.0f;

}

else if(team==2)

{

shipX=mapWidth*0.501f+w_rnd(randSeeds,i)*mapWidth*0.498f;

shipY=w_rnd(randSeeds,i)*mapHeight*0.5f;

shipRotationrnd(randSeeds,i)*30.0f+165.0f;

}

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for reporting it.

First, please help me to understand the issue. From the above description, it seems that w_rand() is the main suspected function and there are primarily two issues observed on Rx550:

- Non atomic version i.e direct assignment not working

- Atomic version works for initial values, but then produces erroneous result

Please correct or add anything important if I missed. Also, please mention about OS and driver version. It would be really helpful if you could share the host-code and a reference to verify the correct values.

One thing about the atomic version. Did you check with atomic_xchg() instead of atomic_add and atomic_sub?

Btw, testing w_rand() function with a simple host-code, it looks like both versions of code working fine on Cazzio as well as Hawaii.

Regards,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. Direct assignment is not working for 67% of a buffer (ship x-y coordinate buffers), in a way that first usage(direct assignment) affects those so distribution is corrupt and not giving 0-1 range but more like 0.7 - 0.9 range so alls ships get very crowded around that area of map if first assignment results in a team=1 or team=2 (this is at least not so bad since there is still some randomness (but different for each team, weirdly)). By first usage, I mean inside of a kernel, calling generator multiple times and mentioning the first gen.function call in kernel.

2. Atomic version is also similar(again, for team=0), but this time team-1 and team-2 have same x-y values{(23,23) (24.5,24.5) (8100,8100)} hence they make a line instead of a cloud. I guess y values just overrride x values or the opposite or they somehow get two consequently same values which is very low probability to be seen on consecutively 670000 ships which is obviously much worse than case (1.) .

I just installed CodeXL 1.9 and see no errors related to any buffer or any kernel, it just complains about device not found since check all kinds of devices GPU,CPU,ACC distinctively and get only successfully returned devices.

Editor here bugged somehow and stopped me editing article further so I coouldn't see rest of kernel here(I did include system specs, they're gone), even for editing.

64-bit Windows-10 fall creators update, all updates done. Driver: 17.11.1. Project is 64-bit compiled.

You can find kernel file in this rar. KaloriferBenchmarkGPU/EpicWarCL017.rar at master · tugrul512bit/KaloriferBenchmarkGPU · GitHub

The software design of project in this link is so horrible that I feel embarrassment to give source code. But all OpenCL kernels are in cl file. R7-240 fills map as intended, but RX550 nor Vega can't do same unless the mentioned dummy for loop is added.

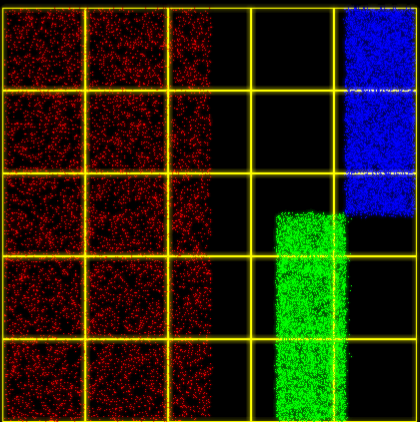

R7-240(both normal and atomics make this):

RX-550 / VEGA normal:

RX550 Atomics: they start as thin lines then collisions make them look like this. I think even first team gets somewhat unexpected values but randomized at least.

So just because their team(and indirectly color) is different, their x,y positions become like this and using atomics make green and blue two inclined lines instead of a dense cloud.

Best Regards,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My graphics driver stopped responding when I ran the above executable and clicked the detected device. Not sure if anything to configure before running the application.

I think it would be better if we focus on the main suspected area of the code that you pointed out. I tried to create a simple test-case that could be used as a repro for this case. Please find the attachment.

The test-case compares the result produced by the device with CPU result and prints whether there is a match or not. It's very simple. Please try both direct-assignment and atomic version in the kernel.

As per your above description, the code should produce a match on R7 240 but a mismatch on RX550.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I run the attachment at it said "Matched" for all cases. Then I made simple additions to use it 3 times per workitem(as in project, team -> x and y). It said "Not Match" only for RX550.

Here are the changes I've done:

I don't know why but only chaning generator name permits me put codes here:

C++:

ELE_TYPE w_hash(ELE_TYPE seed)

{

seed = (seed ^ 61) ^ (seed >> 16);

seed *= 9;

seed = seed ^ (seed >> 4);

seed *= 0x27d4eb2d;

seed = seed ^ (seed >> 15);

return seed;

}

C++ - test part:

bool isMatched = true;

unsigned int maxint = 0; maxint--;

for (int i = 0; i < NUM_ELEMENTS; ++i) {

unsigned int testVal = w_hash(hostBuff);

if (testVal < maxint / 2)

{

testVal = w_hash(w_hash(w_hash(hostBuff)));

}

if (testVal != devBuff) {

isMatched = false;

break;

}

}

and in kernel:

uint w_rnd_direct(__global unsigned int * intSeeds, int id)

{

//uint maxint=0;

//maxint--;

uint rndint=w_hash(intSeeds[id]);

intSeeds[id]=rndint;

return rndint;

}

uint w_rnd_atomic(__global unsigned int * intSeeds, int id)

{

//uint maxint=0;

//maxint--;

uint rndint=w_hash(intSeeds[id]);

uint tmp0=atomic_add(&intSeeds[id],0);

atomic_sub(&intSeeds[id],tmp0);

atomic_add(&intSeeds[id],rndint);

return rndint;

}

void kernel testkernel(global ELE_TYPE *intSeeds)

{

int id = get_global_id(0);

uint maxint=0;

maxint--;

/*

uint testVal=w_rnd_direct(intSeeds, id);

if(testVal<(maxint/2)){

w_rnd_direct(intSeeds, id);

w_rnd_direct(intSeeds, id);

}

*/

uint testVal=w_rnd_atomic(intSeeds, id);

if(testVal<(maxint/2)){

w_rnd_atomic(intSeeds, id);

w_rnd_atomic(intSeeds, id);

}

}

strangely, direct assignment version works this time. Also I tested in project that uses only private registers and writes only once to global at the end, both cards produce desired output.

In my opinion, direct version gets wrong only when three random values are saved to global arrays (so that optimizer can't omit somethings maybe).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Looks interesting. I don't have a Rx550 to verify myself. Could you please try below options and share your findings?

- atomic_xchg instead of atomic_add and atomic_sub

- A global memfence after each w_rnd_atomic

- Compilation with -O0

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

atomic_xchg failed, -O0 succeeded and also mem_fence(CLK_GLOBAL_MEM_FENCE) between all generator calls succeeded. All tests wwere done independently. Also adding fences inside of generator works too! (again, all for RX550. R7 240 runs fine with all of these)

I don't know if it helps but, in the project, I didn't use "-cl-std=CL1.2". Is this needed always? Maybe it is the reason for the Nvidia cards unable to run my project?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for sharing these findings. I suspected something like that. It means that use of memfence in between w_rnd_atomic calls keeps order and visibility of the atomic operations as expected and prevents the compiler from any reordering. The compiler tool-chain used for R7 240 is different so, it might be worked there. I'll still verify with the compiler team.

Usage of "-cl-std" flag is optional so you can avoid it. I copied the host code from one of my test-projects where the flag was used to compile the kernel for multiple versions.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you very much for helping. I'll start to convert global versions to register version(instead of using atomics on globals) and do the writing to global only once per kernel, this should be working for all GPUs. I always thought fence functions were for other workitems to see a workitems change on global/local, didn't think about reordering. I was going to compare this to nvidia cards(to see if those act same as R7-240 or RX550) but this doesn't work and gives cl_invalid_command_queue error(according to an nvidia user) even though queue/context/buffer/ndrange commands are all fine for the codexl1.9.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here is a nice thread barrier vs mem_fence? where MicahVillmow explained about the mem_fence very clearly as below:

As for a fence operation:

A fence operation instructs the compiler not to reorder any memory instructions around the fence instruction. There is no synchronization done, so on a mem_fence instruction, there is no guarantee that any load/store from another work-item to either local or global memory is visible to the current work-item. The only guarantee of mem_fence is that loads/stores before the fence will be executed before load/stores after the fence. Memory consistency in OpenCL is only guaranteed within a work-item and that work-item is unique in it's access of memory throughout the NDRange. The only exception is synchronization on the local address space by work-items in a work group via the barrier instruction.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you again. So, after compiler team verifies, then its my fault to not put fences between all generator(simple direct assignment or atomic) calls right? I'm sorry.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Nothing to say sorry here. Sometimes it is little bit tricky to understand the behavior. As said earlier, I'll still verify the same from the compiler team and confirm you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm not sure if this is a compiler bug or not yet. I haven't really looked very deeply into your code.

But the idea of a memory_fence is to provide the ability for higher-level programs to create mutexes... AND for the compiler / CPU / cache to provide optimizations that are beneficial to the programmer. Communication between the programmer and the compiler+system is important.

Lets look at some very basic Mutex code.

x=0; // At the start of the program

....

// Later in some thread

x++;

mutex.lock();

x++;

mutex.unlock();

x++;

In this case, lets assume the compiler and the CPU does nothing (for simplicity. The compiler definitely wants to move code around, but cache is all I need for this theoretical situation). Lets look at this purely from a cache level. The cache wants to reorder your code, because "x" is being written to multiple times. So the cache wants to update x "all at once", because its very expensive to talk to main memory!

Lets say "mutex" is in main memory, while "x" is in cache. Therefore, the cache will want to do the following:

x++;

x++;

x++;

mutex.lock();

mutex.unlock();

The cache WANTS to do this, but if it did, then your code would be incorrect. Clearly, the x++ needs to at very least be on the "inside" of the locked region, the so called "critical section". How do we communicate this idea of reorderings to the cache, while still giving the cache (or any other part of the system) the ability to make "sane" optimizations?

Well, any good "mutex.lock()" will put an "acquire fence". And any good "mutex.unlock" will be a "release fence". Roughly speaking, an "acquire fence" means that the following section of code is acquiring a critical section. While the "release fence" means that the previous lines of code are "releasing" the critical section. Of course, a mutex does more than just fences, so there's still lots of other code to be done.

x++;

mutex.lock(); // This is an acquire fence internally

x++;

mutex.unlock(); // This is a release fence internally

x++;

Now, the middle x++ cannot move! Because we have a properly specified memory fence.

But... the compiler / cache / CPU is STILL free to reorder the instructions to some degree, to improve the speed of our code. In this case, what will most likely be done is:

mutex.lock();

x++; // The first ++ is "moved down"

x++; // The original ++

x++; // The final ++ is "moved up"

mutex.unlock();

In this case, we were writing x++ "outside" of the critical section, the 1st and 3rd ones. Therefore, the first and 3rd x++ instructions are a data-race. Other threads might see any value of x, from 1, 2, or 3, and still be a valid from. The compiler / CPU / cache systems SHOULD be able to "pick any data race" and choose the fastest one. In this case...

mutex.lock();

x+=3;

mutex.unlock();

This is clearly the fastest implementation that's still correct. The compiler merged all the ++ instructions, and is simply defining that "this thread wins the undefined data-race, so other threads will always see x=3".

Does that make sense? The formal definition is more formal. But the "basic idea" of this whole setup is to make it easy to write mutexes. One fence at the start of a critical section, and one fence at the end of a critical section. The "mutex logic" still needs to be implemented with atomics however (!!!). Because synchronization is more than just juggling the potential reorderings of memory. Furthermore, a fence technically still needs an atomic to be "relative" towards to be properly defined. The fence defines which instructions can't move, while the atomic defines what the instructions can't move past.

x++;

y++;

z++;

atomic_work_item_fence(CLK_GLOBAL_MEM_FENCE,

memory_order_acquire,memory_scope_device); // This fence starts a critical section below itwhile(!atomic_compare_exchange_weak(mutex_variable, 0, 1)); // Simple CAS-based mutex. Keep trying to turn the 0-flag into a 1. Failure means someone else is in the critical section

// This code is now protected by the acquire fence. None of the code written here can move "above" the "atomic_compare_exchange_weak" instruction.

Notice that x, y, and z are NOT protected by the acquire fence. Becacuse they're in a "data-race" area. If they were moved into the critical section, then the code is still correct. All of this post describes OpenCL 2.0 atomics and fences (as well as C++ atomics). Again, I don't know how to use OpenCL1.2 atomics, but the same idea probably exists for it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, this makes sense. Especially for the random number generators here. 3 calls can be changed between themselves since I don't care which one is which as long as they do randomize. Similar to x+=3. I learn a new thing everyday but I wish I don't forget much. Very enlightening.

So much responsibility for controlling compiler/cache/cpu reorderings! But is fun.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey tugrul_512bit,

I'm just a hobbyist, but I think you're making a key beginner's mistake in your "atomics" code. Atomics do NOT provide ordering of memory operations. Ordering is provided by mem_fence (see: mem_fence).

The three atomics you wrote:

- uint tmp0=atomic_add(&intSeeds[id],0);

- atomic_sub(&intSeeds[id],tmp0);

- atomic_add(&intSeeds[id],rndint);

Without a memory fence, these three atomics can theoretically execute in any order (when viewed from the lens of another thread), and therefore are not going to be the protection you want. Again, atomics do NOT provide any guarantees about ordering! If you used a single atomic (such as atom_cmpxchg ), you'd have stronger guarantees.

With that said, I don't see how your code ever synchronizes, since it all accesses intSeeds[id] and "id" is a value based on get_global_id(0). So the problem ultimately remains a mystery to me. But I just felt like chiming in about the memory fence issue. To have properly synchronized code, you need to correctly be using atomics AND fences. With that said, I don't see any reason why things would be different on the two cards.

EDIT:

>>> Here is a kernel using this generator(commented-out for loop fixes the erroneous behavior, also I asked someone with a Vega card to try, same thing happened as RX550(and also this for-loop fix worked!)):

Honestly, I've never seen a random number generator like "whash" before. Where did you get it from? And why aren't you using something more standard like an LCGRNG? (See: this Linear congruential generator - Wikipedia ). Some random number generators require 'ramp up' time to get going. Since you are only using each RNG 4 or 5 times and then returning, the RNGs on the RX550 / Vega might be the correct behavior. IE: its not random yet.

I'll have to play around with this later to really see whats going on. Have you verified the whash generator on both the 240 and 550?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I tried this:

uint maxint=0;

maxint--;

uint rndint=w_hash(intSeeds[id]);

mem_fence(CLK_GLOBAL_MEM_FENCE);

uint tmp0=atomic_add(&intSeeds[id],0);

mem_fence(CLK_GLOBAL_MEM_FENCE);

atomic_sub(&intSeeds[id],tmp0);

mem_fence(CLK_GLOBAL_MEM_FENCE);

atomic_add(&intSeeds[id],rndint);

mem_fence(CLK_GLOBAL_MEM_FENCE);

//intSeeds[id]=rndint;

return ((float)rndint)/(float)maxint;

does same wrong distribution(lines) again as before(for RX550) and works for for R7-240(again).

Thank you for this knowledge you share, what can I do for the simple assignment version? I think compiler shouldn't re-order assignments if there are dependency right?

Edit: Thomas Whang is writer of algorithm. Original link: http://www.concentric.net/~Ttwang/tech/inthash.htm, Taken from: http://web.archive.or... and Quick And Easy GPU Random Numbers In D3D11 – Nathan Reed’s coding blog

Edit-2: The R7-240 GPU does what I intended to do, gets a distribution(Normal or Gaussion, doesn't matter as it is a game) that I needed.

Edit-3: mem_fence() doesn't fix simple assignment version neighter.

Edit-4: if compiler can freely reorder everything, what can I do for, for example a Kahan-Adder that I use in a scientific calculation? Does it get reordered so Kahan-Addition is actually broken too? In G++, I used assembly inline blocks to counter G++'s free reorderings. Whats the key for OpenCL version of Kahan Addition, for example? Should I expect mem_fence do the job between Kahan-Addition instructions?

Edit-5: In this project, there are codes like these:

int something = atomic_add(somethingElse,foo);

if(something)

{

int somethingDifferent=atomic_add(things,bar);

...

}

does this force a priority that something gets calculated first, always?

Edit-6: What if there is an agressive optimization similar to reordering here:

- seed = (seed ^ 61) ^ (seed >> 16);

- seed *= 9;

- seed = seed ^ (seed >> 4);

- seed *= 0x27d4eb2d;

- seed = seed ^ (seed >> 15);

- return seed;

how do I make sure private register operations are done serially with exact numbers given here? Fence operations work only for local and global memories. Do we always need assembler/asm blocks to keep order intact (especially for science)?

Assuming somehow I get binaries of compiled kernels(offline compiled). Does GPU change those codes(reordering, optimizing) on-the-fly?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey tugrul_512bit,

To be honest, I haven't studied the OpenCL 1.2 atomics at all. So I don't really know the "proper" way to write a CAS loop using the 1.2 version of the atomics. This stuff is also new to me, but I think its good practice if I try to explain it to someone else. I hope you don't mind my own inexperience in this matter...

> I think compiler shouldn't re-order assignments if there are dependency right?

Its more than the compiler: its also the memory subsystem (cache and write-back buffers especially) and CPU (I don't think AMD's processor is out-of-order... but x86 for example executes instructions out of order sometimes). So the job of the programmer is to say when reorderings are a potentially bad thing, and when they're okay.

> if compiler can freely reorder everything

The compilers / CPUs / Caches / Memory don't reorder "anything". They reorder in particular ways that make our code faster. Memory fences exist so that the programmer can say "Hey, don't do that". From a single-threaded perspective, the compiler / CPU / cache can reorder anything as long as the thread looks the same later on. But for multithreaded code, the reorderings may mess with the logic elsewhere (ie: another thread is looking at the memory and RELIES on the order in which the memory changes).

If a programmer places a memory_fence, then it tells the Compiler / CPU / cache to not reorder anything (causing a drop in performance). And acquire fences and release fences allow reorderings in such a way that we probably don't care about. (IE: Only if there's a data-race would the programmer be able to notice a difference).

So ultimately... no, that's not how you are supposed to use fences. Roughly speaking, a memory fence is used to correlate to a "Mutex Lock" and a "Mutex unlock". It says "Yo Compiler / CPU / Cache: please don't move the memory stores/loads through this fence. I'm writing a Mutex here and its really, really important that the following memory loads / stores are done in the correct order, because another thread is watching you".

Does that make sense? Under single-threaded conditions, memory fences and atomic operations don't really do anything.

-------------

> how do I make sure private register operations are done serially with exact numbers given here?

They will be done in the exact order from a single-threaded perspective. The main issue is when you're talking to SLOW global memory, the transfer from L1 cache -> Main Memory may make some of those operations look like they happened out of order.

So from a single thread, everything that you write in your program should look the same (unless there's a compiler bug). Memory fences and Atomics exist when you have multiple threads talking using the same memory location. Because at that point, memory is so slow and CPUs / GPUs are so fast... that the Main Memory isn't updated fast enough to keep up with all of the memory changes. Indeed, memory is coalesced, batched up, distributed, cut up, and put back together again.

-------------

In short: it doesn't seem like your code "depends on other threads", so I'm not sure if you need fences or atomics at all. Sorry for the wild goose chase...

-------------

Now, to fully answer your question... the proper way to write a CAS loop is described in this blog post: You Can Do Any Kind of Atomic Read-Modify-Write Operation

Unfortunately, that's using the C++11 CompareAndExchange instruction (which only exists in OpenCL 2.0). I'm sure there's a way to do it correctly in OpenCL 1.2, but I'm not confident in my ability to write that for you...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you Dragontamer,

If I do

a[id]=5;

b[id]=a[id];

should I not expect 5 in element of b, always, especially in relaxed memory of OpenCL? (edit: a and b are global)

It seems having a kernel that only generates(using only once per kernel repeat) random numbers and a separate kernel that uses them is more suitable to be stable on different GPUs.

Then I should generate random number using private registers then pass it to other generators in a chain-input-return way until all generations use same private register and write the result seed value(to global array) only once and only at the end of kernel.

Best regards,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The problem is that "Between" line 1 and 2, ANOTHER thread or work-item could have changed the value of a[id].

If you can guarantee me that NO ONE ELSE touches a[id], then b[id] == 5 and all is fine in the world. The problem, is that there's thousands of cores working simultaneously, updating memory in complicated ways. Even the CPU could theoretically be changing the value of a[id] for example, since global memory is theoretically accessible by the host!

That's what it means to have a parallel computer. Other cores might be changing your memory, "in between" your lines of code! So first, you have to promise me that no other core (including the CPU) is touching a[id].

If you can promise me that, then b[id] == 5.

-----------

EDIT:

> Then I should generate random number using private registers then pass it to other generators in a chain-input-return way until all generations use same private register and write the result seed value(to global array) only once and only at the end of kernel.

I think as long as you can ensure that each work item only touches "its personal seed", you should be fine. The main issue is synchronization. Its fine to have a bunch of seeds in global memory, as long as no other thread is messing with them. Work Item #1 gets seed #1, Work Item #2 gets seed #2... etc. etc.

As long as you work in that scheme, you shouldn't have a synchronization issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I guarantee no workitem ever tries to access that element. Perfectly parallel, 1-to-1 data flow. (edit: and yes, personal random number generators for all ships, edit-2: I don't add time factor to seeds so whole simulations must act same for every new simulation)