I have two buffers that are processed in parallel on two GPUs, one AMD and one nVidia, using OpenMP:

Part 1:

Active OpenCL platform : AMD Accelerated Parallel Processing

Active OpenCL Device : Cayman OpenCL 1.2 AMD-APP (1800.11)

Part 2:

Active OpenCL platform : NVIDIA CUDA

Active OpenCL device : GeForce GTX 560 Ti OpenCL 1.1 CUDA

The buffers are created with the following flags: CL_MEM_READ_WRITE | CL_MEM_COPY_HOST_PTR.

On each iteration, I need to exchange edge values between them. To do this, I would like to use map/unmap operations in parallel (as far as I know, this is faster than read/write).
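Each edge region that gets mapped can be described by a byte offset and a byte size. Those are the `offset` and `size` arguments of `clEnqueueMapBuffer`. The sketch below assumes a hypothetical layout that the question does not state: each device buffer holds `n` floats, with `h` ghost cells at each end, and the `h` interior cells next to them form the edge that is sent to the neighbouring device. The names `edge_region`, `left_edge_region` and `right_edge_region` are illustrative only.

```c
#include <assert.h>
#include <stddef.h>

/* Byte range of one edge region inside a device buffer; these values would be
 * passed as the offset/size arguments of clEnqueueMapBuffer.
 * Hypothetical layout: [h ghost | h interior | ... | h interior | h ghost]. */
struct edge_region {
    size_t offset; /* bytes from the start of the buffer */
    size_t size;   /* number of bytes to map */
};

/* Left edge: the h left ghost cells plus the first h interior cells. */
struct edge_region left_edge_region(size_t n, size_t h)
{
    assert(4 * h <= n); /* the two edge regions must not overlap */
    struct edge_region r = { 0, 2 * h * sizeof(float) };
    return r;
}

/* Right edge: the last h interior cells plus the h right ghost cells. */
struct edge_region right_edge_region(size_t n, size_t h)
{
    assert(4 * h <= n);
    struct edge_region r = { (n - 2 * h) * sizeof(float), 2 * h * sizeof(float) };
    return r;
}
```

Mapping only these two small regions, rather than the whole buffer, keeps the amount of data moved per iteration proportional to the halo width `h`.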

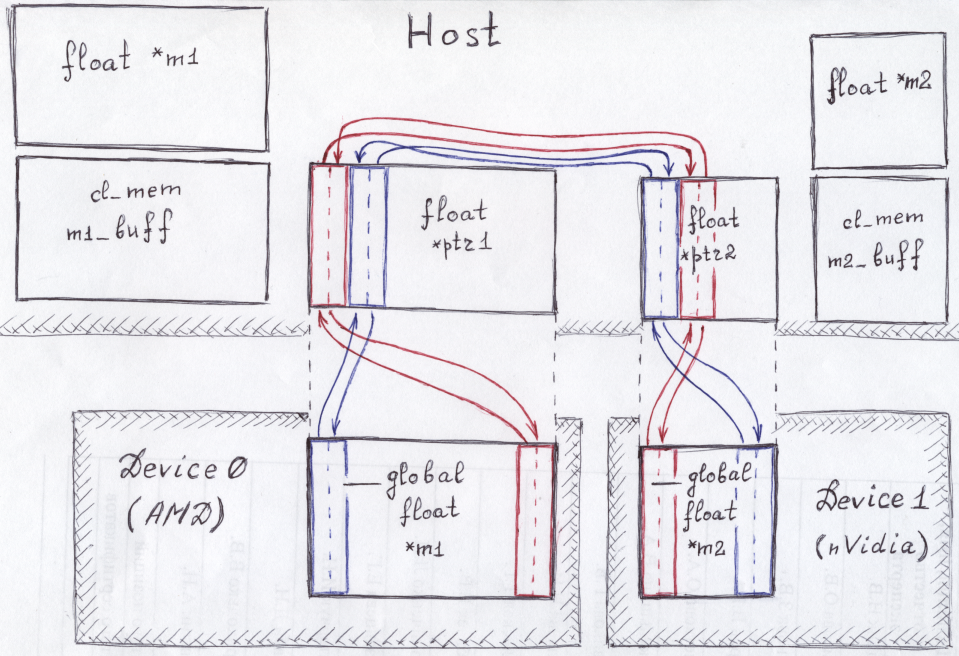

It should work like this:

Red and blue correspond to the two OpenMP threads. Each thread should map the corresponding regions, memcpy between the mapped edges, and unmap them back to the devices.
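The intended map → memcpy → unmap sequence can be modelled on the host without any OpenCL calls. In this hypothetical sketch, `sim_map` stands in for a blocking `clEnqueueMapBuffer` that copies a region into its own staging memory, and `sim_unmap` stands in for `clEnqueueUnmapMemObject` writing that copy back. The assumed layout of each device array of `n` floats is `h` ghost cells, the interior, then `h` ghost cells. `exchange_interface` is the work of one thread for one interface, where device `a`'s right edge touches device `b`'s left edge.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for a blocking map: copies `count` floats starting at
 * `dev + first` into freshly allocated staging memory, so every live mapping
 * gets its own address. */
float *sim_map(const float *dev, size_t first, size_t count)
{
    float *staging = malloc(count * sizeof(float));
    assert(staging != NULL);
    memcpy(staging, dev + first, count * sizeof(float));
    return staging;
}

/* Hypothetical stand-in for unmap: writes the staging copy back and frees it. */
void sim_unmap(float *dev, size_t first, size_t count, float *staging)
{
    memcpy(dev + first, staging, count * sizeof(float));
    free(staging);
}

/* One thread's work for one interface (a's right edge meets b's left edge). */
void exchange_interface(float *a, float *b, size_t n, size_t h)
{
    assert(4 * h <= n);
    /* Map [last h interior | h right ghosts] of a and [h left ghosts | first h interior] of b. */
    float *ea = sim_map(a, n - 2 * h, 2 * h);
    float *eb = sim_map(b, 0, 2 * h);

    memcpy(eb, ea, h * sizeof(float));         /* a's boundary -> b's left ghosts  */
    memcpy(ea + h, eb + h, h * sizeof(float)); /* b's boundary -> a's right ghosts */

    sim_unmap(a, n - 2 * h, 2 * h, ea);
    sim_unmap(b, 0, 2 * h, eb);
}
```

For example, `exchange_interface(d0, d1, n, h)` would be the red thread's work and `exchange_interface(d1, d0, n, h)` the blue thread's work on the wrap-around interface. Here they would run one after the other. In the OpenMP version each call runs in its own thread, which is only safe if every live mapping has its own address.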

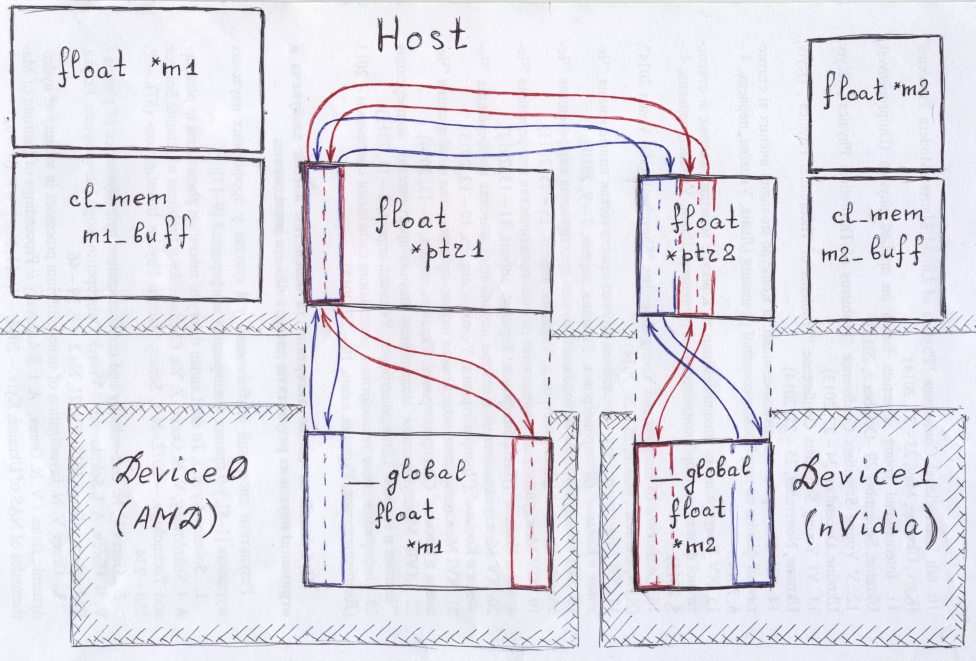

But in practice, it works like this:

On the AMD GPU, different parts of the data are mapped to the same address, so when the program runs in parallel, it produces wrong results. The nVidia card works correctly. The following sequence of pointers to the mapped memory for both devices (the device number is given in brackets) illustrates this behavior:

```
[0] 0x7f362a7e3000
[1] 0x7f363c1f5000
>>> [1] 0x7f363c210000
>>> [0] 0x7f362a7e3000
[1] 0x7f363c22b000
[0] 0x7f362a7e3000
>>> [1] 0x7f363c246000
>>> [0] 0x7f362a7e3000
[1] 0x7f363c261000
[0] 0x7f362a7e3000
>>> [1] 0x7f363c27c000
>>> [0] 0x7f362a7e3000
[1] 0x7f363c297000
[0] 0x7f362a7e3000
>>> [1] 0x7f363c2b2000
>>> [0] 0x7f362a7e3000
[0] 0x7f362a7e3000
[1] 0x7f363c2cd000
>>> [0] 0x7f362a7e3000
>>> [1] 0x7f36464aa000
[1] 0x7f36464c5000
[0] 0x7f362a7e3000
>>> [1] 0x7f36464e0000
>>> [0] 0x7f362a7e3000
[1] 0x7f36464fb000
[0] 0x7f362a7e3000
>>> [1] 0x7f3646516000
>>> [0] 0x7f362a7e3000
[1] 0x7f3646531000
[0] 0x7f362a7e3000
>>> [1] 0x7f364654c000
>>> [0] 0x7f362a7e3000
[1] 0x7f3646567000
[0] 0x7f362a7e3000
>>> [1] 0x7f3646582000
>>> [0] 0x7f362a7e3000
[0] 0x7f362a7e3000
[1] 0x7f36463aa000
>>> [1] 0x7f36463c5000
>>> [0] 0x7f362a7e3000
[1] 0x7f36463e0000
[0] 0x7f362a7e3000
>>> [1] 0x7f36463fb000
>>> [0] 0x7f362a7e3000
[1] 0x7f3646416000
[0] 0x7f362a7e3000
>>> [1] 0x7f3646431000
>>> [0] 0x7f362a7e3000
```

">>>" marks the "left" sub-buffer on each device (i.e., the blue sub-buffer on the AMD GPU and the red one on the nVidia GPU). As can be seen, device 0 always maps data to the same address, regardless of where the region is located in GPU memory.
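The following is a hypothetical host-side model, not a claim about how the AMD driver actually works. It shows why two live mappings that share one address break the exchange. `aliasing_map` imitates the logged device-0 behaviour by copying every mapped region into the same staging array. In one possible interleaving, the red thread maps device 0's right edge, then the blue thread maps device 0's left edge, and red reads the blue data. `mapped_ranges_overlap` is an illustrative check that a thread could run on the pointer and size of each map it performs. The ghost-cell layout (`h` ghost cells at each end of an `n`-element buffer) is an assumption.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAX_EDGE 16

/* Hypothetical model of the logged device-0 behaviour: every map returns the
 * same staging address, so a second live mapping overwrites the first. */
static float shared_staging[MAX_EDGE];

float *aliasing_map(const float *dev, size_t first, size_t count)
{
    assert(count <= MAX_EDGE);
    memcpy(shared_staging, dev + first, count * sizeof(float));
    return shared_staging;
}

/* Nonzero if the byte ranges [a, a + na) and [b, b + nb) overlap. Addresses
 * are compared as uintptr_t values, since relational comparison of pointers
 * into different objects is undefined in C. */
int mapped_ranges_overlap(const void *a, size_t na, const void *b, size_t nb)
{
    uintptr_t pa = (uintptr_t)a, pb = (uintptr_t)b;
    if (na == 0 || nb == 0)
        return 0;
    return pa < pb + nb && pb < pa + na;
}

/* Red maps device 0's right edge, blue maps its left edge, then red reads what
 * should be device 0's last interior cell. *overlap reports whether the two
 * live mappings share memory. */
float red_reads_after_blue_maps(const float *d0, size_t n, size_t h, int *overlap)
{
    assert(4 * h <= n);
    size_t bytes = 2 * h * sizeof(float);
    const float *red = aliasing_map(d0, n - 2 * h, 2 * h); /* red thread  */
    const float *blue = aliasing_map(d0, 0, 2 * h);        /* blue thread */
    *overlap = mapped_ranges_overlap(red, bytes, blue, bytes);
    return red[0]; /* expected d0[n - 2h]; gets blue's data instead */
}
```

In the log above, both threads' device-0 maps return 0x7f362a7e3000. That is the situation this model reproduces, so an overlap check like this would fire whenever both mappings are live at the same time. With a distinct staging area per mapping, it would stay silent.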

Is this mapping to the same pointer a bug in the driver, or is it intentional?

If it is intentional, is there some way to perform map/unmap operations in parallel?

Thank you in advance for your help.

Natalia