This article was originally published on June 15, 2022.

Editor’s Note: This content is contributed by Bingqing Guo, Software and AI Product Marketing Manager.

Vitis AI, the widely loved AI acceleration development platform, has a new release: Vitis AI 2.5, available as of June 15th.

We expect AI to play an increasingly critical role across a wide range of workloads and device platforms. To meet tremendous market demand from the data center to the edge, AMD Xilinx has focused on expanding and enhancing Vitis AI to deliver faster AI acceleration. This article provides an overview of the new and enhanced features in the 2.5 release.

More Optimized AI Models in the AI Model Zoo

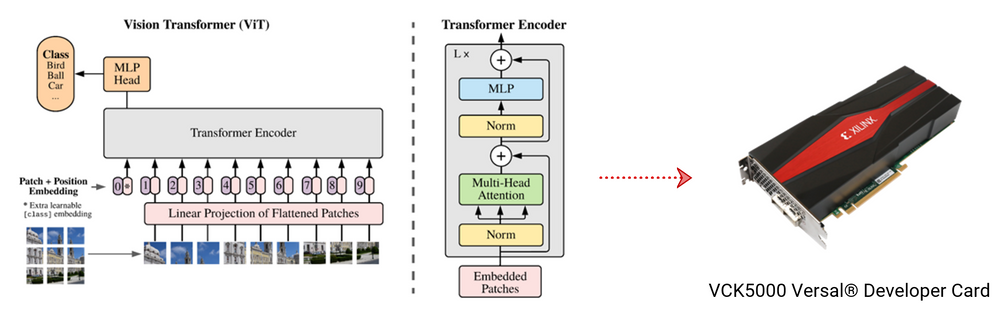

I’m delighted that the 2.5 release adds several new models to the Model Zoo. These include the popular BERT NLP model, a vision transformer, an end-to-end OCR model, and the real-time SLAM models SuperPoint and HFNet. The transformative acquisition of Xilinx gives AMD an unmatched set of hardware and software capabilities. Building on that, Vitis AI 2.5 now supports 38 base and optimized models for the AMD Zen Deep Neural Network (ZenDNN) library on AMD EPYC server processors. As a result, more AMD CPU users can benefit from faster AI acceleration with the Vitis AI solution.

Highly Efficient AI Development Flow in the Data Center

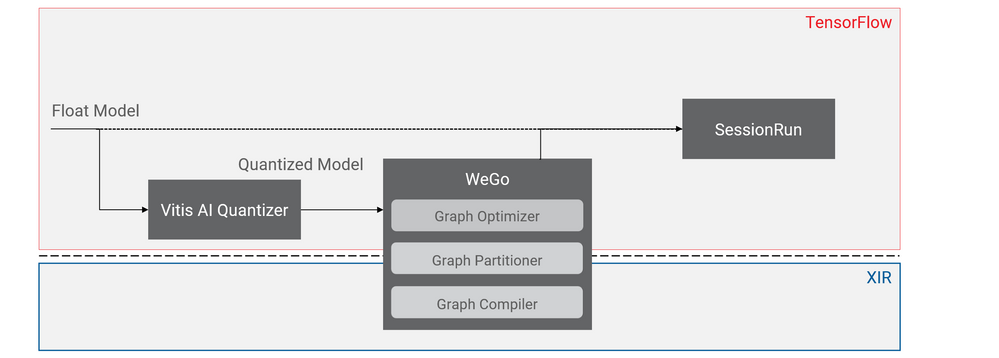

We added the Whole Graph Optimizer (WeGO) in the previous release, and since then developers deploying AI in the data center have given us positive feedback. WeGO integrates the Vitis AI stack into the AI framework, which offers a smooth way to deploy AI models on cloud Deep Learning Processor Units (DPUs). In Vitis AI 2.5, WeGO supports more frameworks, including PyTorch and TensorFlow 2. The release also adds 19 examples covering image classification, object detection, and segmentation, making AI model deployment on data center platforms easier.

Whole Graph Optimizer (WeGO)

Optimized Deep Learning Processor Unit and Software Tools

AI acceleration performance on Xilinx platforms depends on two things working together: a series of powerful acceleration engines and a set of easy-to-use software tools. We currently offer scalable DPUs for key Xilinx platforms, including FPGAs, adaptive SoCs, Versal ACAPs, and Alveo™ data center accelerator cards.

With the 2.5 release, the Versal DPU IP supports multiple compute units on Versal AI Core devices. A new Arithmetic Logic Unit (ALU) replaces the Pool engine and the Depthwise convolution engine. The ALU enables new features such as large-kernel MaxPool and AveragePool, AveragePool with rectangular kernels, and 16-bit constant weights.

Additionally, the DPU IPs now support more layer-combination patterns, such as Depthwise convolution followed by LeakyReLU.

The cloud DPUs now support Depthwise convolution and pooling with larger kernel sizes, AI Engine-based pooling, and more.

Learn More

This article only briefly covers the key features of the Vitis AI 2.5 release. For the complete list of new and enhanced features in 2.5, visit the What’s New page.

For more information about the AI Model Zoo, quantizer, optimizer, compiler, DPU IP, WeGO, and whole application acceleration (WAA), please visit the Vitis AI page.

Don’t forget to visit the Vitis AI GitHub page to get the latest tools and a Docker image to try!