Archives Discussions

- AMD Community

- Communities

- Developers

- Devgurus Archives

- Archives Discussions

- Re: Multi-GPU scaling

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Multi-GPU scaling

I have two RX 470 cards. I am running a series of OpenCL kernels which are fairly memory intensive : this is a video compression application, so a lot of data passes from host to GPU and back. There is also high CPU usage.

When I run my kernels on a single 470, total frame rate is 40 FPS. When I use two 470s, frame rate equals

60 FPS. There is no dependency in the code between the two devices.

So, it looks like scaling is sub-optimal. I was hoping/expecting to get around 80 FPS for two cards. What factors may be affecting compute scaling on multiple cards?

How can I trouble-shoot this issue?

Any advice would be greatly appreciated.

Thanks!

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you are using multiple command queues concurrently, they are using multiple threads. Constrain other works to 1 or 2 less cores(or threads) than before so CPU can upload data to cards faster.

If thread/core affinity of your program is not an option, you can try having pinned arrays on host side and using map/unmap instead of read/write on device side buffers so they don't use cpu, they use DMA engines of cards and access directly to RAM.

If these not enough, add pipelining of read+write so one of them is hidden and it takes less to communicate. If computing is heavier, you can pipeline it with them too.

If you still have low performance, then you can always look at code-xl gpu profiling graphs timeline-data to see whats wrong.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you are using multiple command queues concurrently, they are using multiple threads. Constrain other works to 1 or 2 less cores(or threads) than before so CPU can upload data to cards faster.

If thread/core affinity of your program is not an option, you can try having pinned arrays on host side and using map/unmap instead of read/write on device side buffers so they don't use cpu, they use DMA engines of cards and access directly to RAM.

If these not enough, add pipelining of read+write so one of them is hidden and it takes less to communicate. If computing is heavier, you can pipeline it with them too.

If you still have low performance, then you can always look at code-xl gpu profiling graphs timeline-data to see whats wrong.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks. So, are you saying that

clEnqueueWriteImage

uses more CPU than

clEnqueueMapImage/clEnqueueUnmapImage ?

And that Map/Unmap uses DMA engine, while clEnqueueWriteImage does not ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yepp. Read Write uses CPU(and its cache probably) and map unmap uses dma (but only for one way or both ways ? idk) and performs better with a USE_HOST_PTR and an array supplied by you which has starting address aligned to multiple of 4096. So instead of host allocating array for you, you give your own array and use USE_HOST_PTR (4096 aligned) then memory-bound(which is also pci-e bound) scenarios is nearly doubles speed. On top of that, adding pipelining can overcome latency of pci-e bottleneck and give you 100 FPS for single card. Pipelining needs division of work into smaller works and lets other gpu get its turn without waiting much and compute at the same time (sometimes drivers are better withing a single context but you can try your own scheduler system against it)

If you are using CPU as an opencl device at the same time, leave 1 or 2 cores untouched(using device fission(opencl) and core affinity(host)) so CPU doesn't cough while feeding GPUs(or whatever communication with GPUs).

Actually map/unmap with USE_HOST_PTR is for "streaming" to/from gpu and if gpu code accesses __global memory space, it access RAM instead of GDDR so be cautious. Pipeline acts better for buffers created with CL_MEM_READ_WRITE(device side buffers) at least on my computer with R7_240 and HD7870 because map/unmap already maxes some things out.

If you don't have your own pinned arrays, you can use ALLOC_HOST_PTR for internal arrays to be managed by opencl runtime but it needs one extra copy (at least host to host) and still faster than pure device-side(only MEM_READ_WRITE for example) buffers' communications.

For my machine and kernel configuration (low end gpus low end motherboard kernel with only a sin() function and 1 float write 1 floar read), pipelining gives x1.9 performance for CL_MEM_READ_WRITE and x1.3 for ALLOC_HOST_PTR but it is much faster like x2.5.

Max performance for my system is achieved when I use USE_HOST_PTR and pipelining with explicit event control on 3 command queues per device like:

r=read

w=write

c=compute

(somewhat more complex to implement)

| time 1 | time 2 | time 3 | time 4 | time 5 | time 6 | time 7 | time 8 | time 9 |

|---|---|---|---|---|---|---|---|---|

| r1 | r2 | r3 | r4 | r5 | r6 | r7 | ||

| c1 | c2 | c3 | c4 | c5 | c6 | c7 | ||

| w1 | w2 | w3 | w4 | w5 | w6 | w7 |

or

using many more queues per device and letting drivers handle the order of executions(16 queues per gpu but this example has 4 queues)

(easy to implement)

| command1 | command2 | command3 | command4 | command5 | command6 |

|---|---|---|---|---|---|

| r1 | c1 | w1 | r5 | c5 | w5 |

| r2 | c2 | w2 | r6 | c6 | w6 |

| r3 | c3 | w3 | r7 | c7 | w7 |

| r4 | c4 | w4 | r8 | c8 | w8 |

which has unknown execution time slices that driver chooses and optimizes, has more performance variation than event based version but can get faster since there is no event handling overhead. Everything works with top speed. Engine checks if read operation in a queue is bottlenecking, then executes a write or compute on another queue, this way all pipelines of gpu is filled at the same time.

Both my gpus have only 2 asynchronous compute engines so your gpus may have(and need) more queues to perform at top speed.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

tugrul_512bit thanks a lot for the detailed answer!

May I ask a few questions:

what do you mean by pipelining?

What do you mean by pinned array? I use CL_MEM_USE_HOST_PTR. Is this what you mean by pinned ?

Thanks again. It's nice to hear from someone else struggling with these issues ![]()

Aaron

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

By the way, I do send data back and forth from card in parallel to card running kernels, so perhaps this is what

you mean by pipelining?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Pipelining means that you have N-stage processing where each stage takes result of previous stage. So to archive optimal performance you need to have at least N chunk of data processing at same time. You don't wait until first chunk goes through whole pipeline but you send second one immediately as it finish first stage. https://en.wikipedia.org/wiki/Instruction_pipelining <= same principle

That way you get parallel execution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

By pipelining, I mean breaking work into 16 pieces, uploading first, then uploading second while computing first, then uploading third while downloading first and computing second .... or giving control to opencl drivers and have 4 commandqueues each running 4 parts (out of of 16 total) without any events. Of course this can be only done for divisible workloads.

Pinned array means nothing moves array an it satisfies alignment conditions. Such as C# 's GC HANDLE pinning of an array before computing or having a C++ aligned array that doesn't move anywhere.

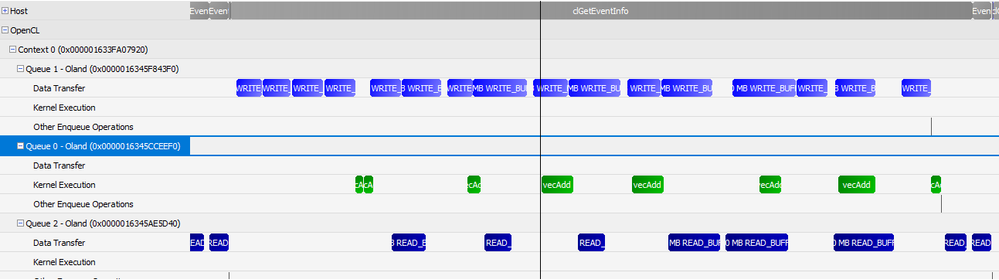

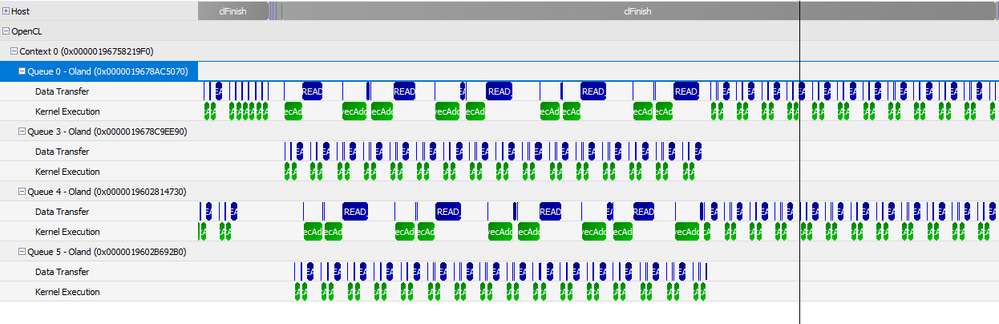

You said you already have these optimizations so the bottleneck must be elsewhere. Can you post your kernel execution timeline graph screenshot from CodeXL profiler please?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks! I will try to post my codexl timeline.

Getting back to DMA vs CPU, are you saying that clEnqueueWriteImage using image created with

CL_MEM_USE_HOST_PTR. is not using DMA engine ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The only thing I know is, only map/unmap operations use dma while enqueueWrite or Read uses CPU, CL_MEM_USE_HOST_PTR works with map+unmap, not write+read, CL_MEM_ALLOC_HOST_PTR works with map+write+unmap and map+read+unmap. Did you mean clenqueueWriteImage between map and unmap?

Maybe I'm wrong and everything uses DMA, just map unmap is efficient.

I'm using CL_MAP_WRITE_INVALIDATE_REGION for the mapping(as writes) in my home-brewn opencl wrapper api.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just found this on developer.amd

Host Memory

This regular CPU memory can be access by the CPU at full memory bandwidth; however, it is not directly accessible by the GPU. For the GPU to transfer host memory to device memory (for example, as a parameter to clEnqueueReadBuffer or clEnqueueWriteBuffer), it first must be pinned (see section 5.5.1.2). Pinning takes time, so avoid incurring pinning costs where CPU overhead must be avoided. When host memory is copied to device memory, the OpenCL runtime uses the following transfer methods.

• <=32 kB: For transfers from the host to device, the data is copied by the CPU to a runtime pinned host memory buffer, and the DMA engine transfers the data to device memory. The opposite is done for transfers from the device to the host.

• >32 kB and <=16 MB: The host memory physical pages containing the data are pinned, the GPU DMA engine is used, and the pages then are unpinned.

• >16 MB: Runtime pins host memory in stages of 16 MB blocks and transfer data to the device using the GPU DMA engine.

Double buffering for pinning is used to overlap the pinning cost of each 16 MB block with the DMA transfer.

Due to the cost of copying to staging buffers, or pinning/unpinning host memory, host memory does not offer the best transfer performance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

and this:

Pinned Host Memory

This is host memory that the operating system has bound to a fixed physical address and that the operating system ensures is resident. The CPU can access pinned host memory at full memory bandwidth. The runtime limits the total amount of pinned host memory that can be used for memory objects. (See Section 5.5.2, “Placement,” page 5-18, for information about pinning memory. If the runtime knows the data is in pinned host memory, it can be transferred to, and from, device memory without requiring staging buffers or having to perform pinning/unpinning on each transfer. This offers improved transfer performance. CPU R GPU W GPU Shader R GPU Shader W GPU DMA R GPU DMA W Host Memory 10 - 20 10 - 20 9 - 10 2.5 11 - 12 11 - 12 GPU Memory .01 9 - 10 230 120 -150 n/a n/a AMD A CCELERATED P ARALLEL P ROCESSING 5.5 OpenCL Memory Objects 5-17 Copyright © 2013 Advanced Micro Devices, Inc. All rights reserved. Currently, the runtime recognizes only data that is in pinned host memory for operation arguments that are memory objects it has allocated in pinned host memory. For example, the buffer argument of clEnqueueReadBuffer/clEnqueueWriteBuffer and image argument of clEnqueueReadImage/clEnqueueWriteImage. It does not detect that the ptr arguments of these operations addresses pinned host memory, even if they are the result of clEnqueueMapBuffer/clEnqueueMapImage on a memory object that is in pinned host memory. The runtime can make pinned host memory directly accessible from the GPU. Like regular host memory, the CPU uses caching when accessing pinned host memory. Thus, GPU accesses must use the CPU cache coherency protocol when accessing. For discrete devices, the GPU access to this memory is through the PCIe bus, which also limits bandwidth. For APU devices that do not have the PCIe overhead, GPU access is significantly slower than accessing device-visible host memory (see section 5.5.1.3), which does not use the cache coherency

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So it seems normal writes using dma too, but with an extra copying to managed internal opencl host-side buffers (to enable dma copy) causing an overhead. Also it says a dma needs 4096-aligned buffer with a length of multiple-of 64.

How I think "only map/unmap was using dma" was "fastest data streaming was map/unmap and gpu was accessing RAM --directly--(as in Direct Memory Access)"

But I was wrong. USE_HOST_PTR + map/map is a zero copy and does not add an extra buffer copy (host to host) so does not need any read/write. Only map/unmap. But alloc_host_ptr needs a read/write between map/unmap as I experimented.

everything here(1.2):

also CL_MAP_WRITE_INVALIDATE_REGION disables an extra copy when mapping for ALLOC_HOST_PTR and CL_MAP_WRITE was enough for USE_HOST_PTR for mapping.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much for this information! It is starting to become clear to me ![]()

Currently, my code operates like this:

1) Create an image with CL_MEM_USE_HOST_PTR | CL_MEM_READ_ONLY | CL_MEM_HOST_WRITE_ONLY

flags. Host memory buffer is allocated by me, aligned to 4096 bytes

2) Copy data from my own data buffer #1 into host memory buffer

3) trigger clEnqueueWriteImage

4) on receiving enqueue complete event, trigger series of N kernels

5) when first kernel completes, copy data from my own data buffer #2 into host memory buffer, and repeat above steps.

So, how would this flow work with map/unmap?

My guess is:

1) Create an image with CL_MEM_READ_ONLY | CL_MEM_HOST_WRITE_ONLY

flags.

2) map the image

3) when map returns, I will have a pointer to the pinned buffer

2) Copy data from my own data buffer #1 into pinned buffer pointer

3) unmap the image

4) on receiving unmap complete event, trigger series of N kernels

5) when first kernel completes, repeat above steps

Once again, thanks so much for your help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think your guess is right. Except 5), should not repeat creating image part. You are not creating buffers at each iteration, are you?

Please inform us about performance uplift when you done program update.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, no, not creating buffers with each iteration ![]()

Will update with perf numbers once I do my experiment.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So, I am still using clEnqueueWriteImage, but I managed to remove one copy of image data

to the host buffer I provided when creating the image with CL_MEM_USE_HOST_PTR.

I used to read the image from disk, write to a buffer, then copy from that buffer to my host buffer.

Now, I write directly to host buffer and skip the intermediate buffer.

Frame time has decreased by around 6%, which is pretty nice for one night's work.

So, when I switch to map/unmap, I will be removing another buffer copy, so this should also help.

Also, scaling is improving as I reduce CPU usage.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Good. %6 means 42.4 fps per gpu. And this is without removal of unnecessary writes(for streaming ofcourse).

By the way, I'm adding cluster option to my cl wrapper now and I'm seesaw between doing tcp-ip connections myself or using mpi. I couldn't find mpi benchmarks about it when both server and client is same machine.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for help so far ![]()

I'm afraid I don't have experience with mpi, so don't have an opinion on the tradeoffs. What is advantage

of doing tcp connections yourself ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't know if this is an advantage, but I can choose which array starts at which byte location in a byte array( as a serialized form of an opencl command) and it can copy faster or does not need copying(because wrapper having USE_HOST_PTR) ever. Just gets data from tcp-ip buffer(1024-byte length) and copies it to "serialized" object representation (the byte array mentioned at beginning). It is intended to be used for iterative approaches so it doesn't allocate a new array everytime.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Later I learned that MPI supports infiniband devices so it is automatically using them but infiniband can be used as "ip over infiniband" for tcp-ip api. Either way, it needs more work with tcp api than MPI as it seems (tweaking the MTU and various kernel TCP buffer and window settings). I just tested on single home computer with just a simple modem, it reaches about 40 MB/s one-way (both client and server is on same computer). This is really lower than pci-e bandwidth. So scaling mostly depending on network speed(cluster - grid - jungle) and memory-pci-e bandwidth(gaming computer).

If infiniband is QDR type, it is comparable to PCI-e 2.0 x8 (even cool for kernels like c=sin(a)+cos(b) )

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Interesting - I was looking into ethernet over infiniband, for a fast NAS system.

Have you looked at ROCm for compute? It supports RDMA between cards,

of course much faster than network. But, only for pro cards.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So, in the end, I found no performance difference between

1) creating a buffer with CL_MEM_USE_HOST_PTR and my own aligned buffer

or

2) creating a buffer with CL_MEM_ALLOC_HOST_PTR

Buffer size was around 15 MB

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just to test there is no communication bottleneck, could you benchmark this kernel with 1024*1280 elements for each float array(15.7 MB total):

1024*1280 thread (with 256 local thread group size)

__kernel void benchmark1( __global float *a,

__global float *b,

__global float *c)

{

int id=get_global_id(0);

c[id]=a[id]+b[id];

}

On my system,

read + compute + write: 8.88 ms

with alloc_host_ptr(map unmap): 7.98 ms

with use_host_ptr(map unmap): 3.96 ms

Test system: PCI-E 2.0 @ 8x link width and FX8150 @ 3.3 GHz and R7-240(320 cores) @ 900 MHz

dual channel ddr3 1866MHz with interleaving enabled

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I haven't done this benchmark, but it looks like I get a perf improvement by switching to ALLOC_HOST_PTR.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

tugrul_512bit thanks again for your advice on switching to map/unmap and ALLOC_HOST_PTR.

Paying more attention to optimizing transfer from host to device and back again, I am seeing a significant

uplift in performance - around 30% faster.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As a deep note, the benchmark I did for alloc_host_ptr (7.98 ms) became 4.70 ms when I enabled pipelining. (you said you had pipelining too, is it frame level pipelining or thread-group level pipelining? mine is thread-group level so it overlaps parts of a divided ndrangekernel(16 parts for example))